GPU chip maker Nvidia doesn’t just make the devices that end up in some of the largest supercomputers in the world. It is, as a designer of chips, packaging, and systems, also a big user of traditional HPC applications and a pioneer in the use of AI, particularly when it comes to the company’s autonomous vehicle initiatives.

This is why Nvidia has not one, not two, not three, but four supercomputers on the latest Top500 supercomputer rankings, which were announced this week at the International Supercomputing 2019 conference in Germany.

We have written about two of the machines before. The first was the Saturn V cluster based on the DGX-1P system with “Pascal” Tesla P100 GPUs from the fall of 2016, and the second was the follow-on Saturn V Volta cluster that debuted a year later with the Tesla V100 GPU accelerators. The original Saturn V system, which is still in operation, had 124 DGX-1P nodes, lashed together using 100 Gb/sec EDR InfiniBand in a two-tier fat tree network. The DGX-1P nodes had a pair of 20-core “Broadwell” Xeon E5-2698 v4 processors running at 2.2 GHz as their serial motors. The Saturn V system delivered 4.9 petaflops of peak double precision floating point performance and was able to push 3.31 petaflops running the Linpack matrix math test, yielding a computational efficiency of 67.5 percent. At 349.5 kilowatts, that works out to 9.46 gigaflops per watt sustained, and at an estimated cost of $18 million at list price, and rather less after a decent discount, that also works out to around $4,450 per teraflops sustained.

The Saturn V Volta kicker that was revealed by Nvidia in November 2017 had 660 nodes and was much more of a beast. With the move to Volta, the next-generation Saturn V computer, which is also still in operation, had a total of 5,280 Volta GPUs and delivered 40 petaflops of peak performance. For some reason, Nvidia only tested a 33-node chunk of the Saturn V Volta machine using Linpack rather than testing the whole machine’s 660 nodes.

That Saturn V Volta slice had a theoretical peak performance of 1.82 petaflops at double precision, and yielded 1.07 petaflops on the Linpack test; that is a 58.8 percent computational efficiency – considerably lower than on the original Saturn V. But the Saturn V Volta slice only burned 97 kilowatts, and that worked out to a very impressive 11.03 gigaflops per watt sustained. That slice of the system probably had a list price of around $5.6 million and a street price of around $4.5 million, which works out to around $4,200 per teraflops, a little bit less than the original Saturn V. The full Saturn V Volta machine would have cost 20X that, or around $90 million if you wanted to buy it, but Nvidia probably got it all at cost, and with the GPUs dominating the overall price, you can bet it cost Nvidia even less than $40 million to build this 40 petaflops system itself.
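For readers who want to check the arithmetic, here is a minimal Python sketch of how the three ratios quoted above fall out of the peak, sustained, power, and price figures. The function name `linpack_metrics` and its dictionary keys are our own illustrative choices, and the inputs are the rounded numbers from the text, so the last digit can differ slightly from the quoted ratios.

```python
def linpack_metrics(peak_pf, sustained_pf, power_kw=None, cost_usd=None):
    """Derive efficiency ratios from petaflops, kilowatts, and dollars.

    Hypothetical helper for illustration; inputs are the rounded
    figures quoted in the article.
    """
    sustained_tf = sustained_pf * 1000.0  # petaflops -> teraflops
    metrics = {"efficiency_pct": 100.0 * sustained_pf / peak_pf}
    if power_kw is not None:
        # Teraflops per kilowatt is numerically the same as gigaflops per watt.
        metrics["gflops_per_watt"] = sustained_tf / power_kw
    if cost_usd is not None:
        metrics["usd_per_tflops"] = cost_usd / sustained_tf
    return metrics


# Original Saturn V: 4.9 PF peak, 3.31 PF Linpack, 349.5 kW.
saturn_v = linpack_metrics(4.9, 3.31, power_kw=349.5)

# Saturn V Volta slice: 1.82 PF peak, 1.07 PF Linpack, 97 kW,
# estimated street price of $4.5 million.
volta_slice = linpack_metrics(1.82, 1.07, power_kw=97.0, cost_usd=4.5e6)

for name, m in (("Saturn V", saturn_v), ("Saturn V Volta slice", volta_slice)):
    print(name, {key: round(value, 2) for key, value in m.items()})
```

The same function can be pointed at any other Top500 entry, provided you supply your own estimate for the price.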

Last November, at the SC18 supercomputing conference in Dallas, Nvidia unveiled “Circe,” a cluster based on its slightly revved DGX-2H systems, which have sixteen Volta GPUs overclocked a bit so they run at 450 watts instead of 350 watts, all hooked together by the NVSwitch GPU interconnect inside the system and with eight 100 Gb/sec InfiniBand EDR network interfaces from Mellanox to hook the nodes to each other to share data for calculations. The DGX-2H machine has slightly faster 24-core “Skylake” Xeon SP-8174 Platinum processors than the regular DGX-2 was launched with earlier in 2018. We think it costs about $435,000 per DGX-2H node at list price based on the $399,000 list price for the regular DGX-2 system that debuted a little more than a year ago.

The Circe cluster had 36 nodes, with a peak double precision rating of 4.24 petaflops and a Linpack rating of 3.06 petaflops, for an operational efficiency of 72.2 percent, which ain’t bad. (Nvidia is getting better at running Linpack, and NVSwitch ain’t hurting, either.) Our best guess is that the Circe cluster cost around the same as the original Saturn V cluster, or around $18 million at list price, with Nvidia paying less than half of that to actually build it. We don’t have power ratings on the Circe cluster, so we can’t give you gigaflops per watt ratings, but the system cost around $4,700 per teraflops. That is about 6 percent more expensive than the original Saturn V cluster per unit of double precision work, but the number of GPUs has contracted by a factor of 2.3X (576 versus 1,312) and the computational efficiency is way up, too.

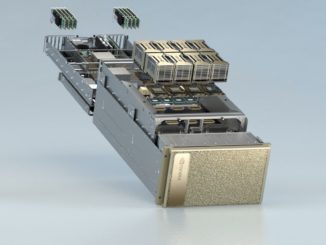

That brings us to the latest Nvidia internal supercomputer, called the DGX SuperPOD, shown in the image above. It is used for a variety of workloads, including graphics, speech translation, HPC, and healthcare, but it was built predominantly to support Nvidia’s self-driving car initiative.

The DGX SuperPOD system has sixteen racks of the overclocked DGX-2H nodes, for a total of 96 machines, all connected using a two-tier InfiniBand network including director-class switches. The system is interesting in that it has ten rather than eight 100 Gb/sec ports per node – a 25 percent increase in bandwidth into and out of the server.
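The per-node bandwidth comparison is simple enough to check in a few lines. This is only a sketch: the helper `node_bandwidth_gbps` is a hypothetical name, and it counts raw port rates, ignoring protocol overhead.

```python
EDR_GBPS = 100  # per-port rate of EDR InfiniBand, as quoted in the text


def node_bandwidth_gbps(ports, gbps_per_port=EDR_GBPS):
    """Aggregate raw network bandwidth for one node (hypothetical helper)."""
    return ports * gbps_per_port


standard_node = node_bandwidth_gbps(8)   # DGX-2H as used in Circe
superpod_node = node_bandwidth_gbps(10)  # DGX-2H as used in the DGX SuperPOD
increase_pct = 100.0 * (superpod_node - standard_node) / standard_node

print(f"{standard_node} Gb/sec -> {superpod_node} Gb/sec "
      f"(+{increase_pct:.0f} percent)")
```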

It is interesting that Nvidia did not use five or six or even eight 200 Gb/sec HDR InfiniBand adapters and matching switches from Mellanox. The DGX SuperPOD is rated at 11.2 petaflops peak and 9.44 petaflops sustained on Linpack. This machine burns 1 megawatt of power – overclocking does not come free – which is roughly three times as much as the original Saturn V system from 2016 did, but it only delivers 9.44 gigaflops per watt, or about the same energy efficiency as that original Saturn V. And at an estimated $38.4 million street price, the DGX SuperPOD costs around 2.6X as much money as that original machine. So there is some gain on price/performance. But this is really about delivering extreme performance.
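As a rough check on those comparisons, the sketch below recomputes the ratios from the figures in the text. The dictionary layout and function names are our own, the 1 megawatt figure is treated as exactly 1,000 kilowatts, and the $38.4 million price is our estimate rather than a published number.

```python
# Figures as quoted in the article; power for the SuperPOD is assumed
# to be exactly 1,000 kW, which is a rounded value.
saturn_v = {"peak_pf": 4.9, "linpack_pf": 3.31, "power_kw": 349.5}
superpod = {"peak_pf": 11.2, "linpack_pf": 9.44, "power_kw": 1000.0,
            "street_usd": 38.4e6}  # estimated street price


def efficiency_pct(system):
    """Share of peak flops actually sustained on Linpack."""
    return 100.0 * system["linpack_pf"] / system["peak_pf"]


def gflops_per_watt(system):
    """Teraflops per kilowatt, which equals gigaflops per watt."""
    return system["linpack_pf"] * 1000.0 / system["power_kw"]


power_ratio = superpod["power_kw"] / saturn_v["power_kw"]
perf_ratio = superpod["linpack_pf"] / saturn_v["linpack_pf"]
usd_per_tflops = superpod["street_usd"] / (superpod["linpack_pf"] * 1000.0)

print(f"Linpack efficiency: {efficiency_pct(superpod):.1f} percent")
print(f"Gigaflops per watt: {gflops_per_watt(superpod):.2f} "
      f"vs {gflops_per_watt(saturn_v):.2f}")
print(f"Power: {power_ratio:.2f}X, sustained performance: {perf_ratio:.2f}X")
print(f"Dollars per sustained teraflops: {usd_per_tflops:,.0f}")
```

On these inputs, power and sustained performance both grow by a little under 3X, which is why the gigaflops per watt figure barely moves between the two machines.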

Ian Buck, vice president and general manager of accelerated computing at Nvidia, tells The Next Platform that the DGX SuperPOD can train a ResNet-50 model in under two minutes, which is blazingly fast compared to these other clusters.

The thing to remember here is that nothing is free. The NVSwitch inside each of the 96 systems in the DGX SuperPOD allows for larger machine learning training models to be created in a shared memory space of 512 GB, and the overclocked GPU accelerators allow them to do more work, but at a cost of much higher energy consumption and heat dissipation. That’s the difference between a muscle car and a rocket sled, and customers have to know which one they need to drive.
