The Roads To Zettascale And Quantum Computing Are Long And Winding

In the United States, the first step on the road to exascale HPC systems began with a series of workshops in 2007. …

The National Energy Research Scientific Computing Center at Lawrence Berkeley National Laboratory, one of the key facilities of the US Department of Energy that drives supercomputing innovation and that spends big bucks so at least a few vendors will design and build such machines, has opened up the bidding on its future NERSC-10 exascale-class supercomputer. …

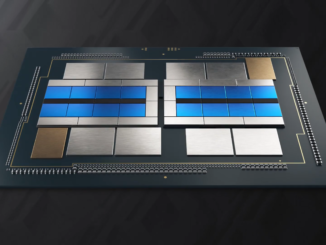

Historically Intel put all its cumulative chip knowledge to work advancing Moore’s Law and applying those learnings to its future CPUs. …

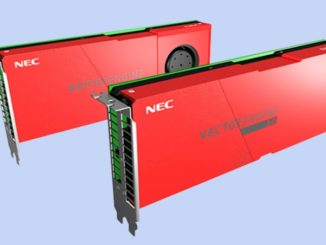

Updated: There is some chatter – some might call it well-informed speculation – going on out there on the Intertubes that Japanese system maker NEC is shutting down its “Aurora” Vector Engine vector processor business. …

One of the reasons why Intel can even think about entering the GPU compute space is that the IT market, and indeed just about any market we can think of, likes to have at least three competitors. …

There’s no resting on your laurels in the HPC world, no time to sit back and bask in a hard-won accomplishment that was years in the making. …

If memory bandwidth is holding back the performance of some of your applications, there is something that you can do about it other than to just suffer. …

If a few cores are good, then a lot of cores ought to be better. …

The jittery economy hasn’t been kind to most semiconductor companies, even those like AMD and Nvidia that are growing in the datacenter. …

The HPC industry, after years of discussions and anticipation and some relatively minor delays, is now fully in the era of exascale computing, with the United States earlier this year standing up Frontier, its first such supercomputer, and plans for two more next year. …

All Content Copyright The Next Platform