Escher Erases Batching Lines for Efficient FPGA Deep Learning

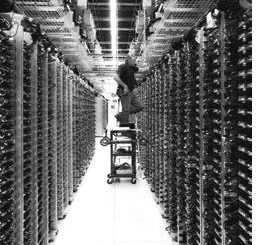

Aside from the massive parallelism available in modern FPGAs, there are two other key reasons why reconfigurable hardware is finding a fit in neural network processing, for both training and inference. …