For quite some time, there have been rumors that either Arm Ltd or its parent company, Japanese conglomerate SoftBank, would buy British AI chip and system upstart Graphcore – it is no longer a startup if it is eight years old. And yesterday the scuttlebutt was confirmed as Masayoshi Son got out the corporate checkbook for SoftBank Group and cut a check to acquire the company for less money than its investors put in.

It has not been an easy time for the first round of AI chip startups that were founded in the wake of the initial success that Nvidia found with its GPU compute engines for training neural networks. Or, at least, it has not been as easy as it could have been to get customers and amass both funding and revenues on the road to profits.

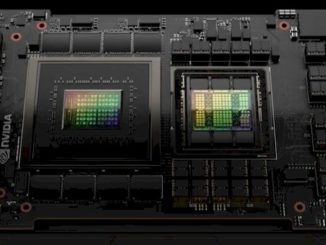

Most of these startups, in one form or another, emulate the clean slate approach that Google took with its AI-specific Tensor Processing Units, which were first deployed in early 2015 and which are now in their sixth generation with the “Trillium” family of TPUs, the first of which were revealed a month ago. The idea is to strip all of the graphics processing that comes from the GPU heritage and the high-precision floating point math required by HPC simulation and modeling out of the design and, in many cases, build in fast and wide networking pipes to interconnect these resulting matrix math engines into clusters to take on very large jobs.

BrainChip, Cerebras Systems, Graphcore, Groq, Habana Labs (now part of Intel), Nervana Systems (supplanted by Habana at Intel), and SambaNova Systems all were founded with the idea that there would be an explosion in the use of specialized chips to train AI systems and to perform inference using trained models. But the ideal customers to buy these devices – or to acquire these companies – were the hyperscalers and cloud builders, and instead of buying any of these compute engines or systems based on them, they decided to use a different two-pronged approach. They bought Nvidia GPUs (and now sometimes AMD GPUs) for the masses (which they can rent at incredible premiums even after buying them at incredible premiums) and they started creating their own AI accelerators so they could have a second source, a backup architecture, and a cheaper option.

Even the shortages of Nvidia GPUs, which have been propping up prices for the past three years, have not really helped the cause of the AI chip and system upstarts all that much. Which is odd, and is a testament to the fact that people have learned to be wary of and leery of software stacks that are not fully there yet. So beware Tenstorrent and Etched (both of whom we just talked to and will write about shortly) and anyone else who thinks they have a better matrix math engine and a magic compiler.

It is not just a crowded market, it is a very expensive one to start up in and to be an upstart within. The money is just not there with the hyperscalers and cloud builders doing their own thing and enterprises being very risk averse when it comes to AI infrastructure.

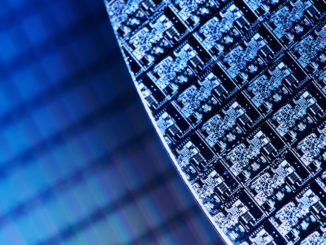

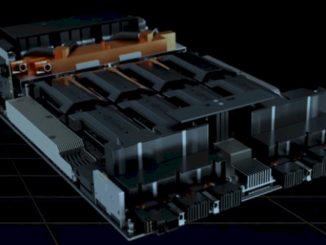

Which is why Graphcore was seeking a buyer instead of another round of investment, which presumably is hard to come by. With former Prime Minister Rishi Sunak pledging £1.5 billion for AI supercomputing in the United Kingdom, with the first machine funded being the Isambard-AI cluster at the University of Bristol, there was always a chance that Graphcore would get a big chunk of money to build its Good AI supercomputer, a hypothetical and hopeful machine that the company said back in March 2022 it would build with 3D wafer stacking techniques on its “Bow” series Intelligence Processing Units. But for whatever reason, despite Graphcore being a darling of the British tech industry, the UK government did not fund the $120 million required to build the proposed Good machine, which would have 8,192 of the Bow IPUs lashed together to deliver 10 exaflops of compute at 16-bit precision and 4 PB of aggregate memory with over 10 PB/sec of aggregate memory bandwidth across those Bow IPUs.
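To get a feel for the scale of the proposed Good machine, here is a rough back-of-the-envelope sketch that simply divides the headline figures quoted above by the IPU count. The per-IPU numbers are implied averages and nothing more: the variable names are ours, decimal units are assumed, the "over 10 PB/sec" bandwidth is treated as a floor, and we make no claim about how memory or cost would actually have been distributed in the system.

```python
# Headline figures for the proposed Good machine, as quoted in the article.
NUM_IPUS = 8_192
TOTAL_FLOPS_FP16 = 10e18               # 10 exaflops at 16-bit precision
TOTAL_MEMORY_BYTES = 4e15              # 4 PB, assuming decimal petabytes
TOTAL_BANDWIDTH_BYTES_PER_SEC = 10e15  # "over 10 PB/sec", so a lower bound
BUDGET_USD = 120e6                     # $120 million


def per_ipu(total: float) -> float:
    """Average share of a system-wide total across all IPUs."""
    return total / NUM_IPUS


flops_per_ipu = per_ipu(TOTAL_FLOPS_FP16)
memory_per_ipu = per_ipu(TOTAL_MEMORY_BYTES)
bandwidth_per_ipu = per_ipu(TOTAL_BANDWIDTH_BYTES_PER_SEC)
cost_per_ipu = per_ipu(BUDGET_USD)

print(f"Compute per IPU:   {flops_per_ipu / 1e15:.2f} petaflops")
print(f"Memory per IPU:    {memory_per_ipu / 1e9:.0f} GB")
print(f"Bandwidth per IPU: {bandwidth_per_ipu / 1e12:.2f} TB/sec or more")
print(f"Budget per IPU:    ${cost_per_ipu:,.0f}")
```

By this crude division, each IPU slot would account for about 1.22 petaflops, roughly 488 GB of memory, and a little under $15,000 of the budget, with that last figure covering everything in the system and not just the chip.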

We would have loved to see such a machine built, and so would have Graphcore, we presume. But even that would not have been enough to save Graphcore from its current fate.

Governments can fund one-off supercomputers and often do. The “K” and “Fugaku” supercomputers built by Fujitsu for RIKEN Lab in Japan are perfect examples. K and Fugaku are the most efficient supercomputers for true HPC workloads ever created on the planet – K actually was more efficient than the more recent Fugaku – but both are very expensive compared to alternatives that are nonetheless efficient. And they do not have software stacks that translate across industries as the Nvidia CUDA platform does after nearly two decades of immense work. K and Fugaku, despite their excellences, did not really cultivate a widening and deepening of indigenous compute on the isle of Japan, despite the very best efforts of Fujitsu with its Sparc64fx and A64FX processors and Tofu mesh/torus interconnects. Which is why Fujitsu is working on a more cloud-friendly and less HPC-specific fork of its Arm server chips called “Monaka,” which we detailed here back in March 2023.

Japan ponied up $1.2 billion to build the 10 petaflops K machine, which became operational in 2011, and $910 million for the 513.9 petaflops Fugaku machine, which became operational in 2021. If history is any guide, Japan will shell out somewhere around $1 billion for a “Fugaku-Next” machine, which will become operational in 2031. Heaven only knows how many exaflops it will have at what precisions.
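The cost and performance figures for K and Fugaku can be turned into a rough cost per petaflops, sketched below. This uses only the numbers quoted above and assumes nominal dollars with no inflation adjustment; it also takes the two petaflops ratings as directly comparable, which we have not verified. The helper name is ours.

```python
# System cost (millions of US dollars) and rated performance (petaflops),
# as quoted in the article. Nominal dollars, no inflation adjustment.
SYSTEMS = {
    "K": {"cost_musd": 1200.0, "petaflops": 10.0},
    "Fugaku": {"cost_musd": 910.0, "petaflops": 513.9},
}


def cost_per_petaflops(cost_musd: float, petaflops: float) -> float:
    """Millions of dollars spent per petaflops of rated performance."""
    return cost_musd / petaflops


k_cost = cost_per_petaflops(**SYSTEMS["K"])
fugaku_cost = cost_per_petaflops(**SYSTEMS["Fugaku"])
improvement = k_cost / fugaku_cost

print(f"K:      ${k_cost:.1f} million per petaflops")
print(f"Fugaku: ${fugaku_cost:.2f} million per petaflops")
print(f"Improvement over the decade: {improvement:.1f}X")
```

On these numbers, K works out to $120 million per petaflops and Fugaku to about $1.77 million per petaflops, an improvement of nearly 68X in a decade for roughly the same order of spending.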

For the United Kingdom, the University of Edinburgh is where the flagship supercomputer goes, not down the road from where Graphcore is located in Bristol. Of the £900 million ($1.12 billion) in funding from the British government to build an exascale supercomputer in the United Kingdom by 2026, £225 million ($281 million) of that was allocated to the Isambard-AI machine and most of the rest is going to be used to build a successor to the Archer2 system at the Edinburgh Parallel Computing Centre (EPCC) lab.

Graphcore was never going to get a piece of that action because it builds AI-only machinery, not hybrid HPC/AI systems, no matter how much the British government and the British people would love to have an indigenous supplier. If it wanted full government support, Graphcore needed to create a real HPC/AI machine, something that could compete head to head with CPU-only and hybrid CPU-GPU machines. Governments are interested in weather forecasting, climate modeling, and nuclear weapons. This is why they build big supercomputers.

Because of the lack of interest by hyperscalers and cloud builders and the risk aversion of enterprises, Graphcore found itself in a very tight spot financially. The company raised around $682 million in six rounds of funding between 2016, when it was founded, and 2021, in the belly of the coronavirus pandemic when transformers and large language models were coiling to spring on the world. That is not as much money as it seems given the enormous hardware and software development effort needed to create an exaflops AI system.

The last year for which we have financials for Graphcore is 2020, which according to a report in the Financial Times saw the company only generate $2.7 million in revenues but post $205 million in pre-tax losses. Last fall, Graphcore said it would need to raise new capital this year to continue operating, and presumably the revenue picture and loss picture did not improve by much. It is not clear how much money was left in the Graphcore kitty, but what we hear is that SoftBank paid a little more than $600 million to acquire the company. Assuming that all the money is gone, then Microsoft, Molten Ventures, Atomico, Baillie Gifford, and OpenAI’s co-founder Ilya Sutskever have lost money on their investment, which is a harsh reality given that only four years ago Graphcore had a valuation of $2.5 billion.
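For what it is worth, the arithmetic behind those financials can be sketched as follows. The inputs are the figures cited above; the acquisition price is only what we have heard ("a little more than $600 million"), so $600 million is used as a round assumption, and the recovery ratio ignores liquidation preferences and any other deal terms, which we do not know.

```python
# Figures cited in the article, in millions of US dollars.
TOTAL_RAISED = 682.0
REPORTED_PRICE = 600.0    # assumption: "a little more than $600 million"
PEAK_VALUATION = 2500.0   # valuation from four years ago
REVENUE_2020 = 2.7
PRETAX_LOSS_2020 = 205.0

# Share of invested capital that the reported price would cover.
recovery_ratio = REPORTED_PRICE / TOTAL_RAISED

# Reported price as a fraction of the peak valuation.
price_vs_peak = REPORTED_PRICE / PEAK_VALUATION

# Dollars of pre-tax loss for every dollar of revenue in 2020.
loss_per_revenue_dollar = PRETAX_LOSS_2020 / REVENUE_2020

print(f"Price covers {recovery_ratio:.0%} of capital raised")
print(f"Price is {price_vs_peak:.0%} of peak valuation")
print(f"2020 loss per dollar of revenue: ${loss_per_revenue_dollar:.0f}")
```

Under those assumptions, the price covers about 88 percent of the capital raised and 24 percent of the peak valuation, and in 2020 the company lost roughly $76 for every dollar of revenue it booked.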

None of that harsh economics means that a Bow IPU, or a piece of one, could not make an excellent accelerator for an Arm-based processor. SoftBank’s Son, who is just a little too enamored of the cacophonous void of the singularity for our tastes (we like the chatter and laughter of individuals and the willing collaboration and independence of people), has made his aspirations in the AI sector clear.

But so what?

All of the upstarts mentioned above had and have aspirations in AI, and so do the hyperscalers and cloud builders who are actually trying to make a business out of this. And they all have some of the smartest people on Earth working on it – and still Nvidia owns this space: compute engine, network, and software stack, which is analogous to lock, stock, and barrel.

Son spent $32 billion to acquire Arm Ltd back in 2016. If Son is so smart, he should not have even bothered. At the time, Nvidia had a market capitalization of $57.5 billion, which was up by a factor of 3.2X compared to 2015 as the acceleration waves were taking off in both HPC and AI. At $32 billion, Son could have acquired a 55.7 percent stake in Nvidia. With Nvidia’s market cap standing at $3,210 billion as we go to press, that hypothetical massive investment by Son in Nvidia would be worth roughly $1,786 billion today.

To put that into perspective, the gross domestic product of the entire country of Japan was $4,210 billion in 2023.
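The hypothetical is simple division and multiplication, sketched here with the figures quoted above. It assumes the stake could have been bought at the prevailing market capitalization with no acquisition premium, and that the ownership percentage would have stayed constant since then (no dilution, buybacks, or dividends are modeled). The variable names are ours.

```python
# Figures quoted in the article, in billions of US dollars.
ARM_PURCHASE_PRICE = 32.0
NVIDIA_MARKET_CAP_2016 = 57.5
NVIDIA_MARKET_CAP_NOW = 3210.0
JAPAN_GDP_2023 = 4210.0

# Fraction of Nvidia that $32 billion would have bought at the 2016 market cap.
stake = ARM_PURCHASE_PRICE / NVIDIA_MARKET_CAP_2016

# Value of that same fraction at the current market cap.
value_today = stake * NVIDIA_MARKET_CAP_NOW

# Size of the hypothetical holding relative to Japan's 2023 GDP.
share_of_gdp = value_today / JAPAN_GDP_2023

print(f"Hypothetical stake: {stake:.1%}")
print(f"Value today: ${value_today:,.0f} billion")
print(f"Relative to Japan's GDP: {share_of_gdp:.1%}")
```

That works out to a stake of about 55.7 percent, worth about $1,786 billion, or a bit more than 42 percent of Japan's 2023 gross domestic product.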

We had a burr under our saddle earlier this year, talking about how Arm should have had a GPU or at least some kind of datacenter-class accelerator to compete against Nvidia in its portfolio. We took a certain amount of grief about this, but we stand by our statement that Arm left a lot of money on the Neoverse table by not having a big, fat XPU, and here we are with Son having Arm in one hand and Graphcore in the other. But with the hyperscalers and cloud builders already building their own accelerators, the time might have passed where Arm can sell IP blocks for accelerators.

But maybe not.

There may be a way to create a more general purpose Graphcore architecture and take on Fujitsu for Fugaku-Next, too. Or to collaborate with Fujitsu, which would be more consistent with how the Project Keisoku effort to make the K supercomputer started out in 2006 with a collaboration between Fujitsu, NEC, and Hitachi.

There is only one sure way to predict the future, and that is to live it. We shall see.
