The HPC gurus of the world may have started moving into the hyperscalers and cloud builders in recent years, but they don’t tend to work for vendors and they tend to stay in one place and lean in. Bronis de Supinski, the chief technology officer at Livermore Computing at Lawrence Livermore National Laboratory, is one such guru.

He got a BA in mathematics in 1987 at the University of Chicago, did something for five years that left no Internet vapor trail, and then went on to get a PhD in computer science at the University of Virginia in 1998. At that point, de Supinski joined Lawrence Livermore, and has been there ever since. Teams that he led have won the Gordon Bell Prize twice – in 2005 and 2006 – and he became an expert in HPC development environments, running the Application Development Environment and Performance Team for the Advanced Simulation and Computing (ASC) program for the US Department of Energy. He was named CTO at Livermore Computing in 2012 and is responsible for the teams that co-design the large-scale HPC systems with the compute engine, networking, and system makers of the world and that implement and run those machines.

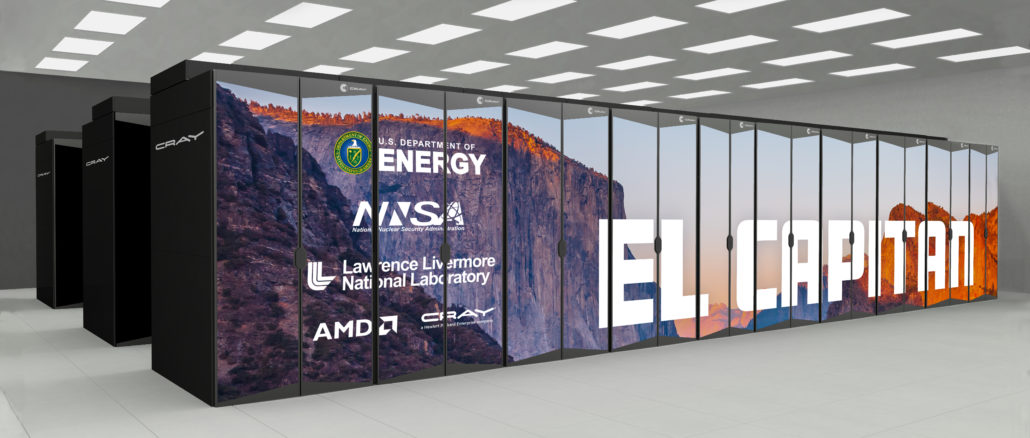

In the wake of the ISC23 conference, we had a chat with de Supinski about how the lab was deploying novel architecture AI machines and got an update on the “El Capitan” hybrid CPU-GPU system that will be its top-end machine – and very likely the most powerful HPC system in the world – when it is installed later this year.

Timothy Prickett Morgan: I understand why anyone peddling a novel architecture wants to get into Lawrence Livermore, and I understand that part of your mandate is to try new things – there is a long history of that – to see which neat new toys will be useful and which ones won’t.

Bronis de Supinski: I wouldn’t say that that’s our mandate.

The ASC program, to the extent it has had test beds, has had them at Sandia National Laboratories for a long time. But we do things because we think that they’re important for us to understand where we may be going in the future, or because we think that they have something to offer us today. The novel architectures that we are fielding are mostly in the category that we think will have something to really offer us in bigger systems in the future. And the ones that we are taking on target things we think are interesting today and that can be really useful in the future.

There are fifty or a hundred or even more companies – it feels like there are a million flowers blooming – and there are a heck of a lot of AI accelerators out there, and we fielded systems from two companies – SambaNova Systems and Cerebras Systems – that we think are doing really interesting stuff.

TPM: What is the process for figuring out which ones are interesting to Lawrence Livermore, and what workloads are you aiming such novel architecture machines at? It’s not clear to us on the outside where such machines might be useful, aside from stating the obvious: that they have massive arrays of mixed precision matrix math.

Bronis de Supinski: For any given system, it’s likely there are some specific questions involved. We are pretty willing to talk to just about anyone. There is some filtering on that. [Laughter] All the people who think that being the CTO for Livermore Computing means that I am running our IT department, they go into the spam box. But for the serious players that are investigating things that potentially could be useful for large scale computing, we listen to them and see what they’re doing.

There are a whole bunch of companies out there right now that are not very interesting, or even more frequently, maybe they are interesting for somebody else. In the AI space, I would say that 90 percent of the companies are looking to develop a really energy efficient, high performance AI processor for embedded use. So they’re looking to run inference really efficiently. They’re not training. Power and performance trade off along a Pareto curve. Everybody’s trying to be on that curve somewhere, but the bulk of them are looking to be well over on the low-power end, so their performance comes way down, too.
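For readers who want the Pareto idea made concrete: a part sits on the power/performance curve if no other part uses less (or equal) power while delivering more (or equal) performance. The short Python sketch below filters a list down to that frontier. The `Chip` type, its fields, and every number in it are hypothetical, chosen only to show an "embedded" cluster and a "datacenter" cluster both landing on the same curve at opposite ends.

```python
from typing import NamedTuple


class Chip(NamedTuple):
    name: str
    watts: float   # power draw: lower is better
    tflops: float  # throughput: higher is better


def dominates(a: Chip, b: Chip) -> bool:
    """True if a is at least as good as b on both axes and strictly better on one."""
    no_worse = a.watts <= b.watts and a.tflops >= b.tflops
    better = a.watts < b.watts or a.tflops > b.tflops
    return no_worse and better


def pareto_front(chips: list[Chip]) -> list[Chip]:
    """Keep only the chips that no other chip dominates, ordered by power."""
    front = [c for c in chips if not any(dominates(o, c) for o in chips)]
    return sorted(front, key=lambda c: c.watts)


# Hypothetical parts for illustration only; not real products or measurements.
chips = [
    Chip("edge-a", 5, 2),
    Chip("edge-b", 10, 3),
    Chip("edge-c", 12, 2.5),  # dominated by edge-b
    Chip("dc-a", 300, 400),
    Chip("dc-b", 500, 350),   # dominated by dc-a
]
print([c.name for c in pareto_front(chips)])  # ['edge-a', 'edge-b', 'dc-a']
```

Both the low-power parts and the high-performance part survive the filter; they simply sit at different points on the curve, which is the distinction de Supinski draws between embedded inference chips and what the labs need.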

TPM: And the national labs like Lawrence Livermore definitely push it hard on the other part of that Pareto curve, where you’re willing to take on some heat to get real performance.

Bronis de Supinski: That’s definitely true. I mean, we’re not building embedded systems. But don’t get me wrong. That doesn’t mean we would rule out something because it was designed for an embedded system if we thought it would handle our workload well.

The key thing for us in this AI space is what we call cognitive simulation, and that’s really looking at ways to use surrogate models. Here, an AI model calculates something that we would otherwise compute with one type of physics in a multi-physics module or a multi-physics application. So in the vernacular of our code teams, that would be replacing a package with an AI model. Each kind of physics that they simulate is a package. In order to do that, we have to build up the model for that package. Maybe GPT-4 could be quickly trained on how to do it, but it doesn’t have the data we need to build that model in the first place.
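The "replace a package with a model" idea can be sketched in a few lines of Python. Everything here is hypothetical: `detailed_package` stands in for an expensive physics package, and, to keep the example self-contained, the surrogate is a piecewise-linear interpolant fitted to samples of that package rather than a neural network like the ones the lab actually trains. The point is only the structure: the simulation loop calls whatever it is handed, so the detailed package and the surrogate are interchangeable.

```python
import bisect
import math


def detailed_package(x: float) -> float:
    """Hypothetical stand-in for an expensive physics package."""
    return math.exp(-x) * math.sin(3 * x)


class InterpSurrogate:
    """Piecewise-linear surrogate trained on samples of the detailed package."""

    def __init__(self, xs: list[float], ys: list[float]):
        self.xs, self.ys = xs, ys

    @classmethod
    def train(cls, fn, lo: float, hi: float, n: int) -> "InterpSurrogate":
        xs = [lo + (hi - lo) * i / (n - 1) for i in range(n)]
        return cls(xs, [fn(x) for x in xs])

    def __call__(self, x: float) -> float:
        # Clamp to the training range: the surrogate knows nothing outside it.
        if x <= self.xs[0]:
            return self.ys[0]
        if x >= self.xs[-1]:
            return self.ys[-1]
        i = bisect.bisect_right(self.xs, x)
        x0, x1 = self.xs[i - 1], self.xs[i]
        y0, y1 = self.ys[i - 1], self.ys[i]
        return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def run_simulation(package, steps: int = 50) -> float:
    """Toy multiphysics loop: one package is called each step and its output feeds the state."""
    state = 0.0
    for k in range(steps):
        x = 2.0 * k / steps          # input handed to the package at this step
        state += 0.1 * package(x)    # couple the package result back into the state
    return state


surrogate = InterpSurrogate.train(detailed_package, 0.0, 2.0, 200)
exact = run_simulation(detailed_package)
approx = run_simulation(surrogate)
print(f"detailed={exact:.6f} surrogate={approx:.6f}")
```

The clamping in `__call__` is deliberate: a surrogate trained on one region of input space has no basis for answering outside it, which is exactly the gap the next part of the interview turns to.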

TPM: Well, given the national security implications of your workloads and the stewardship of nuclear weapons, I sure hope GPT-4 doesn’t have that data. . . .

Bronis de Supinski: Exactly. Ultimately, what that means is we have to value things that are going to be good at training. And one of the open questions for us as we get towards cognitive simulation is at some point are we going to want to be doing dynamic training?

One of the things that we’ve been talking about right now is that we have cases where we can build a pretty good model for the part of the space we think the application is going to be running in. And so we just use that surrogate model for that package in the whole simulation. But you can easily envision a situation where, as you go and simulate more complex things, maybe you don’t really know exactly what the inputs are likely to look like for this package. And so maybe we don’t have an abundance of data, or we have no data. So maybe we will run the detailed simulation in the actual physics model for a while and generate data to get it trained up. And then we have one part of the space covered, and now we fill out this other part.
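A minimal sketch of that "dynamic training" loop, under heavy simplifying assumptions: the surrogate here is just a store of detailed results answered by nearest-neighbour lookup, and it trusts itself only within a fixed radius of inputs it has already seen. The names `detailed_package`, `OnlineSurrogate`, and `radius` are hypothetical. When an input lands outside the known region, the code runs the detailed physics and keeps the result, so "training" amounts to adding a sample; a real workflow would periodically retrain a proper model on the accumulated data.

```python
import math


def detailed_package(x: float) -> float:
    """Hypothetical stand-in for the expensive, detailed physics package."""
    return math.tanh(x) + 0.1 * x * x


class OnlineSurrogate:
    """Nearest-neighbour surrogate that only trusts itself near inputs it has seen.

    Outside that region it falls back to the detailed package and stores the
    result, so the training set grows as the simulation explores new inputs.
    """

    def __init__(self, radius: float):
        self.radius = radius                   # trust radius around each sample
        self.samples: dict[float, float] = {}  # input -> detailed result
        self.detailed_calls = 0

    def __call__(self, x: float) -> float:
        if self.samples:
            nearest = min(self.samples, key=lambda s: abs(s - x))
            if abs(nearest - x) <= self.radius:
                return self.samples[nearest]  # cheap path: answer from the surrogate
        # Unfamiliar input: run the detailed physics and keep it as training data.
        y = detailed_package(x)
        self.samples[x] = y
        self.detailed_calls += 1
        return y


model = OnlineSurrogate(radius=0.05)
# The first inputs cluster near 0; later ones drift into a new region near 1.
for x in [0.0, 0.01, 0.02, 1.0, 1.03, 0.04]:
    model(x)
print(model.detailed_calls)  # 2: one detailed run per newly visited region
```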

TPM: This sounds a bit like the way autonomous vehicles are being trained in a virtual world that runs orders of magnitude faster and with more variations than the real world.

Bronis de Supinski: Yeah, and it’s kind of like that. The important thing here is that the surrogate model is a lot faster. So that means we are very much valuing things with the potential to accelerate training significantly.

TPM: So when you train AI models using these novel architecture machines, will you run the AI inference on these same machines or will you run the inference on machines like “Sierra” today and “El Capitan” later this year?

Bronis de Supinski: Potentially, but again, it depends. Right now, in most of the experiments that we are doing, we are actually training and then running inference on the AI accelerator. But you can easily envision a situation where it’s easier to run the model on the HPC system – although the two architectures that we are investigating have some pretty good characteristics for running inference as well. They can easily have the whole model loaded for inference and run it quickly. And then you get a benefit: you can generate the calls into the model, send them over to the accelerator, and have the results come back while you are computing other packages on the system. If I can fit that within the latencies I’m getting, I’m getting parallelism out of it.
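The overlap he describes, shipping surrogate queries to an accelerator and computing other packages while they are in flight, is a standard asynchronous offload pattern. The Python sketch below shows it with `concurrent.futures`. Both `remote_inference` and `other_packages` are hypothetical stand-ins, with `time.sleep` playing the part of network-plus-inference latency and of local compute. If the remote latency fits inside the local work, a timestep costs roughly the longer of the two rather than their sum.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def remote_inference(batch: list[float]) -> list[float]:
    """Hypothetical call to a surrogate model hosted on an AI accelerator.

    The sleep stands in for network plus inference latency.
    """
    time.sleep(0.2)
    return [2.0 * x for x in batch]


def other_packages(state: float) -> float:
    """Hypothetical local physics work done on the HPC node in the meantime."""
    time.sleep(0.2)
    return state + 1.0


def timestep(executor: ThreadPoolExecutor, state: float, batch: list[float]) -> float:
    future = executor.submit(remote_inference, batch)  # send the model calls off first
    state = other_packages(state)                      # compute other packages meanwhile
    results = future.result()                          # block only when results are needed
    return state + sum(results)


with ThreadPoolExecutor(max_workers=1) as pool:
    start = time.perf_counter()
    new_state = timestep(pool, 0.0, [1.0, 2.0, 3.0])
    elapsed = time.perf_counter() - start

print(new_state, f"{elapsed:.2f}s")  # about 0.2 s overlapped, versus about 0.4 s serially
```

Threads suffice here only because `time.sleep` releases Python's global interpreter lock; in a real code the remote call would typically be nonblocking network I/O rather than a sleeping thread.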

TPM: I have seen some experiments that Google has done on its TPUs and that Cerebras has done on its wafer-scale processors where they are running proper HPC-style simulation and modeling math calculations on these devices, albeit at a lower precision than FP64. Are you exploring that possibility, too? Maybe some of the calculations you do on CPUs and GPUs can be done on the Cerebras or SambaNova machines.

Bronis de Supinski: We are definitely talking about it and beginning to work on that some with both of these companies. Right now, both of those systems are single precision engines. There’s a bunch of stuff in, say, molecular dynamics that you can do that’s pretty interesting in single precision, but some molecular dynamics needs double precision. I was just talking with some people earlier today, and I was asking how much of the code really needs to run in double precision floating point. It’s something we’re exploring, too.

But the general idea you need to consider is how these dataflow architectures work. They are not storing stuff to memory, they’re flowing it through. They only move data to feed the next stage of computation, and you build up a massive pipeline. You don’t put data somewhere until you need it again, so these architectures offer some real benefits immediately in energy use and performance per watt. Storing stuff to memory takes a lot of time as well as a lot of energy.
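As a loose software analogy only (the SambaNova and Cerebras machines map computation graphs onto hardware, which Python generators do not), the contrast between "store every intermediate to memory" and "flow each value through the pipeline" looks like this. `staged` materializes a full array after every stage; `streamed` chains generators so each element passes through all three stages before the next one starts, and no intermediate array ever exists. The three stages themselves are arbitrary.

```python
from typing import Iterable, Iterator


def scale(xs: Iterable[float], k: float) -> Iterator[float]:
    for x in xs:
        yield k * x


def offset(xs: Iterable[float], b: float) -> Iterator[float]:
    for x in xs:
        yield x + b


def square(xs: Iterable[float]) -> Iterator[float]:
    for x in xs:
        yield x * x


def staged(data: list[float]) -> float:
    """Store-to-memory style: every stage writes a full intermediate array."""
    a = [2.0 * x for x in data]
    b = [x + 1.0 for x in a]
    c = [x * x for x in b]
    return sum(c)


def streamed(data: Iterable[float]) -> float:
    """Dataflow style: each element flows through all stages; nothing is stored in between."""
    return sum(square(offset(scale(data, 2.0), 1.0)))


data = [float(i) for i in range(1000)]
print(staged(data), streamed(data))  # 1333333000.0 1333333000.0
```

Both produce the same answer; the difference is that the staged version holds three extra 1,000-element arrays, which on real hardware translates into the memory traffic and energy de Supinski is describing.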

TPM: If they had FP64 precision, would such machines be more useful? And can you convince them to do that?

Bronis de Supinski: We’re trying to talk them into it. [Laughter] But we are still asking how much 64-bit floating point is enough, because it takes a lot more transistors to support 64-bit ALUs than it does to support 32-bit or 16-bit math. So we may be a fair way from having enough understanding to figure out how to get our applications coded so that they can use an almost variable-precision approach. And maybe there are more efficient ways to implement it.

This week I was thinking we could probably pretty easily detect some of this. Say that I’m doing something in one precision, and I’m getting huge rounding errors in that precision. Can I immediately move that over to a higher precision? And does that change the quality of my answer? And then the question becomes, you know, can I actually go back down to smaller precision?
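One crude way to "detect huge rounding errors and move to a higher precision" can be sketched for a simple summation. The heuristic below is an illustrative assumption, not Livermore's method: it emulates single-precision addition by rounding each partial sum to binary32 with the standard `struct` module, accumulates the rounding error of each step, and recomputes in double precision if that estimate is large relative to the result. Production mixed-precision schemes, such as mixed-precision iterative refinement, are considerably more sophisticated.

```python
import struct


def to_f32(x: float) -> float:
    """Round a Python float (IEEE binary64) to the nearest binary32 value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def adaptive_sum(values: list[float], rel_tol: float = 1e-6) -> tuple[float, str]:
    """Sum in single precision while estimating the accumulated rounding error.

    If the estimate is large relative to the result, promote and redo the sum
    in double precision (Python's native float).
    """
    acc = 0.0  # always holds a binary32-representable value
    err = 0.0  # running estimate of the total float32 rounding error
    for v in values:
        wide = acc + to_f32(v)  # float64 add; exact unless the magnitudes differ hugely
        acc = to_f32(wide)      # what a float32 adder would have produced
        err += abs(wide - acc)
    if err > rel_tol * abs(acc):
        return sum(values), "float64"
    return acc, "float32"


print(adaptive_sum([0.5, 0.25, 0.125]))       # (0.875, 'float32')
print(adaptive_sum([1.0] + [1e-8] * 10_000))  # promoted to 'float64'
```

In the second call, each tiny addend is entirely lost when added to 1.0 in single precision, so the error estimate grows to roughly 1e-4 and the sum is redone in double precision. Because the decision is made per call, the next call starts in single precision again, which is one simple answer to the question of going back down to lower precision.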

So our next big system after El Capitan is coming in the 2029 to 2030 timeframe, and ASC is kind of trying to smooth out its budget a little bit, so we are going to be a little bit later than the usual five years between systems. And with that next machine, we are very definitely considering having some processors that are really targeted at accelerating AI or other parts of our overall workflow. Would that make sense in the overall system? And how do we structure the RFP in procurement such that somebody is encouraged to bid something like that? We very much don’t want to dictate the answer to somebody, but if somebody can come up with something interesting, we will listen. So we have to represent clearly the work that we’re trying to get done, and the vendor has to propose a system that is going to get that overall work done most efficiently, because that’s what we’re after.

You know, we like to see our systems do well on the Top500 because it’s a nice measure and it’s nice publicity. But in the end, our users don’t care. It kind of helps us maybe in recruiting CS staff, but our application teams believe it doesn’t matter that a machine is number one on the Top500 if our applications don’t run well on it.

TPM: Do you feel like giving an update on El Capitan as part of this?

Bronis de Supinski: I mean, you can ask questions, but I may not answer. [Laughter]

TPM: That’s always the case, eh? [Laughter] Are you installing El Capitan racks, and are there any components in them yet if you are?

Bronis de Supinski: We have started to get some deliveries of El Capitan equipment – I can say that.

TPM: Is it slated for the third quarter, or later? If I remember correctly, El Capitan is to be installed this year and operational next year.

Bronis de Supinski: Installation is later this calendar year.

TPM: So there is no slippage, nothing weird. That’s good.

By the way, you and I are the only ones who are calling the compute engine in El Capitan the MI300A, and I suspect we are right. The A must surely mean the APU version of the GPU.

Bronis de Supinski: I talk to people at AMD and half the time they use A and the other half they don’t.

TPM: The A is for APU, and it means they took two of the GPU chiplets out and put two CPU chiplets on the device. This seems really obvious to me. And I think there will be an MI300 variant that has eight GPU chiplets on it and doesn’t have any CPU chiplets, because there are going to be discrete use cases where you don’t want to lock down the ratios.

Bronis de Supinski: That’s not an unreasonable conjecture, but I can’t comment on AMD’s product roadmap. But I can say that when I look at the market, I could certainly see places where there are potential customers who would come to them for that. There is a trade-off between how much more business it would drive and how much more it would cost to support it.

TPM: Well, when El Capitan is actually rolled out, I want to have an architectural discussion with you. I suspect that the Instinct MI300A that you got was not precisely the original plan. You maybe got some integration and high bandwidth memory you weren’t planning on originally. That’s my suspicion. You don’t have to say a word here.

The way I see it, you are helping AMD commercialize something interesting, and that is the job of the national labs. And I don’t care if you don’t think that’s your mandate. As a taxpayer, I do.

Bronis de Supinski: [Laughter] I actually do think that that’s part of my job if I want them to offer the best possible support for things. The more it’s a product and the more it’s something that they have lots of people that want them to support, the more they’re going to actually support it.

I’m sure you have heard of the Rabbit storage modules we like to talk about, and we’re very excited about.

TPM: Of course, we wrote about Rabbit two years ago.

Bronis de Supinski: So far, Hewlett Packard Enterprise does not call it a product and I am starting to think that they’re never going to call it a product. But I hope they’ll find some other customers for it. At least I know there are other people out there that are interested in it.

TPM: Well, we can strongly encourage them to reconsider that position. HPE, consider yourself strongly encouraged. . . .

Bronis de Supinski: And to be honest, I know they’re going to sell other systems with the MI300A because it is a really nice machine and as long as they are doing that, they might as well put these Rabbit modules in there. We are actively expecting to develop things that work rather like the Rabbits for our Linux commodity systems, too.

According to AdoredTV, there are three versions planned: two like you discuss, and a third that is CPU-socket based with HBM.

https://youtu.be/L2KM-E9Ne84

There are apparently four. The MI300P is also on the drawing boards.

Can it run AI Crysis, aka the fast Walsh Hadamard transform, the most forgotten about, highly useful algorithm for machine learning?

https://ai462qqq.blogspot.com/

Great interview! The Rabbit storage cephalopods are indeed an interesting sub-unit of this overall system (while the main piece is obviously the MI300A).

I am a bit concerned about the focus on “cognitive simulation”. In my mind, it will be important to not just develop such a simulation approach, but also to rigorously evaluate its pros and cons against the more normal methods (ODE/PDE, stats/regression), not just for efficiency and accuracy, but also for explainability. I’ll point, as an example, to Jonathan Resop’s 2006 M.S. Thesis: “A Comparison of Artificial Neural Networks and Statistical Regression with Biological Resources Applications”, where, for the application area, ARIMA models performed as well as ANNs, but with far fewer parameters, and these parameters could be ascribed proper process-oriented meanings. While demonstrative, the development of AI/ML models for their own sake (forgoing comparison with classical strategies) does not contribute very much to knowledge.

I’ll add that if the following statement is meaningful: “the scale and complexity of both experimental and simulated data has moved beyond that which humans can handle in their heads”, then, clearly, we should develop computational aids that are much unlike our “heads”, following on historical trends from clay tablets (for our brain’s inaccurate memory), to the abacus and slide rule (for our brain’s poor math skills), and so forth (i.e. anything but a brain-like device).

These concerns notwithstanding, I do find El Capitan awesome (and Livermore too)!

… yeah … but brains are great! They’re the nexus of our biological imperatives: 1) survive at all costs; 2) reproduce profusely; and 3) rear many offspring. Who wouldn’t want a computer that strives to achieve that? q^8