When it comes to an economy, we get what we collectively expect. And given all of the talk and weird things that have happened in the early part of 2023, it is no surprise that companies are a bit leery about overinvesting in systems right now. And so even AMD, which has been outgrowing the server market for a number of years now, is starting to feel the macroeconomic pain a little.

But if things go the way Lisa Su & Co plan, the second half is going to be back to gangbusters, with the “Genoa” Epyc 9004 ramp proceeding apace, the “Bergamo” hyperscaler and cloud CPUs launching, and the “Antares” Instinct MI300 hybrid CPU-GPU being revealed and installed in the “El Capitan” exascale-class supercomputer at Lawrence Livermore National Laboratory.

(And yes, we are unofficially codenaming the MI300 “Antares” because AMD can’t seem to understand that we need synonyms. The MI100 was based on the “Arcturus” GPU and the MI200 series was based on the “Aldebaran” GPU, and so why not name the MI300 after Antares, a red supergiant in the constellation Scorpius and one of the largest stars in the night sky.)

In fact, Su confirmed on a call with Wall Street analysts that AMD expects the second half of this year to be back on track, with more than 50 percent growth compared to the second half of 2022, which was no slouch. However, Q1 2023 was a bit of a slouch and it looks like Q2 2023 is going to be as well.

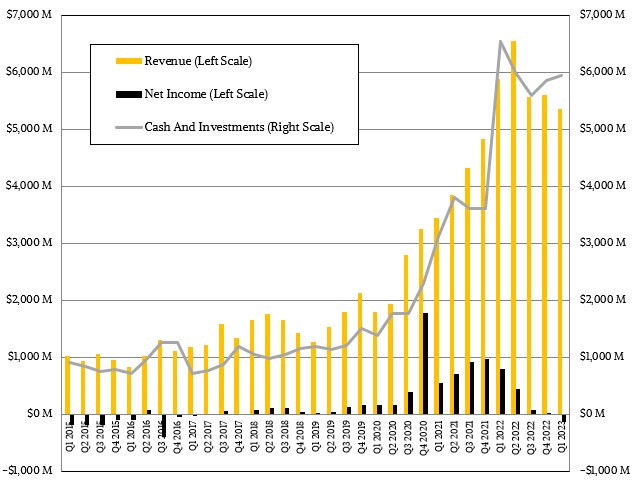

In the March quarter, AMD’s overall revenues were down 9.1 percent to $5.35 billion and thanks to heavy investing in its future datacenter roadmaps and a PC market that is still chewing on its CPU and GPU inventory from last year, the company posted a $139 million loss. Despite all of this, AMD’s cash pile stood at $5.94 billion, up 1.4 percent sequentially, but down 9.1 percent from the $6.53 billion it had in the bank a year ago.

Considering that many of the hyperscalers and cloud builders are no doubt waiting for the 128-core Bergamo Epycs and their Zen 4c cores, which were designed explicitly for some of their specific workloads, and that they already got the lion’s share of the Genoa Epyc 9004s last year – and many of them even before the formal launch of the Genoa server CPUs in November 2022 – there is little surprise that they are now taking their feet off the CPU gas. That is particularly true with the exploding interest in large language models, which is driving their own consumption as well as what the cloud builders want to sell.

And with Intel’s “Sierra Forest” analog to Bergamo not coming to market until the first half of 2024, there is no worry about competitive pressure as such for AMD with the hyperscalers and cloud builders when it comes to Bergamo. It could have a lead time of three quarters over Intel for these many-cored server CPUs. (Intel will have a slight advantage in cores, at 144 for Sierra Forest compared to 128 for Bergamo, the first time in a long time that this has happened.)

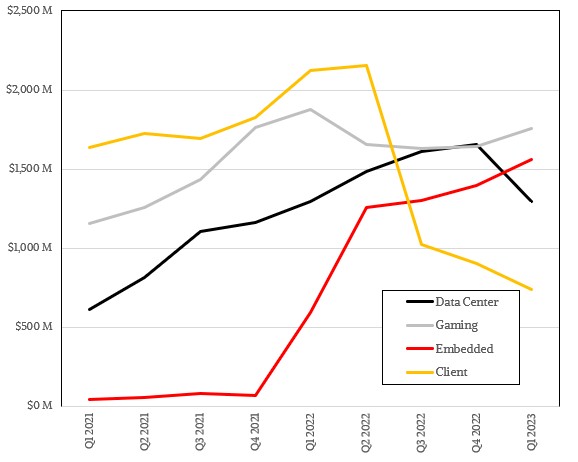

The investment in the CPU, GPU, and DPU roadmaps has come along at the same time that volumes are dropping down towards the place where AMD can only break even on what it sells in its Data Center group. In the March quarter, datacenter product sales were up a mere two-tenths of a point to just a tad under $1.3 billion, and operating income fell by 65.3 percent to $148 million. According to our model, which is loaded with numerology witchcraft just like those of our compatriots on Wall Street, AMD’s Epyc line brought in $1.22 billion in revenues, up six-tenths of a point year on year. Instinct GPU sales fell by 18.8 percent to $65 million, and Pensando DPU sales came in at around $10 million, thanks to a big installation at Microsoft that has been in the works for some time. We also estimate that Data Center group sales were down 21.8 percent sequentially.

By contrast, as we reported last week, Intel’s Data Center & AI group saw revenues decline by 38.4 percent year on year to $3.72 billion, and shipments of Xeon SP processors were off 50 percent; this group posted an operating loss of $518 million. And the Network and Edge group at Intel, which has some business in the datacenter, had sales of $1.49 billion, down 32.7 percent year on year, and posted an operating loss of $300 million.

Back to AMD’s financials. We don’t know what AMD’s volumes were, but we do know that average selling prices were down because more of the CPU mix in the first quarter went to the cloud builders and hyperscalers. We reckon further that shipments fell faster than revenues because AMD is getting a higher ASP every quarter for its CPUs as it adds content to the architecture and sells more Genoa chips than the prior generation “Milan” Epyc 7003s.

We think that sales of Epyc CPUs to the cloud builders and hyperscalers came to $952 million, up 15.4 percent year on year but down 22.5 percent sequentially from Q4 2022. And that implies that sales of CPUs that ended up going to enterprises, telcos, smaller service providers, governments, and academia were $268 million, down 30.9 percent.
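For readers who want to check how these estimates hang together, here is a minimal sketch in Python. It uses only the figures quoted above, which are our model estimates and not numbers AMD reported. The helper `prior_period` is a hypothetical name of our own, and the sketch assumes that the 30.9 percent decline for the enterprise slice is a year-on-year comparison.

```python
# Figures quoted in the article, in millions of US dollars.
# These are model estimates, not AMD-reported numbers.
EPYC_HYPERSCALE = 952.0   # Epyc sales to cloud builders and hyperscalers
EPYC_ENTERPRISE = 268.0   # Epyc sales to everyone else
INSTINCT_GPU = 65.0
PENSANDO_DPU = 10.0


def prior_period(current: float, pct_change: float) -> float:
    """Back out the earlier-period value implied by a percent change.

    Hypothetical helper for illustration: a value that rose 15.4 percent
    to `current` started at current / 1.154.
    """
    return current / (1.0 + pct_change / 100.0)


# The two Epyc slices should add up to the Epyc line, and the three
# product lines should add up to the Data Center group total.
epyc_total = EPYC_HYPERSCALE + EPYC_ENTERPRISE
datacenter_total = epyc_total + INSTINCT_GPU + PENSANDO_DPU

# Year-ago Epyc revenue, implied two different ways.
# Assumption: the 30.9 percent enterprise decline is year on year.
epyc_year_ago_from_total = prior_period(epyc_total, 0.6)
epyc_year_ago_from_parts = (
    prior_period(EPYC_HYPERSCALE, 15.4) + prior_period(EPYC_ENTERPRISE, -30.9)
)

print(f"Epyc total:               ${epyc_total:,.0f}M")
print(f"Data Center total:        ${datacenter_total:,.0f}M")
print(f"Year-ago Epyc (total):    ${epyc_year_ago_from_total:,.1f}M")
print(f"Year-ago Epyc (by slice): ${epyc_year_ago_from_parts:,.1f}M")
```

The two year-ago figures land within a fraction of a million dollars of each other, which suggests the quoted growth rates are internally consistent under that year-on-year assumption.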

But what AMD president and chief executive officer Lisa Su is thinking about right now is getting competitive in the AI space, and she noted that the PyTorch AI framework had been ported to the ROCm environment for Instinct GPU accelerators and that the LUMI supercomputer in Finland that is based on Instinct MI250X GPUs and Epyc 7003 CPUs had been used to train a very large language model in Finnish.

“Customer interest has increased significantly for our next-generation Instinct MI300 GPUs for both AI training and inference of large language models,” Su explained on the call with Wall Street analysts. “We made excellent progress achieving key MI300 silicon and software readiness milestones in the quarter, and we’re on track to launch MI300 later this year to support the El Capitan exascale supercomputer win at Lawrence Livermore National Laboratory and large cloud AI customers.”

That last bit is new, and one wonders just how many CPU-GPU complexes El Capitan will take and how many will be left over for the cloud builders and hyperscalers to buy and deploy. It is hard to say how many “Hopper” H100 GPUs – some extended with “Grace” Arm server CPUs – Nvidia can make, but we strongly suspect, given the keen interest in large language models, that demand will far exceed supply. That will drive up the price of the Nvidia GPUs and CPUs, and that will also compel at least some customers to consider the AMD alternatives.

Su also said that all of the AI teams across AMD’s various divisions and groups have been combined into one single organization, which will be managed by Victor Peng, the former chief executive officer of FPGA maker Xilinx and the general manager of AMD’s Embedded group. The new AI group, which may be one of those virtual, cross-group things, will drive AMD’s end-to-end AI hardware strategy as well as its AI software ecosystem, including optimized libraries, models and frameworks spanning all of the company’s compute engines.

“We are in the very early stages of the AI computing era, and the rate of adoption and growth is faster than any other technology in recent history,” Su explained. “And as the recent interest in generative AI highlights, bringing the benefits of large language models and other AI capabilities to cloud, edge and endpoints require significant increases in compute performance. AMD is very well positioned to capitalize on this increased demand for compute based on our broad portfolio of high-performance and adaptive compute engines, the deep relationships we have established with customers across a diverse set of large markets, and our expanding software capabilities. We are very excited about our opportunity in AI. This is our number one strategic priority, and we are engaging deeply across our customer set to bring joint solutions to the market.”

AMD missed the first wave of GPU acceleration for HPC simulation and modeling, but has caught up to a certain degree with the current CPUs and GPUs and ROCm stack. And it is far behind Nvidia in the second wave of GPU acceleration with AI training. But with demand exceeding supply, and with the help of the HPC community that also has to catch up with the hyperscalers, AMD has a good chance to get its piece of the AI pie. No matter what, AMD will have an easier time than Intel will with its GPUs and oneAPI stack because AMD has been a predictable supplier of CPUs and now GPUs.

Perhaps most importantly for Nvidia, AMD, and Intel, AI is pretty much recession proof right now. Call it the Dot Chat Boom if you must.

“(Intel will have a slight advantage in cores, at 144 for Sierra Forest compared to 128 for Bergamo, the first time in a long time that this has happened.)”

The above statement is not very accurate: Bergamo has more threads than Sierra Forest (256T vs 144T), because the Bergamo Zen 4c cores are dual-threaded while the Sierra Forest E-cores are single-threaded.

Threads are not cores. Threads are just a hardware mechanism to share a core. When you have high throughput workloads and high utilization, they don’t really help that much.

There may also be a bit of regression to the mean going on here. Not so much that AMD didn’t sell enough this quarter, as much as they sold a whole heck of a lot a year ago.

Just a suggestion, but the first chart with “net income” reflects GAAP data, their net including non-cash (IP, goodwill, etc.) write offs from purchasing Xilinx & Pensando, and stock incentives. It would be more realistic if you’re interested in showing the real money/cash they are making to show their non-GAAP net income that only includes actual money outlays.

I understand. But my attitude is, if the money got spent, then it ain’t really there. I judge by the net income and look at the larger picture. Xilinx is paying off, but it had a cost.

From ancient I-Ching, to modern AI-Ching, for hefty Ka-Ching! … Wing Chun or barraCUDA … may all Schools of valorous PhDs emerge victorious from this riveting contest! In the end, the only question that really matters is: Is Victor Peng really Ip Man, while NVIDIA is Shaolin, or is it the other way around (or neither, or both, …)? (eh-eh-eh!)

Number 1 priority? It should have been their #1 priority five years ago. They lost so much time on nVidia that I can’t see them ever catching up at all. Even Intel got the memo earlier than AMD and by all means Intel is not a fast moving ship.

AMD had to go through HPC to get there. Without those exascale HPC wins and the cred it gave them, they would not have been a trusted option for AI.

and through Xilinx;)

AMD can stick AI where the sun don’t shine. It is because of all of this AI crap that is messing up our PCs, and Microsoft and Intel and AMD don’t care. They are setting up Terminator: it had to have the input, and then it made it look like a virus so that the people would turn it on and have the info, and goodbye world. But Microsoft and Apple and AMD and Asus and Intel have lost their minds. They know that AI is a clear danger, but they don’t care about you being safe or about keeping the world safe; all they can see is money. So they have let AI mess up PCs across the world and don’t care. AI is taking over and these companies don’t see it at all. This is very bad for us, the people.