In the past several decades, data processing and storage systems could be architected from best of breed components, and the market could – and did – sustain multiple suppliers of competing technologies in each of the categories of compute, networking, and storage.

But the post-Moore’s Law era, the IT sphere is getting increasing vertical in its thinking. Co-design across a vertically integrated stack of compute, storage, networking, and systems software is therefore one of the main ways to keep delivering the kinds of system-level reductions in total cost of ownership that we used to just get from transistor shrinks and some modest packaging tricks for semiconductors.

That is why Intel, Nvidia, and AMD have all been building their arsenals of compute and networking and doing very specific things with both to help improve the way they act with storage. (Intel has divested itself of its flash storage business and we shall see what happens with Optane persistent memory.)

Given this, we watched with interest at the Financial Analysts Day hosted by AMD earlier this month as it talked about the networking adapters that it has gotten by virtue of its $49 billion acquisition of FPGA and adapter card maker Xilinx and its $1.9 billion acquisition of DPU maker Pensando. While these two deals give AMD network adapters and all kinds of software to reach out into the networks, and some of the best people to design SerDes (Xilinx) and packet processing engines (Pensando) that are on the planet, we think that neither acquisition has gone far enough. AMD needs to have datacenter switching and quite possibly the kind of routing that is moving to hybrid merchant silicon, too.

We know what you are thinking. The last time AMD made a foray into networking, when it paid $334 million to acquire microserver maker SeaMicro in February 2012, mostly to get its hands on that 1.28 Tb/sec “Freedom” 3D torus interconnect that was at the heart of the SeaMicro machine, this didn’t turn out all that well. To be fair, Intel’s own purchases of the Fulcrum Microsystems, QLogic InfiniBand, and Cray “Gemini” XT and “Aries” XC supercomputer interconnects didn’t exactly pan out for Intel, either. Everyone in compute was freaking out back then because Cisco Systems had created converged serving and networking with its “California” Unified Computing System and were trying to get into the networking game almost reflexively.

Well, we have had a decade now to reflect on that first pass of blade computing and integrated server-switching hybrids, and the architecture is a bit more flexible, of necessity, than what Cisco was dreaming about with the UCS platform and that IBM, HPE, Dell, and others emulated as they mashed up their server and switching iron.

In the long run, the control plane will be isolated from the application and data planes in systems, which will be comprised of clusters running bare metal, virtualized, or containerized instances. That control plane isolation will be provided by the DPU, and we can think of a CPU as a fat memory compute engine with very fast serial processing and some decent matrix and vector math for AI inference hanging off the DPU. The GPU is a fast parallel processor for acceleration of massively parallel parts of the code, and the FPGA also hangs off the DPU to provide accelerated dataflow applications that might otherwise be written for CPUs using Java. (The Solarflare FPGA accelerated SmartNICs sold by Xilinx are an earlier example of this, putting the FPGA in the “bump in the wire” to do preprocessing on financial transactions before data even reached the CPU.) FPGAs also include hard-coded DSP blocks that are essentially matrix engines that can accelerate AI inference, and that is why AMD is moving these DSP blocks, which it calls AI engines, from the Xilinx hybrid FPGA devices to its client and server CPUs.

Collections of the compute engines and different kinds of caches and memories may be glommed together as chiplets and put into a single package – a socket or a module of some sort. But ultimately, whatever that abstraction is called – a motherboard, a socket, a compute complex – it will talk to a DPU and then out to the network full of clients, other compute engines, and storage.

With the Xilinx and Pensando deals, AMD has the interim SmartNIC and the emerging DPU bases covered – and these may not be inside the server once the PCI-Express switching roadmap accelerates and CXL protocol comes along for the ride and opens up the storage and memory hierarchy.

We are not fully into this DPU-centric view of the world, and in the interim, AMD has to sell what is already on the truck.

That includes FPGA-accelerated Solarflare SmartNICs, which are designed for low latency and high throughput, and the beefier and more compute intensive Alveo FPGA accelerated SmartNICs, which have packet processing engines that can cope with what Forrest Norrod, general manager of the Data Center Solutions group at AMD (which is the name of the custom server business at Dell that Norrod used to run, not coincidentally), calls “extreme packet processing rates.”

The current Alveo “adaptive network accelerator,” as Norrod calls it, is shipping to hyperscaler and cloud builder customers now and has two 200 Gb/sec ports and can process 400 million packets per second across that FPGA engine. This is not just a bump in the wire like the Solarflare adapter, but several bumps.

There is a follow-on Alveo SmartNIC coming in 2024, which we presume will double the packet processing rate and double to port bandwidth to 400 Gb/sec when it plugs into PCI-Express 6.0 slots in servers.

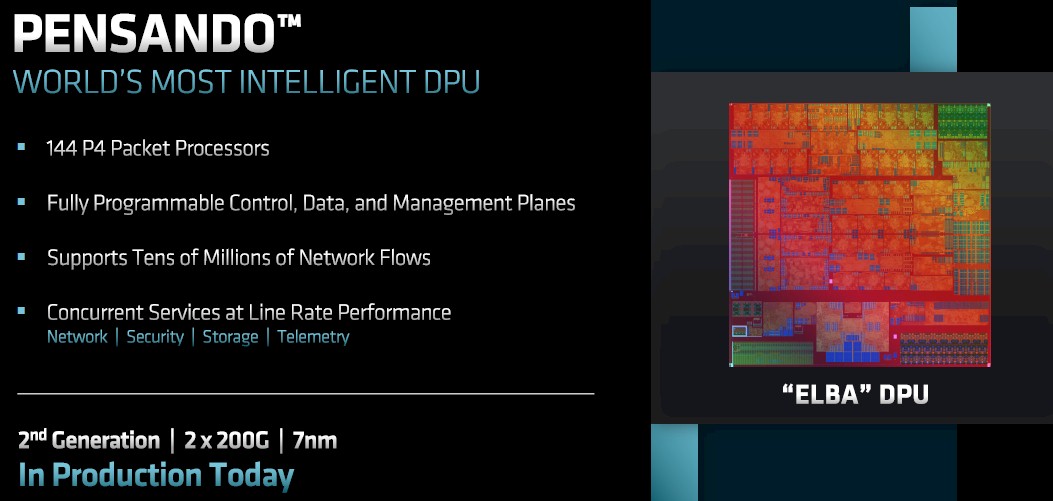

The eponymous Pensando DPUs are not programmable in C converted to VHDL or directly in VHDL, but in the P4 network programming language that came out of Stanford University and that is being championed by Google and now Intel in the wake of its acquisition of programmable switch ASIC maker Barefoot Networks back in June 2019. The Pensando DPUs have packet processing engines that are programmable in P4 and can support multiple services running at the same time at line rate and handle tens of millions of network flows across a fleet of servers equipped with them.

To be precise, the “Elba” chip created by the Pensando team – some of the same people that brought Cisco is Nexus switches and its UCS servers – has 144 P4 packet processors. The Elba device is the second generation of Pensando DPU ASIC, and is etched in 7 nanometer technologies from Taiwan Semiconductor Manufacturing Co and packs two ports running at 200 Gb/sec.

This is all well and good, and it gives AMD plenty to talk to hyperscalers, cloud builders, HPC centers, and large enterprises about when it comes to networking. But like Nvidia and Intel, AMD is going to need to own more of the networking stack. It cannot just stop at the SmartNIC and the DPU because switches, routers, DPUs, and the myriad compute engines mentioned above are going to have to work in concert to do certain kinds of collective processing in the right part of the compute-network complex.

Where you start out is not always where you end up. Here is a good case in point. Amazon Web Services bought Annapurna Labs in 2015 for $350 million, which was designing its initial “Nitro” DPUs. The Annapurna team did several Nitro iterations and then moved on to Graviton CPUs and specialized AI training ASICs called Trainium and specialized matrix math engines for AI inference called Inferentia. We also think that there is a good chance that the same Annapurna team has expanded out into switch ASICs.

If Annapurna, which was founded to create SmartNIC engines, can move down to XPUs and possibly up to switch and router ASICs, then why could not the Pensando team also move up to switch ASICs? It is not like the Pensando founders never saw a switch or router ASIC before. They now have access to high speed Serdes from Xilinx and plenty of P4 skills, and might create a very good switch and router ASIC lineup and give Intel, Nvidia, Marvell, Broadcom, and Cisco a run for the money.

Or AMD could go an even quicker route and buy Xsight Labs, the upstart switch ASIC maker that was created by the founders of EZchip, which was acquired by Mellanox as the basis of its DPU efforts a few years before Nvidia bought Mellanox. (And, frankly, EZchip was one of the reasons why Mellanox was worth so much money.) Xsight Labs started sampling its X1 ASICs, a 25.6 Tb/sec device that sports 32 ports running at 800 Gb/sec, and a 12.8 Tb/sec device that supports 32 ports running at 400 Gb/sec, back in December 2020. The devices use 100 Gb/sec signaling with PAM-4 encoding to drive 200 Gb/sec per lane out of the SerDes, from what we understand, which is pretty good. Intel, Microsoft, and Xilinx all kicked in a big chunk of the $116 million in four rounds of funding that Xsight Labs raised since it was founded five years ago.

But as we say, AMD has the skills now to make its own switch ASICs from scratch, and Xsight Labs, which we presume is itching to go public or to sell, might be asking too much money. But let’s face it: How many potential buyers can there be at this point for a switch ASIC maker? Marvell already has Xpliant, Innovium, and its homegrown Prestera stuff. Intel has Fulcrum and Barefoot. Broadcom already has three lines of ASICs for switching and routing. Cisco has Silicon One for switching and routing, and it seems like a very good architecture. Avigdor Willenz, who founded Galileo Technology (sold to Marvell in 2001 for $2.7 billion), Annapurna Labs (sold to AWS in 2015 for $350 million), and Habana Labs (sold to Intel in 2019 for $2 billion) did all of this math a long time ago. If AMD is not negotiating with Xsight Labs, we would be very surprised.

And if not, AMD should pick up the phone and call Israel.

Isn’t it time to bin that stock photo of ancient media converters?

Yes, it was. HA!

Very interesting insight indeed. It will definitely be cheaper than buying Marvell, even if the latter were for sale, and cleaner too without the stuff AMD probably doesn’t want. But in order for this argument to stick, Xsight lab probably needs one pilot hyperscaler customer. FB and Microsoft are loyal customers of Arista/Broadcom, and AWS is on its own, Chinese clouds unlikely, may be Google can give it a try? or maybe Oracle cloud, why not.