Sometimes, you do put new wine in old bottles. This is what it looks like Meta – well, really its Facebook social network group – is doing as it adds a microserver node based on a custom AMD “Milan” Epyc 7003 processor to its datacenter infrastructure.

Facebook has been one of the big proponents of microservers, and a substantial portion of its server fleet, which we profiled here in 2016, then here in 2017, and once again here in 2019, is based on single-socket microservers. In many cases, Facebook has been able to compel Intel to create custom processors for its needs; the Xeon D is largely a part that Facebook needed for core compute and that has been repurposed by Intel into storage and networking compute roles. The “Yosemite” V3 microservers that debuted last May were based on a custom “Cooper Lake” Xeon SP processor, which is neat considering that the only other Cooper Lake processors available, and the only mainstream ones, were for four-socket and eight-socket servers. (Otherwise, two-socket Cooper Lake Xeon SPs would step on the “Ice Lake” Xeon SP announcement in April of this year.) Facebook wanted the DLBoost AI acceleration on all of its compute CPUs, and hence Intel accommodated.

Now, it is AMD’s turn to provide Facebook with a custom single-socket Epyc 7003 for its microservers. And it is looking like the custom 36-core Epyc 7003 used in the new “North Dome” Yosemite V2 microserver that Facebook was showing off at this week’s OCP Global Summit will compete rather nicely against the “Delta Lake” Yosemite V3 server based on Intel’s custom 26-core Cooper Lake Xeon 8321HC-Platinum processor. We drilled down into the Yosemite V3 server refresh here, and not much was said about this processor at the time because Cooper Lake was a month away from launch, and Facebook was getting a single-socket version, which no one else got, as well as four-socket and eight-socket versions, which is what everyone else had access to.

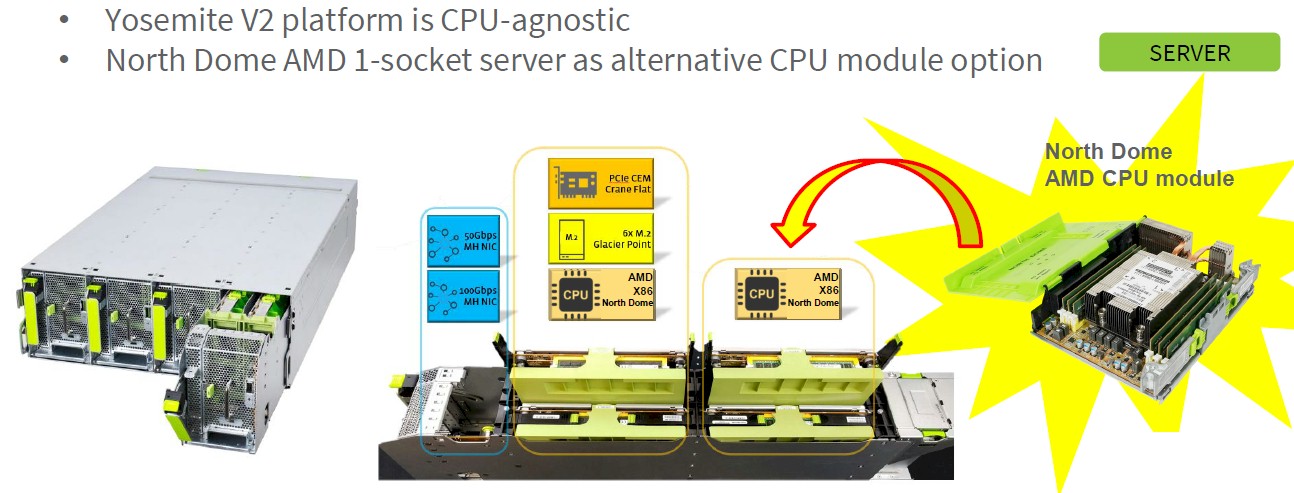

The North Dome CPU module plugs into the Yosemite V2 sleds, which put four microservers into the chassis, mounted vertically, like this:

The Yosemite servers are designed to fit four servers per sled and have four sleds per shelf in the Open Rack V2 server rack. The Open Rack V2 has room for two power shelves, eight server shelves, one top of rack switch for linking them all to the network, and two blanking panels for future expansion. That is a total of 128 servers per Open Rack, which is fairly dense compute as air-cooled, hyperscale datacenters go.
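The density figure above is simple multiplication, and a minimal sketch makes the chain explicit. The constant and function names here are our own, chosen for illustration; the numbers come straight from the paragraph above.

```python
# Rack density for Yosemite sleds in an Open Rack V2, using the figures
# quoted in the text. Names are illustrative, not from any OCP document.
SERVERS_PER_SLED = 4
SLEDS_PER_SHELF = 4
SERVER_SHELVES_PER_RACK = 8


def servers_per_rack(servers_per_sled, sleds_per_shelf, shelves_per_rack):
    """Multiply out the servers in one fully populated rack."""
    return servers_per_sled * sleds_per_shelf * shelves_per_rack


total_servers = servers_per_rack(
    SERVERS_PER_SLED, SLEDS_PER_SHELF, SERVER_SHELVES_PER_RACK
)
print(f"{total_servers} servers per Open Rack")  # 128 servers per Open Rack
```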

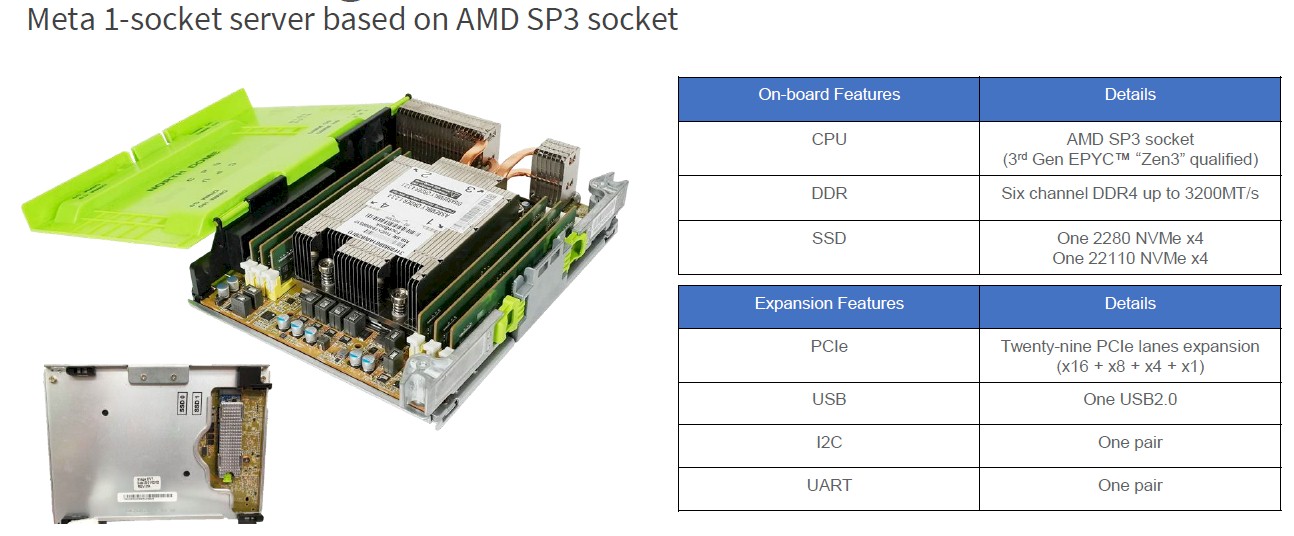

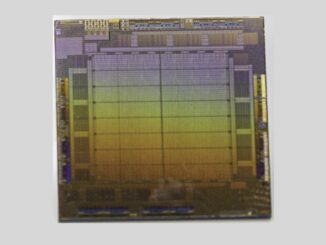

The custom Epyc 7003 part that Facebook has commissioned from AMD has 36 of its potential 64 cores activated, along with a bunch of optimizations for Facebook applications at the web, database, and inference tiers of its application stack. The system board used in the North Dome server also has been tuned to use the power management algorithms of Facebook and has board-level optimizations to reduce power consumption, plus operating system and BIOS tweaks to boost performance.

When it is all done, this 36-core chip consumes 95 watts, which is 7 watts higher than the custom 26-core Cooper Lake Xeon 8321HC-Platinum from Intel used in the Delta Lake Yosemite V3 server. That Intel chip has a base clock speed of 1.4 GHz and a turbo boost to 3 GHz when only one core is activated. We did a little guesstimating on the back of the envelope and think that the custom Epyc 7003 runs at around 1.8 GHz and still fits in that 95 watt envelope. We might know more once OCP gets the specifications of the North Dome server published.

Here are the specs, such as they have been revealed:

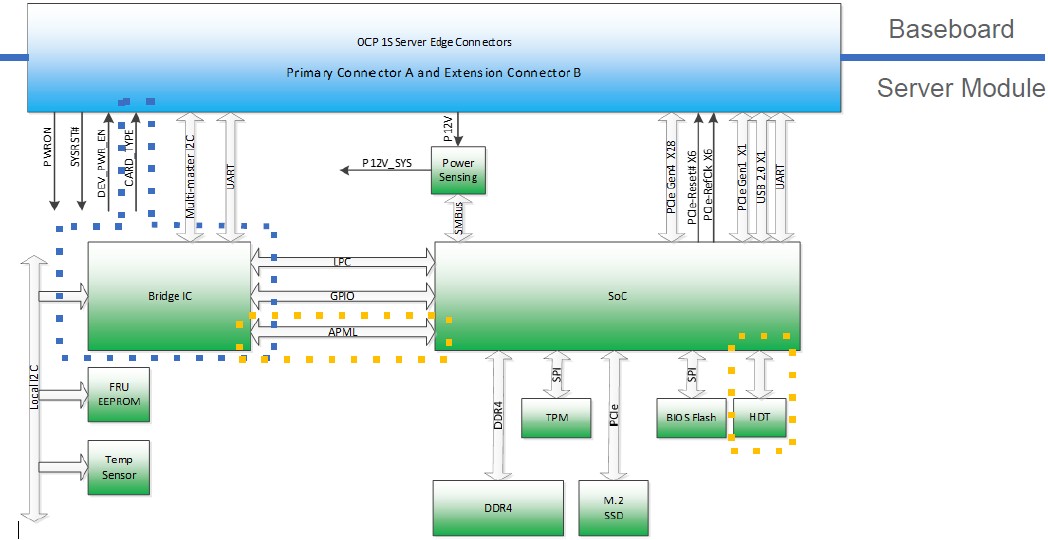

Some of the power savings in the custom Epyc part AMD made for Facebook appear to come from two of the chiplets on the Epyc complex being dead, which we infer because the custom CPU has only six DDR4 memory controllers. The memory runs at 3.2 GHz. The server only makes use of a total of 29 PCI-Express 4.0 lanes, one each for x16, x8, x4, and x1 slots. So some of the PCI-Express controllers on the Milan processor complex are also turned off.
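To put the trimmed I/O in context, here is a rough sketch that tallies the lanes and estimates peak memory bandwidth. Several inputs are our assumptions rather than figures from Facebook: that a stock Epyc 7003 exposes 128 PCI-Express 4.0 lanes and eight DDR4 channels, that the 3.2 GHz memory speed means DDR4-3200 (3,200 MT/sec), and that each channel is 64 bits (8 bytes) wide.

```python
# I/O tally for the custom part versus a stock Milan socket.
SLOT_WIDTHS = [16, 8, 4, 1]      # from the text: x16, x8, x4, and x1 slots
STOCK_PCIE_LANES = 128           # assumption: standard Epyc 7003 lane count
CUSTOM_MEM_CHANNELS = 6          # from the text
STOCK_MEM_CHANNELS = 8           # assumption: standard Epyc 7003
TRANSFERS_PER_SEC_M = 3200       # assumption: DDR4-3200, in MT/sec
BYTES_PER_TRANSFER = 8           # assumption: 64-bit channel


def peak_bandwidth_gbs(channels):
    """Theoretical peak memory bandwidth in GB/sec (decimal gigabytes)."""
    return channels * TRANSFERS_PER_SEC_M * BYTES_PER_TRANSFER / 1000


lanes_used = sum(SLOT_WIDTHS)
lanes_idle = STOCK_PCIE_LANES - lanes_used
custom_bw = peak_bandwidth_gbs(CUSTOM_MEM_CHANNELS)
stock_bw = peak_bandwidth_gbs(STOCK_MEM_CHANNELS)

print(f"PCIe lanes used: {lanes_used}, left unused: {lanes_idle}")
print(f"Peak memory bandwidth: {custom_bw:.1f} GB/sec vs {stock_bw:.1f} GB/sec stock")
```

These are theoretical ceilings under the stated assumptions, not measured numbers for the North Dome board.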

Here is the schematic of the North Dome system board:

It is hard to compare across generations and architectures, but it is a safe bet that single-thread performance on the custom Milan CPU that AMD made for Facebook is considerably higher than on the custom Cooper Lake part, due to a big architectural difference as well as higher clock speeds. We looked at SPEC integer tests for these processors and figure that the AMD Milan core delivers about 30 percent more performance per core per clock than the Intel Cooper Lake core, and that the AMD core is also running at a 28.6 percent higher clock speed to boot (our estimated 1.8 GHz against the 1.4 GHz base clock of the Intel chip). Call it a 65 percent single-threaded performance advantage. And then you add in the fact that there are ten more AMD cores, with only a 7 watt increase in power dissipation, and you get an additional 38.5 percent more throughput from the higher core count in the AMD device. That’s a 2.3X increase in overall throughput for that incremental 7 watts, which is a big change in performance per watt.
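For those who want to check the envelope math, here is a minimal sketch of the estimate. Keep in mind that the 1.8 GHz AMD clock is our guess and the 30 percent per-clock advantage is our reading of SPEC integer results, so the output is only as good as those two assumptions. The unrounded product for single-thread performance lands a little higher than the rounded figure quoted above, but the overall throughput still rounds to 2.3X.

```python
# Back-of-envelope comparison of the custom Milan and Cooper Lake parts.
INTEL_CORES = 26
AMD_CORES = 36
INTEL_BASE_GHZ = 1.4
AMD_EST_GHZ = 1.8        # assumption: our guesstimate, not a published spec
PER_CLOCK_ADVANTAGE = 1.30  # assumption: estimated from SPEC integer tests
AMD_WATTS = 95
INTEL_WATTS = AMD_WATTS - 7  # the text says the AMD part draws 7 watts more

clock_ratio = AMD_EST_GHZ / INTEL_BASE_GHZ         # about 1.286
single_thread = PER_CLOCK_ADVANTAGE * clock_ratio  # about 1.67
core_ratio = AMD_CORES / INTEL_CORES               # about 1.385
throughput = single_thread * core_ratio            # about 2.3
power_ratio = AMD_WATTS / INTEL_WATTS              # about 1.08
perf_per_watt = throughput / power_ratio           # about 2.1

print(f"Clock ratio:          {clock_ratio:.3f}")
print(f"Single-thread ratio:  {single_thread:.2f}")
print(f"Core count ratio:     {core_ratio:.3f}")
print(f"Throughput ratio:     {throughput:.1f}X")
print(f"Perf per watt ratio:  {perf_per_watt:.1f}X")
```

The comparison uses the Intel base clock rather than its single-core turbo speed, which is the same simplification the text makes.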

OK, that leaves us to ask why this AMD chip is not appearing in Yosemite V3 servers inside of Facebook, which seems odd on the face of it. So, why would Facebook support Yosemite V2 nodes with this new AMD motor instead of putting it in the shiny new Yosemite V3 sleds? Because Facebook might be taking out old Intel Xeon D sleds and sliding in new AMD Epyc 7003 sleds rather than trying to build whole new stacks of Yosemite V3 infrastructure. If an existing design works, why change it? If the server racks and their enclosures can live in the world for ten years or more, why change them? Moreover, server manufacturing is tight and parts are scarce during this coronavirus pandemic, so why not upgrade the Yosemite V2 Twin Lakes nodes rather than wholesale replace the Yosemite sleds with V3 machinery?

What that means for Facebook is that the real comparison is not even with the custom Cooper Lake part shown off in the Yosemite V3 server earlier this year, but with Xeon D-2100 and Xeon E5 series processors that went into the “Twin Lakes” uniprocessor server modules in the Facebook fleet many, many years ago. The custom AMD chip will blow these old CPUs away.

I think you have the details of the Milan part wrong. The I/O is all on one chiplet, so the six DDR4 DIMMs probably indicate a physical limitation. The same applies to all of the reduced I/O limits. There are several ways that AMD could scatter 36 cores across up to eight chiplets. Four chiplets only provide 32 cores, so at least five are needed. I’m guessing though that there are six Zen 3 chiplets with two cores on each disabled. That makes for a nice symmetric system and allows AMD to use harvested chips with some defects. I don’t know if it is possible to harvest I/O chiplets with single-point failures, but that also might explain some of the I/O restrictions.
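The chiplet arithmetic in the paragraph above can be checked with a short sketch. It assumes, as that paragraph does, up to eight Zen 3 chiplets with eight cores apiece, and it only looks for symmetric layouts where every active chiplet has the same number of cores enabled. The function name is hypothetical, and AMD has not confirmed how the 36 cores are actually laid out.

```python
# Enumerate symmetric ways to spread a core count across compute chiplets.
TOTAL_CORES = 36
MAX_CHIPLETS = 8        # assumption: full Milan package
CORES_PER_CHIPLET = 8   # assumption: cores on one Zen 3 chiplet


def symmetric_layouts(total_cores, max_chiplets, cores_per_chiplet):
    """Return (chiplets, active cores each, disabled cores each) tuples."""
    layouts = []
    for chiplets in range(1, max_chiplets + 1):
        if total_cores % chiplets != 0:
            continue  # cores would not divide evenly across chiplets
        active = total_cores // chiplets
        if active <= cores_per_chiplet:
            layouts.append((chiplets, active, cores_per_chiplet - active))
    return layouts


# Ceiling division: the fewest chiplets that can hold all of the cores.
min_chiplets = -(-TOTAL_CORES // CORES_PER_CHIPLET)
layouts = symmetric_layouts(TOTAL_CORES, MAX_CHIPLETS, CORES_PER_CHIPLET)

print(f"Minimum chiplets needed: {min_chiplets}")
for chiplets, active, disabled in layouts:
    print(f"{chiplets} chiplets x {active} cores ({disabled} disabled on each)")
```

Under these assumptions the only symmetric option is six chiplets with six active cores each, which matches the guess above; asymmetric layouts across five, seven, or eight chiplets remain possible.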