The latest list of the world’s top 500 floppiest supercomputers was released this week and the biggest news to report is that there is almost no news to report. The top 10 systems are unchanged from the previous TOP500 rankings released six months ago and the overall turnover rate was one of the lowest in the list’s 26-year history.

Arguably, the Chinese did make some news by surging further ahead of the United States in the number of supercomputers on the list. The Middle Kingdom now lays claim to 227 systems, up from 219 six months ago, while the US has dropped to 118. But as we have complained before, many of these machines are of dubious HPC pedigree, having been added to the list because Chinese Internet service providers and their vendor partners wanted to show off their flops by running Linpack on many partitions of their super-sized clusters.

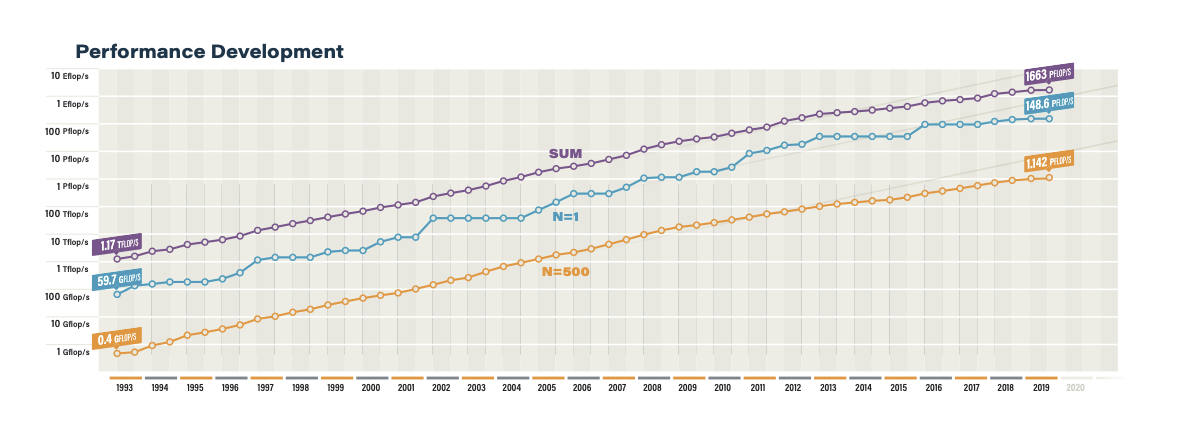

Even with those kinds of shenanigans going on, the list’s aggregate performance increased by only about 6 percent over the past six months, from 1.56 exaflops to 1.66 exaflops. In addition, the entry level to the TOP500 barely budged this time around, increasing from 1.02 petaflops to 1.14 petaflops. The new entry-level system at number 500 was number 400 on the previous list. The highest rank for a newly added system is position 25, which means the top 24 supercomputers are retreads from June.
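For readers who want to check the arithmetic, here is a minimal sketch that recomputes the growth rates from the figures quoted above. The function and variable names are ours and purely illustrative; the only inputs are the four numbers in the preceding paragraph.

```python
def percent_change(old: float, new: float) -> float:
    """Return the percentage change going from old to new."""
    return (new - old) / old * 100.0


# Figures quoted in the article (previous list -> current list).
aggregate_prev, aggregate_now = 1.56, 1.66  # exaflops, whole list
entry_prev, entry_now = 1.02, 1.14          # petaflops, system at number 500

aggregate_growth = percent_change(aggregate_prev, aggregate_now)
entry_growth = percent_change(entry_prev, entry_now)

print(f"Aggregate performance growth: {aggregate_growth:.1f}%")
print(f"Entry-level growth:           {entry_growth:.1f}%")
```

On these inputs the aggregate figure comes out at a little over 6 percent and the entry level at a little under 12 percent, both for a six-month interval.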

The irony here is that this is all happening during one of the most dynamic periods for supercomputing in a generation. The scope of HPC workloads has expanded to include AI, machine learning, and data analytics. Processor hardware is becoming more diverse, with GPUs (from Nvidia, AMD, and Intel) and Arm (from Marvell, Fujitsu, and Ampere) challenging the dominance of X86. Plus, a resurgent AMD has made X86-based HPC exciting again. Meanwhile, storage and memory hierarchies are becoming deeper and more interesting, thanks to the expanded use of non-volatile and 3D stacked memories.

Fueling much of this is the race to exascale, which has become a catalyst for a lot of these technologies, as well as a convenient milestone for redefining the application scope of high performance computers. Billions of dollars in R&D are being funneled into exascale programs in the US, Europe, China, and Japan in anticipation of creating machines that are more than just an evolution of the previous generation of HPC hardware.

But perhaps that’s the problem. These billions of dollars represent a form of delayed gratification, which will only be reflected on the list when these systems start to show up in the field in a year or two.

One example of how that is playing out is the Arm-HPC story. Of the four big supercomputing powers – the United States, China, Japan, and the European Union – all except the US have Arm-powered exascale systems in their plans. But in this latest TOP500 list, there are only two supercomputers equipped with Arm processors: the “Astra” machine at Sandia National Laboratories, built by Hewlett Packard Enterprise using Marvell’s ThunderX2 processors, and the “Fugaku” prototype at RIKEN, built by Fujitsu using its A64FX prototype processors. Neither is in the top 100. Yet, if all goes according to plan, in a few short years there will be multiple Arm-based exascale systems decorating the top of the list.

One other potential side effect of the exascale push is that HPC customers who purchase systems in the wake of the leading-edge sites may be delaying acquisitions until they get a clearer picture of how well the different exascale platforms are received by their initial users. For these second-tier players, which include universities, commercial buyers, and smaller nations, there’s little point in buying an Arm supercomputer or one with Intel’s upcoming Xe GPUs if those machines don’t live up to expectations. Better to let someone else separate the stunt machines from those with a commercial future.

In essence, that’s the double-edged sword of these kinds of artificial milestones: they fuel greater innovation because of the increased investment, but they create something of a bubble in the pipeline as users sit on their budgets waiting for those investments to pay off.

That said, we shouldn’t blame all of the TOP500’s lethargic behavior on the exascale phenomenon. As is evident from the graphic above, the list’s performance trajectory started tailing off in 2008, which coincided with the slowdown in system turnover. As we explained in June, the performance tail-off appears to be the result of the end of Dennard scaling, a Moore’s Law-related phenomenon which enabled processor clocks to get faster without requiring additional power.
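As a rough illustration of why Dennard scaling mattered, the textbook argument can be sketched as follows. It assumes that dynamic switching power dominates and that each process generation shrinks linear dimensions and supply voltage by the same factor $\kappa > 1$. The symbols are ours, chosen for illustration.

$$
P_{\text{dyn}} \propto C V^{2} f, \qquad C \to \frac{C}{\kappa},\quad V \to \frac{V}{\kappa},\quad f \to \kappa f \quad\Longrightarrow\quad P_{\text{dyn}} \to \frac{P_{\text{dyn}}}{\kappa^{2}}
$$

Since the area of each transistor also shrinks by $\kappa^{2}$, power per unit area stays constant even as the clock rises. Once supply voltage could no longer be lowered in step with feature size, that balance broke down and faster clocks meant hotter chips.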

None of the technologies being developed for exascale computing will resurrect Dennard scaling. Worse yet, Moore’s Law itself is expected to fade into oblivion sometime during the next decade. As a result, any surge in list turnover is likely to be short-lived and will probably be limited to the first and second waves of exascale machines. Once those innovations have been adopted, the party’s over. Until a technology or set of technologies can be developed that will put compute back on its exponential trajectory, we will have to accept the new normal of the post-Dennard era.
