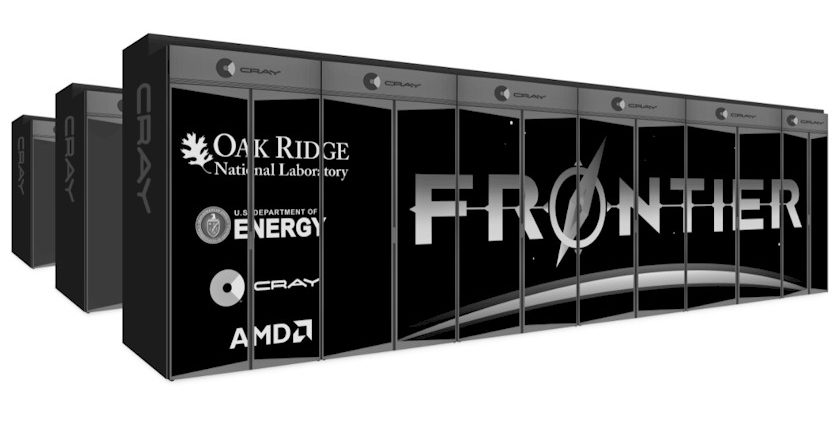

When Oak Ridge National Laboratory installs its 1.5 exaflops Frontier supercomputer in a couple of years, it’s likely to be the most powerful system in the US, if not the world. Cray was awarded the contract for the machine last month. As we reported, Frontier will be based on the company’s new “Shasta” architecture and will be powered by semi-custom CPUs and GPUs supplied by AMD. The Department of Energy is spending a cool $600 million for Frontier – $500 million for the system and $100 million for the associated non-recurring engineering (NRE) costs.

We learned this week that Cray will also be supplying Frontier’s storage system, in this case, more than 1 EB – better get used to the abbreviation for exabyte – worth of Lustre, using the supercomputer maker’s next-generation ClusterStor platform. More than likely, that will make it the most capacious HPC file system on the planet in 2021 when it’s scheduled to be deployed alongside Frontier. It will also be one of the fastest, shuffling data at up to 10 terabytes per second.

According to Cray chief executive officer Peter Ungaro, it will be “by far the biggest storage system we’ve ever sold.” Its cost is valued at $50 million, which will be wrapped into the $600 million contract.

The system will provide more than four times the capacity and offer more than four times the throughput of the 250 PB Spectrum Scale storage currently serving ORNL’s Summit supercomputer. The Frontier storage will be composed of two tiers: a flash tier for fast scratch storage and a hard disk tier that will supply the majority of the one-exabyte capacity. The whole set-up is designed to fit into 40 cabinets.
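As a rough sanity check on those figures, the arithmetic can be sketched in a few lines of Python. This is only a back-of-the-envelope illustration: it assumes decimal units (1 EB = 1,000 PB), treats "more than 1 EB" and "up to 10 terabytes per second" as round numbers, and assumes capacity and bandwidth are spread evenly across the 40 cabinets, which Cray has not said. The function and constant names are ours, not Cray's.

```python
# Back-of-the-envelope check of the storage figures quoted in the article.
# Assumptions (not from Cray): decimal units, round numbers, and an even
# spread of capacity and bandwidth across all cabinets.

FRONTIER_CAPACITY_PB = 1000.0    # "more than 1 EB", taken as 1,000 PB
FRONTIER_THROUGHPUT_TBS = 10.0   # "up to 10 terabytes per second"
SUMMIT_CAPACITY_PB = 250.0       # Summit's Spectrum Scale file system
CABINETS = 40                    # planned footprint of the Frontier storage


def ratio(new_value: float, old_value: float) -> float:
    """Return how many times larger new_value is than old_value."""
    return new_value / old_value


def per_cabinet(total: float, cabinets: int) -> float:
    """Return the average share of a total that each cabinet carries."""
    return total / cabinets


if __name__ == "__main__":
    print("Capacity vs. Summit:", ratio(FRONTIER_CAPACITY_PB, SUMMIT_CAPACITY_PB), "x")
    print("Average PB per cabinet:", per_cabinet(FRONTIER_CAPACITY_PB, CABINETS))
    print("Average TB/sec per cabinet:", per_cabinet(FRONTIER_THROUGHPUT_TBS, CABINETS))
```

On those assumptions, 1 EB works out to exactly four times Summit's 250 PB, so the "more than four times" claim rests on the capacity exceeding one exabyte. Each cabinet would hold about 25 PB and move roughly a quarter of a terabyte per second on average.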

From the looks of things, the new ClusterStor offering, codenamed “Kilimanjaro,” entails a lot more than a simple product upgrade. “Everything that we’ve been doing in Shasta, we’re doing the same thing in storage,” Ungaro told us. “It’s a complete new storage infrastructure.”

At this point, the company is not providing a lot of details about the architecture. However, as Cray chief technology officer Steve Scott told us last October, the design will enable the storage to be directly connected to the company’s Shasta Slingshot network. In this streamlined model, the LNET (Lustre network) router nodes and external storage network (InfiniBand, Ethernet, or whatever) are done away with. “We get rid of all that and pull the storage directly out of the high-speed network,” explained Scott.

Removing these middlemen promises to boost throughput and lower latency since the data no longer has to traverse multiple networks and hop through router nodes. And because the design can directly connect both flash and hard disk nodes, it becomes more straightforward for customers to tune their storage infrastructure in terms of IOPS and capacity. “You can buy just as much as you need of each type of component,” Scott said.

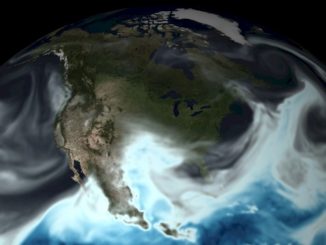

One of the obvious use cases for mixing flash and hard disks on supercomputers these days is when both machine learning and traditional HPC models are run in tandem on the same system. In the case of machine learning, storage tends to use a combination of random and sequential I/O, with data of varying sizes. That type of access favors a greater proportion of flash drives. By contrast, HPC simulations typically demand a lot of sequential I/O, which is more naturally suited to high-capacity spinning disks. Undoubtedly, a lot of workflows on Frontier will be integrating both types of applications.

Cray will continue to sell standalone ClusterStor systems as well, but the capability to integrate the storage and compute more intimately suggests this will be the solution of choice for customers who buy Shasta and ClusterStor as a package deal. There’s already some good evidence of that from the previously announced “Perlmutter” (NERSC-9) supercomputer, a Shasta machine that is slated for delivery to Berkeley Lab in 2020.

Perlmutter is advertised to be three times as fast as the current Cori (NERSC-8) supercomputer, a 28-petaflops (peak) Cray XC40. Cori also uses ClusterStor-based storage, but in the form of a 30 PB Sonexion Lustre 2000 system outfitted with HDDs and fronted by a DataWarp burst buffer. NERSC-9’s Kilimanjaro storage will also have a capacity of 30 PB, but will be built entirely from flash, obviating the need for a burst buffer. It’s expected to deliver data at up to 4 TB/sec, more than five-and-a-half times faster than what Cori’s Sonexion system can deliver.

We have a feeling that Cray probably had something like Kilimanjaro in mind when it purchased the ClusterStor business from Seagate back in 2017 and brought all the technology and Lustre expertise in-house. At that time, Slingshot was still under development, but it wouldn’t surprise us if the technology marriage had already been pre-arranged.

As a result of the impending acquisition of Cray, all of this will end up under HPE’s control. And given the differentiation inherent in products like Shasta and Kilimanjaro, it’s not hard to imagine that some of the underlying technology will eventually get infused into other parts of the HPE portfolio.

At least it’s not hard for Ungaro to imagine. He believes that bringing together HPE’s scale and customer base with Cray’s HPC technology could be a winning combination across HPE’s entire datacenter business. And as the line between data-centric enterprise and cloud workloads and compute-centric scientific workloads continues to blur, the demand for technologies like Slingshot and Kilimanjaro might grow substantially. “I think it could be pretty fun,” said Ungaro.
