There is a kind of dichotomy in the datacenter. The upstart hyperconverged storage makers will tell you that the server-storage half-bloods that they have created are inspired by the storage at Google or Facebook or Amazon Web Services, but this is not, strictly speaking, true. Hyperscalers and cloud builders are creating completely disaggregated compute and storage, linked by vast Clos networks with incredible amounts of bandwidth. But enterprises, who operate on a much more modest scale, are increasingly adopting hyperconverged storage – which mixes compute and storage on the same virtualized clusters.

One camp is splitting up servers and storage, and the other is mashing them up on the same nodes. Both camps agree on one thing: They do not want traditional storage area networks, which are far too expensive compared to server-based clustered storage, whether it is aggregated or disaggregated. They also agree that trapping storage inside of servers doesn’t make a lot of sense because you want to be able to scale up compute and storage independently of each other – but those peddling hyperconverged wares don’t bring this up a lot because in many cases they have to scale up compute and storage in a static lockstep. (We analyzed this dichotomy back in December.)

Having said that, many workloads within the enterprise do make sense to put onto hyperconverged platforms, particularly those that, over the past decade, have been moved to server virtualization hypervisors. Such workloads are very sticky indeed, which is why VMware has revenues running at an annualized rate of around $8 billion and over 500,000 customers in the datacenter. These virtualized workloads are a natural fit for hyperconverged infrastructure, which is why so many startups popped into existence peddling their own variants of HCI once Nutanix dropped out of stealth in the summer of 2011 and led the charge. IBM is making buddy-buddy with Nutanix and has ported the latter’s Enterprise Cloud Platform to its current Power8 and impending Power9 iron. Dell, by virtue of its acquisition of EMC, controls both VMware VSAN and EMC ScaleIO, two different server-storage hybrids. Hewlett Packard Enterprise acquired SimpliVity this time last year to get its own HCI stack (it already had one through its LeftHand Networks acquisition, but thought SimpliVity was better suited to a broader enterprise market). And Cisco Systems partnered with Springpath and then bought it to own its own HCI fate.

A decade ago, Cisco pioneered converged infrastructure: it pulled together virtual compute and virtual networking into a single Unified Computing System and entered the server market, setting off a wave of networking company acquisitions by incumbent server makers who wanted to own their own stacks as Cisco was going to. Cisco had strong partnerships with VMware for its virtualization layer, but VMware was a reluctant entrant to the virtual SAN market because its owner, EMC, wanted to sell real SANs to enterprise customers. Eventually, a portion of the market looking for cheaper, easier, and still scalable storage, particularly for virtual machines, started moving to Nutanix and other platforms, and VMware had no choice but to turn VSAN from an experimental toy into a real product. Once VMware bought Nicira for virtual networking (productized today as NSX), it was only a matter of time before Cisco came up with its own twist on both software-defined networking (the ACI extensions for its switches) and virtual SANs (in this case Springpath, now sold as a bundle of UCS hardware and Springpath software called HyperFlex). Cisco has been peddling HyperFlex for more than two years now, and its experience offers some insight into where hyperconverged storage is going to find a place in the datacenter, and where it will not.

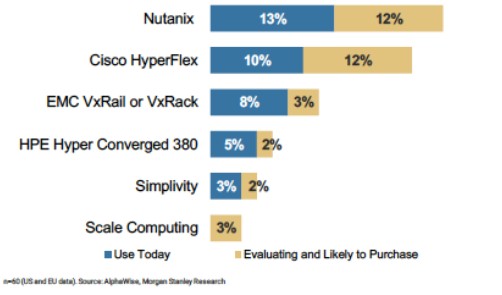

The UCS server business has kept growing over that time, rising from 50,000 customers back in March 2016, when we profiled the underlying HyperFlex technology, to 65,000 customers today. Cisco has grown its HyperFlex base from zero to 2,400 customers in that time, about a third of what VMware has, but the HyperFlex base is growing faster than the UCS base or the overall enterprise server base. The HCI market has basically come down to a three-horse race, Todd Brannon, director of product marketing for the UCS line at Cisco, tells The Next Platform, and market data compiled by Morgan Stanley for its annual CIO survey backs this contention up. This chart shows hyperconverged storage usage and plans:

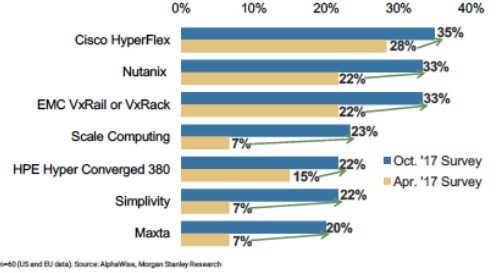

And here is another chart that shows what products were under evaluation in the surveys that Morgan Stanley did in April and October of last year:

The interesting bit is that, according to Brannon, about a third of those 2,400 HyperFlex customers have never bought Cisco servers before. That is good, and it lets the company ride the expanding addressable market for HCI, which is expected to rise from around $2 billion in 2017 to about $8 billion by 2021. But what is equally important is having a captive market to sell an HCI stack into, and Cisco has certainly built that up over the past nine years.
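The market projection and the customer split above can be sanity-checked with a little arithmetic. The sketch below assumes the $2 billion and $8 billion figures are exactly four years apart and applies the standard compound annual growth rate formula; the constant and function names are illustrative only.

```python
# Back-of-the-envelope checks on figures quoted in the article.
HCI_MARKET_2017 = 2.0e9   # about $2 billion in 2017
HCI_MARKET_2021 = 8.0e9   # about $8 billion projected for 2021
YEARS = 2021 - 2017


def cagr(start: float, end: float, periods: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / periods) - 1."""
    return (end / start) ** (1.0 / periods) - 1.0


growth = cagr(HCI_MARKET_2017, HCI_MARKET_2021, YEARS)

HYPERFLEX_CUSTOMERS = 2400
new_to_cisco = HYPERFLEX_CUSTOMERS / 3  # "about a third" had never bought Cisco servers

print(f"Implied annual growth: {growth:.1%}")                   # Implied annual growth: 41.4%
print(f"Customers new to Cisco servers: ~{new_to_cisco:.0f}")   # Customers new to Cisco servers: ~800
```

A quadrupling over four years works out to roughly 41 percent growth per year, and the one-third figure implies something like 800 HyperFlex buyers who were new to Cisco servers.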

One reason Cisco has been able to ramp HyperFlex quickly is that it has been scaling up the underlying Springpath software, which ran across only three nodes when the partnership started, jumped to 16 nodes with the 1.0 release, reached 32 nodes with the 2.0 release, and is being expanded to 64 nodes and, significantly, close to 1 PB of capacity in an all-flash configuration with the 3.0 release that just came out.

For those who need more capacity, Cisco supports hybrid flash and disk setups on HyperFlex, but depending on the quarter, Brannon says that somewhere between 40 percent and 50 percent of HyperFlex shipments are for all-flash setups. As we expected, many enterprises have modest capacity requirements but heavy performance requirements, and they are willing to spend a premium on flash in HCI stacks rather than on dedicated SAN hardware, which is pricey by comparison.

Interestingly, Springpath allows compute and storage nodes to scale independently in the HCI stack. It does this with a bit of software called the IOVisor, a driver that allows compute (meaning virtual machines for running applications) to be added independently of storage capacity in either the caching or persistence tiers. You just load IOVisor into a virtual machine and it can read the data spread across the HyperFlex cluster. With the 3.0 release, a cluster can have up to 32 of these IOVisor nodes plus another 32 HX nodes that provide storage capacity. The software also allows storage to be grouped into availability zones, with specific IOVisors and HX nodes in each zone. It also now supports synchronous replication across geographically distributed clusters, stretching the Springpath file system across the nodes on both ends of the wire (up to 100 kilometers apart, the latency limit for synchronous replication) to allow for disaster recovery.

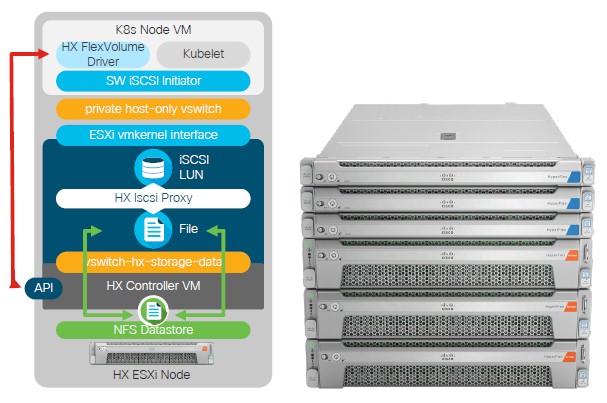
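The 3.0 limits described above, together with the 100 kilometer ceiling on synchronous replication, can be expressed as a small planning check. This is a hypothetical sketch, not a Cisco tool: `ClusterPlan`, `check_plan`, and `round_trip_ms` are invented names, the limits are treated as whole-cluster totals (the article does not say how they split across sites), and the fiber speed of roughly 200,000 km/s is a standard approximation rather than a HyperFlex figure.

```python
from dataclasses import dataclass

# Limits as quoted in the article for the HyperFlex 3.0 release.
MAX_IOVISOR_NODES = 32   # compute-only nodes that run the IOVisor driver
MAX_HX_NODES = 32        # HX nodes that contribute storage capacity
MAX_STRETCH_KM = 100     # distance limit quoted for synchronous replication

# Assumption: light in optical fiber covers roughly 200,000 km/s,
# i.e. about 5 microseconds per kilometer in each direction.
FIBER_US_PER_KM = 5.0


@dataclass
class ClusterPlan:
    """Hypothetical description of a proposed cluster (not a Cisco API)."""
    iovisor_nodes: int
    hx_nodes: int
    site_distance_km: float = 0.0  # 0 for a single-site cluster


def round_trip_ms(distance_km: float) -> float:
    """Fiber propagation delay, in ms, for a write to reach the remote site and be acknowledged.

    Ignores switching, serialization, and storage latency, so real figures are higher.
    """
    return 2 * distance_km * FIBER_US_PER_KM / 1000.0


def check_plan(plan: ClusterPlan) -> list[str]:
    """Return the quoted limits a plan violates; an empty list means it fits."""
    problems = []
    if plan.iovisor_nodes > MAX_IOVISOR_NODES:
        problems.append(f"IOVisor nodes {plan.iovisor_nodes} > {MAX_IOVISOR_NODES}")
    if plan.hx_nodes > MAX_HX_NODES:
        problems.append(f"HX nodes {plan.hx_nodes} > {MAX_HX_NODES}")
    if plan.site_distance_km > MAX_STRETCH_KM:
        problems.append(f"sites {plan.site_distance_km} km apart > {MAX_STRETCH_KM} km")
    return problems


plan = ClusterPlan(iovisor_nodes=16, hx_nodes=24, site_distance_km=100)
print(check_plan(plan))                      # []
print(round_trip_ms(plan.site_distance_km))  # 1.0
```

At the full 100 kilometers, the fiber alone adds about a millisecond to every synchronous write, because each write must be acknowledged by the far site before it completes. That is why synchronous replication is normally confined to metro distances.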

Containers are all the rage for certain kinds of workloads these days, and Cisco has been working with Google to create persistent volumes for the Kubernetes container orchestrator. The implementation that Cisco has cooked up for HyperFlex based on this joint work is called the FlexVolume driver, and here is how it all fits together:

For the moment, given the heavy use of the VMware ESXi hypervisor by enterprises generally and by Cisco customers in particular, this Kubernetes container stack is implemented atop the VMware stack. But over time, Brannon reckons that a large number of UCS customers will look for a bare metal implementation of Kubernetes, particularly for new workloads. It will all come down to how integral the VMware management and software distribution tools are to each enterprise. The savings in not having to pay for VMware may be more than eaten up by the hassle of having two different software stacks atop HCI.
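FlexVolume, the interface behind the driver mentioned above, was the out-of-tree volume plugin mechanism Kubernetes offered at the time. The kubelet executes a vendor-supplied driver program with a command name such as `init`, `mount`, or `unmount`, and the driver answers on stdout with a JSON object whose `status` is `Success`, `Failure`, or `Not supported`. The article does not show Cisco's driver, so the sketch below only illustrates that calling convention; the stubbed mount logic and the `volumeName` option key are assumptions, not HyperFlex behavior.

```python
#!/usr/bin/env python3
"""Hypothetical skeleton of a Kubernetes FlexVolume driver.

The kubelet runs the driver executable as `<driver> <command> [args...]`
and parses a JSON status object from stdout. Storage calls are stubbed out.
"""
import json
import sys


def do_init(args):
    # "attach": False tells the kubelet to skip attach/detach and only call mount/unmount.
    return {"status": "Success", "capabilities": {"attach": False}}


def do_mount(args):
    if len(args) < 2:
        return {"status": "Failure", "message": "usage: mount <mount-dir> <json-options>"}
    mount_dir = args[0]
    try:
        options = json.loads(args[1])
    except ValueError as err:
        return {"status": "Failure", "message": f"bad options: {err}"}
    if not isinstance(options, dict):
        return {"status": "Failure", "message": "options must be a JSON object"}
    # Hypothetical: a real driver would ask the storage backend for the volume
    # named in the options and mount it at mount_dir. Nothing is mounted here.
    volume = options.get("volumeName", "<unnamed>")  # assumed option key
    return {"status": "Success", "message": f"would mount {volume} at {mount_dir}"}


def do_unmount(args):
    if len(args) < 1:
        return {"status": "Failure", "message": "usage: unmount <mount-dir>"}
    return {"status": "Success", "message": f"would unmount {args[0]}"}


HANDLERS = {"init": do_init, "mount": do_mount, "unmount": do_unmount}


def dispatch(argv):
    """Route a FlexVolume command to its handler and return the status dict."""
    if not argv:
        return {"status": "Failure", "message": "no command given"}
    handler = HANDLERS.get(argv[0])
    if handler is None:
        # Unimplemented calls (attach, detach, and so on) report "Not supported".
        return {"status": "Not supported"}
    return handler(argv[1:])


if __name__ == "__main__":
    print(json.dumps(dispatch(sys.argv[1:])))
```

Keeping `dispatch` separate from the `__main__` block means the command routing can be exercised without installing the executable into the kubelet plugin directory.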

The original Springpath stack ran atop the ESXi hypervisor from VMware, and some experimental customers were looking at the KVM hypervisor controlled by Red Hat and also championed by Canonical, IBM, and others. To date, Cisco has not announced formal support for KVM, but is evaluating it. With the 3.0 release, however, HyperFlex can be deployed on Windows Server running atop UCS iron, linking into Microsoft’s System Center Virtual Machine Manager and the PowerShell scripting engine and looking just like any other storage as far as the hypervisor is concerned. The Springpath stack implements the SMB3 file server protocol and retains the fully distributed data model (striping across all nodes) that is a key aspect of the Springpath file system. At the moment, only Windows Server 2016 and its companion Hyper-V are supported, and earlier Windows Server editions probably will not be added.
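The phrase "striping across all nodes" is the heart of a fully distributed data model. The article does not describe how Springpath actually places data, so what follows is a generic, hypothetical sketch of striping with replication: a file is cut into fixed-size units that are spread round-robin over every node, with each extra copy on the next node in the ring. The 1 MiB stripe unit, the default of two copies, the CRC32-based starting offset, and the `place_stripes` name are all assumptions.

```python
import zlib

STRIPE_UNIT = 1 << 20  # assumed 1 MiB stripe unit, purely illustrative


def place_stripes(file_id: str, size_bytes: int, nodes: list[str], replicas: int = 2) -> list[list[str]]:
    """Assign each stripe unit of a file to `replicas` distinct nodes.

    Units go round-robin across every node, starting from a per-file offset,
    and each extra copy lands on the next node(s) in the ring.
    """
    if not 1 <= replicas <= len(nodes):
        raise ValueError("replicas must be between 1 and the number of nodes")
    start = zlib.crc32(file_id.encode()) % len(nodes)  # deterministic per-file offset
    n_units = -(-size_bytes // STRIPE_UNIT)            # ceiling division
    layout = []
    for i in range(n_units):
        primary = (start + i) % len(nodes)
        layout.append([nodes[(primary + r) % len(nodes)] for r in range(replicas)])
    return layout


nodes = [f"hx{n}" for n in range(4)]
layout = place_stripes("vm-disk-01.vhdx", 6 * STRIPE_UNIT, nodes)
for unit, copies in enumerate(layout):
    print(unit, copies)
```

Because every node ends up holding a slice of any reasonably large file, reads and writes fan out across the whole cluster instead of queuing on a single box, which is the general benefit of a fully striped layout.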

All of these new features help broaden the HyperFlex base, and they reflect the same forces and opportunities that are affecting all sectors of the systems and storage business. Unlike VMware, Cisco is free to support any hypervisor it wants, and it is also capable of developing its own Docker and Kubernetes stack for those who want bare metal container environments.

So which datacenter workloads are going to be deployed on hyperconverged infrastructure? Here is how Brannon sees it: “There are definitely workloads that are scale out and bare metal inherently. That includes Hadoop and other data analytics workloads, and also object storage. At the very upper end there are in-memory databases such as Spark and SAP HANA, and of course high performance computing, which is also taken out of the equation. Our belief is that everything else is a potential workload you can run on HyperFlex. HCI will be on the majority of our installed UCS base, and it will be sooner rather than later. Whenever there is a datacenter refresh, HCI will be part of the deal.”

As a case in point, Brannon gives some hints about an unnamed oil and gas giant based in Houston that is standardizing on HyperFlex for its corporate datacenter. Interestingly, it is replacing Hewlett Packard Enterprise servers running a mish-mash of software; HPE, whose server business also hails from Houston, has been the incumbent server supplier for the past dozen years. In this case, the deal calls for over 800 servers to be installed over the life of the contract with Cisco to build the company’s private cloud. This is not a small installation, at least by HCI standards if not by public cloud or hyperscale norms.
