Five years ago, Hewlett Packard Enterprise let loose the Peregrine supercomputer at the National Renewable Energy Laboratory, a marriage of performance and power efficiency that is based on HPE’s dense Apollo 8000 system and includes warm liquid cooling. The 2,592-node cluster, which was updated in 2015, includes a combination of Intel “Sandy Bridge,” “Ivy Bridge” and “Haswell” Xeon E5 processors and Mellanox InfiniBand interconnect, and it delivers a peak performance of 2.26 petaflops in its current configuration. It’s a powerful system that, like most supercomputers these days, runs Linux for a mix of compute-intensive and parallel workloads.

And now Peregrine is going to be replaced. Next year, HPE will bring online Eagle, a system that will be 3.5 times more powerful than Peregrine and the latest example of the vendor’s push to produce increasingly energy-efficient HPC systems. It will be able to run a broad array of highly detailed simulations of complex processes in such areas as wind energy, advanced analytics and data science, and vehicle technologies. It also will be based on HPE’s SGI 8600 supercomputer, inherited when the company bought SGI two years ago for $275 million, and is part of an ongoing effort at HPE to integrate features found in the SGI 8600 and Apollo 8000 into new platforms.

“When we acquired the 8600, we put a lot of the best of both sides and we made sure the best-in-breed technology came from both legacy SGI and legacy HPE,” Nic Dube, chief strategist for HPC at HPE, tells The Next Platform. “Now we’re working on the follow-on to the 8600 that’s going to be a joint system. The teams have been integrated for a few years, so now we’re working on that for later on.”

Eagle will come with newer processors and a new network topology, but in many ways it will be similar to Peregrine, Dube says. It will use Intel Xeon chips, GPU accelerators from Nvidia, and a 100 Gb/sec EDR InfiniBand fabric from Mellanox Technologies. In addition, Eagle will run Linux, either the Red Hat or the CentOS distribution. HPE will install the system at NREL’s Energy Systems Integration Facility datacenter this year and turn it on in January 2019.

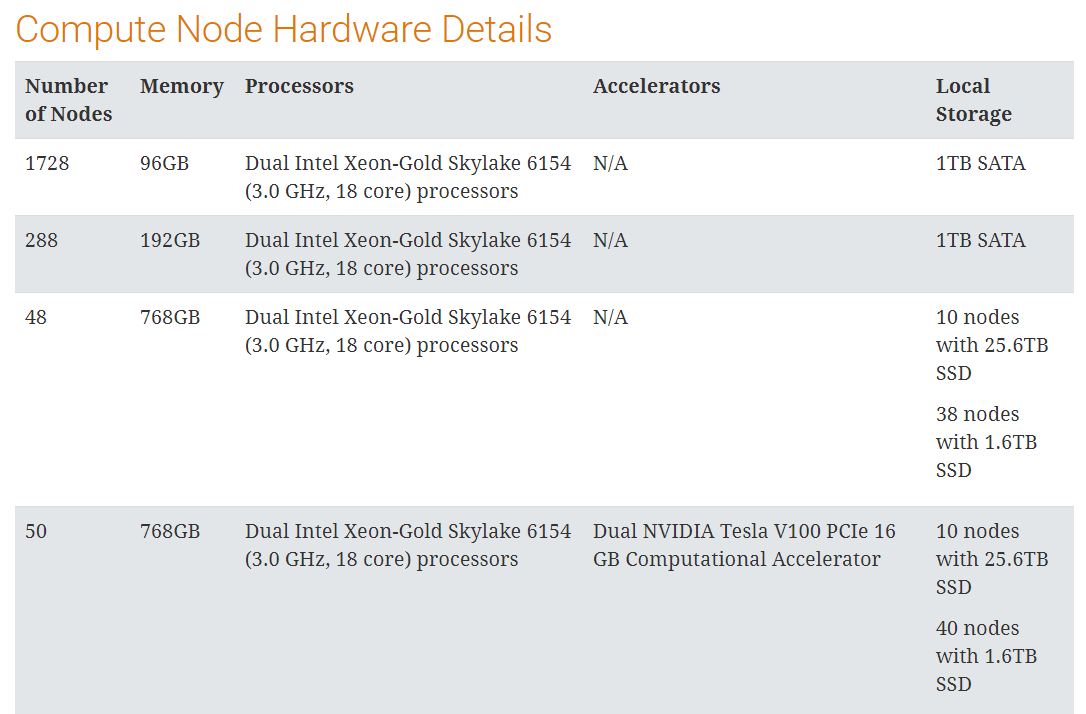

The system will employ “Skylake” Xeon SP processors and will include 76,104 cores across 2,114 dual-socket compute nodes. The 18-core Xeon Gold 6154 chips will run at 3.0 GHz, with node memory ranging from 96 GB to 768 GB, and the supercomputer will deliver a peak performance of 8 petaflops at double precision. It also will include Nvidia Tesla V100 GPU accelerators. There also will be NFS file systems for home directories and application software and a 14 PB high-speed Lustre file system for parallel I/O.
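As a quick sanity check on those figures, the short Python sketch below divides the quoted core count by the 36 cores in a dual-socket node of 18-core chips, which works out to 2,114 such nodes, and compares the two peak ratings. The variable names are ours, chosen for illustration, and the calculation uses only the numbers quoted in this article.

```python
# Figures quoted in the article; the names are illustrative only.
TOTAL_CORES = 76_104
CORES_PER_SOCKET = 18
SOCKETS_PER_NODE = 2
EAGLE_PEAK_PFLOPS = 8.0
PEREGRINE_PEAK_PFLOPS = 2.26

# Cores in one dual-socket compute node.
cores_per_node = CORES_PER_SOCKET * SOCKETS_PER_NODE

# Number of such nodes implied by the total core count.
implied_nodes, leftover_cores = divmod(TOTAL_CORES, cores_per_node)

# Ratio of peak double-precision ratings, Eagle over Peregrine.
speedup = EAGLE_PEAK_PFLOPS / PEREGRINE_PEAK_PFLOPS

print(f"cores per node: {cores_per_node}")
print(f"implied nodes:  {implied_nodes} (leftover cores: {leftover_cores})")
print(f"peak ratio:     {speedup:.2f}x")
```

The ratio of peak ratings comes out a little above 3.5, in line with the claim that Eagle will be roughly 3.5 times more powerful than Peregrine.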

“They’re not that different,” Dube says of Peregrine and Eagle. “They’re both CPU-based systems with InfiniBand, so from an IT side, they both provide a very widely available platform for those users. They don’t have to import it, they don’t have to do anything complicated, so there will be continuity between going from Peregrine to Eagle, and even though the technology behind Eagle – the 8600 – came from SGI, it will be able to deliver a system that will feel from an IT perspective – from a user perspective – a lot like Peregrine. Doing that while being able to deliver on NREL requirements in terms of hot water output and to be able to do heat reuse in the winter is a key story. It’s really about continuity rather than doing something trend-breaking or doing something completely different.”

That said, there are some key differences, including the fact that Eagle will have an eight-dimensional Hypercube network topology.

“Eagle has the premium InfiniBand switches, which has basically twice the number of switches in every switch tray and it’s wired as an enhanced hypercube config, while Peregrine was wired as a fat tree,” he says. “It basically doesn’t require core switches and it’s wired rack to rack. By having all of the switches integrated in the infrastructure, we’re able to liquid cool them. From a power efficiency and energy efficiency perspective, we don’t have to put that outside and cool with either datacenter cooling or with other equipment. It’s also a way to somewhat optimize the ratio of copper cabling vs. optical cabling, so that allows us to reduce the cost of some of the shorter links better than when we look at other topologies. From an application performance perspective, the enhanced Hypercube does have some interesting characteristics as well.”
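For readers unfamiliar with the topology, here is a minimal Python sketch of a textbook binary hypercube, not of HPE's actual wiring. It assumes each vertex (think of it as a switch) carries an 8-bit label and is linked to every vertex whose label differs in exactly one bit. The "enhanced" hypercube Dube describes adds links beyond this, which the sketch does not model, and the function names are ours.

```python
def neighbors(node: int, dims: int) -> list[int]:
    """Vertices directly linked to `node`: flip each of the `dims` label bits."""
    return [node ^ (1 << bit) for bit in range(dims)]


def hop_distance(a: int, b: int) -> int:
    """Minimum hops between two vertices: the number of differing label bits."""
    return bin(a ^ b).count("1")


DIMS = 8  # eight-dimensional hypercube, as described in the article

vertex_count = 1 << DIMS  # 2**8 vertices
diameter = max(hop_distance(0, v) for v in range(vertex_count))

print(f"vertices: {vertex_count}")
print(f"links per vertex: {len(neighbors(0, DIMS))}")
print(f"worst-case hops: {diameter}")
```

In this idealized model every vertex links directly to eight others and no separate tier of core switches is needed, which fits Dube's point that the hypercube is wired rack to rack.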

The power efficiency is important. HPE and NREL have been collaborating since 2013 on developing highly efficient HPC systems that not only generate less heat and are less costly to run, but whose waste heat can also be reused in offices and other spaces. The SGI 8600’s warm liquid cooling system can capture 97 percent of the heat to be used to warm a 10,000-square-foot building, Dube says.

HPE and NREL, which is run by the Department of Energy, are addressing the efficiency issue in other ways outside of the systems themselves. In a blog post earlier this year, Bill Mannel, vice president and general manager of HPC and AI segment solutions in HPE’s Data Center Infrastructure Group, wrote about a joint effort between the two organizations to create a hydrogen-powered datacenter. Mannel noted that the datacenter power market will reach $29 billion by 2020 – up from $15 billion in 2016 – and that by then datacenters will consume 140 billion kilowatt hours of electricity, costing companies in the United States about $13 billion.

“Energy efficiency of both the data center facility and the equipment itself are critical to HPC,” Mannel wrote. “Although a majority of the energy consumed is funneled into complete compute-heavy and data-intensive tasks, computing at peak performance creates tremendous amounts of heat and can produce excessive carbon emissions and harmful waste. … Energy efficiency will become increasingly vital to success, particularly as the industry strives to develop a new generation of HPC systems. As we race to deploy an exascale-capable machine by 2021, there is a strong urge to pack as much compute into each centimeter of silicon as possible. However, this ‘performance at any cost’ mentality will only serve to produce extremely inefficient, wasteful, and unsustainable solutions.”

At The Next Platform, we have written about efforts by a range of vendors looking to drive down power consumption and costs, including companies like IBM and Lenovo that are pushing liquid cooling options and facilities such as Sandia National Laboratories that are interested in bringing them into their environments.

HPE’s Dube reiterated the work the vendor is doing to more closely align the Apollo 8000 and SGI 8600 systems, saying that “we’re now looking to integrate some of the technologies that were developed on Peregrine on the Apollo 8000 and, independently, a lot of the technologies developed by SGI on the 8600, and putting that together on our next platform. We’re really taking the best of both technologies and building that into our platform.”

He declined to give a timeline for the new platform, but said HPE also is doing a lot of work as part of its research into exascale computing and next-generation infrastructure, which will need to be highly power-efficient.

“We’re researching in ways to build systems that will be completely fanless – completely liquid-cooled and without any fans,” Dube says. “It’s very interesting to have the background from both companies coming into this. The time to market for that will really be a matter of when we can figure out how to achieve some of those technical challenges. But it’s not just technical challenges, because we know how to do it today, but it could be very expensive and the cost would make the platform prohibitive. A lot of the work we’re doing is not just about how to remove the fans completely from the systems, but how to go about it so we can have a cost structure that can compete with air cooling. That’s what will truly democratize liquid cooling, when we get to a place that not only do we not require any fans because we’re not moving air any more, but that we can get to a cost structure that can compete with air-cooled systems.”
