Mellanox got its start as one of the several suppliers of ASICs for the low latency InfiniBand protocol that was originally conceived as a kind of universal fabric to connect all devices in the datacenter. That did not happen, but Mellanox saw the potential of this technology and built a very respectable business aimed at traditional HPC simulation and modeling and expanded that into parallel databases and storage clusters.

The company also made a few forays into the mainstream Ethernet switching market, first with its hybrid SwitchX Ethernet-InfiniBand ASICs and of course with its ConnectX line of hybrid server adapters, which have supported both Ethernet and InfiniBand from the get-go. The converged SwitchX line allowed companies to run both protocols on one switch, which was great, but it sacrificed some of the inherent low latency of InfiniBand in exchange, which some HPC customers did not want. So Mellanox forked the switch ASIC lines, creating what has been named the Spectrum line for Ethernet and the Quantum line for InfiniBand while at the same time keeping the converged ConnectX – the X signifies a variable, just like in algebra – adapter approach that has served it so well.

This Ethernet business has been growing fast on the server adapter side for a decade, with Mellanox commanding a dominant 85 percent of the share of adapters during the 40 Gb/sec Ethernet generation, according to Crehan Research, and already capturing a 65 percent share with the new generation of ConnectX-5 adapters, which are based on 25 Gb/sec signaling, were launched in June 2016, and support 25 Gb/sec, 50 Gb/sec, and 100 Gb/sec links to servers. The ConnectX-6 adapters, which were previewed in the fall of 2016, double up that signaling to 50 Gb/sec and support 200 Gb/sec links between servers and switches, matching the oomph of 200 Gb/sec Quantum HDR InfiniBand and future Spectrum Ethernet switches coming from Mellanox, which will follow the 100 Gb/sec Spectrum chips from June 2015 to market very soon.

Mellanox has benefitted against arch rivals Intel and Broadcom (with a certain amount of competitive pressure coming from Cavium and Marvell, now united) by having this dual protocol approach (which they do not) and by selling customers both finished adapter cards as well as raw adapter chips and now “BlueField” manycore ARM coprocessors fused with those ConnectX chips so hyperscalers and cloud builders can create their own network interface cards. An increasing number of these companies deploy what are called SmartNICs, which do a lot of network processing, run virtual switches, or even the NVM-Express over fabrics protocol to offload even more work from servers than the plain vanilla ConnectX chips do. This is done to free up compute on the server, which can be burdened substantially with networking tasks, as we have discussed before.

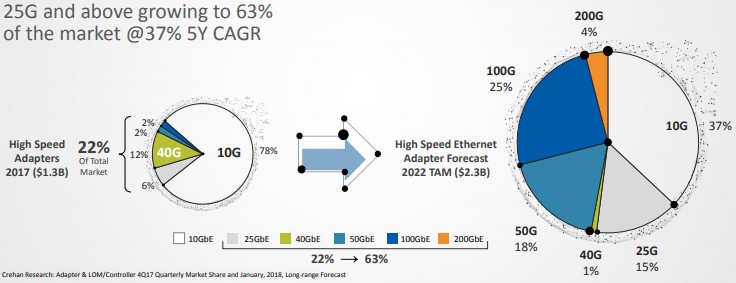

Given this, the server adapter market is booming along with the server market itself, and companies are moving on up the bandwidth ladder:

“This market is expected to grow at a 50 percent compound annual growth rate over the coming years,” Eyal Waldman, chief executive officer at Mellanox, said on a recent call with Wall Street analysts going over its second quarter financial results. “We think we are pretty much in the very early stages of this transition. These transitions really take about five years, from our previous experience. So we are definitely not even touching the mainstream, we are just serving the early adopters that are moving to 25G. This is, again, like a three year or seven transition that we are just starting to see the beginning, and we are riding this wave with superior technology.”

Everyone knows that Mellanox sells a lot of complete InfiniBand systems – adapters, cables, and switches – to HPC centers, to a bunch of clustered storage and database providers, and to a handful of hyperscalers and cloud builders that are doing HPC-style work. All of the InfiniBand systems on the Top 500 ranking of supercomputers in the June 2018 list and all but one of the 76 Ethernet systems use Mellanox server adapters; obviously, machines using IBM BlueGene, Cray “Gemini” XT and “Aries” XC, Intel Omni-Path, and Hewlett Packard Enterprise NUMALink interconnects have their own server interfaces.

The perception, however, is that the Ethernet business at Mellanox is mostly adapters. This is not true today, even if it was largely true in the early days of its Ethernet switching business. Mellanox has sold a slew of Spectrum switches to one of the Super 8 big hyperscalers and cloud builders – we think it is Microsoft, but no one has confirmed that, nor is anyone likely to – and Waldman confirmed on the Wall Street call that the company is working to close a big deal with another of the Super 8.

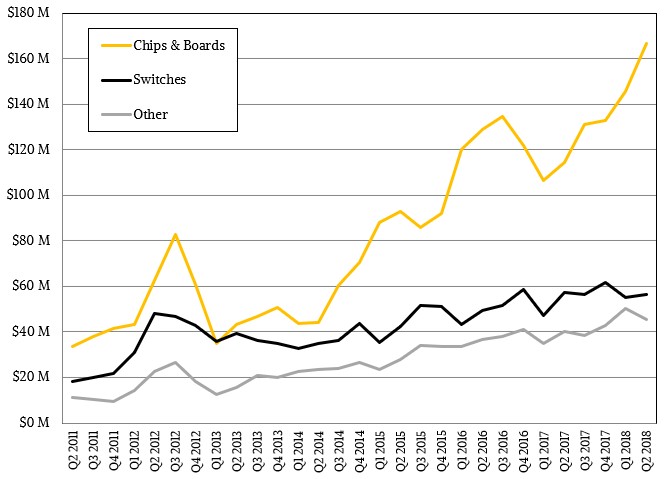

In fact, in the quarter ended in June, the Mellanox Ethernet switching business grew by 114 percent – a factor of 2.14X growth – year over year, and that just goes to show you what one of the Super 8 can do for your business. Mellanox did not break out Ethernet switch revenues distinct from InfiniBand switch revenues, but we do know that overall switch revenues were down 1.5 percent to $56.4 million. Sales of raw chips and finished boards rose by 45.4 percent to $166.5 million. Other revenues (mostly services of around $8.9 million, with cabling and software making up the remaining $36.7 million) accounted for a total of $45.6 million in the quarter, up 13.3 percent.
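For readers who want to check the arithmetic, here is a minimal Python sketch that adds up the three revenue categories quoted above and converts a percentage growth rate into a multiplier. The dictionary keys and the `growth_factor` helper are our own illustrative names, not anything Mellanox publishes, and the dollar figures are simply the ones cited in this story, in millions.

```python
# Revenue by category, in millions of US dollars, as quoted in the text.
segments = {
    "switches": 56.4,
    "chips_and_boards": 166.5,
    "other": 45.6,  # services (8.9) plus cabling and software (36.7)
}


def growth_factor(percent_growth: float) -> float:
    """Convert percentage growth into a multiplier, e.g. 114 percent -> 2.14X."""
    return 1.0 + percent_growth / 100.0


# Round to one decimal place to avoid floating point noise in the sum.
total = round(sum(segments.values()), 1)

# Share of total revenue contributed by each category, in percent.
shares = {name: round(100.0 * value / total, 1) for name, value in segments.items()}

print(f"total revenue: ${total} million")
print(f"114 percent growth is a factor of {growth_factor(114):.2f}X")
for name, share in shares.items():
    print(f"{name}: {share} percent of revenue")
```

The three categories sum to the $268.5 million that Mellanox reported for the quarter, with chips and boards accounting for a bit more than three-fifths of the total.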

A lot of hyperscalers, cloud builders, and even some smaller telco service providers buy chips and have OEMs build their switching or adapter gear for them, so Mellanox sells a lot of raw parts. It is very likely that for the foreseeable future sales of chips and boards will far outstrip sales of finished switches. (To be fair, a ConnectX adapter is a board, but it is largely a finished product. Mellanox should have a raw chips category, a finished board category, a finished switch category, and an other category to do this right.)

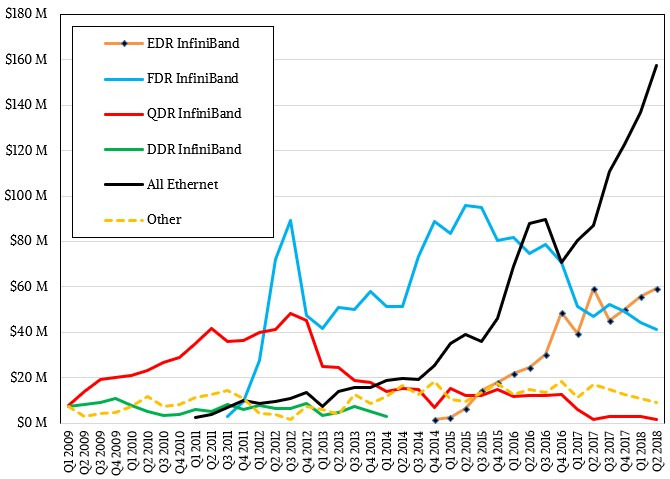

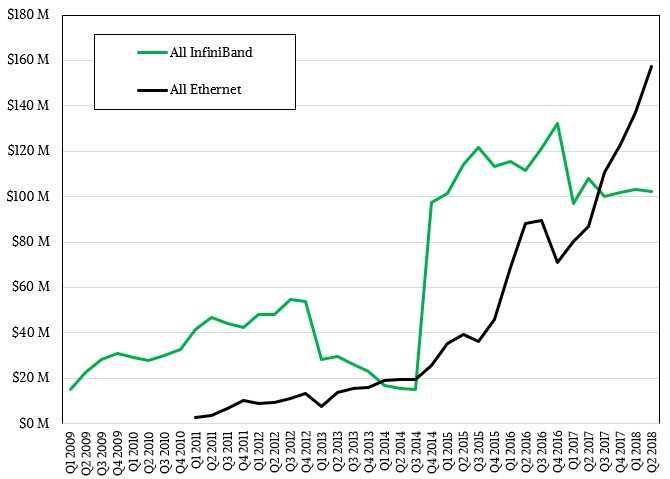

If you pick apart the numbers that Mellanox provided for the second quarter, 100 Gb/sec EDR InfiniBand sales were off two-tenths of a point to $59.3 million; this includes switches, adapters for InfiniBand systems, and cables. 56 Gb/sec FDR InfiniBand sales, thanks in large part to the database and storage appliances that have been certified to use it, continue to come in, but sales were off by 12.4 percent to $41.2 million. Sales of the slower 40 Gb/sec QDR InfiniBand products are still limping along, but were off 2.6 percent to $1.6 million. Add these all up, and InfiniBand product sales totaled $102.1 million, and the chart below makes obvious the rolling nature of the InfiniBand upgrade cycle:

What you cannot readily see from that chart is that InfiniBand sales have been fairly steady in the past five quarters. So, we made a new chart that plots all InfiniBand revenues against all Ethernet revenues, and now you can see it:

In the second quarter, Ethernet sales overall were up 81 percent to $157.5 million, and were essentially half again as large as InfiniBand revenues. It is hard to say what the balance is for raw chips and finished switches regarding Ethernet and InfiniBand, but they are probably neck and neck at this point and Ethernet is by far the higher volume on adapter cards or raw adapter chips.
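The product line numbers can be rolled up the same way. The sketch below, again in Python and again using our own made-up variable names, sums the three InfiniBand generations, compares the result to Ethernet, and then checks whether InfiniBand plus Ethernet plus services lands on the company total. That last decomposition is our assumption based on how the quoted figures add up, not a breakdown Mellanox provided.

```python
# InfiniBand product sales by generation, in millions of US dollars (from the text).
infiniband = {
    "EDR_100G": 59.3,
    "FDR_56G": 41.2,
    "QDR_40G": 1.6,
}
ethernet_total = 157.5  # all Ethernet products, from the text
services = 8.9          # services revenue, from the text

# Round to one decimal place to avoid floating point noise in the sums.
infiniband_total = round(sum(infiniband.values()), 1)

# How much larger Ethernet is than InfiniBand.
ethernet_to_infiniband = ethernet_total / infiniband_total

# Assumption: protocol revenues plus services make up the whole quarter.
implied_company_total = round(infiniband_total + ethernet_total + services, 1)

print(f"InfiniBand total: ${infiniband_total} million")
print(f"Ethernet is {ethernet_to_infiniband:.2f}X InfiniBand")
print(f"implied company total: ${implied_company_total} million")
```

Ethernet works out to a little over 1.5X InfiniBand, which is what "half again as large" means, and under the stated assumption the pieces add up to the $268.5 million reported for the quarter.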

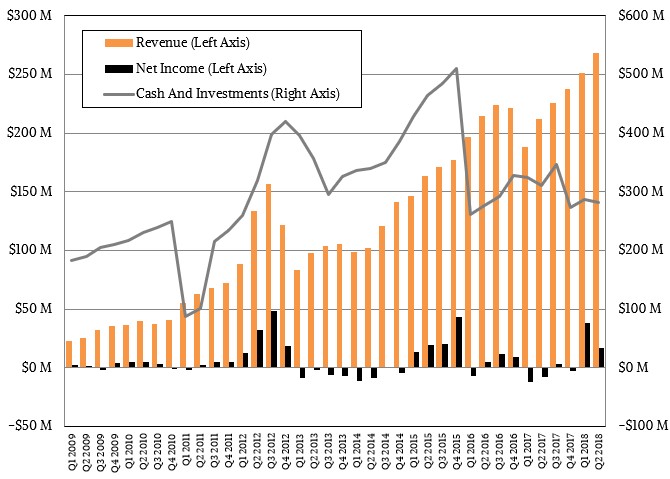

Add it all up, and here is the trendline for Mellanox overall:

For the full quarter, Mellanox posted sales of $268.5 million, up 26.7 percent, and net income of $16.5 million, which is a lot better than the $8 million loss it posted in the year ago period. The company has $283 million in the bank and expects revenues to rise further in the third quarter, to between $270 million and $280 million, and is projecting that for the full year it will bring in $1.065 billion to $1.085 billion in sales. That implies revenues of somewhere between $307.9 million and $327.9 million in the fourth quarter, which will be its best quarter in company history. And not too shabby considering that is over 10X growth in a decade. In the third quarter, with two different chip tapeouts being wrapped up, Mellanox will incur somewhere between $8 million and $9 million in incremental costs, according to Waldman. It is unclear what, if any, impact this will have on net income in the period.

Looking ahead, Waldman says that InfiniBand revenues will grow in the single digits for the full year, driven in part by HPC centers adopting the impending 200 Gb/sec Quantum products as well as myriad companies using InfiniBand in their machine learning clusters, which are also latency sensitive just like HPC simulation and modeling workloads. As HPC and hyperscale use expands in 2019, Waldman said there is potential for even more growth for InfiniBand next year. Whether or not InfiniBand can ever pull even with Ethernet again remains to be seen. It seems unlikely. But, either way, Mellanox will be happy.
