It’d be difficult to downplay the impact Amazon Web Services has had on the computing industry over the past decade. Since launching in 2006, Amazon’s cloud computing division has set the pace in the public cloud market, rapidly expanding its capabilities from its first service, Simple Storage Service (S3), to thousands of services that touch on everything from compute instances, databases and storage to application development and emerging technologies like machine learning and data analytics.

The company has become dominant by offering organizations of all sizes a simple way to access technology without incurring the expense of buying the equipment or the hassle of integrating the various hardware and software components themselves. Instead, businesses need only tap into the massive datacenter environment that AWS has built, choose the technologies they want to run and pay only for what they use. There are countless pieces that can be accessed and easily integrated; they are all connected and available à la carte.

In the process, AWS has grown a business that only a handful of other tech companies – in particular Microsoft with Azure, Google, Baidu and Alibaba – can rival, and startups and major enterprises alike are leveraging AWS offerings. The company also continues to press its advantage: since 2012, AWS has rolled out 3,959 new features and services, including a broad array announced this week at its AWS re:Invent show in Las Vegas. The new services covered a lot of ground: databases, compute and storage, as well as machine learning, containers, data analytics, software development, security, virtualization and video.

“Our goal has always been to build a whole collection of very nimble and fast tools, and you can decide exactly how to use them,” Werner Vogels, vice president and CTO at Amazon, said during his keynote address at the conference. “We are delivering tools that allow you to develop the way you want to develop. Not the way you would develop two or three years in the past but how you want to develop now. … We are delivering the tools now for the systems you want to be running in 2020.”

With that in mind, Vogels spent part of his lengthy keynote focusing on the drivers that will shape how technology and infrastructure evolve in the coming years. Among the top disrupters will be new, more natural interfaces, he said. Historically, interfacing with computers has been dictated by the capabilities of the systems, which has meant keyboards, mice and screens. Users have become good at manipulating the machines – they know what keywords to type in to get back the results they’re looking for.

However, in recent years, the growth in machine learning, artificial intelligence and analytics has driven advances in more human-centric interactions with computers, particularly voice, through technologies such as speech recognition and natural language processing. Apple’s Siri, Microsoft’s Cortana, Google Assistant and Amazon’s Alexa have helped users get comfortable using natural language to interact with their mobile devices and home digital assistants, and that comfort is beginning to spread into the business world and will shape how vendors and developers build their back-end systems, Vogels said.

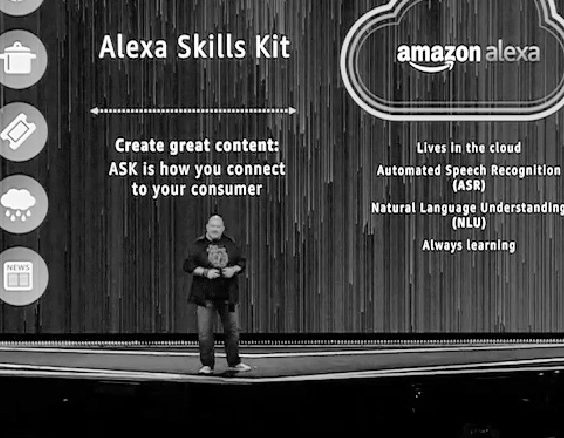

AWS offers two Alexa-based services: Alexa Voice Service, which enables businesses to integrate the voice technology into their products, and Alexa Skills Kit, which developers use to build voice applications, or “skills,” for Alexa.

“The interfaces to our digital systems of the future are no longer going to be machine-driven,” he said. “They’re going to be human-centric. We can build neural networks that can execute human natural interfaces at speeds that allow us to build natural interfaces into our digital systems.”

The capabilities of the systems themselves will grow once they’re no longer limited by the apps that run inside them, and users will not want to go back to using those apps once they get used to interacting with their systems by talking to them.

The use of natural language interfaces has begun to move from the home and car into the enterprise. Cisco Systems last month launched its Spark Assistant, a voice system that leverages the AI technologies acquired when the company bought MindMeld earlier this year and is aimed at helping businesses facilitate better meetings. AWS is making a similar move with the announcement of Alexa for Business, a service designed to enable enterprises to bring the intelligent assistant technology into the workplace, including conference rooms and offices. Rather than having to type in a meeting password or dial a phone, the user can tell the Alexa-based device to start a meeting or call a particular person.

Salesforce, Concur, SuccessFactors and Splunk are among the companies integrating Alexa into their business applications.

And as voice and other human-centric interfaces grow in the home and workplace, vendors will have to build their back-end systems and software to support them, Vogels said.

“The next generation of systems will be built using conversational interfaces,” he said. “This will be the interface to your systems. This will become the main interface to your systems. This is how you will unlock a much larger audience for the systems that you’re building.”

Data is also a key driver for future systems. Because the cloud gives every business access to the same infrastructure, data will be what differentiates companies.

“One of the things the cloud has done is it has created a complete egalitarian platform,” Vogels said. “Everybody has access to the same compute capabilities. Everybody has access to the same storage. Everybody has access to the same analytics. If you do not have the algorithms yourself, you can buy them.”

Given that, businesses will compete on data, and the challenge will be who has the best data, can most quickly derive the best insights from it and can make the best business decisions based on those insights.

The Internet of Things (IoT) will essentially create entire environments that are active and connected. Every device that is powered by electricity is capable of being connected, Vogels said, and once connected, those devices will create even more data for businesses to store and analyze. At the same time, new technologies such as AWS’ GPU-based P2 and P3 instances and the open-source TensorFlow machine learning software mean developers can build neural networks that execute tasks in real time.

“Now with the new hardware and software advances, we can execute neural networks in real time,” he said. “That sort of drives a complete shift in the way that we’re going to be able to access digital systems.”
