Announcements of new iron are exciting, but it doesn’t get real until customers beyond the handful of elite early adopters can get their hands on the gear.

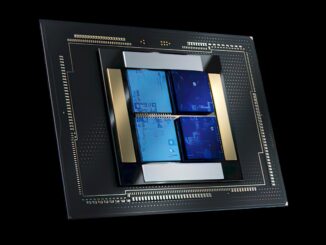

Nvidia launched its “Volta” Tesla V100 GPU accelerators back in May, meeting and in some important ways exceeding most of its performance goals, and has been shipping devices, in both PCI-Express and SXM2 form factors, for a few months. Now the ramp of this complex processor and its packaging of stacked High Bandwidth Memory (HBM2 from Samsung, to be specific) is progressing, and the server OEMs and ODMs of the world are getting their hands on the Voltas and putting them into their machines.

We have already talked about the use of the Volta processors in Nvidia’s own DGX-1V server, which crams two Intel Xeon processors and eight Voltas into a single chassis. The first DGX-1V shipped to the Center for Clinical Data Science in Cambridge, Massachusetts. The center is the first of a wave of hospitals that will employ deep learning for MR and CT image analysis, and it actually secured four of the Nvidia servers, which is pretty generous given the demand for the Volta accelerators. The bang for the buck on the Volta systems is very good: compared to the DGX-1P version using last year’s “Pascal” accelerators, the cost per unit of performance is around 18 percent lower for both single and double precision math and about 80 percent lower for half precision math. (That’s 5.7X the performance on FP16 workloads at a 15.5 percent premium on the server cost.)
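The price/performance claims are easier to check as cost per unit of performance. The sketch below is a minimal worked example, assuming that "better" bang for the buck means a lower cost per flop (the reading under which the quoted numbers agree with each other). It uses only the ratios given in the text, 5.7X FP16 performance and a 15.5 percent price premium; the function names are hypothetical.

```python
def cost_per_perf_change(perf_ratio: float, price_premium: float) -> float:
    """Fractional change in cost per unit of performance, new vs. old system.

    perf_ratio: new system performance divided by old system performance.
    price_premium: fractional price increase (0.155 means 15.5 percent more).
    A negative result means each unit of performance got cheaper.
    """
    return (1.0 + price_premium) / perf_ratio - 1.0


def implied_perf_ratio(cost_change: float, price_premium: float) -> float:
    """Performance ratio implied by a given cost-per-performance change."""
    return (1.0 + price_premium) / (1.0 + cost_change)


PREMIUM = 0.155  # DGX-1V price premium over the DGX-1P, from the text

# FP16: 5.7X the performance for 15.5 percent more money.
fp16 = cost_per_perf_change(5.7, PREMIUM)
print(f"FP16 cost per flop change: {fp16:+.1%}")  # -79.7%

# FP32/FP64: working backwards from an 18 percent lower cost per flop
# gives the performance ratio the text's figure implies.
ratio = implied_perf_ratio(-0.18, PREMIUM)
print(f"Implied FP32/FP64 speedup: {ratio:.2f}X")  # 1.41X
```

Under this reading, the "about 80 percent" figure falls straight out of 1.155 / 5.7, and the 18 percent figure implies roughly a 1.41X single and double precision speedup per box.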

As you might imagine, with machine learning all the rage and the systems that run these workloads being very beefy, OEMs and ODMs are excited to be peddling gear that employs the new Volta GPU accelerators. We presume the markup on the accelerators is pretty good, and ditto for the servers that have been engineered to hold three, four, or even eight of the accelerators in the box. In some cases, server makers might try to push it up to sixteen Voltas in a system, as happened with some of the Pascal machines. But with the AI frameworks now able to spread processing across nodes, the need for fat nodes is diminishing a bit, just as it did when the Message Passing Interface (MPI) standard was created back in the early 1990s to scale out HPC clusters, which today usually run Linux. It is not an accident that MPI is one of the methods by which these AI frameworks are being extended across nodes. But it is not the only means; Facebook has extended its Caffe2 framework using a lower-level, homegrown communication protocol.

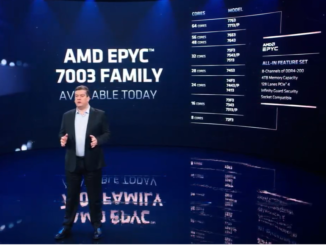

At the GPU Technology Conference in China this week, Nvidia announced that Alibaba, Baidu, and Tencent are all adding Volta GPU accelerators to their infrastructure. All three are using Volta not only to power their internal AI workloads (largely speech, text, image, and video recognition, but probably also search and recommendation engines) but also as augmented compute on their public clouds for those who want to rent rather than buy GPU computing. Without being specific about who was buying what, Nvidia said that many of the largest system manufacturers in China, including Lenovo, Inspur, and Huawei Technologies, are building hyperscale-style platforms using its HGX-1 reference architecture, which was unveiled back in March at the annual Open Compute Summit hosted by Facebook. We presume that the Taiwanese ODMs not mentioned, namely Quanta Computer, Foxconn, and Wistron, are also building HGX-1 iron, including for the big American hyperscalers such as Google and Amazon.

Facebook has its own “Big Basin” server design that accommodates both Pascal and Volta GPU accelerators using the respective SXM and SXM2 form factors, which support NVLink 1.0 and NVLink 2.0 interconnects on the system board. Interestingly, though, Facebook is using PCI-Express to link the GPUs to the CPUs in the system, as we previously detailed. The SXM and SXM2 choice is about density, not tighter coupling between the two compute components. Microsoft also has its own “Project Olympus” design, its second generation of cloud infrastructure, which accommodates GPU accelerators and is based on a rack form factor rather than the blade form factor of the original Open Cloud Servers created by Microsoft.

No one knows for sure how many GPU accelerators the big eight hyperscalers have installed in the Pascal and Volta generations, but what we do know is that these companies tend to get to the front of the line for any new technology. Volta should be no exception, even if Nvidia does keep a few aside to hand-deliver DGX systems to selected companies for a PR boost, and even if it also steers GPUs into selected machines where it has been a key contractor in the bid. This includes the “Summit” and “Sierra” supercomputers being built by the US Department of Energy for Oak Ridge National Laboratory and Lawrence Livermore National Laboratory, which have started receiving their Volta coprocessors along with the Power9 processors from IBM, the main contractor on both machines. (We have talked at length about the nodes in the Summit system at Oak Ridge, and will be drilling down into the Sierra system shortly. Keep an eye out.)

Now, the Voltas are starting to trickle down to other organizations, ones that are not hyperscalers or special HPC centers and that tend to buy their machinery from OEMs in the hundreds of nodes rather than the thousands to tens of thousands.

IBM has not yet announced the “Witherspoon” Power9 system. It was expected this summer, perhaps around July, and then rumor had it that Big Blue might get it out the door in October. Lately, we are hearing that the announcement could be pushed to December. What we can tell you is that Oak Ridge and Lawrence Livermore are getting first dibs on this iron, but others will want to buy it, and IBM will want to sell it to them. IBM has a plan to commercialize Witherspoon as well as a slew of other Power9 systems that will not have quite the same GPU compute density, and that probably will not employ the SXM2 socket and the NVLink 2.0 interconnect or support the tight cache coherency that using NVLink between the CPUs and the GPUs offers.

This is precisely what some server makers are doing, at least the initial ones that are supporting the Volta GPU accelerators.

Dell, for instance, supports four Volta GPU accelerators, in either the PCI-Express or the SXM2/NVLink variant, in its PowerEdge C4130 system, one of its cloudy boxes inspired by the hyperscalers and sold by its former Data Center Solutions bespoke server unit. The PowerEdge C4130 currently uses the “Broadwell” Xeon E5 v4 generation of processors and is a two-socket 1U rack server that puts the GPUs in the front and the CPUs, memory, and disks in the back. The PowerEdge R740, also a two-socket box, comes in a 2U form factor and has room for a dozen drives and three PCI-Express Volta GPUs. The PowerEdge R740xd also comes in a 2U rack form factor and has room for 32 2.5-inch or 18 3.5-inch drives, along with a two-socket server node that can have up to three Volta V100 accelerators in PCI-Express x16 slots.

Hewlett Packard Enterprise is not formally supporting the SXM2 socket for the Volta accelerators, but the Apollo 6500 servers, which we profiled back in April 2016 when they launched, have been certified to support up to eight of the Volta V100s in the PCI-Express form factor inside the 4U, twin-server enclosure. With the prior generations of Tesla accelerators, HPE could get a total of sixteen GPUs in a single enclosure (eight per sled), and we think someone putting out the specs got a sled mixed up with the chassis, since the Apollo 6500 is able to hold eight 350 watt accelerators per sled. The Volta V100s in the PCI-Express form factor are rated below this threshold, so sixteen of them per enclosure should be feasible. Interestingly, the Apollo 6500 is still using Broadwell Xeons and has not been upgraded to the current “Skylake” Xeon SP processors from Intel. HPE is also certifying that the ProLiant DL380 Gen10 server, its workhorse two-socket Skylake machine, is able to power three of the Tesla V100s on its PCI-Express bus.
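The sled-versus-chassis argument comes down to a simple power budget. The sketch below is a back-of-the-envelope check, assuming 250 watts for the PCI-Express Tesla V100 (Nvidia's published board power, not stated in the text) and using the eight-per-sled, 350 watt, sixteen-per-enclosure figures from the text; the helper name is hypothetical.

```python
# Figures from the text
GPUS_PER_SLED = 8        # accelerator slots per Apollo 6500 sled
WATTS_PER_SLOT = 350     # power each sled slot is built to deliver
SLEDS_PER_ENCLOSURE = 2  # sixteen GPUs per enclosure, eight per sled

# Assumption: rated board power of the PCI-Express Tesla V100
V100_PCIE_WATTS = 250


def fits_per_slot(gpu_watts: int) -> bool:
    """True if a card of this rating stays within a single slot's power budget."""
    return gpu_watts <= WATTS_PER_SLOT


sled_budget = GPUS_PER_SLED * WATTS_PER_SLOT   # total watts a full sled can feed
sled_draw = GPUS_PER_SLED * V100_PCIE_WATTS    # watts eight V100s would draw
enclosure_gpus = GPUS_PER_SLED * SLEDS_PER_ENCLOSURE if fits_per_slot(V100_PCIE_WATTS) else 0

print(sled_budget, sled_draw, enclosure_gpus)  # prints: 2800 2000 16
```

If the assumed rating holds, each sled has 800 watts of headroom with eight V100s, which is why an eight-per-chassis limit looks like a spec-sheet mix-up rather than a real constraint.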

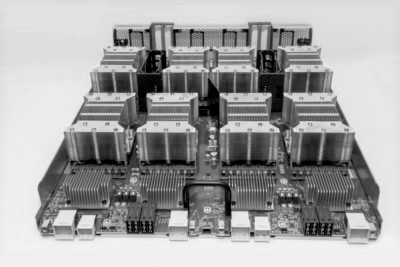

That leaves Supermicro among the big server suppliers, and it has four different servers that have explicitly been called out to support the Tesla Voltas as they come out in volume. These include the SuperServer 4028GR-TXRT, a two-socket machine with Broadwell Xeons that houses eight SXM2 GPU sockets and that only comes as a fully assembled system; the 4028GR-TRT is a very similar 4U system but uses the PCI-Express rather than the SXM2 versions of the Voltas. Supermicro’s SuperServer 4028GR-TR2 has more peripheral slots and storage expansion, including eleven x16 PCI-Express slots that could, in theory, all have a Volta card slapped into them. Finally, the two-socket SuperServer 1028GQ-TRT, which has up to four PCI-Express x16 slots in its 1U chassis, has been certified to support the Voltas. Again, none of these machines support the Skylake Xeons. But no doubt Supermicro will have Skylake Xeons (and very likely Power9s) in future SuperServers that also sport Tesla Volta GPUs.

HPC upstart Penguin Computing has also certified a bunch of its machines for the Volta accelerators, which you can see from the list of GPU systems it sells. Penguin has a bunch of new Skylake Xeon systems that support Volta accelerators as well as some older Haswell/Broadwell machines that can do both Pascal and Volta GPUs, some in PCI-Express form factors and some using the SXM2 socket. The interesting ones, in terms of density, are the Relion XE4118GT, a 4U rack server that uses Skylake (with or without integrated Omni-Path networking ports) and can house up to eight of the PCI-Express versions of the Pascal or Volta accelerators, and the Relion XE4118GTS, which uses the same 4U enclosure but employs the SXM2 socket and NVLink to hook the GPUs together. Penguin also says that it is working on a 10U variant of its Tundra high density sled servers that will have four of the Volta SXM2 units in it for the CPUs to play with.
