The novel architectures story is still shaping up for 2017 when it comes to machine learning, hyperscale, supercomputing and other areas.

From custom ASICs at Google, new uses for quantum machines, FPGAs finding new routes into wider application sets, advanced GPUs primed for deep learning, and hybrid combinations of all of the above, it is clear there is serious exploration of non-CMOS devices. When the Department of Energy in the U.S. announced its mission to explore novel architectures, one of the clear candidates for investment appeared to be neuromorphic chips: efficient pattern-matching devices in development at Stanford (Neurogrid), the University of Manchester (SpiNNaker), Intel, Qualcomm, and of course IBM, which pioneered the neuromorphic space with its TrueNorth architecture.

In the last few years, we have written much about the future of neuromorphic chips due to the uptick in development around the devices. Arguably, the reason the dust has been brushed off architectures like IBM’s TrueNorth chips (first produced in 2014 after several years of research under the DARPA SyNAPSE program, which began in 2008) is the resurgence of interest in machine learning and, more broadly, the realization that standard CMOS scaling is coming to an end.

Novel architectures in general are getting far more interest than ever before, and neuromorphic is but one on a growing list. However, of all of these, neuromorphic appears to be getting more funding and has real legs to get off the ground, even if the application sets are still somewhat limited.

The investment in neuromorphic devices for military applications that we first reported in 2015 has come to fruition. The U.S. Air Force Research Laboratory (AFRL) and IBM are working together to deliver a 64-chip array based on the TrueNorth neuromorphic chip architecture. While large-scale computing applications of the technology are still on the horizon, AFRL sees a path to efficient embedded uses of the technology given the size, weight, and power limitations of robots, drones, and other devices.

“The scalable platform IBM is building for AFRL will feature an end-to-end software ecosystem designed to enable deep neural-network learning and information discovery. The 64-chip array’s advanced pattern recognition and sensory processing power will be the equivalent of 64 million neurons and 16 billion synapses, while the processor component will consume the energy equivalent of a dim light bulb – a mere 10 watts to power.”

The IBM TrueNorth Neurosynaptic System can efficiently convert data (such as images, video, audio and text) from multiple, distributed sensors into symbols in real time. AFRL will combine this “right-brain” perception capability of the system with the “left-brain” symbol processing capabilities of conventional computer systems. The large scale of the system will enable both “data parallelism” where multiple data sources can be run in parallel against the same neural network and “model parallelism” where independent neural networks form an ensemble that can be run in parallel on the same data.
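To make the distinction between the two kinds of parallelism concrete, here is a minimal Python sketch in which ordinary CPU threads stand in for chips in the array and toy threshold functions stand in for trained networks. The names `make_threshold_net`, `data_parallel`, and `model_parallel` are hypothetical, and this is not TrueNorth's programming interface; it only illustrates the two scheduling patterns as defined above, with majority voting assumed as the way to combine the ensemble.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence

# A "network" here is any function mapping one input sample to a class label.
Network = Callable[[Sequence[float]], int]


def make_threshold_net(threshold: float) -> Network:
    """Hypothetical stand-in for a trained network: label 1 if the sample mean exceeds threshold."""
    return lambda sample: int(sum(sample) / len(sample) > threshold)


def data_parallel(net: Network, inputs: List[Sequence[float]]) -> List[int]:
    """Data parallelism: one network, many data sources evaluated concurrently."""
    with ThreadPoolExecutor() as pool:
        return list(pool.map(net, inputs))


def model_parallel(nets: List[Network], sample: Sequence[float]) -> int:
    """Model parallelism as defined above: an ensemble of independent networks on the same data."""
    with ThreadPoolExecutor() as pool:
        votes = list(pool.map(lambda net: net(sample), nets))
    # Combine the ensemble by majority vote (an assumption; other combiners are possible).
    return Counter(votes).most_common(1)[0][0]


if __name__ == "__main__":
    sensors = [[0.1, 0.2], [0.9, 0.8], [0.5, 0.7]]  # three toy sensor feeds
    print(data_parallel(make_threshold_net(0.5), sensors))  # [0, 1, 1]
    ensemble = [make_threshold_net(t) for t in (0.3, 0.5, 0.7)]
    print(model_parallel(ensemble, [0.6, 0.6]))  # 1
```

In the data-parallel case the same network sees three different feeds; in the model-parallel case three different networks see one sample and the ensemble's answer is the majority label.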

“AFRL was the earliest adopter of TrueNorth for converting data into decisions,” said Daniel S. Goddard, director, information directorate, U.S. Air Force Research Lab. “The new neurosynaptic system will be used to enable new computing capabilities important to AFRL’s mission to explore, prototype and demonstrate high-impact, game-changing technologies that enable the Air Force and the nation to maintain its superior technical advantage.”

“The evolution of the IBM TrueNorth Neurosynaptic System is a solid proof point in our quest to lead the industry in AI hardware innovation. Over the last six years, IBM has expanded the number of neurons per system from 256 to more than 64 million – an 800 percent annual increase over six years.” – Dharmendra S. Modha, IBM Fellow, chief scientist, brain-inspired computing, IBM Research – Almaden
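The growth figure in the quote can be checked with a compound-growth calculation. This is a short sketch, assuming that "more than 64 million" means 64 chips of 4,096 cores with 256 neurons each (TrueNorth's published core size, which this article does not state); the rounded 64,000,000 figure is computed as well for comparison.

```python
YEARS = 6
START_NEURONS = 256
# Assumption: "more than 64 million" read as 64 chips x 4,096 cores x 256 neurons = 64 * 2**20.
END_NEURONS_BINARY = 64 * 4096 * 256
# The rounded decimal figure, for comparison.
END_NEURONS_DECIMAL = 64_000_000


def annual_growth_factor(start: int, end: int, years: int) -> float:
    """Compound annual growth factor g such that start * g**years == end."""
    return (end / start) ** (1 / years)


if __name__ == "__main__":
    for label, end in (("binary", END_NEURONS_BINARY), ("decimal", END_NEURONS_DECIMAL)):
        g = annual_growth_factor(START_NEURONS, end, YEARS)
        print(f"{label}: {g:.2f}x per year, i.e. a {(g - 1) * 100:.0f}% year-over-year increase")
```

Under the binary reading the neuron count grows by a factor of exactly 8 per year (256 × 8^6 = 67,108,864); with the rounded figure it is about 7.9x. Strictly, an eightfold rise is a 700 percent increase, so the quote's "800 percent" is best read as each year's count being roughly 800 percent of the previous year's.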

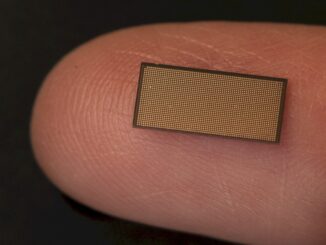

The system fits in a 4U-high (7”) space in a standard server rack, and eight such systems will enable the unprecedented scale of 512 million neurons per rack. A single processor in the system consists of 5.4 billion transistors organized into 4,096 neural cores, creating an array of 1 million digital neurons that communicate with one another via 256 million electrical synapses. On the CIFAR-100 dataset, TrueNorth achieves near state-of-the-art accuracy while running at >1,500 frames/s and using 200 mW (effectively >7,000 frames/s per watt), orders of magnitude less energy than a conventional computer running inference on the same neural network.
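The scale and efficiency figures above can be reproduced with simple arithmetic. In this sketch the per-chip core count and the chip and system counts come from the text; the 256 neurons per core and 256 × 256 synapse crossbar per core are an assumption drawn from TrueNorth's published core design, since the article does not state them.

```python
# Figures from the text: 4,096 cores per chip, 64 chips per system, 8 systems per rack.
# Assumption: each core has 256 neurons and a 256 x 256 synapse crossbar
# (TrueNorth's published core design; the article does not state it).
CORES_PER_CHIP = 4096
NEURONS_PER_CORE = 256
SYNAPSES_PER_CORE = 256 * 256
CHIPS_PER_SYSTEM = 64
SYSTEMS_PER_RACK = 8

neurons_per_chip = CORES_PER_CHIP * NEURONS_PER_CORE        # 1,048,576 = 2**20 ("1 million")
synapses_per_chip = CORES_PER_CHIP * SYNAPSES_PER_CORE      # 268,435,456 = 256 * 2**20 ("256 million")
neurons_per_system = neurons_per_chip * CHIPS_PER_SYSTEM    # 64 * 2**20 ("64 million")
synapses_per_system = synapses_per_chip * CHIPS_PER_SYSTEM  # 16 * 2**30 ("16 billion")
neurons_per_rack = neurons_per_system * SYSTEMS_PER_RACK    # 512 * 2**20 ("512 million")

# CIFAR-100 figures from the text: 1,500 frames/s at 200 mW (the article says ">1,500").
FRAMES_PER_SECOND = 1500
POWER_MILLIWATTS = 200
frames_per_watt = FRAMES_PER_SECOND * 1000 / POWER_MILLIWATTS  # 7,500 frames/s per watt

if __name__ == "__main__":
    print(f"neurons per chip:     {neurons_per_chip:,}")
    print(f"synapses per chip:    {synapses_per_chip:,}")
    print(f"neurons per system:   {neurons_per_system:,}")
    print(f"synapses per system:  {synapses_per_system:,}")
    print(f"neurons per rack:     {neurons_per_rack:,}")
    print(f"frames/s per watt:    {frames_per_watt:,.0f}")
```

Each round number in the text lines up with a binary multiple, and 1,500 frames/s at 200 mW works out to 7,500 frames/s per watt, consistent with the ">7,000" figure. Because frames per second per watt equals frames per joule, that is roughly 0.13 mJ per frame.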

The Air Force Research Lab might be eyeing larger implementations for more complex applications in the future. In 2015, Christopher Carothers, director of Rensselaer Polytechnic Institute’s Center for Computational Innovations, described for The Next Platform how TrueNorth is finding new life as a lightweight snap-in on each node. There it can take in sensor data from the many failure-prone components inside, for example, a dense 50,000-node supercomputer (like the one coming online in 2018 at Argonne National Lab) and alert administrators (and the scheduler) to potential failures. This can minimize downtime and, more importantly, allow the scheduler to route around the likely failure points, shutting down only part of a system rather than an entire rack.

A $1.3 million grant from the Air Force Research Laboratory will allow Carothers and his team to use TrueNorth as the basis for a neuromorphic processor. It will be used to test large-scale cluster configurations and designs for future exascale-class systems, and to test how a neuromorphic processor would perform as a co-processor on a machine of that scale, managing a number of system elements, including component failure prediction. Central to this research is the addition of new machine learning algorithms that will help neuromorphic processor-equipped systems not only track potential component problems via the vast array of device sensors, but also learn how these failures occur (for instance, by tracking “chatter” in these devices and recognizing an uptick in that activity as indicative of certain elements weakening).
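The article does not describe the RPI algorithms, so the following is only a hypothetical Python sketch of the general idea: track each node's sensor "chatter" (events per interval), flag a node when its activity jumps well above its own baseline, and have the scheduler route around flagged nodes. A simple exponential-moving-average rule stands in for the learned models described above; `ChatterMonitor`, `allocate`, and the `alpha`, `ratio`, and `floor` parameters are illustrative names and values, not part of any real system.

```python
from typing import Dict, Iterable, List, Set


class ChatterMonitor:
    """Hypothetical uptick detector: flags nodes whose event rate jumps above their own baseline."""

    def __init__(self, alpha: float = 0.2, ratio: float = 3.0, floor: float = 1.0) -> None:
        self.alpha = alpha  # smoothing factor for each node's moving-average baseline
        self.ratio = ratio  # an interval is an "uptick" if events > ratio * baseline
        self.floor = floor  # minimum baseline, so a near-silent node is not flagged for one event
        self.baseline: Dict[str, float] = {}
        self.flagged: Set[str] = set()

    def observe(self, node: str, events: int) -> bool:
        """Record one interval's event count for a node; return True if it looks like an uptick."""
        prev = self.baseline.get(node, float(events))  # first sample seeds the baseline
        suspicious = events > self.ratio * max(prev, self.floor)
        if suspicious:
            self.flagged.add(node)
        # Update the baseline after the check, so a burst is judged against pre-burst behaviour.
        self.baseline[node] = (1 - self.alpha) * prev + self.alpha * events
        return suspicious


def allocate(nodes: Iterable[str], count: int, flagged: Set[str]) -> List[str]:
    """Pick `count` nodes for a job, routing around any node flagged as likely to fail."""
    healthy = [n for n in nodes if n not in flagged]
    if len(healthy) < count:
        raise RuntimeError("not enough healthy nodes for this job")
    return healthy[:count]


if __name__ == "__main__":
    monitor = ChatterMonitor()
    # Events per interval for two nodes; node-b's last interval is a sudden burst.
    for a, b in [(2, 2), (3, 2), (2, 3), (2, 15)]:
        monitor.observe("node-a", a)
        monitor.observe("node-b", b)
    print(monitor.flagged)  # {'node-b'}
    print(allocate(["node-a", "node-b", "node-c"], 2, monitor.flagged))  # ['node-a', 'node-c']
```

Comparing each node against its own baseline, rather than a single global threshold, keeps a naturally noisy component from being flagged just for being noisy. A real deployment would replace the fixed `ratio` rule with a trained model, which is the point of the research described above.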

With actual vendor investments in neuromorphic computing, most notably from Intel and Qualcomm, we can expect more momentum around these devices in the year to come. However, as several of the people we have interviewed on this topic over the last couple of years have pointed out, building a programmable and functional software stack remains a challenge. No architecture can thrive without an ecosystem, and while AFRL’s has been custom built for its applications running on the array, it could take years of software stack innovation for these devices to become commercially viable and break into the mainstream.

“From custom ASICs at Google, new uses for quantum machines, FPGAs finding new routes into wider application sets, advanced GPUs primed for deep learning, hybrid combinations of all of the above, it is clear there is serious exploration of non-CMOS devices.”

Surely many of these ASICs and GPUs are still CMOS devices?

This is kind of a stupid approach. That chip is old and based on 28nm. IBM can do 5nm now. Also, an 8 by 8 array of the chips on one board in a 4U? So make the chip a bit larger and you have a 32 to 1 scale-up. Now you have 32 million neurons per chip on a board of 64 chips, so one board has 2 billion neurons. Given the fact that the packaging shown is also really old, one could drop the whole package onto one PCIe card. I would love to play with one of those.

IBM can't do crap. They demonstrated a 5nm manufacturing prototype, but they have nowhere near a production-ready commercial 5nm process; they don't even have a commercial fab at all anymore.

They might license parts of the process to GF, and that's it. Until GF actually implements it and runs it at production level, it is going to be at least 2021 or 2022.

Besides, the current IBM TrueNorth prototype performs absolutely abysmally on state-of-the-art DNNs if you actually start investigating its usage.

What they are right about, though, is that ASICs are the future, especially for the cloud and HPC. And you don't need to be on the bleeding edge to get a good ROI: you can go to an old/mature/cheap process node and still gain.

Read this interesting paper to get an idea of what I am talking about: “Moonwalk: NRE Optimization in ASIC Clouds”.