For years, the United States was the dominant player in the high-performance computing world, with more than half of the systems on the Top500 list of the world’s fastest supercomputers housed in the country. At the same time, most HPC systems around the globe were powered by technologies from such major US tech companies as Intel, IBM, AMD, Cray and Nvidia.

That has changed rapidly over the last several years as the Chinese government has invested tens of billions of dollars to expand the capabilities of the country’s own technology community, promising to spend even more to fuel the development of systems that use only home-grown technologies. The most high-profile example is the Sunway TaihuLight, a massive supercomputer developed by the National Research Center of Parallel Computer Engineering and Technology (NRCPC), installed at the National Supercomputing Center in Wuxi, China, and now sitting atop the Top500 list. The supercomputer delivers 93 petaflops of performance, more than the next five systems combined and 5.2 times faster than the top US supercomputer, the Titan system based on Cray and AMD technologies. It is powered by ShenWei’s SW26010 many-core processor, a chip developed in China.

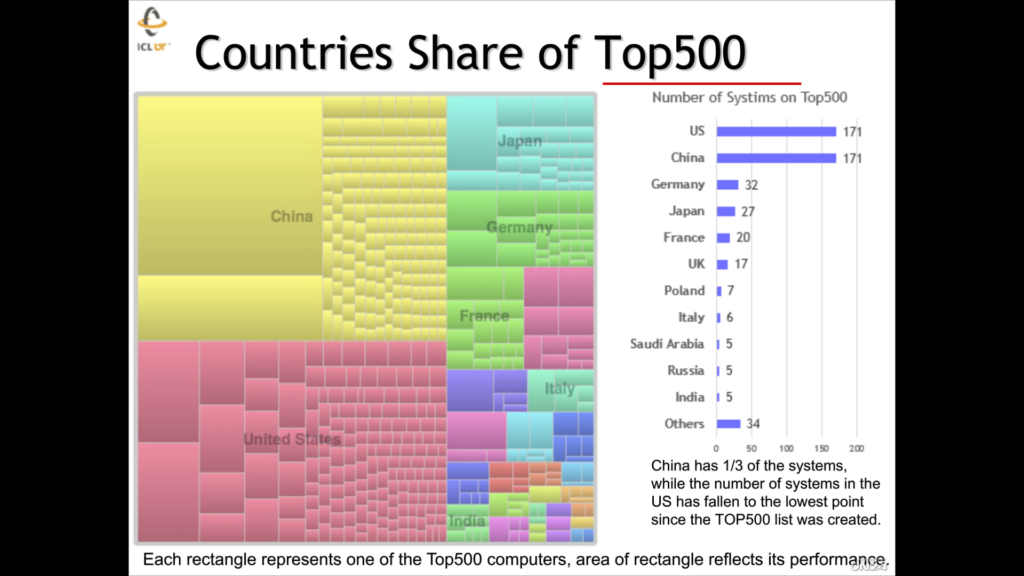

In the most recent edition of the Top500 list, released in November 2016, the United States and China each had 171 systems, a figure that reflects China’s rapid rise in the HPC space over more than a decade and the decline of US dominance in the industry. It is the lowest count for the US since the list began more than two decades ago. At the same time, while the US plans to deliver capable exascale systems in 2022, China has three projects in development aimed at bringing exascale systems to market two years earlier, with a prototype expected by the end of 2017.

The rise of China in the HPC space was one of several points made today by Jack Dongarra of the University of Tennessee and Oak Ridge National Laboratory in a webinar outlining the state of HPC and where it is headed in the near future. Dongarra gave what amounted to a call to arms for the US to recognize China’s growing strength in the supercomputer field, particularly as the industry moves closer to exascale computing. The country that leads the exascale race will have an edge in everything from business and research to innovation. The issue has drawn more attention amid concerns that federal budget cuts under the Trump administration could affect US scientific projects, including those tied to the exascale efforts.

At the same time, Dongarra used the TaihuLight supercomputer’s striking numbers to renew his push to move away from the well-known Linpack benchmark, which has been used over the years to rank systems by floating point performance. Linpack produced the result that put the Chinese system at the top of the Top500 list, but a new benchmark is needed to keep up with current HPC trends, including the growing amount of data moving through systems and the growing reliance on such factors as memory and the I/O subsystem.

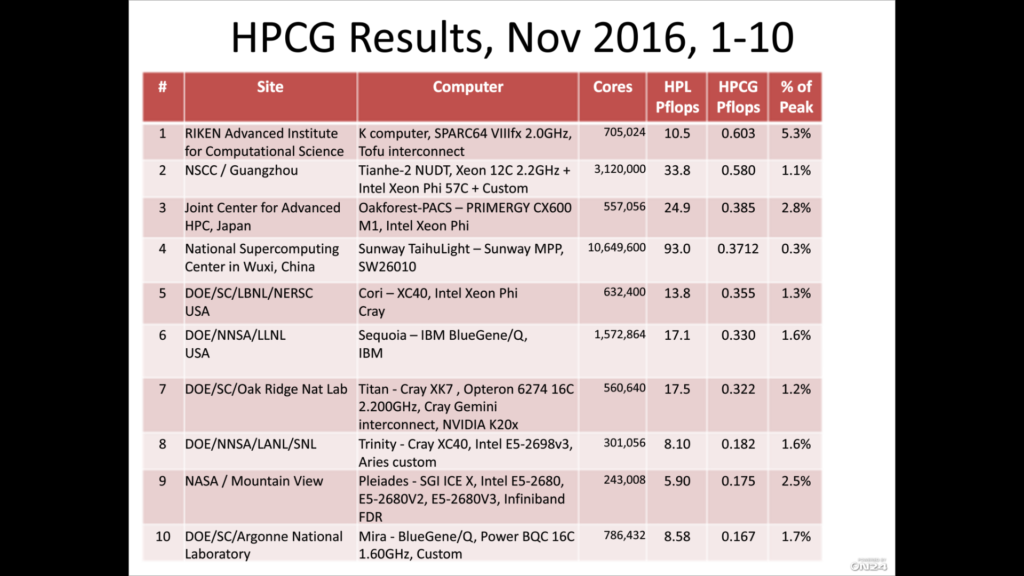

Dongarra, one of the original developers of the Linpack benchmark as well as the Top500 list, talked more about the High Performance Conjugate Gradient (HPCG) benchmark that he has developed with Sandia National Lab’s Michael Heroux. We have given the new benchmark some attention here, and Dongarra said it is essential for giving a more accurate view of a system’s value, based on real-world workloads rather than simply on floating point performance. Floating point operations are fairly inexpensive, he said. What is expensive is data movement.
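To make the data-movement point concrete, here is a minimal sketch of the kind of kernel a conjugate gradient benchmark exercises. It is a deliberate simplification, not HPCG’s reference code: HPCG itself runs a multigrid-preconditioned CG on a 3D 27-point stencil, whereas this version runs unpreconditioned CG on a small 1D tridiagonal matrix stored in CSR format. The function names are hypothetical, and the arithmetic-intensity estimate assumes 8-byte values and 4-byte indices, each moved from memory exactly once.

```python
import math


def csr_laplacian_1d(n):
    """Build the n x n tridiagonal matrix tridiag(-1, 2, -1) in CSR form."""
    values, col_idx, row_ptr = [], [], [0]
    for i in range(n):
        for j, v in ((i - 1, -1.0), (i, 2.0), (i + 1, -1.0)):
            if 0 <= j < n:
                values.append(v)
                col_idx.append(j)
        row_ptr.append(len(values))
    return values, col_idx, row_ptr


def spmv(A, x):
    """Sparse matrix-vector product y = A x: 2 flops per stored nonzero,
    but each nonzero also requires loading a value and a column index."""
    values, col_idx, row_ptr = A
    y = [0.0] * (len(row_ptr) - 1)
    for i in range(len(y)):
        s = 0.0
        for k in range(row_ptr[i], row_ptr[i + 1]):
            s += values[k] * x[col_idx[k]]
        y[i] = s
    return y


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def conjugate_gradient(A, b, tol=1e-10, max_iter=1000):
    """Unpreconditioned CG for a symmetric positive-definite A, starting at x = 0."""
    x = [0.0] * len(b)
    r = list(b)  # residual b - A x, with x = 0
    p = list(r)
    rs_old = dot(r, r)
    if math.sqrt(rs_old) < tol:
        return x, 0
    for it in range(max_iter):
        Ap = spmv(A, p)  # the memory-bound step in every iteration
        alpha = rs_old / dot(p, Ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        rs_new = dot(r, r)
        if math.sqrt(rs_new) < tol:
            return x, it + 1
        p = [ri + (rs_new / rs_old) * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
    return x, max_iter


def spmv_arithmetic_intensity(nnz, n, value_bytes=8, index_bytes=4):
    """Rough flops per byte for one CSR SpMV, assuming every value, column
    index, row pointer, input element and output element is read or written
    exactly once (an optimistic lower bound on memory traffic)."""
    flops = 2 * nnz
    bytes_moved = (nnz * (value_bytes + index_bytes)
                   + (n + 1) * index_bytes
                   + 2 * n * value_bytes)
    return flops / bytes_moved


if __name__ == "__main__":
    A = csr_laplacian_1d(50)
    b = spmv(A, [1.0] * 50)  # right-hand side whose exact solution is all ones
    x, iters = conjugate_gradient(A, b)
    print("CG iterations:", iters)
    print("flops per byte:", spmv_arithmetic_intensity(len(A[0]), 50))
```

Under these assumptions the sparse product does only about 0.1 flop per byte moved, so the processor spends most of its time waiting on memory. A dense Linpack-style factorization, by contrast, reuses each loaded value many times.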

“Apps have changed and hardware has changed in many ways and we need a better way to look at HPC systems,” Dongarra said during the webinar.

That’s not to say that China’s rise in the supercomputer space and TaihuLight aren’t real challenges to the United States in HPC. The two countries have sparred over technology for more than a decade, with each worrying that products from the other could come with backdoors and other security problems and represent significant threats to national security. In 2015, US regulators barred vendors like Intel and Nvidia from selling products to four supercomputing centers in China over concerns that the facilities were working on nuclear programs. “In some sense, the result of that was to accelerate the rate of development going on in China,” Dongarra said.

We have talked about the challenges US chip makers like Intel and Qualcomm and other American tech vendors are facing when trying to grow in a China market that increasingly is looking to have systems built using Chinese-made technologies. For example, Chinese officials have promised to spend $150 billion over 10 years to grow the country’s chip-making capabilities.

The most high-profile results of all the country’s investments are the TaihuLight system and the SW26010 chips that power it. The SW26010 is a 260-core chip built on 28-nanometer technology and running at 1.45GHz, a process size and clock speed that are “modest” compared with what Intel, AMD and others in the US are rolling out. The water-cooled TaihuLight, housed in 40 cabinets, includes nearly 10.65 million cores and 1.31 PB of DDR3 memory (again lagging behind the DDR4 memory that many newer systems use) and consumes 15.3 megawatts of power.
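The system figures above can be turned into a few derived numbers with some simple arithmetic. This is a back-of-the-envelope sketch. It uses the article’s 260 cores per chip, 40 cabinets, 93 petaflops and 15.3 megawatts, along with the published Top500 core count of 10,649,600. The helper names are illustrative only.

```python
# Inputs: article figures plus the published Top500 core count for TaihuLight.
CORES_PER_CHIP = 260
TOTAL_CORES = 10_649_600
CABINETS = 40
LINPACK_PFLOPS = 93.0
POWER_MW = 15.3


def chip_count(total_cores, cores_per_chip):
    """Number of processors, assuming every chip contributes the same core count."""
    if total_cores % cores_per_chip:
        raise ValueError("core count is not a whole number of chips")
    return total_cores // cores_per_chip


def gflops_per_watt(pflops, megawatts):
    """Energy efficiency: convert petaflops to flops, megawatts to watts, then report Gflops/W."""
    return (pflops * 1e15) / (megawatts * 1e6) / 1e9


if __name__ == "__main__":
    chips = chip_count(TOTAL_CORES, CORES_PER_CHIP)
    print("SW26010 chips:", chips)
    print("chips per cabinet:", chips // CABINETS)
    print(f"Linpack efficiency: {gflops_per_watt(LINPACK_PFLOPS, POWER_MW):.2f} Gflops/W")
```

These inputs work out to 40,960 processors, or 1,024 per cabinet, and roughly 6 Gflops per watt on Linpack.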

The system burst onto the Top500 list last year on the strength of its dominant Linpack results. According to Dongarra, TaihuLight has 1.1 times the performance of all the systems run by the US Department of Energy combined. However, the story changes when the system is measured with the HPCG benchmark, with its focus on real applications. On that list, the massive system comes in fourth and achieves only 0.3% of its theoretical peak performance. Most systems reached somewhere between 1% and 3% of peak, with the RIKEN system from Japan hitting 5.3%.
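The utilization figures are simply sustained performance divided by theoretical peak. One common way to see why a sparse, memory-bound workload lands at a few percent or less is the roofline model, which this article does not cite; it is used here only as an illustration. Under that model, a kernel can run no faster than the smaller of the compute peak and memory bandwidth multiplied by the kernel’s arithmetic intensity (flops per byte). The machine parameters below are hypothetical round numbers and do not describe any real system.

```python
def fraction_of_peak(sustained, peak):
    """Share of theoretical peak actually delivered (both in the same units)."""
    if peak <= 0:
        raise ValueError("peak must be positive")
    return sustained / peak


def roofline_attainable(peak_flops, mem_bandwidth_bytes, intensity_flops_per_byte):
    """Roofline bound: limited either by compute or by bandwidth x intensity."""
    return min(peak_flops, mem_bandwidth_bytes * intensity_flops_per_byte)


if __name__ == "__main__":
    # Hypothetical machine: 100 Tflop/s peak, 1 TB/s memory bandwidth.
    peak = 100e12
    bandwidth = 1e12
    # High-reuse, dense-like kernel vs. low-reuse, sparse-like kernel.
    for label, intensity in (("dense-like", 500.0), ("sparse-like", 0.1)):
        attainable = roofline_attainable(peak, bandwidth, intensity)
        print(f"{label}: {fraction_of_peak(attainable, peak):.1%} of peak")
```

For this hypothetical machine, a kernel that moves one byte for every 0.1 flop is capped at about 0.1% of peak, however many cores the system has.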

However, Dongarra cautioned that the HPCG results don’t mean TaihuLight was designed solely for floating point operations in hopes of ranking high on the Top500 list while adding no real value. Three of the six finalists for last year’s Gordon Bell Prize, which recognizes outstanding use of HPC for real scientific applications, were from China, including the eventual winner, and all three relied on TaihuLight.

Dongarra also looked ahead at some of the key challenges around algorithm and software design in the age of petascale and exascale computing. Among them is the need to develop algorithms that reduce synchronization in a parallel-processing world, which speeds up computational work, and that approach the lower bounds on communication, which minimizes how much data has to move. He also said more intelligence needs to be built into software so it can more easily and automatically adapt to increasingly complicated underlying hardware and “don’t rely on the user tuning the right knobs from the start.”

Developers also need to implement algorithms that can more quickly recover from failures and bit flips and to create ways to more easily guarantee the reproducibility of results.
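One reason reproducibility is hard at scale is that floating-point addition is not associative. In a parallel reduction, the order in which partial results are combined can change the final bits. The sketch below simulates this by summing the same values in every possible arrival order. As one order-independent alternative it uses Python’s `math.fsum`, which returns the correctly rounded sum. This is only a stand-in to illustrate the idea; production HPC codes use other techniques, and `naive_sum` is a hypothetical helper.

```python
import itertools
import math


def naive_sum(values):
    """Left-to-right accumulation, as one worker combining partial results
    in whatever order they happen to arrive."""
    total = 0.0
    for v in values:
        total += v
    return total


def reproducible_sum(values):
    """math.fsum returns the correctly rounded sum of its inputs, so the
    result does not depend on their order."""
    return math.fsum(values)


if __name__ == "__main__":
    data = [0.1, 0.2, 0.3]
    orders = list(itertools.permutations(data))
    print("distinct naive results:", sorted({naive_sum(p) for p in orders}))
    print("distinct fsum results: ", sorted({reproducible_sum(p) for p in orders}))
```

Running the naive sum over all six orderings of these three values produces two different answers, while the correctly rounded sum produces one. The trade-off is extra work per addition, which is why reproducibility is treated as a design choice rather than a default.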
