Amazon Web Services might be offering FPGAs in an EC2 cloud environment, but this is still a far cry from the FPGA-as-a-service vision many hold for the future. Nonetheless, it is a remarkable offering in terms of the bleeding-edge Xilinx accelerator. The real success of these FPGA (F1) instances now depends on pulling in the right partnerships and tools to snap a larger user base together—one that would ideally include non-FPGA experts.

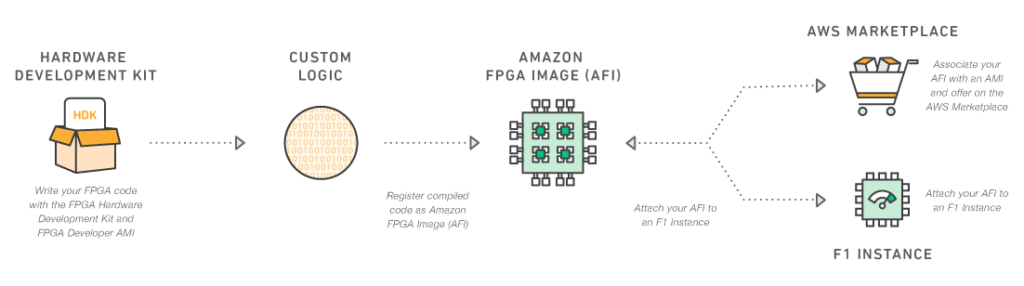

In its F1 instance announcement this week, AWS made it clear that for the developer preview, there are only VHDL and Verilog programmer tools, which are very low-level, expert interfaces. There was no reference yet to OpenCL or other hooks, but of course, given such a high-end FPGA, AWS might have strategized to go for the top end of that user market first to give access to a Xilinx part that has yet to hit many datacenters. Also, this is an early stage effort designed to appeal to existing FPGA users who might not have otherwise had access to the brand new 16nm UltraScale Plus FPGA.

What we found out this week after talking to companies that carve a niche by offering such interfaces via compilers, tools, and frameworks (often on their own appliances) is that they are key to AWS’s strategy to onboard new users. On the analytics side, Ryft (whom we profiled in detail here) is a good example, while domain-specific FPGA companies, including Edico Genome (which we also profiled here), will help usher in new F1 users for genomics research. In short, the strategy for AWS is a familiar one: partner for the expertise, which lends to the customer base, which creates a stronger cloud product (and presumably, more options for the partner due to increased reach).

Ryft’s FPGA-accelerated analytics business is driven by government, healthcare, and financial services, with an increasing push on the genomics side for the types of large-scale pattern matching required in searching for gene sequencing anomalies. The company says its FPGA-boosted analytics on the AWS F1 instances to bolster elastic search will allow for such searches across multiple datasets, both for individuals and entire populations. “It’s very difficult with conventional analytics tools using just CPUs or even GPUs to [do] this type of search effectively. FPGAs are a natural fit for that, and since this is a market the cloud providers want to tackle, it’s in their interest to keep pushing the envelope,” McGarry says. The company’s analytics engine bypasses the standard ETL processes for this and other workloads, and this will keep speeding up this workload and the others powering their business, he adds.

Ryft’s business works at all because, first, the benefit of adding FPGA acceleration to workloads like those listed above is not to be dismissed. Also not to be overlooked, however, is the complexity of using low-level tools to get to the heart of reconfigurability’s promise. Like the very few other companies out there providing FPGA acceleration for key workloads, Ryft puts its emphasis on ultimate abstraction from the hardware and a focus on key workloads. Amazon, it seems, is following their lead, albeit by tapping those who do it best.

As a side note to that domain-specific approach to pulling in non-FPGA experts to AWS F1, we spoke at length with Edico Genome in the wake of the F1 announcement. They use custom FPGA-based hardware to support genomics research, including providing end-to-end sequences in minutes (more detail on that coming in a detailed story next week). McGarry’s point about AWS and cloud providers hoping to tackle the genomics boom with enriched platforms for sequencing and analysis is an important one, and could help explain why AWS is getting in front of the FPGA trend. In the announcement of the F1 instances, genomic research was at the top of the potential use cases list.

Ryft is jumping in with AWS to boost elastic search (especially useful for the genomics use case) following a nine-month collaboration with the cloud giant to share insight about how to make FPGA-based analytics workloads hum. “Amazon certainly understands that the success of this instance is dependent on their ability to allow people to abstract the complexity of FPGAs; to provide the interfaces and an existing analytics ecosystem for this and other instances,” Ryft CEO, Des Wilson, tells The Next Platform. “They came to us because of our ability to do that and they will move this into the mainstream this way.”

AWS has its complexity level set high with the Xilinx UltraScale 8-FPGA nodes it has designed for the F1 instance, but according to Ryft’s engineering lead, Pat McGarry, they picked the right part for the times. While it still isn’t clear why AWS picked this without bringing along the higher-level OpenCL interfaces and tools (which are easier to program but don’t provide the same level of performance), no one we’ve talked to seems to be anything but excited about such a beefy FPGA (backed with an equally beefy Broadwell CPU along with DSP cores).

“I suspect AWS chose this very new and high-end FPGA because they are trying to tackle classes of problems that none of the older generation FPGAs will be able to touch. It has 2.4 million logic elements, a strong Broadwell CPU and 6500 DSP slices. This eats into territory that used to belong solely to GPUs as accelerators and is a much bigger play for Amazon than just providing FPGA technology,” McGarry says. “This will eventually allow for all kinds of new machine learning, AI, big data analytics, you name it, all on one platform. They just have to figure out how to [do] these things in a platform way for other verticals like we’ve managed to do with FPGA-based analytics.”

One of the key differentiators, other than the logic element capabilities and strong host CPU, is that there is a much greater opportunity with these parts for partial reconfiguration. Being able to reconfigure an FPGA on the fly for different analytical workloads is a big opportunity, McGarry says, and we will continue to see others doing this while also offloading critical network and other datacenter functions on the same device, although the Ryft folks were not convinced this is what AWS is doing with its FPGAs. “These are really designed just for the F1 instance, we’re quite sure,” McGarry stated. “This is purely an EC2 compute play.”

Ryft says they were able to provide AWS with key insight into how to solve the problems of distributed accelerators, especially with the highly heterogeneous CPU, FPGA, DSP nodes that back the F1 instances. “We had to spend a lot of time working with them to understand their architecture first,” Des Wilson says. “We ended up figuring out which pieces of our own architecture for our Ryft One appliances would fit best and use that as a starting point. We had to make the limitations of a cloud provider architecture our strengths and spent months looking at which architectural flaws with that don’t match well with FPGAs and use that to our advantage.”

Wilson describes the above engineering effort using the example of streaming data to F1 nodes in a cloud environment versus on their own hardware. “Instead of streaming data from an SSD, we had to think about doing this directly from S3 or Glacier or some other data source. We took that model and saw as soon as you have that matched with the reconfiguration capabilities of those FPGAs, the AWS architecture lets us connect all that together because of the ring topology with 400 Gb/s of throughput between the FPGAs. It’s possible to segment jobs nicely. These are very new FPGAs and while latencies are an issue with many of these tied together, for most of the commercial workloads, this shouldn’t be a problem,” Wilson says. “This is a big deal for future workloads on F1.”

This all raises the question of what this AWS work will mean for Ryft’s niche FPGA appliance business, of course. While they do get to pick up potential new users of their analytics packages (an important benefit), they had to share some of their secret sauce with AWS to make such a partnership practical. Ultimately, Wilson says this is a very good thing for their business because there will always be customers who need the ultra-low latency of the InfiniBand-connected appliances they sell, but the real value is the FPGA analytics software and services. As we know, there are razor-thin margins for anyone in the hardware game, so losing out on this business isn’t as devastating as it might otherwise sound.

“Everyone loves their own hardware design, so it was a challenge for us in this F1 work to not redo everything we’ve done in our architecture and appliance on AWS’s own infrastructure. We had to think carefully about their networking architecture, separation of the data across it at scale, and the latencies associated with doing so. Once we got past this, things moved along well.”

Next week, as we look at how AWS is backing into the FPGA business by using domain-specific companies like Edico Genome, we will explore in greater detail the technical and interface challenges of truly democratizing FPGAs via a cloud model. As we noted in the past, there are companies like Nimbix that have been working with HPC application developers to integrate FPGA acceleration, but there has not been such a big public push until the AWS announcement.

Since we expect others, particularly Microsoft, which has deep experience with FPGAs, to announce similar offerings in the near future (an educated guess, of course), looking at how an expert-required device like this will filter to the mainstream through higher-level or domain-specific sources will be interesting, and could spell out how Altera/Intel attack the market when a future integrated part emerges.

Maybe people should read the latest Nimbix press release

https://www.nimbix.net/blog/2016/11/14/nimbix-xilinx-expand-fpga-cloud/?mc_cid=2bfac7aac7&mc_eid=497f52426c

That shows Amazon AWS is just trailing others.