Accelerators and coprocessors are proliferating in the datacenter, and they have been a boon for speeding up certain kinds of workloads and, in many cases, for making machine learning or simulation jobs possible at scale for the first time. But ultimately, in a hybrid system, the processors and the accelerators have to share data, and moving it about is a pain in the neck.

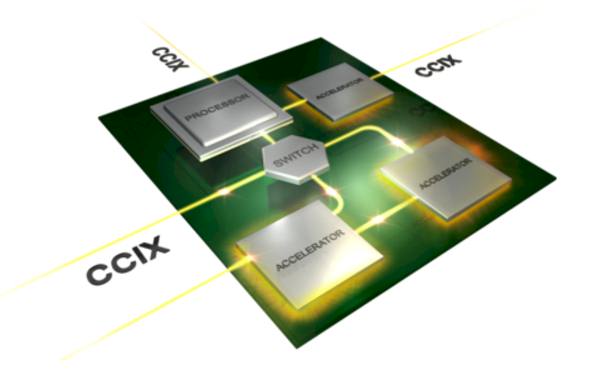

Having the memory across these devices operate in a coherent manner – meaning that all devices can address all memory attached to those devices in a single, consistent way – is one of the holy grails of hybrid computing. There are, in fact, a number of ways that this is being done on hybrid systems today, and that is a problem of its own and one that the Cache Coherent Interconnect for Accelerators, or CCIX, consortium seeks to address.

It is a tall order, but one that AMD, ARM Holdings, Huawei Technologies, IBM, Mellanox Technologies, Qualcomm, and Xilinx are taking on, and if they succeed in creating a more open specification to link processors and accelerators (including functions on network cards or non-volatile storage) together with coherent memory, building hybrid systems will be significantly easier than it is today. The question we have, and that the CCIX has not yet answered, is precisely how this might be done.

IBM’s Coherent Processor Accelerator Interface, or CAPI, which was first made available on its Power8 processors, provides a blueprint for how this could be accomplished, and so does Nvidia’s NVLink interconnect.

With CAPI, IBM has created a leaner and meaner coherency protocol that rides atop the PCI-Express peripheral interconnect and that allows accelerators like GPUs, DSPs, and FPGAs to link into the Power8 processor complex and address main memory in the server much like they would their own local memory (if they have any). Nvidia’s NVLink interconnect is another example of an interconnect that is not only used to attach devices to processors with coherency across the memory, but is also used to lash together multiple GPU accelerators so they can share memory in some fashion and more efficiently scale out applications running across those GPUs.
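To make the distinction concrete, here is a toy sketch of the two programming styles: an accelerator with private memory that needs data staged in and out, versus one that addresses host memory directly. It is only a conceptual model in plain Python. The function names are made up for illustration and do not correspond to any CAPI, NVLink, or CCIX interface, and the "kernel" is a trivial byte increment.

```python
# Toy model only: contrasts copy-based offload with a coherent shared view.
# None of these names correspond to a real CAPI, NVLink, or CCIX API.

def offload_with_copies(host_buf: bytearray) -> int:
    """Accelerator has private memory: stage data in, compute, stage it back."""
    device_buf = bytearray(host_buf)               # host -> device copy
    for i in range(len(device_buf)):
        device_buf[i] = (device_buf[i] + 1) % 256  # the stand-in "kernel"
    host_buf[:] = device_buf                       # device -> host copy
    return 2 * len(host_buf)                       # bytes staged explicitly


def offload_coherent(host_buf: bytearray) -> int:
    """Accelerator addresses host memory directly through a shared view."""
    shared = memoryview(host_buf)                  # no copy, same underlying bytes
    for i in range(len(shared)):
        shared[i] = (shared[i] + 1) % 256          # the same stand-in "kernel"
    return 0                                       # no explicit staging copies


if __name__ == "__main__":
    a = bytearray(range(8))
    b = bytearray(range(8))
    print("copy-based staged bytes:", offload_with_copies(a))
    print("coherent staged bytes:  ", offload_coherent(b))
    print("same result:", a == b)
```

In real hardware the data still crosses the interconnect in the coherent case. The difference the sketch tries to capture is that the application no longer has to manage the staging copies itself.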

In its mission statement, the CCIX consortium outlined its goals, saying that it wanted to be able to accommodate both offload and bump-in-the-wire accelerators with this memory coherency while at the same time leveraging existing server interconnects and providing this coherency without the need for interrupts or drivers. The idea is also, as with CAPI, to provide higher bandwidth than current coherency protocols running on existing interfaces and at least an order of magnitude of improvement in latencies to make hybrid architectures even more attractive for running various kinds of workloads.

It is not much of a surprise that Intel has not joined the CCIX consortium, but it is a bit of a surprise that Nvidia, which has been gradually evolving the programming model for GPU accelerators to provide increasing levels of coherency between the processing elements in a system over the past eight years, has not joined either. Clearly, Nvidia has a vested interest in supporting coherency, and Intel may have to concede that it is better to play well with other accelerators through an open standard such as the one that the CCIX consortium develops rather than allow other processors and accelerators to have advantages that its Xeon processors do not.

The Power and ARM architectures are the two emerging alternatives to the Xeon processor in the datacenter. Neither has gone mainstream for core compute, but both camps have aspirations for a sizable chunk of the compute in the coming years. Better leveraging hybrid architectures through a common and efficient coherency protocol that works across multiple interconnects and is more or less invisible to programmers and the applications they create could compel Intel to join up. Intel does not support NVLink or CAPI protocols on its Xeon processors, but there is no reason that it could not do so if enough customers demanded it. For now, adoption of CAPI has been fairly low, and NVLink is just getting started with the “Pascal” generation of GPU accelerators from Nvidia and will not ramp in volume until early next year.

If IBM is willing to tweak CAPI to adhere to the CCIX standard, and the CCIX technology is either open sourced or made relatively cheaply available through licensing like the PCI-Express protocol from the PCI-SIG that controls this peripheral interconnect, then there is a good chance that Intel and perhaps other processor manufacturers like Oracle, Fujitsu, and other members of the ARM collective, could get behind it as well. Intel might need a standard way to interface with Altera FPGAs, too, even as it is offering a prototype hybrid Broadwell Xeon-Arria FPGA package and is expected to deliver a true hybrid Xeon-FPGA chip further down the road.

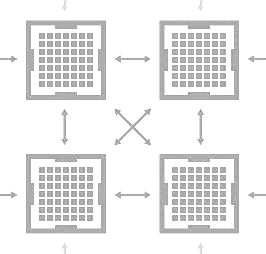

It is not a foregone conclusion that PCI-Express will be the means of creating the coherent fabric on which the CCIX protocol stack will run, Kevin Deierling, vice president of marketing at Mellanox, tells The Next Platform. The PCI-Express, CAPI, and NVLink protocols, for instance, leverage the same signaling pins, but the way they make use of the pins is very different, and CCIX could be different as well in a transparent way. It is also reasonable to expect that CCIX will in some sense eventually extend the protocols that link processors to main memory and to each other out across a fabric to other processors and accelerators. This fabric will not replace existing NUMA protocols, but augment them. But this is all conjecture until CCIX releases its first draft of a specification some time before the end of the year.

There is some talk out there that the CCIX effort got its start when Intel acquired Altera last year, but Deierling says that the efforts to have a consistent memory coherency method predate this and even the OpenPower consortium that many of the CCIX players are also a part of.

“The main thing is that we do not reinvent the wheel five or six times,” says Deierling. “There is a need for an industry standard cache coherency interface, and finally everyone agrees the need is clear and the time is now.”

And as for timing for CCIX products, there are those who say that it will take until 2019 or 2020 for the first CCIX products to come to market, but given how there are already precedents in the market with CAPI-enabled FPGAs and network adapter cards, it may not take as long and could be more like the 25G Ethernet standard, which was pushed by the hyperscalers and which moved from idea to product a lot faster than the consortium of Ethernet vendors normally does.

“When we go outside of committees, we can go a lot faster,” says Deierling. This kind of speed is what hyperscalers and HPC centers both need. They are the top users of accelerators today and certainly want to be able to plug more types of coprocessors into their systems and not have to radically change their programming models. While hyperscalers and HPC shops have been among the drivers for the CCIX approach, unlike hyperscalers with the 25G Ethernet effort, they are not the main drivers. Suppliers of processors, coprocessors, and networking devices that are aligning and competing against Intel in the datacenter seem to be in agreement that they need to work together.

We happen to think that the generic accelerator interconnect that is derived from NVLink on the Power9 chips, which IBM talked about a bit back in March and which is due in 2018, is in fact going to be supporting CCIX. We also think that ARM server chips need a consistent coherency method that spans more than one socket and that also allows for various accelerators to hook into ARM chips, and CCIX could fill that need nicely if ARM Holdings and its licensees get behind it. Cavium and Applied Micro, which have their own NUMA interconnects already, are not on board with CCIX yet, but Qualcomm, which has server aspirations and no experience with NUMA, is. AMD obviously has done a lot of work on this with the Heterogeneous System Architecture that spans its CPU-GPU hybrids, which in theory is expandable to discrete devices and not just its APU chips.

We have reached out to Intel and Nvidia to see what they think about all of this. Stay tuned.

very smart move by IBM

Not a word about AMD HSA? It’s my impression that this is already a solved problem.

Well, the reason that Intel doesn’t join is that they have OmniPath as a competing solution.

Intel joins with no one, mostly, including with its x86 one trick pony ISA, while others like AMD have gone on to using ARM and custom ARM (K12) designs, as well as x86. There will be even more ARM like hardware/software ecosystems built up around the OpenPower industry users, and hopefully AMD will try to get some of its GPU/IP into the nascent OpenPower third party accelerator market ASAP, as Nvidia stands to gain handsomely from its OpenPower affiliation!

Intel appears to be sowing more seeds that will further isolate it from any future mobile market penetration, while trying to retain its proprietary market share! Intel’s market share is currently a little safer in the server room, but that will change also, with AMD’s Zen x86 competition and custom ARM(K12 Server APUs), to go along with the OpenPower server competition coming online from the many OpenPower licensees! Hopefully AMD will have the good sense to embrace the OpenPower GPU accelerator market, and maybe even get an OpenPower license of its own to go along with AMD’s x86, and custom ARMv8A ISA running designs.

Intel is going to be the one to fall the most, being on the top and not paying attention to any changes before it’s too late (mobile market). That high market share/high margin server market will come into a more ARM style licensed IP business model around the server SKUs (OpenPower, ARM, AMD K12/ARM), an IP licensed business model that will bring actual competition to the server market the likes of which some in Intel’s management have never seen, they themselves being with Intel for far too short of a time! They will be around for a shorter amount of time to witness the great fall as the redundancy letters go out preserving the jobs of the ones primarily responsible for Intel’s many market oversights over these last few years.

As long as this is for off chip communication (for the single monolithic die based APUs/SOCs) or for off interposer module communication, for APUs and SOCs on an interposer package, then fine. I’m much more interested in what AMD is doing with its HPC/exascale APUs on a silicon interposer package, as that is where the really large bandwidth inter-processor die communication will put the rest in the rear view mirror. If AMD can get its GPU processors communicating with the HBM memory die stacks via 4096 bits of wide total parallel traces (1024 bits per HBM stack), just imagine what adding some of those Zen x86 CPU cores/complexes to the same interposer module could do, wiring up the CPU to the GPU core via the same or even larger number of silicon interposer etched traces, say a CPU’s whole cache line to a cache line on the GPU’s cache, so the CPU/GPU processors’ cache subsystems can transfer data (on some future variants), and even floating point, integer/other instructions between themselves (mostly the CPU directly dispatching instructions to the GPU for some FP/int work on the GPU’s larger available ranks and files of FP/INT/other units).

I’m sure that an industry standard fabric is a good thing, especially for off chip and off interposer module communication, but hosting many different processors on a silicon interposer module with that interposer’s substrate made of the exact same material as the processor dies themselves has many more interesting possibilities for wiring separately fabbed processor dies and still being able to wire these separate processor dies as if they were made on a single monolithic die. Currently the interposer designs are only passive in nature, just hosting thousands of traces, but future silicon interposer designs may be of the active design and maybe hosting much wider versions of this CCIX/other custom fabrics along with active circuitry to run the fabrics.

It just appears, with the silicon interposer technology’s potential, that these other connection technologies will somehow be quickly supplanted, at least as far as inter-processor die communication on a silicon interposer is concerned. So maybe this CCIX technology will have to come in two versions: one for the old single monolithic die/off module communication over a narrower fabric, and another CCIX type of industry standard, much wider parallel fabric for complete SOCs/APUs hosted on a silicon interposer package.

Moore’s economic observation/“Law” is fast running out for economically shrinking things in the planar dimension (more so than the limitations of the laws of physics). So why not start spreading things out on a silicon interposer package and wiring them up via 10s of thousands of interposer traces, for the dies with too many thermal dissipation issues to be readily stacked, while also stacking things on the same interposer package that can be readily stacked, like HBM memory?

Future AMD (Navi) GPU and APU designs on an interposer package may make use of many smaller GPU dies wired up via a silicon interposer in some modular fashion, so that the necessity of having to fabricate fewer large monolithic GPU dies per wafer, with larger per wafer yield losses, can be supplanted by making many smaller monolithic GPU die units, with a larger number of dies per wafer and much lower dies/wafer yield losses. If you look at a larger monolithic GPU die, it’s most definitely made up of much smaller blocks of completely modular functional units that are wired up on a single monolithic die with an on-die wide parallel connection fabric. So it would be very easy to move the fabric onto the interposer’s silicon substrate and make the modular functional blocks into separate smaller die units of one or more of these blocks of GPU functional units.
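The yield argument above can be roughed out with the simple Poisson yield model, where the fraction of defect-free dies is exp(-area × defect density). This is only a back-of-the-envelope sketch: the die sizes and the defect density are made-up illustrative numbers, not data from AMD or any foundry, and it ignores wafer-edge losses, interposer cost, and assembly yield.

```python
import math

# Hypothetical numbers throughout. The Poisson model is a common textbook
# simplification, not AMD's or any foundry's actual yield data.

def poisson_yield(die_area_mm2: float, defects_per_mm2: float) -> float:
    """Fraction of dies with zero defects under a simple Poisson model."""
    return math.exp(-die_area_mm2 * defects_per_mm2)


def silicon_per_good_product(die_area_mm2: float, dies_per_product: int,
                             defects_per_mm2: float) -> float:
    """Wafer area (mm^2) consumed per working product, assuming bad dies
    are screened out before assembly and ignoring edge and packaging losses."""
    good_fraction = poisson_yield(die_area_mm2, defects_per_mm2)
    return dies_per_product * die_area_mm2 / good_fraction


DEFECTS_PER_MM2 = 0.002  # assumed defect density, for illustration only

monolithic = silicon_per_good_product(600.0, 1, DEFECTS_PER_MM2)
modular = silicon_per_good_product(150.0, 4, DEFECTS_PER_MM2)
print(f"one 600 mm^2 die:   {monolithic:.0f} mm^2 of wafer per good GPU")
print(f"four 150 mm^2 dies: {modular:.0f} mm^2 of wafer per good GPU")
```

Under these assumptions the four small dies use noticeably less wafer area per working GPU than the single large die, which is the point being made about modular dies on an interposer.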

So maybe there should be a standardized, much wider parallel interposer fabric for silicon interposer based SOCs/APUs that is similar in scope to the standardized motherboard form factors and communication fabrics (PCI, etc.) that are in use today. The silicon interposer package may supplant the PCB based motherboard for the hosting of the main processor dies and their standard connection fabrics, as well as first tier DRAM memory stores (HBM, other memory stacks). The PCB main-board will be there mainly to host the power traces and some secondary extra DRAM cards, as well as the interposer module/s, etc.