Graphics chip maker Nvidia has spent more than a decade carefully and methodically transforming its GPUs into the compute engines for modern HPC, machine learning, and database workloads. Doing so has meant staying on the cutting edge of many technologies, and with the much-anticipated but not very long-awaited “Volta” GV100 GPUs, the company is once again skating on the bleeding edge of several different technologies.

This aggressive strategy allows Nvidia to push the performance envelope on GPUs and therefore maintain its lead over CPUs for the parallel workloads it is targeting while at the same time setting up its gaming and professional graphics businesses for a significant performance boost – and a requisite revenue jump if history is any guide – in 2018.

General purpose GPU computing got started way back in 2006, and in 2008 the Tesla products and the CUDA parallel programming environment debuted in an HPC space that was in dire need of larger increases in application performance, at lower costs and thermals, than CPUs alone could deliver. The premise back then was that all of the innovation in gaming and professional graphics would engender a GPU compute business that benefitted from Nvidia’s investments in these areas. Now, to our eye, it looks like the diverse compute needs in the datacenter are driving Nvidia’s chip designs and roadmaps, and for good reason.

The very profitable portion of its business embodied in the Tesla and GRID product lines, which are used to accelerate simulation, modeling, machine learning, databases, and virtual desktops, has been nearly tripling in recent quarters and shows no signs of slowing down. The datacenter tail has started wagging the gaming dog. And as far as we are concerned, this is how it should be. We believe fervently in the trickle down of technology from high end to enterprise to consumer because the high end has the budget to pay for the most aggressive innovation.

Volta is, without question, the most advanced processor that Nvidia has ever put into the field, and it is hard to imagine what it will be able to do for an encore. That is probably why Nvidia co-founder and chief executive officer Jen-Hsun Huang did not talk about Tesla GPU roadmaps during his keynote address at the GPU Technology Conference in San Jose today.

Back in the earlier part of the decade, as GPU compute moved from academia to the national HPC labs, Nvidia had to prove it had the technical chops to deliver enterprise-grade engines in a timely fashion and without technical glitches. There were some issues with the “Maxwell” line of GPUs in that we never did see a part with lots of double precision flops, and some features slated for one generation of GPU were pushed out to later ones, but Nvidia has more or less delivered what it said it would, and did so on time. This, in and of itself, is a feat and it is why we are not hearing about successors to Volta as this new generation of GPUs is making its debut in the company’s datacenter products.

We don’t expect to hear much about roadmaps until this time next year, in fact, when the Volta rollout will be complete and customers will be wondering what Nvidia will do next.

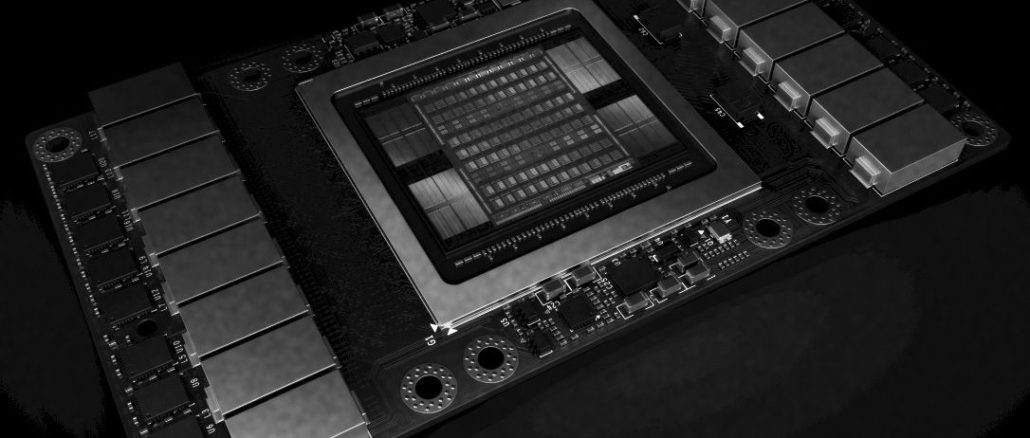

Shockingly Large Volta GPUs

The Volta GV100 chip needed to incorporate a lot more compute, including a new Tensor Core matrix math unit specifically designed for machine learning workloads, in addition to the floating point and integer units that were in the Pascal GP100 chip, which made its debut last April at the GTC shindig. The Pascal GP100 chip as shipped in the Tesla P100 had 56 of its 60 streaming multiprocessors, or SMs, activated, for a total of 3,584 32-bit cores and 1,792 64-bit cores; with caches, registers, memory controllers, and NVLink interconnect ports, this Pascal GP100 chip weighed in at 15.3 billion transistors using the 16 nanometer FinFET process from Taiwan Semiconductor Manufacturing Corp.
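What the Tensor Core actually computes is a small fused matrix multiply-accumulate, D = A×B + C, on 4×4 matrices, with FP16 inputs and FP32 accumulation. The Python sketch below emulates that arithmetic on a CPU purely for illustration. The function names are hypothetical, and rounding the running sum to FP32 after every addition is our assumption; the hardware's internal rounding order may differ.

```python
import struct

def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE 754 half-precision (FP16) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def to_fp32(x: float) -> float:
    """Round x to the nearest IEEE 754 single-precision (FP32) value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def tensor_core_mma(a, b, c, n=4):
    """Emulate D = A x B + C for n x n matrices: FP16 inputs, FP32 accumulation.

    Assumption: the running sum is rounded to FP32 after every addition.
    """
    d = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            acc = to_fp32(c[i][j])
            for k in range(n):
                # A product of two FP16 values fits exactly in FP32, so beyond
                # the FP16 input rounding only the accumulation step rounds.
                acc = to_fp32(acc + to_fp16(a[i][k]) * to_fp16(b[k][j]))
            d[i][j] = acc
    return d

identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
ones = [[1.0] * 4 for _ in range(4)]
halves = [[0.5] * 4 for _ in range(4)]
print(tensor_core_mma(identity, ones, halves)[0])   # [1.5, 1.5, 1.5, 1.5]
print(to_fp16(0.1))                                  # 0.0999755859375
```

The FP16 rounding of inputs is where the precision is given up; keeping the accumulator in FP32 is what lets long dot products in neural network training avoid piling up half-precision rounding error.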

The Volta GV100 chip has 84 SMs, with a total of 5,376 32-bit floating point cores, 5,376 32-bit integer cores, 2,688 64-bit floating point cores, and 672 Tensor Cores for deep learning; this chip is implemented in the very cutting edge 12 nanometer FinFET node from TSMC, which is one of the ways that Nvidia has been able to bump up the transistor count to a whopping 21.1 billion for this chip. To help increase yields, Nvidia is only selling Tesla V100 accelerator cards that have 80 of the 84 SMs on the GV100 chip activated, but in time, as yields improve with the 12 nanometer process, we expect the full complement of SMs to be activated and the Volta chips to get a slight further performance boost from it. If you extrapolate, there is another 5 percent of performance in there from the four extra SMs alone, and more if the clock speed can be inched up a bit.
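The chip-wide unit counts follow from a fixed per-SM complement: dividing the GV100 totals by 84 gives 64 FP32, 64 INT32, 32 FP64, and 8 Tensor Core units per SM. The sketch below rebuilds the totals for the full die and for the 80-SM Tesla V100 and shows where the roughly 5 percent extrapolation comes from, assuming (as an idealization) that performance scales linearly with active SMs at a fixed clock.

```python
# Per-SM unit counts, derived by dividing the GV100 chip totals by its 84 SMs.
PER_SM_UNITS = {"fp32": 64, "int32": 64, "fp64": 32, "tensor": 8}

def chip_totals(active_sms: int) -> dict:
    """Scale the per-SM unit counts up to a chip with `active_sms` SMs enabled."""
    return {unit: count * active_sms for unit, count in PER_SM_UNITS.items()}

full_gv100 = chip_totals(84)   # every SM on the die enabled
tesla_v100 = chip_totals(80)   # the 80-SM part Nvidia is selling initially

# Idealized headroom from enabling the last four SMs at the same clock speed.
headroom = full_gv100["fp32"] / tesla_v100["fp32"] - 1

print(full_gv100)          # {'fp32': 5376, 'int32': 5376, 'fp64': 2688, 'tensor': 672}
print(f"{headroom:.1%}")   # 5.0%
```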

In any event, the Volta GV100 chip has a die size of 815 square millimeters, which is 33.6 percent larger than the 610 square millimeters of the Pascal GP100 die, and that is about as big as any chip dares to be on a 300 millimeter wafer in such a new manufacturing process.

We will be drilling down into the architecture of the Volta GPUs and the possibilities of the updated NVLink 2.0 interconnect in future stories, but for now, here is the overview of the Volta GV100 GPU. With those 80 SMs fired up on the die and running at 1,455 MHz (1.7 percent slower than the Pascal GP100 clocks), the GV100 delivers 15 teraflops of floating point performance at 32-bit single precision and 7.5 teraflops at 64-bit double precision, which is a 41.5 percent boost in raw computing oomph that is applicable to traditional HPC workloads and to some AI workloads. But here is the interesting bit: the Tensor Core units added to the Volta architecture deliver 120 teraflops of effective performance for machine learning training and inference workloads. To be precise, the Tensor Core units on the Volta GV100 deliver 12X the peak performance of the Pascal GP100 using its FP32 units for training, and 6X its performance on machine learning inference using FP16 half-precision instructions on those FP32 units. (There was no comparison of inference workloads using Pascal’s 8-bit integer units against Volta’s Tensor Cores, but logically, the Tensor Cores should come out somewhere around 3X ahead.) All of this comes within the same 300 watt thermal envelope that its predecessor had.
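The headline numbers can be reproduced from unit counts and clock speed: peak FLOPS = active SMs × units per SM × FLOPs per unit per clock × clock. A fused multiply-add counts as two FLOPs, and each Volta Tensor Core performs 64 FMAs per clock on its 4×4×4 matrix operation. The Pascal line assumes the Tesla P100's 56 active SMs and a 1,480 MHz clock, which is the clock implied by the 1.7 percent figure above; this is a back-of-the-envelope sketch, not Nvidia's published methodology.

```python
def peak_tflops(sms: int, units_per_sm: int, flops_per_clock: int, clock_mhz: float) -> float:
    """Theoretical peak throughput in teraflops."""
    return sms * units_per_sm * flops_per_clock * clock_mhz * 1e6 / 1e12

V100_MHZ, P100_MHZ = 1455, 1480   # P100 clock inferred from the 1.7 percent gap

v100_fp32 = peak_tflops(80, 64, 2, V100_MHZ)     # one FMA = 2 FLOPs
v100_fp64 = peak_tflops(80, 32, 2, V100_MHZ)
v100_tensor = peak_tflops(80, 8, 128, V100_MHZ)  # 64 FMAs = 128 FLOPs per Tensor Core per clock
p100_fp32 = peak_tflops(56, 64, 2, P100_MHZ)

for label, value in [("V100 FP32", v100_fp32), ("V100 FP64", v100_fp64),
                     ("V100 Tensor", v100_tensor), ("P100 FP32", p100_fp32)]:
    print(f"{label:12s}{value:8.2f} TF")   # 14.90, 7.45, 119.19, 10.61
```

The small gaps to 15, 7.5, and 120 teraflops are rounding in the quoted figures. The same rounding explains the uplift: 15 versus 10.6 teraflops gives the 41.5 percent quoted above, while the unrounded values work out to about 40 percent.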

The Volta GV100 chip has 6 MB of L2 cache, a 50 percent increase over the Pascal GP100 and consistent with the increase in the number of cores. The GV100 has a tweaked set of memory controllers and the Tesla Volta V100 accelerator has 16 GB of an improved variant of HBM2 stacked memory from Samsung. The Pascal P100 card delivered 724 GB/sec of peak memory bandwidth and the Volta V100 card can push 900 GB/sec. This is an order of magnitude higher than a typical two-socket X86 server can do, which is one reason why GPU accelerators are being deployed in HPC workloads. But HPC workloads are largely bound by memory capacity and memory bandwidth, and HPC centers were probably hoping to get GPU accelerators with 24 GB or even 32 GB of capacity and well over 1 TB/sec of bandwidth. There is a lot more compute with Volta, but no more memory capacity and only 24.3 percent more peak memory bandwidth. That said, Nvidia says the new Volta memory architecture actually delivers 1.5X better sustained memory bandwidth than Pascal, and this matters, too.
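Why extra flops do not help a bandwidth-bound HPC code can be shown with the roofline model, in which attainable performance is the lesser of peak compute and memory bandwidth times arithmetic intensity (FLOPs per byte moved). The sketch below uses the peak bandwidth figures above and the FP64 peaks, with the P100's 5.3 teraflops inferred from the 41.5 percent uplift. The workload is an assumed STREAM-triad-style kernel (a[i] = b[i] + s*c[i], 2 FLOPs per 24 bytes in FP64). It models peak bandwidth only, not the 1.5X sustained improvement Nvidia claims.

```python
def attainable_gflops(peak_gflops: float, bandwidth_gbs: float, flops_per_byte: float) -> float:
    """Roofline model: throughput is capped by compute or by memory traffic."""
    return min(peak_gflops, bandwidth_gbs * flops_per_byte)

# (FP64 peak in GFLOPS, peak memory bandwidth in GB/sec)
GPUS = {"P100": (5_300, 724), "V100": (7_500, 900)}

# Triad a[i] = b[i] + s * c[i] in FP64: one multiply plus one add per element,
# reading b[i] and c[i] and writing a[i] (3 x 8 bytes), ignoring caches.
TRIAD_INTENSITY = 2 / 24

for name, (peak, bw) in GPUS.items():
    balance = bw / peak   # bytes of memory traffic available per FLOP
    triad = attainable_gflops(peak, bw, TRIAD_INTENSITY)
    print(f"{name}: {balance:.3f} bytes/FLOP, triad ceiling {triad:.1f} GFLOPS")
```

On this model the triad ceiling rises only with bandwidth (24.3 percent) while FP64 peak rises 41.5 percent, so the bytes available per FLOP actually falls from P100 to V100.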

It seems the promises for TSV memory by the DRAM manufacturers have not been met. HMC and HBM seem to be slower and more expensive than promised and I have no idea what happened to Wide I/O. I wonder if the restriction to 16GB instead of 32GB of VRAM on the Tesla V100 is also because of technical issues. It seems somehow odd to me that NVIDIA would pay for an 815 mm^2 die on a cutting edge process and then only include 16GB of VRAM to keep costs down.

HBM/HBM2 is slower (lower clocked) because the memory interface is much wider, with HBM/HBM2 offering eight 128-bit channels (1024 bits total) per JEDEC HBM/HBM2 stack. That’s why HBM/HBM2 is much more power efficient compared to regular DRAM and even GDDR5/6. The JEDEC standard only deals with what is required to support one HBM/HBM2 stack, with device makers free to use one or more HBM/HBM2 stacks as bandwidth needs require. HBM/HBM2 is able to reach that high effective bandwidth because of a much wider parallel data path relative to the older DRAM technologies, and the server/HPC markets, where the markups are much higher than in the consumer markets, are where HBM2 will be used first.
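The channel arithmetic can be made concrete: a JEDEC HBM2 stack exposes eight 128-bit channels, a 1024-bit interface, and at the standard's top rate of 1 GHz double data rate each pin moves 2 Gb/s. The sketch below computes per-stack bandwidth and, assuming the Tesla V100's four stacks share its 900 GB/sec evenly, backs out the implied per-pin data rate. The function names are illustrative.

```python
CHANNELS_PER_STACK = 8
BITS_PER_CHANNEL = 128

def stack_bandwidth_gbs(gbps_per_pin: float) -> float:
    """Peak bandwidth of one HBM2 stack in GB/sec for a given per-pin data rate."""
    pins = CHANNELS_PER_STACK * BITS_PER_CHANNEL   # 1024 data pins
    return pins * gbps_per_pin / 8                  # bits -> bytes

def implied_gbps_per_pin(total_gbs: float, stacks: int) -> float:
    """Per-pin data rate needed for `stacks` stacks to reach `total_gbs` GB/sec."""
    return total_gbs / stacks * 8 / (CHANNELS_PER_STACK * BITS_PER_CHANNEL)

print(stack_bandwidth_gbs(2.0))        # 256.0 GB/sec per stack at 1 GHz DDR
print(4 * stack_bandwidth_gbs(2.0))    # 1024.0 GB/sec for four stacks
print(implied_gbps_per_pin(900, 4))    # 1.7578125 Gb/s per pin on Tesla V100
```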

AMD will be making use of the HBCC (High Bandwidth Cache Controller) on its Vega SKUs to leverage HBM2 like a last level cache, allowing the GPU to store more textures/data in regular system memory, with the HBCC able to preload the textures/data from regular DRAM into VRAM (HBM2) in the background so the Vega GPU can feed directly from HBM2 with no loss of effective bandwidth. In this way, via the HBCC, AMD will be able to get by with a smaller amount of costly HBM2 (used as a last level cache) and still take advantage of HBM2’s high effective bandwidth.
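The general idea of treating a small HBM2 pool as a cache in front of a large DRAM pool can be sketched as a page cache with least-recently-used eviction plus an explicit prefetch for the "preload in the background" step. This is a hypothetical illustration of the concept only: AMD's actual HBCC policies are not described here, LRU is our own choice, and the class and method names are invented.

```python
from collections import OrderedDict

class HbmPageCache:
    """Hypothetical sketch: a small, fast HBM2 pool caching pages from a large DRAM pool."""

    def __init__(self, capacity_pages: int):
        self.capacity = capacity_pages
        self.resident = OrderedDict()   # page ids held in HBM2, least recently used first
        self.hits = 0
        self.misses = 0

    def _install(self, page: int) -> None:
        """Copy a page from DRAM into HBM2, evicting the least recently used page if full."""
        if len(self.resident) >= self.capacity:
            self.resident.popitem(last=False)
        self.resident[page] = True

    def access(self, page: int) -> bool:
        """GPU touches a page; returns True if it was already resident in HBM2."""
        if page in self.resident:
            self.resident.move_to_end(page)
            self.hits += 1
            return True
        self.misses += 1
        self._install(page)
        return False

    def prefetch(self, page: int) -> None:
        """Background preload of a page expected to be needed soon (no miss counted)."""
        if page in self.resident:
            self.resident.move_to_end(page)
        else:
            self._install(page)

cache = HbmPageCache(capacity_pages=2)
print([cache.access(p) for p in [1, 2, 1, 3, 2]])   # [False, False, True, False, False]
cache.prefetch(5)                                    # loaded before the GPU asks for it
print(cache.access(5), cache.hits, cache.misses)     # True 2 4
```

A well-timed prefetch turns what would have been a miss into a hit, which is the effect the comment describes; how well a real controller predicts the next pages is what decides whether a small HBM2 pool is enough.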

HBM2 is costly because of supply/demand pressures, and Nvidia for sure is going to be using HBM2 where the markups are highest, while AMD will be doing the same to a lesser degree. HBM2 can be clocked anywhere between 500 MHz and 1 GHz, double data rate, depending on the bandwidth needs of the processor, and it would be foolish to include more bandwidth than the processor can actually make use of. Even with HBM2, it may still be good to make use of data/texture compression and the bandwidth/power savings that can be had, rather than to use excess HBM2 over what is actually needed for the workloads. Using HBM/HBM2 as a last level cache is the logical method to get the most out of HBM/HBM2’s intrinsic advantage over slower system memory until the price of HBM2 becomes competitive enough to justify using more HBM/HBM2 on computing systems.

There is nothing stopping AMD/Nvidia/SK Hynix/Samsung from creating an HBM2a JEDEC standard with a wider than 1024-bit interface per HBM2a stack if more effective bandwidth is required, or even an HBM2a standard that allows for 1.5 GHz or 2.0 GHz clock rates per HBM2a pin (the current maximum per-pin clock rate for JEDEC HBM2 is 1.0 GHz per pin, double data rate). Also, HBM2 has a 64-bit sub-channel mode that HBM lacks, and it would be good for you to read up on the JEDEC HBM2 standard, because there are other improvements in HBM2 over HBM, with HBM3/next generation in development.

If I were looking to save interposer space, I would just create a JEDEC HBM# standard with 2048 pins per stack, and then 2 stacks could have twice the effective bandwidth without having to increase the per-pin clock rate. That way, 2 HBM# (2048-pin) stacks would equal 4 HBM2 stacks in total pin count/effective bandwidth, and HBM2 dies are larger in area than HBM stacks to begin with. So more microbumps can be placed on HBM2 if necessary to create an HBM2a stack with 2048 pins (microbumps).
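The pin-count argument reduces to total data pins times per-pin rate. The sketch below compares four standard 1024-pin HBM2 stacks with two stacks of the hypothetical 2048-pin "HBM#" proposed above (no such JEDEC part exists) and with four stacks at the hypothetical 1.5 GHz DDR (3 Gb/s per pin) rate floated earlier. It counts data pins only and ignores everything else on the interposer.

```python
def total_bandwidth_gbs(stacks: int, pins_per_stack: int, gbps_per_pin: float) -> float:
    """Aggregate peak bandwidth in GB/sec across all stacks on the interposer."""
    return stacks * pins_per_stack * gbps_per_pin / 8

configs = {
    "4 x HBM2 (1024 pins, 2 Gb/s)": (4, 1024, 2.0),
    "2 x hypothetical 2048-pin stack (2 Gb/s)": (2, 2048, 2.0),
    "4 x HBM2 at a hypothetical 3 Gb/s": (4, 1024, 3.0),
}
for label, cfg in configs.items():
    print(f"{label}: {total_bandwidth_gbs(*cfg):.0f} GB/sec")   # 1024, 1024, 1536
```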

HBM’s strategy is to reduce the bus width and length to save on power. I was talking specifically about the promises made for the bandwidth and capacity of the memory. Road maps from both the memory manufacturers and NVIDIA showed larger capacities and higher bandwidth than seem to be appearing.

I mean reduce the clocks and length…

HBM/HBM2 reduce the clocks and increase the effective bandwidth by using a wide parallel interface to each HBM2 stack. That’s a 1024-bit-wide interface per HBM/HBM2 stack, split up into 8 independent 128-bit channels that, for HBM2, can also service 64-bit data requests (2 per 128-bit channel). The JEDEC clock rate for HBM2 tops out at 1 GHz (double data rate, DDR style) for an effective data rate of 2 Gb/s per wire/trace. Lower memory clocks, with lower power usage and lower error rates, is what HBM2 provides, as opposed to GDDR5/6, which is clocked 7 or more times higher (big power draw) relative to HBM2’s single gigahertz clocks. HBM2 being on the interposer package, tucked closely up against the processor die, is just icing on the cake on top of all the inherent/intrinsic advantages that HBM2 has over GDDR5/6.

One needs to look at the total effective bandwidth metric and ignore clock speed, which is a false measure of advantage when comparing HBM2 to GDDR5/6, DDR4 DRAM, or otherwise. High clock rates figure greatly into higher power usage and higher error rates for memory and other computing functionality, compared to lower clock rates with wider parallel interconnects/buses, so look to the JEDEC standard white papers or even AnandTech for their deep dives into the subject.

Also, the JEDEC HBM/HBM2 standard only relates to what is needed to interface to a single HBM/HBM2 stack, so device makers are free to include 1 to 4 or more HBM/HBM2 stacks, with the only current limiting factor being the interposer size limit if more than 4 HBM2 stacks are utilized on an interposer package alongside a large processor die.

This article is a good read, and be sure to also read the research paper. AMD has some very interesting plans for exascale computing, and this IP will be used down the product stack in consumer products:

“AMD Researchers Eye APUs For Exascale”

https://www.nextplatform.com/2017/02/28/amd-researchers-eye-apus-exascale/

The study linked to in the Next Platform article can be found here (PDF):

“Design and Analysis of an APU for Exascale Computing”

http://www.computermachines.org/joe/publications/pdfs/hpca2017_exascale_apu.pdf

I want to see that coupled with a POWER9!

“this is how it should be. We believe fervently in the trickle down of technology from high end to enterprise to consumer because the high end has the budget to pay for the most aggressive innovation”

You’ve got this exactly opposite.

NVDA was quite clear today they could only afford to develop Volta, at a staggering $1.5B cost, because gaming pays the freight.

The beginning of the proper GeForce – Tesla split has arrived.

They have clearly lost the plot now. The chip is way too big to make enough money out of it on the consumer level, as they will have to sell it for a lot of money.

Not sure if the market uptake is big enough to warrant such a huge chip. Just checked: their Volta workstation is predicted to cost 69K, and that’s a ludicrous amount of money.

It is just as predicted: to make their design any better for DL and ML, they would have to sacrifice something, and instead they decided to bolt it on, which is about as bad. Now you have a lot of dead chip area for anything that doesn’t use these units, and vice versa.

Instead, they should have just gotten rid of all the graphics-related stuff and the single and double precision floating point units.

It is not that expensive (actually much cheaper than the P100).

You must understand the 12nm node. TSMC wants to replace its 28nm node (since other foundries are getting to reliable production stats there), so it introduced 22nm and 12nm, both with an extremely low price of entry, high yield, and low cost per wafer.

Actually, no. It is a specially designed 12nm for nVidia, that’s what the channels are saying, because it is a higher-power, high-performance node, unlike the other mislabeled 12nm nodes, which are low-power and actually more like real 16nm.

So nVidia had to put a lot of money on the table, as they will likely be the only ones using it. A very dubious strategy. nVidia is not Apple; they have nowhere near the wafer volume. 28nm will be around for a very long time. For IoT there is no reason to go lower than 28nm, and certainly there is no economic reason, as design costs go through the roof if you go non-planar.

Yes and no. This is a regular 12nm node. Actually, TSMC adjusts its processes to every single customer’s needs, but these are not major changes. It is more like a more robust supply line for handling >300W TDP, etc.

And in IoT you need high performance too. Sure, a simple temperature sensor with a display does not require such performance. But there are also applications like smart glasses and drones… where you need maximum performance (computer vision…) at the lowest power possible. And that is where smaller nodes come in.

But of course, for handling a temperature sensor, 28nm will probably be the better choice.

BTW, what do you mean by mislabeled?

Doubt it. That node is for high performance, definitely not low power. Smart glasses and drones are all about power; anything that is not on mains power is all about power consumption, and this node is useless for that. It doesn’t fit the design criteria.

So there will be very few customers on this, maybe Microsoft with their Xbox chip if they are not going with GF, and that’s probably it. All the FPGA vendors, which would be other candidates, say they are waiting for 7nm.

AMD is very committed to GF.

And for those who do look at low power, GF’s 22nm and 12nm FD-SOI look like far more interesting and cheaper candidates. Anything else will probably stick to 28nm or even larger, unless you are a big SoC manufacturer, in which case you go for one of the other 12nm, 16nm, or 10nm nodes.

TSMC 12nm is mislabeled, as it is not really a 12nm structure size; it is a marketing name. Structure-wise, it is actually more like true 16nm.

Node naming has gone away from representing the real structure size and that includes all foundries nowadays.

Market uptake was excellent for the 129K USD DGX-1, no wonder they’re back with a rebound.

In case you haven’t noticed, Nvidia has been making a killing lately in data-centers due to its superior Deep Learning training hardware and edge computing in the nodes.

Meanwhile, Intel’s Xeon Phi lies in shambles… nobody wants it.

There won’t be a consumer V100, just as there wasn’t really a consumer P100. There are already thousands of these V100 GPUs spoken for, going into Summit and Sierra.

And actually, these GPU-based heterogeneous systems are relatively inexpensive at a couple hundred million. The old but still reigning king of all supercomputers (in the tests that matter), the Fujitsu K, cost over $1.3 BILLION.

It will be interesting to see if either of the V100-based systems, or the Knights Hill-based Aurora, will dethrone the K in either test. The 100 PFLOPS TaihuLight didn’t manage to.

The P100 and V100 are Nvidia’s first pre-exascale chips, and their work on getting the pJ per bit of on-chip communication down still has a way to go before they can do 1 exaflop in ~20MW.
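The energy target behind that remark is simple arithmetic: power divided by sustained FLOPS gives joules per FLOP. The sketch below compares an exaflop in 20 MW with a Tesla V100 running at its 7.5 teraflops FP64 peak inside its 300 watt envelope. The GPU figure counts only the card at peak, so it leaves out host CPUs, networking, storage, and cooling, all of which a real system must also fit into the budget.

```python
def picojoules_per_flop(power_watts: float, flops_per_second: float) -> float:
    """Energy spent per floating point operation, in picojoules."""
    return power_watts / flops_per_second * 1e12

exascale_target = picojoules_per_flop(20e6, 1e18)   # 1 exaflop in ~20 MW
v100_card = picojoules_per_flop(300, 7.5e12)        # FP64 peak at 300 W

print(f"exascale budget: {exascale_target:.0f} pJ/FLOP")   # 20
print(f"Tesla V100 card: {v100_card:.0f} pJ/FLOP")         # 40
```

Even before counting the rest of the machine, the card alone sits at roughly twice the whole-system energy budget per FLOP.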

I’m guessing it’s very application specific. In Linpack and other standard benchmarks (Jack Dongarra’s… ), accelerator-based supers are way ahead…

Which applications are you thinking of, and do you have any data to back it up?

Thanks

/J

HPCG is one of Mr. Dongarra’s benchmarks, like HPL. In fact, it was developed specifically because he believes that HPL is no longer an effective way to measure the complete performance of modern supercomputers. And no, the K is not very application specific and runs all sorts of simulations. Typically, all-CPU systems are less application specific than accelerator-based systems. That’s why the forthcoming flagship architecture for Japan’s exascale system is an all-ARM CPU system.

So far, many of the all-CPU systems, like K, PrimeHPC FX10, and PrimeHPC FX100, are around 90% computationally efficient (Rmax/Rpeak), with K being 93%. No conventional heterogeneous system comes close. Look at the abysmal computational efficiency of Tianhe-2, or even of the more specialized TaihuLight architecture, by comparison.
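Computational efficiency in this sense is the HPL (Linpack) result divided by theoretical peak, Rmax/Rpeak. The sketch applies it to the K computer's TOP500 figures of 10,510 teraflops Rmax and 11,280.4 teraflops Rpeak, which reproduces the 93 percent cited in the comment.

```python
def hpl_efficiency(rmax: float, rpeak: float) -> float:
    """Fraction of theoretical peak achieved on the HPL (Linpack) benchmark."""
    if rpeak <= 0:
        raise ValueError("rpeak must be positive")
    return rmax / rpeak

k_computer = hpl_efficiency(10_510.0, 11_280.4)   # TFLOPS, as listed on TOP500
print(f"K computer: {k_computer:.1%}")            # 93.2%
```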

The only place where accelerator based systems are currently WAY ahead is in FLOPS. And even then, the FLOPS don’t matter much if the thing is sitting there bottlenecked by its interconnect, as they often are in real world use. Hence Nvidia’s focus on data locality and getting their TOCs to work more synergistically with latency optimized CPU cores in architectures like Pascal and Volta and NVLink.