What constitutes an operating system changes with the work a system performs and the architecture that defines how that work is done. All operating systems tend to expand out from their initial core functionality, embedding more and more functions. And then, every once in a while, there is a break, a shift in technology that marks a fundamental change in how computing gets done.

It is fair to say that Windows Server 2016, which made its formal debut at Microsoft’s Ignite conference this week and which starts shipping on October 1, is at the fulcrum of a profound change where an operating system will start to look more like the distributed software substrate built by hyperscalers and less like an IBM mainframe, a DEC VAX, or a Unix system of the past.

In a sense, there are really two Windows system platforms from Microsoft as Windows Server 2016 comes to market.

The important one for Microsoft’s current business is the Windows Server that looks and smells and feels more or less like Windows Server 2012, its predecessor, and that will support the familiar file systems, runtimes, and protocols that current Windows applications require to execute. There are always nips and tucks to make Windows Server work better on newer processors and I/O devices, of course, and these are important to customers. It is this Windows Server that will keep the installed base of tens of millions of customers who run Windows platforms in the dataclosets and datacenters of the world humming along, and we expect that they will gradually, as time and application certification permit, move from Windows Server 2008 and 2012 to 2016 in the coming years. (The remaining Windows Server 2003 shops that are running on operating systems that lost support in July 2015 will likely not jump all the way to Windows Server 2016, but we shall see.)

The other Windows Server, and the one that is important for Microsoft’s future, is the one that its own Azure public cloud (we got an exclusive inside look at its cloud datacenters this week) is dying to get its hands on and that is also being infused with technologies from Azure, including its file systems, containers, integrated software-defined networking, and a streamlined variant of the core Windows Server kernel called Nano Server.

The Long Road From File Server To Datacenter To Cloud

In the past month, ahead of the Windows Server 2016 launch, Microsoft has been celebrating two decades of Windows as an operating system for servers. But the origins stretch much further back than that, for those of us who have been watching this metamorphosis of MS-DOS from the system software on the IBM PC to a windowing environment that rivaled Apple and Unix workstations. We will oversimplify some and ignore Microsoft’s dabbling with Unix (remember Xenix?) and OS/2 (remember LAN Manager?) and just say that Microsoft always wanted to break into the datacenter by jumping from the desktop to the closet and then stretching into the glass house – and to do so with Windows.

Back in November 1989, when Microsoft and IBM were still staunch partners in the emerging server space, the two committed to making the 32-bit OS/2 operating system running on Intel X86 processors – the predecessors to the Xeons before Intel really had server-specific chips – the preferred server platform for “graphical applications,” whatever that qualification meant. At that time, a beefy server had a single 80386 or 80486 chip, 4 MB of main memory, and a 60 MB disk drive that came in a massive 5.25-inch form factor. This was a decade before Google started building its own rack-scale servers from commodity parts. Three years later, in October 1992, Windows really got its start on systems with Windows for Workgroups 3.1, and the ball really got rolling for Windows on the server a year later when the much-better Windows for Workgroups 3.11 came out.

Overlapping this low-end, file serving variant of 16-bit Windows was, of course, Windows NT, short for “New Technology,” which made its debut in July 1993 with the Windows NT 3.1 release, sporting a new 32-bit kernel and intended to run on DEC Alpha, SGI MIPS, IBM PowerPC, and Intel Itanium processors as well as the X86 family of chips. It was Windows NT that put Microsoft firmly on the path to lay siege to the datacenter, a path that had been carved by the DEC VAX and other proprietary minis starting in the late 1970s and the Unix vendors starting in the middle 1980s. When Dave Cutler, the inventor of the VMS operating system, moved to Microsoft in 1988, even before the OS/2 announcement by IBM and Microsoft mentioned above, the writing was surely on the wall. Cutler, who has been a Senior Technical Fellow at Microsoft for more than a decade now, helped create the Windows NT kernel, worked on various Windows Server releases, then the Azure platform team, and now is creating virtual infrastructure for the Xbox Live online gaming platform.

Which brings us to our point. Windows Server 2016 does not, as the Microsoft marketing message suggests, represent 20 years of its aspirations for systems rather than PCs. There is at least another decade of history before Windows NT 4.0 came out, which is where Microsoft is currently drawing the starting line on its run to the datacenter.

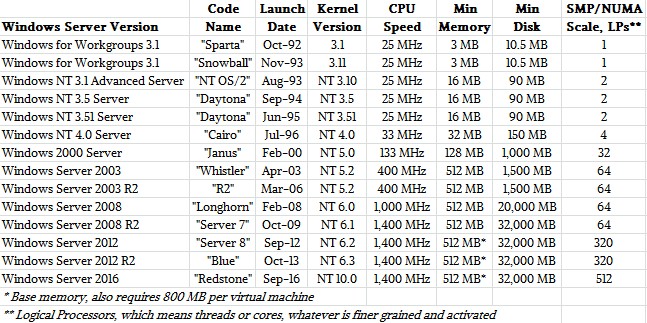

We will concede that with Windows NT Server 4.0, Microsoft was absolutely credible as a platform for SMBs and could take on Unix and the emerging Linux as an application and Web serving platform. That came on top of the traditional print and file serving duties that Windows for Workgroups and Windows NT 3.1, 3.5, and 3.51 were doing, just as the word “server” was coming into common use and replacing the word “system” in the datacenter lingo. Here is what the history of Windows as a server really looks like:

And if you wanted to get into it with finer-grained detail, you would have to show all of the variations and permutations of the Windows Server platform within each version and release. The Windows Server platform – and when we talk about it, we by necessity bring in email, database, Web, and application serving because these are key drivers of the stack – is much more complex and has become increasingly so as time has gone by.

Jeffrey Snover, Technical Fellow and chief architect for Windows Server and Azure Stack, briefed The Next Platform at Microsoft headquarters ahead of the Windows Server 2016 launch. Snover walked us through the important phases in the development of the Windows platform, beginning with the mass commercialization made possible by Windows NT 4.0 Server.

This iteration of Windows Server was less expensive than the Unixes of the time that ran on powerful and pricey RISC machines, and it had the virtue of also running on cheaper (but somewhat less capable) X86 hardware. The X86 hardware would grow up and Windows Server along with it, eventually vanquishing Unix workstations from the market and killing off the volume business that helped Sun Microsystems, Hewlett-Packard, IBM, and Silicon Graphics support their respective Unix server businesses. (The same way that volume manufacturing of Core chips for PCs helps Intel invest in and profit from Xeon and Xeon Phi server chips.) At this point, Unix systems are waning, and IBM is building an OpenPower fleet for its Power architecture to sail across the sea to Linux Land, which is dominated by Xeon boxes just like the much larger territory (as measured by both server revenues and shipments) of the vast continent of Windows Land.

By 2000, as the dot-com boom was starting to go bust and Linux was taking over for Unix, Microsoft was pushing more deeply into the enterprise and Windows 2000 Server made a fundamental leap along with the Intel Xeon architecture. The three big developments were Active Directory authentication, which drives a huge part of the Windows Server and Azure cloud business today, “Wolfpack” clustering of Windows instances for failover and workload sharing (which debuted on Windows NT 4.0 Server but matured substantially with Windows 2000 Server), and support for non-uniform memory access (NUMA) shared memory clustering within a single system. The Wolfpack clustering and NUMA support made Windows Server a credible alternative to Unix on big iron systems, and really opened the door for large email and database serving for the Windows platform.

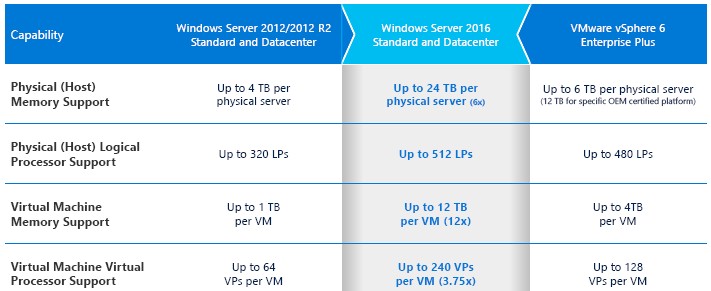

The initial NUMA support allowed the Windows kernel running on Intel Itanium servers to address up to 32 logical processors and up to 128 GB of memory. That was later doubled to 64 sockets in a shared memory system, and with Windows Server 2003, 64 logical processors were standard in Datacenter Edition (with Standard Edition and Enterprise Edition scaling across fewer sockets), and a special variant allowed a single instance of Windows to span 128 logical processors with two partitions. (This was before Hyper-V server virtualization, mind you.) The way Microsoft implemented NUMA in both Windows Server 2003 and Windows Server 2008, it counted threads as logical processors, so you could only span up to 64 sockets if you had 64 single-core Xeon chips with HyperThreading turned off. With Windows Server 2012, NUMA support was extended to 320 logical processors, and with Windows Server 2016, NUMA scaling is expanded to 512 logical processors and, perhaps more importantly, the maximum host memory is expanded to 24 TB, a factor of 6X greater than the upper limit with Windows Server 2012.
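To make the thread-counting arithmetic concrete, here is a minimal Python sketch. It assumes the simple model described above, in which every hardware thread consumes one logical processor against the kernel's cap. The function names and the sample core and thread counts are our own illustrative choices, not anything published by Microsoft.

```python
# Logical processor caps quoted in the text, by Windows Server release.
LOGICAL_PROCESSOR_CAP = {"2003/2008": 64, "2012": 320, "2016": 512}


def logical_processors(sockets: int, cores_per_socket: int, threads_per_core: int) -> int:
    """Count logical processors under the assumed model: one per hardware thread."""
    return sockets * cores_per_socket * threads_per_core


def max_sockets(cap: int, cores_per_socket: int, threads_per_core: int) -> int:
    """Largest whole number of sockets whose threads all fit under the cap."""
    return cap // (cores_per_socket * threads_per_core)


if __name__ == "__main__":
    cap = LOGICAL_PROCESSOR_CAP["2003/2008"]
    # Single-core chips with HyperThreading off: one logical processor per socket.
    print(max_sockets(cap, cores_per_socket=1, threads_per_core=1))
    # Same chips with HyperThreading on: two logical processors per socket.
    print(max_sockets(cap, cores_per_socket=1, threads_per_core=2))
    # Hypothetical 8-core, 2-thread chips, chosen only for illustration.
    print(max_sockets(cap, cores_per_socket=8, threads_per_core=2))
```

Under these assumptions, the 64-socket figure only holds for single-core chips with one thread apiece; switching HyperThreading on halves the reachable socket count to 32, and multicore parts shrink it further.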

It is also significant that the underlying Hyper-V server virtualization hypervisor integrated with Windows Server can support larger virtual machine instances. With Hyper-V 2016, the hypervisor supports up to 240 logical processors (a factor of 3.75X more than Hyper-V 2012) and the maximum memory per VM is expanded to 12 TB, a factor of 12X increase.
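The scaling factors quoted so far imply the prior-generation limits, which the text does not state outright. The short Python sketch below backs them out, assuming each quoted factor is an exact ratio of the new limit to the old one; the function and dictionary names are ours and purely illustrative.

```python
def implied_baseline(new_limit: float, factor: float) -> float:
    """Back out the previous limit from a new limit and its quoted growth factor."""
    return new_limit / factor


# (new limit, quoted factor) pairs taken from the text.
QUOTED = {
    "host memory (TB)": (24, 6),
    "Hyper-V logical processors": (240, 3.75),
    "Hyper-V memory per VM (TB)": (12, 12),
}

# Assumption: the factors are precise, not rounded.
BASELINES = {name: implied_baseline(new, factor) for name, (new, factor) in QUOTED.items()}

if __name__ == "__main__":
    for name, value in BASELINES.items():
        print(f"{name}: {value:g}")
```

If the factors are precise, this works out to 4 TB of host memory for Windows Server 2012, and 64 logical processors and 1 TB of memory per VM for Hyper-V 2012.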

As you can see, Hyper-V is getting out in front of VMware’s ESXi 6.0 hypervisor in terms of scale, and this is significant because the overwhelming majority of workloads that are virtualized by VMware’s stack are based on various versions of Windows Server. Microsoft has been chipping away at VMware accounts for some time, and is keeping the pressure on high. That said, most applications do not need the full scale of memory and compute that are available in the Hyper-V, ESXi, KVM, or Xen hypervisors for X86 iron. This is no longer a bottleneck for most customers, just like NUMA scaling for operating systems and databases has not been since maybe the Windows Server 2008 generations. To be sure, Microsoft has to keep up with the ever-increasing core counts and physical memory footprints of Xeon E5 and Xeon E7 machines, just like the Linux community has to. You can’t leave cores and memory chips stranded.

Scale Down Is As Important As Scale Up

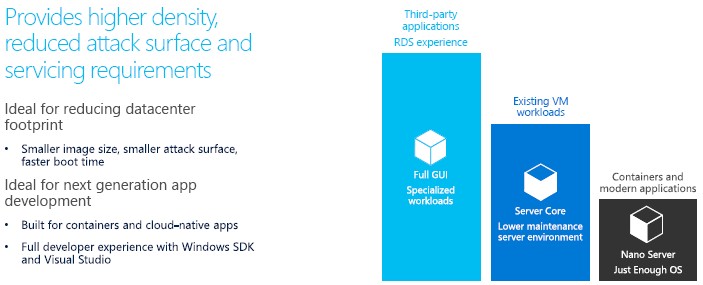

With the advent of the Azure public cloud, Windows in the datacenter is undergoing yet another metaphor change and technical evolution. Some technologies, such as shared storage and software-defined networking, are being backcast into the Windows Server platform, while others are being developed specifically for Azure, such as Nano Server, the minimalist variant of Windows Server for supporting applications created from containerized chunks of code, called microservices.

The neat bit is that Windows Server is gaining new approaches, but not eliminating old ones, and this is one of its strengths. Speaking very generally, and we realize there are always exceptions and issues, the client/server and Web applications that were written for prior generations of Windows will continue to work. The new world of Nano Server and containers is a deployment option, not a requirement. The point is, Microsoft understands that the enterprise world wants to go hybrid, and that means two different things. In one way, hybrid means running some applications in-house and some in the cloud, and in another, it means running some applications in a legacy Windows Server mode and other, newer applications in a Nano Server and container mode.

This is by necessity, as has always been the case with data processing.

Companies have spent years building virtualized datacenters that do not actually look anything like public clouds, according to Snover, and he is right. That includes those customers using the Microsoft platform, where the forthcoming Azure Stack (which was supposed to be announced in October and which has been pushed out) is based on Windows Server but it is a very different animal indeed.

“Some people feel that they are building the wrong things, and it reminds me of an old adage: When you find out that you are on the wrong road, turn around no matter how much progress you have made. A lot of people feel like they have done that, they have this sense that they are on the wrong road. We hear this a lot from people doing OpenStack. They are a couple of years into this, they have got a group of people who are experts in infrastructure and open source software, and then they ask themselves how is this helping them sell more cars or airplane tickets, how is this really moving the business forward?”

Moving forward in many cases will mean continuous application development running on containers and using a minimalist operating system, and that is why Microsoft is adding Windows Server Containers, Hyper-V Containers, and the Nano Server distribution of the Windows Server operating system with the 2016 version. We are going to drill down into these technologies, which are not yet deployed even on Microsoft’s Azure public cloud, but an overview here is appropriate. Nano Server eats up less memory and storage to run, has a much smaller attack surface for malware, and is also easier to update and keep secure. These technologies are designed for a future that does not look like the past, and others, such as Storage Spaces Direct hyperconverged storage and the Azure SDN stack, are being added to Windows Server 2016 to make it suitable for other kinds of applications that are somewhere between Windows Server and Azure.

But in the end, unless customers put up a big fuss, the future will look more and more like Azure, as embodied in the Azure Stack approach to building a true cloud, and less like the Windows Server way that most enterprises have long been familiar with. Now that these technologies are coming to market, the time has come to dig in and analyze, which you know The Next Platform likes to do.

OS/2 1.0 was announced in April, 1987.

https://en.m.wikipedia.org/wiki/OS/2#1985.E2.80.931989:_Joint_development

Yup. But I was talking about OS/2 LAN Manager and the partnership with IBM and Microsoft when they made it the “preferred” server platform for “graphical applications.”

Still not sure which .NET / ASP.NET version the nano-server-version will be able to run?

Is it comparable to the first announcement, that, as said earlier, the nano-version will not include .NET / .NET core out of the box?

(if i remember correctly, there was a similar limitation when Server 2008 R2 core was released, right?)

The most notable difference between Nano and regular Server is that Nano targets Asp.Net Core (formerly known as Asp.Net 5). Classic Asp will not run on Nano and neither will full .Net Framework applications such as Asp.Net <=4.

Great post. So nano servers is the single most important highlight of the entire offering. Other features and enhancements are also good, like storage etc.

Aah, those were the days when each new OS version was exciting, bringing new useful features to the front, and every new processor gen was actually close to double the speed of the previous one. All this is long gone.

More like End Of A Good Company

Excellent, but NT 4 never supported Itanium and wasn’t supposed to

Sorry, not even Windows NT 4, Windows NT 3.1

Interesting reading, it’s well written. From the point of view of sell-out Windows customers, i guess they just enjoyed it, but without the blackout about the unices that fuel the so-called “cloud” doesn’t convey the necessary impartiality needed to make any informed technology decision.