If you want to study how datacenter design has changed over the past two decades, a good place to visit is Quincy, Washington. Five different datacenter operators have set up shop in this small farming community of around 7,000 people: Microsoft, Yahoo, Intuit, Sabey Data Centers, and Vantage Data Centers. They are there because Quincy is close to hydroelectric power generated from the Columbia River and has a relatively cool, arid climate, which can be used to great advantage to keep servers, storage, and switches cool.

All of the datacenter operators are pretty secretive about their glass houses, but every once in a while, just to prove how smart they are about infrastructure, one of them opens up the doors to let selected people inside. Ahead of the launch of Windows Server at its Ignite conference, Microsoft invited The Next Platform to visit its Quincy facilities for a history lesson of sorts in datacenter design, one that demonstrates how Microsoft has innovated and become one of the biggest hyperscalers in the world, rivaling Google and Amazon Web Services – companies that are its main competition in the public cloud business.

Rick Bakken, datacenter evangelist for Microsoft’s Cloud Infrastructure and Operations team, was our tour guide, and as you might imagine, we were not allowed to take any pictures or make any recordings inside the several generations of datacenters at the facility, which is undergoing a massive expansion to keep up with the explosive growth of the online services that Microsoft supplies and that are increasingly deployed on the same infrastructure that is used to underpin the Azure public cloud. But Microsoft did tell us a lot about how it is thinking about the harmony between servers, storage, and networking and the configuration of the facilities that keep them up and running.

From MSN Web Service To Azure Hyperscale

Each of the hyperscalers has been pushed to the extremes of scale by different customer sets and application demands, and over different time horizons. The Google search engine was arguably the first hyperscale application in the world, if you do not count some of the signal processing and data analytics performed by US intelligence agencies and the military. While search continues to dominate at Google, it is now building out a slew of services and moving aggressively into the public cloud to take on Amazon Web Services and Microsoft. Amazon has to support more than 1 million users of its public cloud as well as the Amazon retail business, and Facebook has to support the 1.7 billion users on its social network and the expanding number of services and data types they make use of as they connect.

For Microsoft, the buildout of its Azure cloud is driven by growth in its online services, such as the Bing search engine, Skype communications, Xbox 360 gaming, and the Office 365 office automation tools. Together with others, these add up to more than 200 cloud-based services used by more than 1 billion users at more than 20 million businesses worldwide.

Those are, as The Next Platform has said many times, some big numbers and ones that make Microsoft a player, by default, in the cloud. And those numbers not only drive big infrastructure investments at Microsoft, which has well north of 1 million servers deployed across its datacenters today (including Azure and other Microsoft services that have not yet been moved to Azure), but they drive Microsoft to continuously innovate on the designs of its systems, storage, networking and the more than 100 datacenters that wrap around them in its infrastructure.

This move from enterprise scale to hyperscale all started almost a decade ago for Microsoft.

“I worked for Steve Ballmer back in 2007 and I was on his capacity planning team,” Bakken explained to The Next Platform during the Quincy tour. “We ran the datacenter in Quincy, Washington. At the time, the industry was building datacenters at a cost of between $11 million and $22 million per megawatt, according to figures from The Uptime Institute, depending on the level of reliability and availability. Even though our costs were lower, we still needed to drive better efficiencies. We decided to find ways we could beat the industry and looked at cooling as being an opportunity for significant improvement. What came out of this was a realization that we were really building large air conditioners, that we were not in the IT business but in the industrial air conditioning business when you build a facility like this. And this drove us to deeper questions as to why we were doing that, and the answer was heat.”

So rather than take the heat, Microsoft’s engineers went after it.

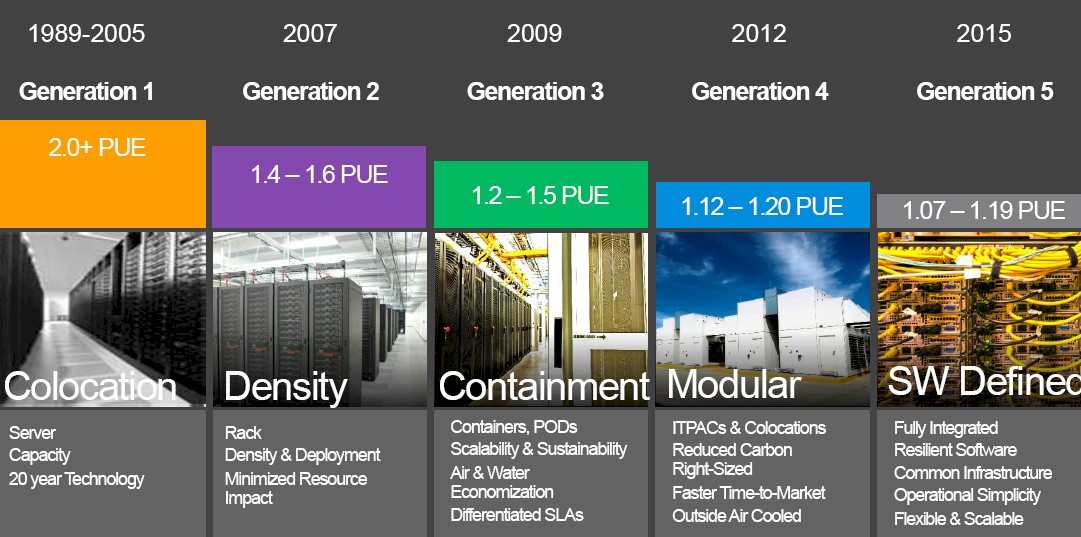

To step back a little, between 1989 and 2005, with the first generation of co-located datacenters that Microsoft paid for, the company bought generic servers from the big tier one suppliers and racked and stacked them like every other enterprise of the client/server and dot-com eras did. In these facilities, which had the traditional raised floors used in datacenters for decades, the power usage effectiveness (PUE) of the datacenter – a measure of efficiency that is the total power of the datacenter divided by the power of the IT gear – was at best around 2.0 and in some cases as high as 3.0. At a PUE of 2.0, for every 1 megawatt of juice consumed by the IT gear doing actual work, another 1 megawatt of electricity was consumed by the datacenter itself as voltages were stepped down for battery backup and for the gear in the racks, water was chilled, and air was blown around the datacenter. At a PUE of 3.0, that overhead doubled to 2 megawatts.
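The PUE arithmetic can be made concrete with a short Python sketch. It simply applies the definition given above (total facility power divided by IT power) and rearranges it to get the non-IT overhead; the function names are illustrative, not part of any Microsoft tool.

```python
def pue(total_facility_mw: float, it_load_mw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    if it_load_mw <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_mw / it_load_mw


def overhead_mw(pue_value: float, it_load_mw: float) -> float:
    """Non-IT power (power conversion, cooling, air movement) implied by a PUE."""
    if pue_value < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return (pue_value - 1.0) * it_load_mw


# Generation 1 range quoted above, per 1 MW of IT load
for p in (2.0, 3.0):
    print(f"PUE {p}: {overhead_mw(p, 1.0):.1f} MW of overhead per MW of IT load")
# PUE 2.0: 1.0 MW of overhead per MW of IT load
# PUE 3.0: 2.0 MW of overhead per MW of IT load
```

The same function gives 0.4 to 0.6 megawatts of overhead per megawatt of IT load for a PUE between 1.4 and 1.6.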

Starting in 2007 with the Columbia 1 datacenter in Quincy and then adding the Columbia 2 facility in 2008, which together are the size of a dozen American football fields, Microsoft started using rack-dense system configurations. Each of the datacenters has five rooms, each with 18 rows of racked-up gear. Each room is rated at 2.5 megawatts, and across those ten rooms, with the networking and other gear thrown in, the whole thing draws 27.6 megawatts. With the compute density and improved cooling, Microsoft was able to get the PUE of its Generation 2 datacenters down to between 1.4 and 1.6, which means the power distribution and cooling were adding only 40 percent to 60 percent on top of the juice burned by the IT gear itself. This was a big improvement.
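As a sanity check on the Columbia 1 and 2 figures, this sketch multiplies out the room counts and ratings quoted above. Treating the gap between the rooms' combined rating and the 27.6 megawatt total as the networking and other gear is our own inference from those numbers, not a breakdown Microsoft supplied.

```python
DATACENTERS = 2            # Columbia 1 and Columbia 2
ROOMS_PER_DATACENTER = 5
ROWS_PER_ROOM = 18
ROOM_RATING_MW = 2.5
TOTAL_DRAW_MW = 27.6       # quoted total, including networking and other gear

rooms = DATACENTERS * ROOMS_PER_DATACENTER
rows = rooms * ROWS_PER_ROOM
room_capacity_mw = rooms * ROOM_RATING_MW
other_gear_mw = TOTAL_DRAW_MW - room_capacity_mw  # our inference, not a Microsoft figure

print(f"{rooms} rooms, {rows} rows, {room_capacity_mw} MW in rooms, "
      f"~{other_gear_mw:.1f} MW for networking and other gear")
# 10 rooms, 180 rows, 25.0 MW in rooms, ~2.6 MW for networking and other gear
```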

With the Generation 3 datacenters that Microsoft rolled out in 2009, it took on the issue of containing the heat in the datacenter. In some cases, the containment came literally from containers, in this case shipping containers, and at some Microsoft locations (such as its Chicago facilities), the containers were double stacked and packed with gear. Microsoft experimented with different designs to contain heat and drive down PUE, including hot aisle containment and air-side economization, which drove the PUE as low as 1.2.

There is no Generation 3 gear in Quincy, and that was because Microsoft was working on the next phase of technology, its Generation 4 modular systems, for the Quincy facility. Hot aisle containment was retrofitted onto the Columbia 1 and 2 datacenters, which are mirror images of each other, using clear, flexible plastic barriers from floor to ceiling.

Back in the mid-2000s, when Columbia 1 and 2 were built, server compute, storage, and power density was not what it is today. Now, with Microsoft’s Open Cloud Server platforms installed in these facilities, only eight of the 18 rows have gear in them, and because Microsoft has substantially virtualized its networking inside the datacenter, load balancers, switches, and routers are no longer in the facilities, either. (It is a pity that the datacenter can’t have its power distribution upped to 5.7 megawatts and be filled to the brim again.)

Buying A Datacenter In A Box

By 2011, Microsoft’s IT needs were growing fast as it made acquisitions and started preparing for a massive public cloud buildout, and the datacenter architecture changed again.

“In Generation 4, we learned a few things,” said Bakken. “First, buy the datacenter as a box with a single part number. These integrated modules can either be deployed in a container-like structure, which we call an ITPAC, or a more conventional co-lo design. At the same time we moved away from chiller environments and into chiller-less environments that use outside air for cooling (also known as air-side economization) where it was appropriate. This drove our PUE ratings down to the range of 1.12 to 1.2, below our previous designs and far below the industry.”

This is possible in a town like Quincy, where the temperature rose above 85 degrees Fahrenheit on only 21 days in the past year.

With the ITPACs, which were manufactured by two different vendors, Microsoft told the suppliers that it wanted containerized datacenter units that would take in 440-volt power, have a plug for the network, and run several thousand servers per pod. Microsoft did not care how they did it so long as the units met the specs and could be rolled into the datacenter. As it turns out, the two vendors came up with very different designs: one created a pod with a shared hot aisle in the center and two rows of gear on the outside, and the other created a pod with separate hot and cold aisles. These pods were lifted into a datacenter with a crane and the roof was put onto the building afterwards, and interestingly, the first day that they went in it snowed like crazy in Quincy and the snow blew in through the side walls and surrounded the pods. (Microsoft eventually put up baffle screens to keep the rain and the snow out but let the wind in.)

Over the years, Microsoft has done a few tweaks and tunings with the Generation 4 datacenters, including getting rid of large-scale uninterruptible power supplies and putting small batteries inside servers instead. After realizing what a hassle it was to have a building with walls and a roof, and that it took too long to get the ITPAC pods into the facility, Microsoft went all the way and got rid of the building. With one Generation 4 facility that was built in Quincy, the pods stand in the open air and the network and power are buried in the concrete pad they sit upon. And with a Generation 4 facility in Boydton, Virginia, Microsoft deployed adiabatic cooling, where air is blown over water-soaked screens in the walls of the datacenter and is cooled as the water evaporates, rather than relying on outside air alone. (Facebook uses variations of this adiabatic cooling technology in its Forest City, North Carolina facility.)
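The cooling described here works by evaporating water into the incoming air, and a common textbook way to estimate how cold that air gets is the saturation-effectiveness model for a direct evaporative cooler. The sketch below applies that model; the 0.85 effectiveness and the sample temperatures are assumptions for illustration, not Microsoft's design figures.

```python
def evaporative_supply_temp(dry_bulb_f: float, wet_bulb_f: float,
                            effectiveness: float = 0.85) -> float:
    """Supply-air temperature from a direct evaporative cooler.

    Saturation-effectiveness model:
        T_supply = T_dry - effectiveness * (T_dry - T_wet)
    The air approaches, but never drops below, the wet-bulb temperature.
    """
    if not 0.0 <= effectiveness <= 1.0:
        raise ValueError("effectiveness must be between 0 and 1")
    if wet_bulb_f > dry_bulb_f:
        raise ValueError("wet-bulb temperature cannot exceed dry-bulb temperature")
    return dry_bulb_f - effectiveness * (dry_bulb_f - wet_bulb_f)


# Hypothetical hot, dry afternoon: 95 F dry bulb, 65 F wet bulb
print(round(evaporative_supply_temp(95.0, 65.0), 1))  # 69.5
```

Run with the same 95 degree dry bulb but a muggy 80 degree wet bulb, the same model gives only about 82 degrees, which is why this kind of cooling pays off most in dry climates.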

All told, the several generations of datacenter gear in Quincy take up about 70 acres today. But the Generation 5 facility that is being built across the main drag on the outskirts of town has 200 acres in total, and is a testament to the growth that Microsoft is seeing in its cloudy infrastructure from the Azure public cloud and its own consumer-facing services.

“We have taken all of the learnings from prior generations and put them into Generation 5,” said Bakken. “I am standing up four versions of infrastructure that we call maintenance zones. They are identical to each other and I am going to stripe some fiber between them to have great communication. I am on cement floors, I am using adiabatic cooling, and I don’t have chillers.”

While Bakken was not at liberty to say precisely how the IT gear will be organized inside of the datacenter – and the facility was still under construction so we were not permitted to enter – he did say that Microsoft was growing so fast now that it needed to scale faster than was feasible using the “white box datacenter” ITPACs it had designed. Presumably Microsoft is using some kind of prefabbed rack, as you can see in the image below from inside the Generation 5 facility:

The racks that Microsoft is using are obviously compatible with its 19-inch Open Cloud Server chassis, and Bakken said that the Generation 5 datacenters would use 48U (84-inch) tall racks. It looks like there are 20 racks to a row by our count, and a 48U rack holds four of the 12U Open Cloud Server enclosures, which works out to 96 server nodes per rack. The funny bit is that the machines on the left of this image look like the Open Cloud Server, but the ones on the right do not. They look like a different modular blade form factor, and perhaps Microsoft is cooking up a different Open Cloud Server to donate to the Open Compute Project? That looks like seven vertical nodes in a 4U enclosure to our eyes, which would yield a maximum of 84 sleds per rack. (These don’t appear to be packed that densely and have other gear in the racks.)
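The rack math above is easy to reproduce. This sketch assumes a standard 1.75-inch rack unit, 24 server blades per 12U Open Cloud Server chassis (which is what the 96-nodes-per-rack figure implies), and racks filled entirely with compute enclosures, so treat the results as upper bounds; real racks also hold switches and power gear.

```python
INCHES_PER_U = 1.75          # standard EIA rack unit height
OCS_CHASSIS_U = 12           # Open Cloud Server chassis height
OCS_BLADES_PER_CHASSIS = 24  # implied by the 96-nodes-per-rack figure
RACKS_PER_ROW = 20           # our count from the photo


def rack_height_inches(rack_u: int) -> float:
    return rack_u * INCHES_PER_U


def nodes_per_rack(rack_u: int, enclosure_u: int, nodes_per_enclosure: int) -> int:
    """Upper bound: fill the rack with whole enclosures and nothing else."""
    enclosures = rack_u // enclosure_u
    return enclosures * nodes_per_enclosure


print(rack_height_inches(48))                  # 84.0
ocs = nodes_per_rack(48, OCS_CHASSIS_U, OCS_BLADES_PER_CHASSIS)
print(ocs, ocs * RACKS_PER_ROW)                # 96 1920
print(nodes_per_rack(48, 4, 7))                # 84 (the unidentified 4U enclosures)
```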

The first Generation 5 maintenance zone has been released to engineering to be populated by servers. The Generation 5 datacenters have lots of power, at 10.5 megawatts each, 42 megawatts for a maintenance zone, and 168 megawatts for the theater of four zones, which Microsoft could build some day but has not yet committed to building.
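The Generation 5 power figures stack up as follows. Four datacenters per maintenance zone is inferred from 42 megawatts divided by 10.5 megawatts, and the comparison with the 27.6 megawatts drawn by Columbia 1 and 2 is simple division, not a Microsoft projection.

```python
DATACENTER_MW = 10.5
DATACENTERS_PER_ZONE = 4    # inferred: 42 MW per zone / 10.5 MW per datacenter
ZONES = 4                   # the full "theater", which Microsoft has not committed to
COLUMBIA_1_AND_2_MW = 27.6  # Generation 2 total draw quoted earlier

zone_mw = DATACENTER_MW * DATACENTERS_PER_ZONE
theater_mw = zone_mw * ZONES
print(f"per zone: {zone_mw} MW, full theater: {theater_mw} MW")
print(f"theater vs. Columbia 1 and 2: {theater_mw / COLUMBIA_1_AND_2_MW:.1f}x")
# per zone: 42.0 MW, full theater: 168.0 MW
# theater vs. Columbia 1 and 2: 6.1x
```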

“We have put some provisions in place to allow new ways and new technologies relating to power generation and those types of things to be integrated into our environment,” Bakken explained. “So when thin film and batteries and all of that is viable, or we put solar panels on the roof, we are good to go. Let servers get small or big, let networks do SDN things. Whatever. We have thought that through. We can still run within the same datacenter infrastructure. And the way that I am doing that is that I am putting in a common signal backplane for my entire server environment, which shares power, cooling, and network.”

Software defined networking is a key component of the Generation 5 facilities, too, and Microsoft has been catching up with Google and keeping pace with Facebook (and we presume with AWS) in this area.

“SDN is a very big deal,” said Bakken. “We are already doing load balancing as a service within Azure, and what I really want to be able to do is cluster-based networking, which means I want to eliminate static load balancers, switches, and routers as devices and go to a standard industry interconnect and do those services in software. This is a big thing in my environment because networking makes up about 20 percent of the capital expenses because I have to replicate those frames in multiple locations.”
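Bakken did not describe how Azure's software load balancing works, so the following is only a generic illustration of doing load balancing in software rather than in a static appliance: each flow's 5-tuple is hashed to pick a backend server. It uses rendezvous (highest-random-weight) hashing, a standard technique chosen here because removing a failed backend only moves the flows that were on it. The addresses and the pool are hypothetical.

```python
import hashlib
from typing import Sequence, Tuple

# (source IP, source port, destination IP, destination port, protocol)
FiveTuple = Tuple[str, int, str, int, str]


def _score(flow: FiveTuple, backend: str) -> int:
    """Pseudo-random weight for a (flow, backend) pair, stable across processes."""
    key = "|".join(str(field) for field in flow) + "|" + backend
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


def pick_backend(flow: FiveTuple, backends: Sequence[str]) -> str:
    """Rendezvous hashing: every packet of a flow lands on the same backend,
    and dropping a backend only remaps the flows that were assigned to it."""
    if not backends:
        raise ValueError("no healthy backends")
    return max(backends, key=lambda backend: _score(flow, backend))


# Hypothetical pool of backends behind one virtual IP
pool = ["10.0.0.1", "10.0.0.2", "10.0.0.3"]
flows = [("203.0.113.7", port, "198.51.100.10", 443, "tcp") for port in range(50000, 50100)]
before = {flow: pick_backend(flow, pool) for flow in flows}
after = {flow: pick_backend(flow, pool[1:]) for flow in flows}  # 10.0.0.1 fails
moved = [flow for flow in flows if before[flow] != after[flow]]

# Only flows that were on the failed backend move
assert all(before[flow] == "10.0.0.1" for flow in moved)
print(f"{len(moved)} of {len(flows)} flows moved")
```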

This is consistent, by the way, with the cost of networking at Facebook and at the largest supercomputing centers. (We will be talking to Microsoft about its SDN setup and its global network in a separate story.)

Microsoft is not bragging about PUE for the Generation 5 facilities yet, but it looks like it will be in the same ballpark as the best Generation 4 facilities (see the historical chart above), and we presume Microsoft is going to be able to push it down some. The other metric Microsoft is watching very closely as it warps higher into hyperscale is cost. Ultimately, that is what all of these changes in datacenter designs over the past decade are really all about. We can see the progression in datacenter efficiency over time, and so we asked Bakken how costs have changed over time.

“It comes down exponentially,” Bakken told us referring to the costs moving from Generation 1 through the phases of Generation 4 datacenters. “You are buying a box, you are not standing up a building, so it changes everything. With the new datacenters, all that I have to do is change the filters, that is really the only maintenance I have. And we have moved to a resiliency configuration where I put more servers in each box than I need and if one breaks, I just turn it off and wait for the next refresh cycle. The whole dynamic changes with the delivery model of the white box. So we learned quite a bit there, but now we have got to really scale.”
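Bakken's fail-in-place approach, installing more servers in each box than needed and switching off the ones that die, raises a sizing question: how many spares does a box need to last until the next refresh? The sketch below answers it with a simple binomial model. Independent failures and the per-server failure probability are assumptions for illustration; Microsoft did not say how it sizes its spares.

```python
from math import comb


def prob_enough_servers(installed: int, needed: int, p_fail: float) -> float:
    """Probability that at least `needed` of `installed` servers are still alive
    at the end of a refresh cycle, assuming each fails independently with p_fail."""
    p_ok = 1.0 - p_fail
    return sum(
        comb(installed, alive) * p_ok**alive * p_fail**(installed - alive)
        for alive in range(needed, installed + 1)
    )


def spares_needed(needed: int, p_fail: float, target: float = 0.999) -> int:
    """Smallest number of extra servers that meets the target survival probability."""
    if not 0.0 <= p_fail < 1.0 or not 0.0 < target < 1.0:
        raise ValueError("need 0 <= p_fail < 1 and 0 < target < 1")
    spares = 0
    while prob_enough_servers(needed + spares, needed, p_fail) < target:
        spares += 1
    return spares


# Hypothetical: a box must keep 96 servers running, and each server has a
# 5 percent chance of failing before the next refresh.
print(spares_needed(96, 0.05))
```

Raising the failure probability or the target pushes the spare count up; the point is that fail-in-place turns repair work into a provisioning calculation.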

And that is not a technology problem so much as a supply chain problem. And what seems clear is that racks beat ITPACs when speed matters. Bakken said that he had to grow the Microsoft network – meaning literally the network and the devices hanging off it – exponentially between now and 2020. “I have got to expand, and it is almost a capacity plan that is not a hockey stick but a flag pole when you look at certain regions,” he said. “The problem I have right now? It is supply chain. I am not so worried about technology. We have our Open Cloud Server, which I think is very compelling in that it offers some real economic capabilities. But I have got to nurture my supply chain because traditionally we bought from OEMs and now we are designing with ODMs so we can take advantage of prices and lower our overall costs. So I am moving very, very quickly to build out new capacity, and I want to do it in a very efficient and effective way and it is really about the commoditization of the infrastructure.”

This further and relentless commoditization is key to the future of the public cloud – all public clouds, at least those that are going to survive. The way that Bakken and his colleagues at Microsoft see it, the cost of using cloud is going to keep coming down, and presumably fall below the cost of on-premises infrastructure, because the big clouds will do it better, cheaper, and faster. But, as Bakken tells IT managers, with so many connected devices and cross-connected services, the amount of capacity IT shops buy is going to go up in the aggregate and hence net spending will rise. That is the big bet, and it is why Microsoft, Amazon, and Google are spending their many billions most quarters. Time will tell if they are right.

This story has been updated to reflect new information supplied by Microsoft.

Gosh these datacentre pictures look depressing. They look like any other generic large scale industrial or logistic complex structure. What happened to the fancy days of IT?

The glass house where you showed off your data processing might became the warehouse where you put exaints of compute and exabytes of storage.

“Each of the datacenters has five rooms with 18 rows of racked up gear per row”

Is that really what you meant to say? Maybe you meant “Each of the datacenters has five rooms with 18 rows of racked up gear per room”

Er, that’s not adiabatic cooling. It’s evaporative cooling. If anything, traditional phase-change compressor-based chillers would be “adiabatic”