The Inevitability Of FPGAs In The Datacenter

You don’t have to be a chip designer to program an FPGA, just like you don’t have to be a C++ programmer to code in Java, but it probably helps in both cases if you want to do them well. …

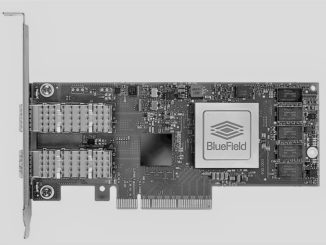

The consensus is growing among the big datacenter operators of the world that CPU cores are such a precious commodity that they should never do network, storage, or hypervisor housekeeping work but rather focus on the core computation that they are really acquired to do. …

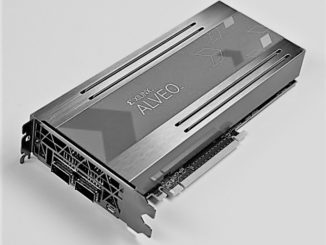

Accelerators of many kinds, but particularly those with GPUs and FPGAs, can be pretty hefty compute engines that meet or exceed the power, thermal, and spatial envelopes of modern processors. …

There is a battle heating up in the datacenter, and there are tens of billions of dollars at stake as chip makers chase the burgeoning market for engines that do machine learning inference. …

Technologies often start out in one place and then find themselves in another. …

Sometimes, if you stick around long enough in business, the market will come to you. …

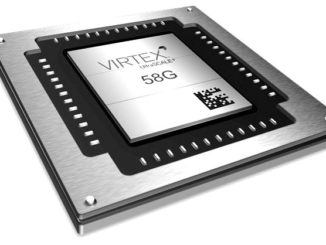

FPGAs might not have carved out a niche in the deep learning training space the way some might have expected, but the low-power, high-frequency needs of AI inference fit the curve of reprogrammable hardware quite well. …

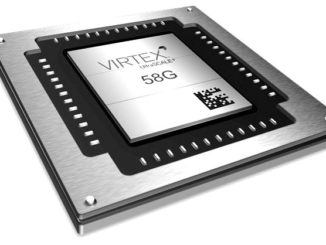

The field programmable gate array space is heating up with new use cases driven by emerging network, IoT, and application acceleration trends. …

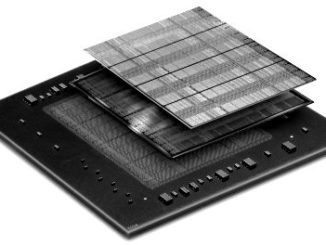

To keep their niche in computing, field programmable gate arrays not only need to stay on the cutting edge of chip manufacturing processes. …

In the U.S. it is easy to focus on our native hyperscale companies (Google, Amazon, Facebook, etc.) …

All Content Copyright The Next Platform