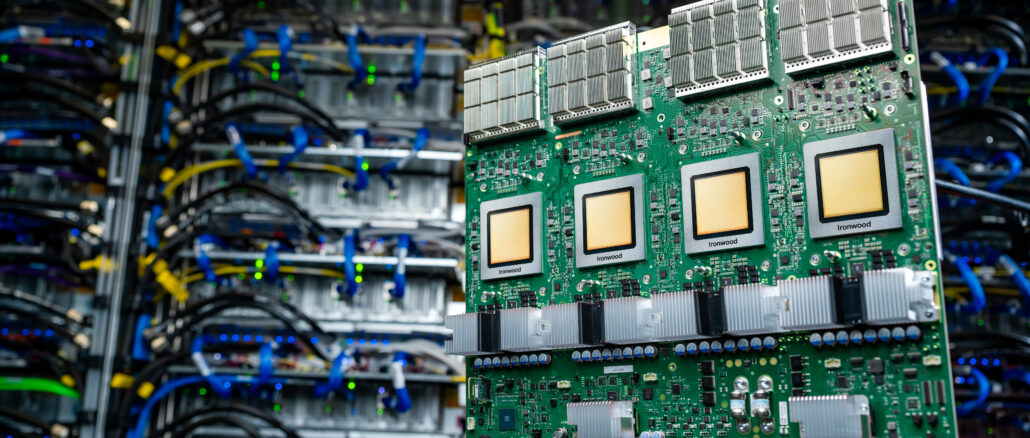

Stacking Up Google’s “Ironwood” TPU Pod To Other AI Supercomputers

In the pre-briefings ahead of last week’s Google Cloud Next 2025 conference, and again during the keynote address, the top brass at Google kept comparing a pod of “Ironwood” TPU v7p systems to the “El Capitan” supercomputer at Lawrence Livermore National Laboratory. …