Rated horsepower for a compute engine is an interesting intellectual exercise, but it is where the rubber hits the road that really matters. We finally have the first benchmarks from MLCommons, the vendor-led testing organization that has put together the suite of MLPerf AI training and inference benchmarks, that pit the AMD Instinct “Antares” MI300X GPU against Nvidia’s “Hopper” H100 and H200 and the “Blackwell” B200 GPUs.

The results are good in that they show the MI300X is absolutely competitive with Nvidia’s H100 GPU on one set of AI inference benchmarks, and based on our own estimates of GPU and total system costs can be competitive with Nvidia’s H100 and H200 GPUs. But, the tests were only done for the Llama 2 model from Meta Platforms with 70 billion parameters. This is useful, of course, but we had hoped to see a suite of tests across different AI models as the MLPerf test not only allows but encourages.

This is a good start for the MI300X and future platforms, and it is a good snapshot for this point in datacenter GPU time.

But by the end of this year – and based on expected prices for Nvidia Blackwell B100 and B200 GPUs – it looks like Nvidia will be able to drop the price/performance boom on AMD MI300X accelerators, and perhaps the AMD MI325X GPU coming later this year, if it wants to. But for the moment, Nvidia may not want to do that. With Blackwell GPUs being redesigned and shipping a few months later than intended and Blackwell GPU demand being well ahead of supply, we expect for their prices to rise and their price/performance many not be any better than for the H100s and H200s as well as the current MI300X and future MI325X. It may all wash out to around the same street price with pricing pressure up depending on the urgency of the situation.

And, the test results do not include the newer – and better – Llama 3.1 70B models from Meta Platforms, which is the one that enterprises are most likely to deploy as they runs proofs of concept and move into productions with their AI applications.

Also interestingly, AMD has yet to publish MLPerf test results for AI training runs, which everyone obviously wants to see. We expect the MLPerf Training v4.1 results to come out sometime in the fourth quarter, very likely just ahead of the SC24 supercomputing conference. The MLPerf Training v4.0 benchmark test results were announced back in June, and AMD did not publish any results then.

A Focus On Inference

There has been chatter that AMD has been getting some big business selling the MI300X to the hyperscalers and cloud builders for AI inference workloads, so we understand why AMD waiting until the MLPerf Inference v4.1 benchmarks were release last week.

Our analysis of the latest MLPerf inference results certainly shows that the MI300X can hold its own against the H100 when it comes to performance and value for dollar for the Llama 2 70B model running inference. But it has trouble competing with the H200 with its fatter 141 GB of HBM memory and the higher bandwidth that it has compared to the H100. And if the pricing is as we expect on Blackwell, then the MI325 coming out later this year will have to have more memory, more bandwidth, and an aggressive price to compete for AI workloads.

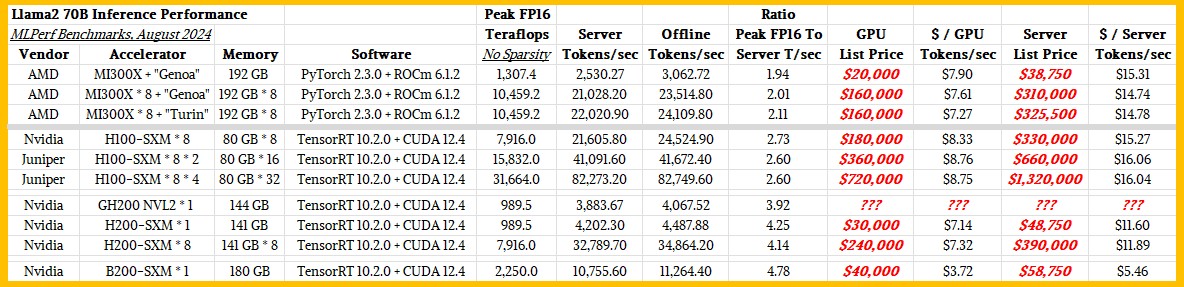

Here are the MLPerf inference benchmark results we extracted from the v4.1 run that was published last week:

There are a lot of server makers who have run tests on various Nvidia GPUs, but we have taken the ones run by Nvidia including the recent test on Llama 2 70B for a single Blackwell B200 SXM processor, which Nvidia discussed here in this blog. We extracted all of the Nvidia results, and also added the two results that switch maker Juniper Networks (soon to be acquired by Hewlett Packard Enterprise) did on HGX H100 clusters with two and four nodes with a total of 16 and 32 H100s respectively. AMD tested a standard universal based board (UBB) in a server node equipped with a pair of its current “Genoa” Epyc 9004 series processors and eight of the Antares MI300X GPUs and also tested a machine that swapped out the Genoa CPUs for the impending “Turin” Epyc 9005 series CPUs that are expected to launch in the next month or so.

AMD also presented The Next Platform with a chart that showed the performance of one of the MI300X GPUs being tested on the Genoa box to show the scaling of the GPUs within a node:

Let’s talk about performance and then we can talk about bang for the buck.

For performance, what we want to know is how much of the potential peak floating point performance embodied in these AMD and Nvidia devices is actually generating tokens in a Llama 2 inference run. We can’t know that because GPU utilization and memory utilization rates are not part of the benchmarks. But we can sure infer that something is up.

The AMD GPUs are configured with the PyTorch 2.3.0 framework from Meta Platforms and the ROCm 6.1.2 libraries and runtimes from AMD, its analog to Nvidia’s CUDA stack. Peak FP16 performance on the tensor cores in the MI300X is 1,307.4 teraflops, but running in server mode (meaning with a kind of randomized querying we see in the real world), a single MI300X generated 2,530.7 tokens per second running the Llama 2 70B model. That is a ratio of 1.94 between Llama 2 performance and hypothetical peak flops. As the machines scale up to eight MI300X device and switch to faster CPUs, that ratio rises a little bit, through 2.01 and up to 2.11.

We have been saying that the H100 GPUs, with a mere 80 GB of HBM capacity and lower bandwidth to boot, were underprovisioned on the memory front because of the scarcity of HBM3 and HBM3E memory. And we think the same thing is happening with the MI300X. (You got to war with the HBM army you have, to paraphrase former US Defense Secretary Donald Rumsfeld.) Everybody is underprovisioning their GPUs not only so they can sell more devices but also because it is hard to cram enough stacks of HBM, at a very tall height of stacks, close to the GPU chips (or chiplets) and have the package manufacturing process yield.

If you look at the H100 systems that Nvidia tested, the ratio of server tokens per second to peak FP16 flops is 2.6 or 2.73, which is better than what AMD is seeing and which might come down to software tuning. The CUDA stack and the TensorRT inference engine have had a lot of tuning on H100, and now you see why AMD was so eager to buy AI consultancy Silo AI, a deal that just closed a few weeks ago.

With the H200, which has its HBM memory boosted to 141 GB and its bandwidth boosted from 3.35 TB/sec to 4.8 TB/sec by switching to HBM3E stacks, you see that this ratio goes up to 4.25 and Nvidia’s own benchmarks showed that AI workloads increased by a factor of 1.6X to 1.9X just by adding that memory capacity and bandwidth on exactly the same Hopper GH100 GPU.

It is hard to guess what memory capacity and bandwith an MI300X should have to balance out its floating point performance when it comes to inference (and probably also training) workloads, but our hunch is the MI325X, with 6 TB/sec of bandwidth (compared to 5.3 TB/sec for the MI300) and 288 GB of HBM3E (compared to 192 GB of HBM3) is a big step in the right direction. It looks like this MI325X will have the same 1.31 petaflops of FP16 oomph, by the way, as we talked about back in June when the MI325X was previewed. The MI325X does not push the performance envelope directly, in terms of peak flops. And now we see why.

We do expect a flops boost with the MI350 series next year, which is supposed to have a new iteration of the CDNA architecture – CDNA 4 – that is different from the CDNA 3 architecture used in the Antares MI300A, MI300X, and MI325X. The MI350 moves to 3 nanometer processes from Taiwan Semiconductor Manufacturing Co and adds FP6 and FP4 data types. Presumably there will be an all-GPU MI350X version and maybe an MI350A version with Turin CPUs cores on it.

One thing. You might be inclined to believe that the difference in performance between the AMD MI300X and the Nvidia H100 was due the coherent interconnects to lash the GPUs into a shared memory complex on their respective UBB and HGX boards. That would be Infinity Fabric on the AMD machine – a kind of PCI-Express 5.0 transport with HyperTransport memory atomics added on top – and NVSwitch on the Nvidia machine. That’s 128 GB/sec of bi-directional bandwidth per GPU for Infinity Fabric versus 900 GB/sec for NVLink 4 ports and NVSwitch 3 switches, and that is a factor of 7X higher bandwidth on a memory coherent node fabric in favor of Nvidia.

This may be part of the performance difference for the Llama 2 workloads, but we think not. Here is why.

A single MI300X has, at 1.31 petaflops, 32.1 percent higher peak performance than either the H100 or H200 at 989.5 teraflops at FP16 precision with no sparse matrix rejiggering double pumping the throughput. The MI300X has 2.4X the memory, and yet only does about 7 percent more Llama 2 inference work as an H100 and only 60 percent of the inference load of an H200. This device will do about 23.5 percent of the work of a Blackwell B200 with 180 GB of memory, according to the tests run by Nvidia.

As far as we can tell, the B200 will also be memory constrained, which is why there will be a memory upgrade in 2025 for the B200 and maybe the B100, according to the Nvidia roadmap released in June, and if history is any guide, it will have somewhere around 272 GB of capacity. The H200 is getting memory balance ahead of the MI300X, which will be getting memory balance in the MI32X later this year and will beat the B200 “Blackwell Ultra” to memory balance by maybe six to nine months.

If we were buying GPUs, we would have waited for Hopper Ultra (H200) and Blackwell Ultra (B200+) and Antares Ultra (MI325X). You get your money’s worth out of a datacenter GPU with more HBM.

But you can’t always wait, of course. And you go to the GenAI war with the GPUs that you have.

Just because having fast and fat interconnects between the nodes might not matter for Llama 2 70B workloads doesn’t mean it doesn’t matter for larger models or for AI training more specifically. So don’t jump to the wrong conclusion there. Will be have to see when AMD runs AI training benchmarks in the fall.

And, of course, AMD is running Llama inference at FP8 resolution on Antares and Nvidia is running it at FP4 resolution on Blackwell, so that is some of the big jump between these two when it comes to observed LLama 2 inference performance. But the Hopper GPUs are running at FP8 resolution like Antares, so that is not it.

Where The Money Meets The Use Case

That brings us to price/performance analysis for MI300X versus the Nvidia Hopper and Blackwell devices.

Jensen Huang, Nvidia’s co-founder and chief executive officer, said after the Blackwell announcement earlier this year that the devices would cost $35,000 to $40,000. Hopper GPUs, depending on the configuration, probably cost $22,500, and this is consistent with the statement that Huang made on stage in 2023 that a fully populated HGX H100 system board cost $200,000. We think an H200 GPU, if you could buy it separately, would cost around $30,000. We think the MI300X costs around $20,000, but that is an educated guess and nothing more. It depends on the customer and the situation. The street price, depending on the deal, will vary depending on a lot of other factors, like how many the customer is buying. As Huang is fond of saying, “The More You Buy, The More You Save,” but this is just a volume economics statement in this case, not the additional knock-on network effects of accelerated processing.

Our rough guess is that to turn those GPUs into servers – with two CPUs, lots of main memory, network cards, and some flash storage – would cost around $150,000, and one that can plug in either the HGX board from Nvidia or the UBB board from AMD to build an eight-way machine. In the cases where we show a single GPU’s performance, we are allocating the cost of one GPU and one-eighth the cost of the hypothetical GPU system configuration.

To that end, we added in the cost of the GPUs and the full systems including those GPUs to the performance data we extracted from the MLPerf Inference v4.1 tests run with the Llama 2 70B foundation model.

As you can see, the MI300X that is out of balance like the H100 and delivers a little better bang for the buck. Working backwards from the eight-way H100 systems, we think a single H100 would be able to push maybe 2,700 tokens per second in server mode (not offline mode where the GenAI queries are batched up on the input and the output for a little more efficiency), which is as we say about 7 percent better than the MI300X pushes on Llama 2 70B inference. The H200 with its more than 2X memory of 141 GB boosts inference performance by 56 percent and the price of the GPU only goes up by 33 percent and so the ang for the buck at the GPU and system level improves.

If the B200 costs $40,000, as Huang indicated, then the cost per unit of inference on the Llama 2 70B test will be cut almost in half at the GPU level and will be cut by slightly more than half at the system level (because we are keeping the base wraparound server cost the same).

Given the shortages of Blackwells and the desire to fit more AI compute in a given space and in a given thermal envelope, we think Nvidia could charge $50,000 per GPU – which is something we have been expecting all along.

A lot will depend, of course, on how AMD prices the MI325 later this year and how many AMD can get its partners to manufacture.

I expect the MI325X to surpass the H200 and the B100 (at worst on par?). The B200 having higher wattage consumption will be hard to beat.

Like you said, if AMD is pricing correctly the MI325X (around $30K?) it can be a great $/perf card and fit perfectly in the market.

I expect both AMD and NVIDIA to be supply constraints though, so little relief on $/perf for the H100/H200.

The Silo and ZT acquisition will probably help AMD a lot on the software side, software optimization is one thing that AMD has been doing really well recently if given some time.

Lisa said in a previous interview that she has a lot of supply from the manufacturer; we will see soon if that is true.

Last one, I hope AMD will launch a 1000W MI350X (or watercooled one), otherwise, NVIDIA will own the higher end of this market until Q1 2026 at least.

Magnificent analysis! The ratio of Server-Tokens-per-second (perf on workload) to peak-FP16-Teraflops (max theoretical perf) is a wonderful tool to use here. As you astutely note, it gives a rating of how well-balanced the architecture is (eg. memory-wise, as in H200 vs H100), and how well the software stack manages to take advantage of that arch (eg. PyTorch+ROCm vs TensorRT+CUDA). Additionally, in Blackwell, the chip (and/or model weights, and/or software) have the possibility of FP4 computation that can boost perf by 2x vs FP8 (possibly 4x vs FP16), and this is not available in either MI300X/325X nor H100/200. The FP4 and FP6 in next year’s MI350X should give it the wherewithal to address that B200 feature.

It’s great to see AMD finally coming out of the MLPerf closet with results for the MI300 family. Beyond pricing for an AI perf similar to the H100/200 competition, I think that their value proposition also includes the possibility of running cloud-HPC FP64 workloads at the highest performance, which can help hedge one’s bets, if desired.

Alrighty then, I’ll bite … so, from that Token-to-PeakFP16 ratio:

1) H100-SXM*8 to H200-SXM*8 gives 4.14/2.73 = 1.5x advantage to H200 from 76% more HBM in its saddlebags;

2) H200-SXM*1 to B200-SXM*1 is a 4.78/4.25 = 1.1x advantage for taming the Blackwell bronco (with 28% more HBM), and;

3) MI300X*8 to H100-SXM*8 means a 2.73/2.11 = 1.3x advantage for TensorRT+CUDA vs PyTorch+ROCm, which seams believable at this here outside leg juncture of the barrel racing cloverleaf …

And if that’s a sensible enough hold up for you, I reckon horses ain’t quite left the barn yet, AMD’s got its work cut-out for itself, it’s in that there BBQuda secret sauce, just got to ROCm’it and SOCm’it, real good now!

The article is really well thought-through all the way to the $-per-token-rate metric, where it looks good for both parties in the MI300X vs H100 contest ($15). We’ll have to see where it goes for MI325X vs H200 ($12), and MI350X vs B200 ($5), if those are the correct upcoming trial pairings.

In all likelihood, the ZT and Silo impacts (mentioned by Patrice and the article) will help drive AMD’s Salt-n-Pepa Push-It-Real-Good performance moves to further take-on the heretofore undisputed dancefloor leader in that high-stakes glitter competition!