Why AMD Spent $4.9 Billion To Buy ZT Systems

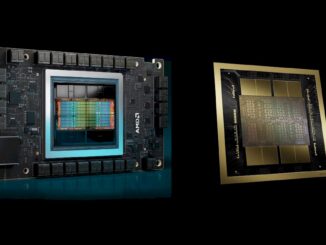

If AMD is willing and eager to spend $4.9 billion to buy a systems company – that is more than its entire expected haul for sales of datacenter GPUs for 2024 – then you have to figure that acquisition is pretty important. …