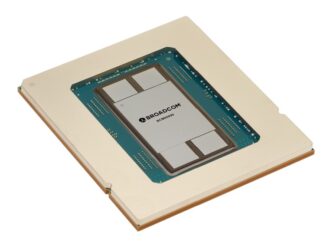

Broadcom Tries To Kill InfiniBand And NVSwitch With One Ethernet Stone

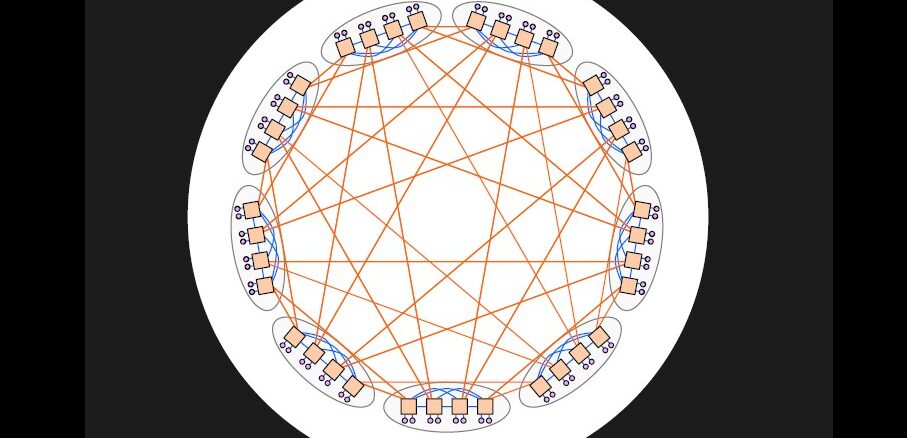

InfiniBand was always supposed to be a mainstream fabric spanning PCs, servers, storage, and networks, but that effort collapsed, and its remains found a second life at the turn of the millennium as a high-performance, low-latency interconnect for supercomputers running simulations and models. …