China has lots of coal, but it does not have a lot of GPUs or other kinds of tensor and vector math accelerators appropriate for HPC and AI. And so, as it has done with exascale-class HPC supercomputers, it is going to trade density and power efficiency for scale and just get the job done with big AI workloads.

Companies doing AI in China have little choice, especially with a trade war going on between the United States and China and with Nvidia unable to sell even crimped versions of its recent vintage “Hopper” GPUs into the Middle Kingdom because of US export controls. And to make matters harder for China, those export controls reach into Taiwan Semiconductor Manufacturing Co for chips that get sold into China, and they also restrict sales of the machinery used to make advanced chips with extreme ultraviolet (EUV) lithography, which etches ever-smaller and ever-taller transistors onto silicon wafers.

So the Chinese government has no choice but to make do with the accelerators it can design indigenously, etch them in the processes that Semiconductor Manufacturing International Corp (SMIC) can bring to bear, and pair them with whatever HBM memory it can get its hands on. It doesn’t hurt to have companies like DeepSeek figuring out ways to have AI models do more with less, too.

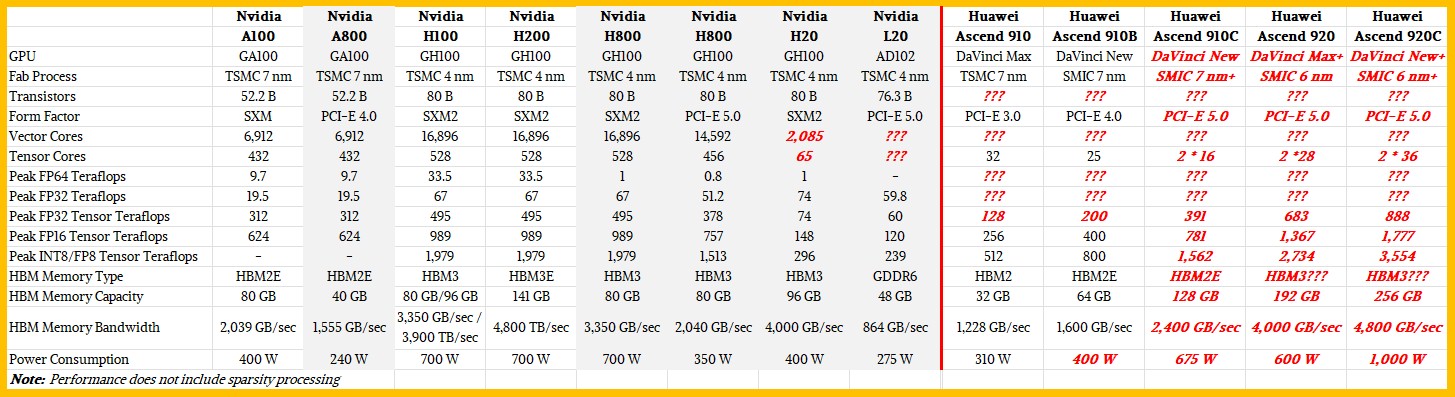

On April 9, Nvidia and AMD were slapped with yet more export controls, this time on their already crimped H20 and MI308 GPU accelerators. That leaves Chinese chip maker Huawei Technologies’ HiSilicon division in the pole position in China with its Kunpeng Arm server processors and its Ascend AI accelerators, the latter of which we discussed in detail last August when the company was putting out hints about its Ascend 910C accelerators. It also made this the perfect time for Huawei to start talking about its next generation Ascend 920 and 920C accelerators.

We wish that we could get the full speeds and feeds of these devices, as well as of the prior generation 910C devices that started shipping late last year. There is some debate about who is etching the 910C. Rumors have it that TSMC baked a bunch of them before it was slapped with export controls, even though the official word is that only the 910 was made by TSMC and that the 910B and the 910C were made by SMIC using tweaks of its 7 nanometer non-EUV immersion lithography process. The word on the street is also that the forthcoming 920 and 920C are being etched with a tweaked 6 nanometer process from SMIC that still does not use EUV and instead relies on a stupid number of layers and patterning steps in the masks. (It takes a supercomputer to make masks with multiple layers of patterning, so this is not trivial.)

We are not claiming any inside information about what HiSilicon has done with the 910C that is shipping now. It is at the heart of Huawei’s CloudMatrix 384 system, which, as the name suggests, links 384 of the 910C accelerators in an all-to-all configuration to create a shared memory system at row scale. That is akin to Nvidia’s rackscale DGX GB200 NVL72, which links 144 GPU dies in 72 sockets into a shared memory system. What we are doing, as usual, is trying to fill in the substantial gaps in information about this Ascend family of XPUs and the system that uses them.

Let’s start with the feeds and speeds of the “Ampere” and “Hopper” GPUs and the variants of these that have been crimped so they would not be trapped by US export controls on device memory bandwidth and compute capacity, which are barriers designed to keep China behind the US in the HPC and AI sectors. The export controls will not delay China’s advances on these fronts. Chinese researchers and system architects will just learn how to scale larger numbers of weaker devices for AI training and inference, just as they did to beat the US to exascale systems by more than two years.

Here are the Nvidia GPUs and their Chinese variants:

No, we are not including Nvidia “Blackwell” GPUs in the comparisons above, because there is no way in hell that the Trump administration is going to let that device be sold into China, no matter how many factories Nvidia encourages its partners to build in Texas and Arizona. Each of the two top-end Blackwell B300 accelerators paired with a single “Grace” CG100 CPU in the GB300 NVL72 system that will ship later this year has about 3.4X the aggregate peak FP16 performance of the Hopper H100 (so that is 6.8X the FP16 oomph per node), and the Ascend 920C aimed at transformer and chain-of-thought models will have about 1.8X that of the H100. But as we say, there is not going to be a Blackwell sold in China, so that comparison is moot. And there won’t be a “Rubin” or “Feynman” sold there either, in 2026 and 2028 respectively, unless something radical happens in the political world. You might be able to get a Reuben sandwich or Richard Feynman’s wonderful books in Hong Kong, though. . . . You could even have both at the same time.
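The per-node figure is just the per-device multiple times the number of B300s in a GB300 node. Here is a minimal sketch of that arithmetic. It assumes the quoted multiples are “times the H100” ratios on peak FP16 (our reading, not vendor specs), assumes two B300s per node as described above, and ignores sparsity, clocks, and achieved efficiency. The `devices_to_match` helper is a hypothetical illustration of how many lower-rated Ascend 920C devices it would take to equal one node on paper.

```python
import math

# Peak FP16 multiples relative to one Hopper H100 (H100 = 1.0).
# These are the approximate ratios quoted in the text, not vendor specs,
# and they ignore sparsity, clocks, and real-world efficiency.
B300_VS_H100 = 3.4
ASCEND_920C_VS_H100 = 1.8

# A GB300 NVL72 compute node pairs two B300s with one Grace CPU.
B300_PER_NODE = 2


def node_multiple(per_device: float, devices_per_node: int) -> float:
    """Peak FP16 of one node expressed in H100 units."""
    return per_device * devices_per_node


def devices_to_match(target: float, per_device: float) -> int:
    """Hypothetical helper: fewest whole devices whose summed peak meets target."""
    return math.ceil(target / per_device)


if __name__ == "__main__":
    node = node_multiple(B300_VS_H100, B300_PER_NODE)
    print(f"GB300 node vs one H100: {node:.1f}X")
    print(f"Ascend 920C devices to match one node: "
          f"{devices_to_match(node, ASCEND_920C_VS_H100)}")
```

On peak numbers alone, four 920Cs cover one GB300 node, which is exactly the kind of scale-out trade the Chinese side is making.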

If you look at the comparison charts, it is pretty clear that an H20 is not good for much, and it is sad, really, that there was so much fuss over sending it to China. The H800 was much more useful: it was only nominally crimped when it came to memory bandwidth, though it was almost totally crimped for FP64 work (sorry, no nuclear simulations for you, China!).

What is also obvious is that the Ascend 910C AI accelerator is on par with the Nvidia H800 when it comes to raw floating point performance, and it beats the H800 handily on HBM memory capacity and bandwidth. The CloudMatrix 384 built from it delivers over 300 petaflops at FP16 precision in a row of machinery. The currently shipping GB200 NVL72 is rated at only 180 petaflops in a single memory space, and it has half the aggregate HBM memory bandwidth and a little more than a quarter of the HBM memory capacity. The CloudMatrix 384 will burn a lot more power, we think, which is also true of the exascale “Tianhe-3” and “OceanLight” supercomputers in China compared to their American peers, the “Frontier” and “El Capitan” supercomputers. Performance per watt is pretty bad, but the fully burdened cost might not be all that different. China buys more electricity; America buys more density and efficiency.
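To see the density-for-scale trade in numbers, the system totals above can be split per socket. This is a minimal sketch that assumes the 300 petaflops figure is a floor for the CloudMatrix 384, counts the NVL72 as 72 sockets, and assumes each system’s FP16 peak divides evenly across its sockets. The `RowScaleSystem` class is a hypothetical helper for illustration, not any vendor’s tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RowScaleSystem:
    """Hypothetical record for a shared-memory AI system."""
    name: str
    devices: int           # accelerator sockets in the shared memory domain
    fp16_petaflops: float  # aggregate peak FP16 for the whole system

    @property
    def per_device_petaflops(self) -> float:
        # Peak per socket implied by the system total (assumes an even split).
        return self.fp16_petaflops / self.devices


# Aggregate figures quoted in the text; 300 PF is a floor for CloudMatrix 384.
cloudmatrix = RowScaleSystem("CloudMatrix 384", devices=384, fp16_petaflops=300.0)
nvl72 = RowScaleSystem("GB200 NVL72", devices=72, fp16_petaflops=180.0)

if __name__ == "__main__":
    for system in (cloudmatrix, nvl72):
        print(f"{system.name}: {system.per_device_petaflops:.2f} PF per socket")
    system_ratio = cloudmatrix.fp16_petaflops / nvl72.fp16_petaflops
    socket_ratio = nvl72.per_device_petaflops / cloudmatrix.per_device_petaflops
    print(f"System-level ratio (Huawei over Nvidia): {system_ratio:.2f}X")
    print(f"Per-socket ratio (Nvidia over Huawei): {socket_ratio:.2f}X")
```

Run as-is, it reports roughly 0.78 petaflops per Ascend 910C socket against 2.5 petaflops per GB200 socket. Nvidia wins by about 3.2X per socket, while Huawei wins by about 1.67X at the system level by fielding more than five times as many sockets.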

With the Ascend 920 and 920C, China will be able to keep pace with Nvidia at the system level, if the specs pan out as expected. But the question is whether SMIC can really make complex chips at reasonable yield with refined 6 nanometer non-EUV processes and crazy levels of patterning. We are willing to bet that it can, even if it takes a year. And a delay doesn’t much matter, since Huawei can’t sell these chips outside of China and can’t even make enough for China itself, and Nvidia and AMD can’t sell into China anyway. It is as if the AI revolution has two worlds, and all they can do is talk vaguely about their systems and algorithms to goad each other on.
