There is no shortage of top-name – and even lesser-known – companies pursuing the white whale of developing a quantum computer that can run workloads and solve problems that today’s most powerful classical computers simply can’t. A global market is growing around quantum computing, with billions of dollars being spent to build and use these machines.

That said, revenue from quantum computing is still many times smaller than the investment being poured into designing the systems, and there’s still debate over when a true quantum computer will emerge. That’s because building a quantum computer is hard for any number of reasons, a key one being error correction.

Qubits, the building blocks of quantum systems, are highly sensitive to a range of disturbances, like temperature fluctuations, electromagnetic radiation, and vibrations. These can cause errors during computation, so error correction is critical to ensuring the accuracy of the systems’ quantum calculations.

Researchers with Google’s Quantum AI Lab in Santa Barbara, California, say they’ve solved a key challenge to error correction in quantum systems, one that scientists have been trying to crack for three decades. The norm has been that the more qubits you use in a system, the more errors will occur, which blows a hole in another necessity for quantum computing to flourish – the ability to scale the systems.

According to Michael Newman, a research scientist at Google’s lab, error correction involves pulling together many physical qubits and getting them to work together – creating a logical qubit – to correct errors.

“The hope is that as you make these collections larger and larger, there’s more and more error correction, so your qubits become more and more accurate,” Newman told journalists and analysts during a video briefing. “The problem is that as these things are getting larger, there’s also more opportunities for error, so we need devices that are kind of good enough so that as we make these things larger, the error correction will overcome these extra errors we’re introducing into the system.”

In the 1990s, researchers proposed the idea of a “quantum error correction threshold”: if physical qubits are good enough, with error rates below a certain threshold, then making the groups of physical qubits larger drives the logical error rate down rather than up, so systems can grow without their errors growing too. It’s a three-decade goal that had not been reached – until now, according to Google.
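The threshold idea can be seen in a toy classical analogy: copy a bit n times and decode it by majority vote. The sketch below is an illustration under that assumption, not the surface code Google uses, and the helper name `majority_vote_error` is hypothetical. In this toy model the threshold sits at a per-copy error rate of 0.5: below it, bigger groups make the decoded bit more reliable; above it, they make things worse. Quantum codes such as the surface code have a much lower threshold and must also handle phase errors, but the qualitative behavior is the same.

```python
from math import comb


def majority_vote_error(p: float, n: int) -> float:
    """Probability that a majority vote over n independent copies is wrong.

    Each copy flips independently with probability p; the vote fails when
    more than half of the n copies (n odd) are flipped.
    """
    if n % 2 == 0:
        raise ValueError("use an odd number of copies so the vote cannot tie")
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n // 2 + 1, n + 1))


if __name__ == "__main__":
    # One physical error rate below this toy threshold (0.1), one above it (0.6).
    for p in (0.1, 0.6):
        rates = [majority_vote_error(p, n) for n in (1, 3, 5, 7)]
        print(p, [f"{r:.4g}" for r in rates])
```

With p = 0.1 the printed rates shrink as the group grows from 1 to 7 copies; with p = 0.6 they climb, which is the “more opportunities for error” problem Newman describes.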

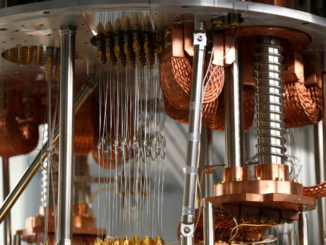

In Nature this week, the company introduced its latest quantum chip, called Willow, the successor to Google’s previous Sycamore family of quantum processors and a key step along the tech giant’s quantum roadmap. In experiments on 72-qubit and 105-qubit Willow processors, Google researchers encoded logical qubits in increasingly large arrays of physical qubits, scaling the grids from 3×3 to 5×5 to 7×7, and each time the logical qubit grew, the error rate declined exponentially.

“Every time we increased our logical qubits – or our groupings – from 3-by-3 to 5-by-5 to 7-by-7 arrays of physical qubits, the error rate didn’t go up,” Newman said. “It actually kept coming down. It actually went down by a factor of two every time we increased the size.”
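Newman’s “factor of two” is a suppression factor per step in grid size, which Google’s paper calls Λ (lambda). As a sketch, assuming you have a logical error rate for each grid size, Λ can be estimated as the geometric mean of the ratios between successive sizes. The error rates below are made-up placeholders chosen to halve at each step, not measured values, and `suppression_factor` is a hypothetical helper.

```python
from math import prod


def suppression_factor(errors_by_distance: dict[int, float]) -> float:
    """Geometric-mean factor by which the logical error rate drops per step
    in grid size (3x3 -> 5x5 -> 7x7). Needs at least two grid sizes."""
    distances = sorted(errors_by_distance)
    ratios = [
        errors_by_distance[small] / errors_by_distance[large]
        for small, large in zip(distances, distances[1:])
    ]
    return prod(ratios) ** (1 / len(ratios))


# Hypothetical logical error rates per cycle for 3x3, 5x5 and 7x7 grids
# (illustrative placeholders, not Google's data).
observed = {3: 0.012, 5: 0.006, 7: 0.003}
print(f"Lambda ~ {suppression_factor(observed):.2f}")
```

A Λ above 1 means the larger grid wins; a Λ below 1 would mean the system is above threshold and scaling up only adds errors.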

Julian Kelly, director of quantum hardware at Google, called error correction “the end game for quantum computers,” and added: “To be clear, there’s really no point in doing quantum error correction if you are not below the threshold. This is really a critical key ingredient towards the future of making this technology real.”

In the research paper in Nature, the researchers wrote that “while many platforms have demonstrated different features of quantum error correction, no quantum processor has definitively shown below-threshold performance.”

They added that fault-tolerant quantum computing requires more than just raw performance. It also needs stability over time, the removal of error sources such as leakage (qubits escaping their computational states), and better performance from the classical co-processors that decode errors.

“The fast operation times of superconducting qubits, ranging from tens to hundreds of nanoseconds, provide an advantage in speed but also a challenge for decoding errors both quickly and accurately,” they wrote.

Key to Willow’s error correction capabilities are the chip’s improved qubits, Kelly said during the briefing, adding that “you can think of Willow as basically all the good things about Sycamore, only now with better qubits and more of them.”

In the Nature paper, the researchers pointed to improvements such as a longer T1 (the “relaxation time,” the characteristic time for a qubit in state 1 to decay to 0) and T2 (the “dephasing,” or coherence, time), which they attributed to better fabrication techniques, participation ratio engineering, and circuit parameter optimization. They also noted improvements in decoding, using two types of offline high-accuracy decoders.
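To see why a longer T1 matters, a common simplified model treats relaxation as exponential decay, so the chance that a qubit in state 1 is still in state 1 after a duration t is exp(−t/T1). The sketch below assumes that model and a hypothetical T1 of 100 microseconds (not a figure from the paper), combined with the tens-to-hundreds-of-nanoseconds operation times the researchers cite. Real gate errors have other contributions, including dephasing (T2), so this isolates only the T1 part; `relaxation_error` is a hypothetical helper.

```python
import math


def relaxation_error(duration_s: float, t1_s: float) -> float:
    """Probability that a qubit prepared in state 1 has decayed to 0 after
    duration_s, under the simple exponential model P_1(t) = exp(-t / T1)."""
    return 1.0 - math.exp(-duration_s / t1_s)


# Hypothetical T1 of 100 microseconds (an assumption for illustration).
T1 = 100e-6

# Operation times spanning "tens to hundreds of nanoseconds".
for gate_ns in (25, 50, 500):
    prob = relaxation_error(gate_ns * 1e-9, T1)
    print(f"{gate_ns:>4} ns -> decay probability {prob:.2e}")
```

For short operations, 1 − exp(−t/T1) is approximately t/T1, so under this model doubling T1 roughly halves the relaxation error of every operation.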

Kelly also stressed the significance of Google building its own fabrication lab, which was announced in 2013 and gave Google researchers more tools and greater capabilities; Sycamore had been built in a shared cleanroom at the University of California, Santa Barbara. Willow was built in the dedicated lab, where redesigned internal circuits helped with T1 improvements and participation ratio engineering.

In addition to testing error correction, Google researchers measured Willow’s performance with the random circuit sampling (RCS) benchmark, which pits quantum systems against classical computers. Hartmut Neven, founder and leader of the Google quantum lab, said in announcing the chip that RCS is the best way to determine whether a quantum system is doing something that couldn’t be done by a classical computer.
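Google’s RCS experiments score a processor with linear cross-entropy benchmarking (XEB): classically compute the ideal probability of each bitstring the chip outputs, then check whether the chip favors the high-probability bitstrings. A minimal sketch, assuming synthetic “ideal” probabilities drawn from the Porter-Thomas distribution in place of a real circuit simulation (the step that becomes classically intractable at Willow’s scale): a perfect sampler scores near 1 and pure noise scores near 0. The helper names, the 14-qubit size, and the seed are illustrative choices, not Google’s pipeline.

```python
import random


def porter_thomas_probs(n_qubits: int, rng: random.Random) -> list[float]:
    """Synthetic stand-in for a random circuit's ideal output distribution:
    normalized exponential weights, which follow Porter-Thomas statistics."""
    weights = [rng.expovariate(1.0) for _ in range(2**n_qubits)]
    total = sum(weights)
    return [w / total for w in weights]


def linear_xeb(ideal: list[float], samples: list[int]) -> float:
    """Linear XEB fidelity: 2^n times the mean ideal probability of the
    sampled bitstrings, minus 1."""
    return len(ideal) * sum(ideal[s] for s in samples) / len(samples) - 1.0


rng = random.Random(7)
n = 14
ideal = porter_thomas_probs(n, rng)
outcomes = range(2**n)

# A noiseless device samples from the ideal distribution ...
perfect = rng.choices(outcomes, weights=ideal, k=20_000)
# ... while a fully depolarized device outputs uniformly random bitstrings.
noise = [rng.randrange(2**n) for _ in range(20_000)]

print(f"ideal sampler XEB ~ {linear_xeb(ideal, perfect):.2f}")
print(f"uniform sampler XEB ~ {linear_xeb(ideal, noise):.2f}")
```

A real noisy chip lands between these two extremes, and the benchmark’s classical cost comes from computing `ideal` for circuits far too large to simulate exactly.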

In 2019, Google said that, on the RCS benchmark, it would have taken the fastest classical computer 10,000 years to do what the first iteration of Sycamore could do. Google last year touted what a later iteration of Sycamore could do. With Willow, a computation that the new chip ran in less than five minutes would take the massive Frontier supercomputer at Oak Ridge National Laboratory, with 1.68 exaflops of performance, 10 septillion years (10²⁵, or a 1 followed by 25 zeros).
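As a quick sanity check on the scale of that claim, the arithmetic below converts 10 septillion years to minutes and compares it with Willow’s run time. It assumes a 365.25-day year and treats “less than five minutes” as exactly five, so the resulting ratio is a lower bound.

```python
MINUTES_PER_YEAR = 365.25 * 24 * 60  # assumes a 365.25-day year

frontier_years = 10 * 10**24  # "10 septillion" = 10 x 10^24 = 10^25
willow_minutes = 5            # "less than five minutes", taken as exactly five

# Because Willow took under five minutes, this ratio is a lower bound.
ratio = frontier_years * MINUTES_PER_YEAR / willow_minutes
print(f"{frontier_years:.0e} years vs {willow_minutes} minutes: ratio of at least {ratio:.1e}")
```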

A key to Willow’s capabilities is not only the improvements over Sycamore, but also the integration of all the parts, Kelly said.

“The qubit quality itself has to be good enough for error correction to then kick in and our error correction demonstration shows that everything works at once at an integrated systems level,” he said. “It’s not just the number of qubits or the T1s or the two-qubit error rate. It’s really showing everything working at the same time, and that’s one of the reasons that this challenge has remained so elusive for so long.”

Neven said in the Willow announcement that “all components of a chip, such as single and two-qubit gates, qubit reset, and readout, have to be simultaneously well engineered and integrated. If any component lags or if two components don’t function well together, it drags down system performance. Therefore, maximizing system performance informs all aspects of our process, from chip architecture and fabrication to gate development and calibration. The achievements we report assess quantum computing systems holistically, not just one factor at a time.”

Charina Chou, the lab’s director and chief operating officer, said during the briefing that while discoveries up to now with quantum are exciting, they “could also have been done using a different tool of classical computing. So our next challenge we’re looking at is this: Can we show beyond classical performance? That is performance that is not possible, really realistically, on a classical computer, on an application with real-world impact. … Nobody has yet demonstrated this in the NISQ [Noisy Intermediate-Scale Quantum] era, the era we are in before full-scale quantum error correction.”

It’s a goal that other vendors, including Amazon, Microsoft, IBM, and a host of startups, are also pursuing. Google’s hope is that Willow is a key step toward reaching it.

Quite interesting! It seems there is an open-access version of the Nature paper “Quantum error correction below the surface code threshold” ( https://www.nature.com/articles/s41586-024-08449-y ) available at https://arxiv.org/abs/2408.13687. It gives the research background behind what went into Sycamore and Willow, if I understood correctly.

In the “Outlook” section of that paper, they note that a 10⁻⁶ error rate could be reached with 1457 physical qubits (a distance-27 logical qubit). Might that suggest the start of a well-behaved “continuum scale” of quantum phenomena?
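The 1457 figure is consistent with the standard rotated surface-code layout, which uses d² data qubits plus d² − 1 measure qubits, or 2d² − 1 physical qubits for one distance-d logical qubit; by the same formula a distance-7 patch needs 97 qubits, which fits on the 105-qubit Willow. The extrapolation below assumes the error rate keeps halving with each distance step of 2 (Newman’s “factor of two”) and starts from a hypothetical distance-7 rate of 10⁻³ per cycle; the paper’s own measured values may differ, and both helper names are hypothetical.

```python
def surface_code_qubits(d: int) -> int:
    """Physical qubits in a distance-d rotated surface code patch:
    d*d data qubits plus d*d - 1 measure qubits."""
    return 2 * d * d - 1


def extrapolate_error(eps_ref: float, d_ref: int, d: int, lam: float) -> float:
    """Logical error rate at distance d, assuming it falls by a constant
    factor lam for every distance step of 2 beyond d_ref."""
    return eps_ref / lam ** ((d - d_ref) / 2)


for d in (3, 5, 7, 27):
    print(d, surface_code_qubits(d))

# Hypothetical distance-7 error rate of 1e-3 per cycle, halving per step:
# going from distance 7 to 27 is ten steps, i.e. a factor of 2**10 = 1024.
print(f"{extrapolate_error(1e-3, 7, 27, 2.0):.1e}")
```

Read as a ratio, one logical qubit per 1457 physical qubits is the kind of superconducting overhead discussed below.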

Reminds me of a Tess Skyrme article from last April ( https://www.eetimes.eu/quantum-computing-the-logical-next-steps/ ) where the required ratios of physical to logical qubits (which vary among modalities) were listed as 2:1 for photonics, 10:1 for neutral atoms and trapped ions, and more than 1,000:1 for the superconducting case. The 1457:1 ratio seems to match that perspective (if superconducting).