We will always complain about the weather. It is part of the human condition. But someday, perhaps in five years but hopefully in well under ten years, thanks to parallel advancements in HPC simulation and AI training and inference, we will have no cause to complain about the weather forecast because it will be continuous, hyperlocal, and absolutely accurate.

That day, when it comes, will be the culmination of one of the most important endeavors in the related fields of weather forecasting and climate modeling that human beings have ever engaged in. And without a doubt, the world will be a better place when we can more accurately predict what is going to happen with our immediate weather and our long-term climate, from the scale of individuals all the way out to the scale of the entire planet and even to the edges of the space through which it moves.

Whether or not we can change what is already happening, much less reverse it, is a subject for intense debate, but it is clear to everyone that we need to know what is going to happen with the weather and the climate on short and long timescales so we can manage our lives and our world.

We are at a unique time in the history of weather forecasting and climate modeling in that new technologies and techniques are coming together at just the moment when we can make the exascale-class supercomputers that are necessary to employ them. And we can get those exascale-class supercomputers at a reasonable price – reasonable given the immense performance they contain, that is – and at the exact moment that we need them given the accelerating pace of climate change and the adverse effects this is having on all of the biological, economic, and political systems on Earth.

Getting to that kind of forecast requires breaking through the 1 km grid resolution barrier on weather and climate models, which is a much finer resolution than what is used in the field on a global scale today. For instance, the Global Forecast System (GFS) at the US National Oceanic and Atmospheric Administration has a 28 km grid resolution, and the European Centre for Medium-Range Weather Forecasts (ECMWF) global forecasting simulator has a 20 km resolution. You can run at higher resolutions with these and other tools – something around 10 km resolution is what a lot of our national and local forecasts run at – but you can only cover a proportionately smaller part of the Earth in the same amount of computational time. And if you want to get a quick forecast, you need to tighten in quite a bit or have a lot more computing power than a lot of weather centers have.

Weather forecasting was done, somewhat crudely as you might expect, on computers powered by vacuum tubes back in the 1950s, but with proper systems in the late 1960s, weather models could run at 200 km resolutions, which means you could simulate hurricanes and cyclones a bit. It took five decades of Moore’s Law improvements in hardware and better algorithms in the software to drive that down to 20 km, where you can simulate storms and eddies pretty faithfully. But even at these resolutions, features in the air and water systems – importantly clouds – exist at a much finer grained resolution than the 10 km grids we have today. That is why weather and climate forecasters want to push down below 1 km resolution. Thomas Schulthess of ETH Zurich and Bjorn Stevens of the Max Planck Institute for Meteorology explained it well in this chart:

This sentence from the chart above explains why exascale computers like the “Frontier” supercomputer installed at Oak Ridge National Laboratory, the world’s first bona fide exascale-class machine as gauged by 64-bit floating point processing, are so important: “We move from crude parametric presentations to an explicit, physics based, description of essential processes.”

It is with all of this in mind that we ponder the work being done at Sandia National Laboratories on a variant of its Energy Exascale Earth System Model, also known as E3SM, that pushed the grid size of a full-scale Earth climate model down to 3.25 kilometer resolution. That variant, known as the Simple Cloud Resolving E3SM Atmosphere Model, or SCREAM, just looked at clouds, and during a ten-day window of access to more than 9,000 nodes on the Frontier system, the model was able to run at greater than one simulated year per wall-clock day, or SYPD in weather-speak.

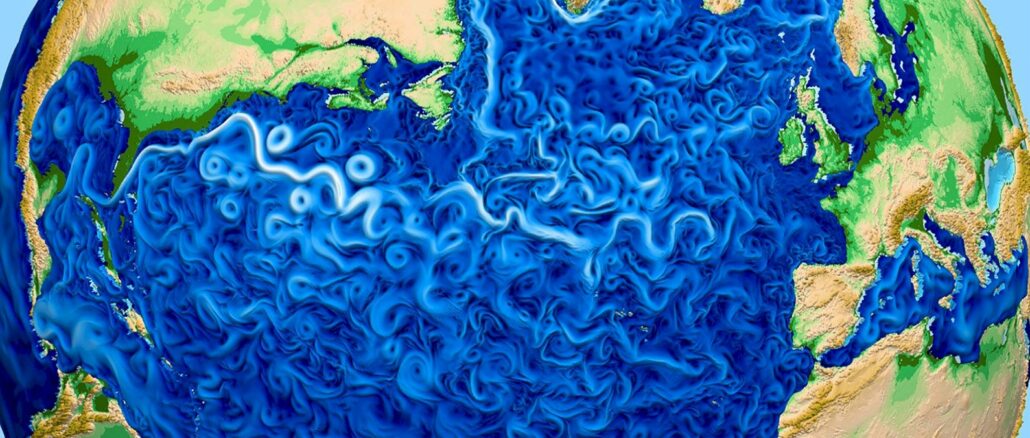

In the image above, the simulation of the Earth’s clouds is showing a tropical cyclone off the coast of Australia, and then it is zooming in on a section of that cyclone, showing a vertical cross-section of where ice and liquid water are swirling around.

“Traditional Earth system models struggle to represent clouds accurately,” Mark Taylor, chief computational scientist of the E3SM project, said in a statement accompanying the performance results. “This is because they cannot simulate the small overturning circulation in the atmosphere responsible for cloud formation and instead rely on complex approximations of these processes. This next-generation program has the potential to substantially reduce major systematic errors in precipitation found in current models because of its more realistic and explicit treatment of convective storms and the atmospheric motions responsible for cloud formation.”

The E3SM project spans eight of the labs that are part of the Department of Energy’s Office of Science, and it develops advanced climate models not just for us directly, but for national security reasons.

This is a first step in pushing down the model resolution, but we do shudder to think of what it might take to add in ocean models and to push the grid resolution down below 1 km. Adding other simulation layers and driving down that resolution by more than a factor of 3X is going to take a lot more oomph.

When we talked to the US National Oceanic and Atmospheric Administration, which runs the National Weather Service, last June about its pair of 12.1 petaflops weather forecasting systems, which run at 13 km resolution for a global model, we did some back-of-the-envelope math and reckoned it would take 9.3 exaflops to do a 1 km model. (To double the grid resolution takes 8X the compute, according to Brian Gross, director of NOAA’s Environmental Modeling Center.) That math is simple, but building such a machine – or a pair of machines because weather forecasting requires a hot spare – would be plenty expensive.
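To make the "8X per doubling" rule of thumb concrete, here is a minimal Python sketch of that scaling. The function names are hypothetical, and the only inputs are the figures quoted above (12.1 petaflops at 13 km). It is not necessarily how the 9.3 exaflops estimate was derived: a strict cube-law extrapolation all the way from 13 km to 1 km gives a multiplier of 13 cubed, or roughly 2,200X, which lands above 9.3 exaflops, so that estimate presumably rests on assumptions not spelled out here.

```python
import math


def compute_scale_factor(current_km: float, target_km: float,
                         cost_per_doubling: float = 8.0) -> float:
    """Multiplier on compute when refining a grid from current_km to target_km.

    Assumes every doubling of resolution (halving of grid spacing) costs
    `cost_per_doubling` times more compute, per the rule of thumb in the text.
    """
    doublings = math.log2(current_km / target_km)
    return cost_per_doubling ** doublings


def required_petaflops(current_pf: float, current_km: float,
                       target_km: float) -> float:
    """Naive extrapolation of machine size; ignores algorithmic improvements."""
    return current_pf * compute_scale_factor(current_km, target_km)


# Figures quoted in the article: 12.1 petaflops running a 13 km global model.
factor = compute_scale_factor(13.0, 1.0)
petaflops = required_petaflops(12.1, 13.0, 1.0)
print(f"compute multiplier: {factor:,.0f}X")
print(f"naive requirement:  {petaflops / 1000:.1f} exaflops")
```

The point of the sketch is the shape of the curve rather than the exact number: each halving of grid spacing multiplies the bill by eight, so the last few kilometers are by far the most expensive.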

But the work on E3SM and tuning it up on Frontier is an important step in the right direction. We would personally advocate for spending a hell of a lot more money on weather and climate forecasting systems than we currently do so we can get the better models that we know are possible. And then we can complain about the weather, and not the forecasting.

We look forward to seeing if this SCREAM variant of E3SM comes up for a Gordon Bell prize this year, and hope it does so we can get some more details on it.

TPM. Great article. So well done.

Thanks Mike. Appreciate it. Hope you are well.

I’m sick and tired of these global warming and green energy scams.

I too get this feeling a lot of the time. There seems to be a lot of duplicity among promoters of this or that new zeitgeist: being fashionable means X, and you’re excluded if you are different or non-conforming. In such situations it helps me to relate back to folks whose opinion I value, for whichever reason. Here, Sandra Snan (of Chicken Scheme), who can be crude, writes this: “Everything is on the cinder unless we all sober the fuck up wrt climate change and slam the breaks on emissions!” That is admittedly her opinion, but I value it!

Somehow, Joni Mitchell’s song doesn’t quite scan to the new lyrics

“I’ve looked at clouds from both sides now,

from crude parametric presentations

to an explicit, physics based, description of essential processes on a 1km^3 scale”.

Well played….

Not a peep about AI or ML? Isn’t climate modeling exactly the sort of workload (lower precision) machine learning is perfect for? With all those GPUs in Frontier not sure why they’re still doing it in this brute force sort of way.

I don’t think the E3SM people are doing that, but clearly there is a way to interleave AI in the ensembles to do an effective speedup.

Great article and superb application of contemporary HPC oomph to better understand important phenomena! I’ve looked around on the web a bit and it seems that the transition from E3SM to SCREAM also involves code conversion from Fortran to C++ “to enable efficient simulation on CPUs, GPUs, and future architectures” (using the Kokkos library). It’ll be great to follow how this opens the door to various performance-enhancement strategies and how that pans out in terms of SYPD (as you state!).

The domain discretization of the spherical shell is somewhat anisotropic. In the horizontal, it is 1 node per 10 km^2 (or approx 3.2 km between nodes, in x and y directions), with an upcoming target of 1 node per km^2 (1 km between nodes, in x and y). So they’re looking at 510 million nodes in x-y to cover earth’s 510 million km^2 surface area. The node spacing is finer in the z-direction (vertical), and ranges from 50 m between nodes near the surface, to 250 m between nodes in the stratosphere (at 40km or 60km top elevation), for a total of 72 vertical nodes for E3SM, and 128 such nodes for SCREAM. Accordingly, with SCREAM, there are 65 billion simultaneous equations to be solved (and iterated over for nonlinearity) per time step! WoW!

Correction: make that approx. 400 billion simultaneous equations … as this is (I think) 3-D Navier-Stokes plus heat and moisture transport, and therefore (at least) 6 dependent vars per node (vx, vy, vz, P, T, H2O vapor), mapped over 65 billion nodes … Definitely awe-inspiring stuff!
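The arithmetic in the two comments above can be checked with a short Python sketch. The surface area, level count, and six variables per node are the commenter's figures; the function names are hypothetical and the uniform-spacing assumption is a simplification of the real mesh.

```python
EARTH_SURFACE_KM2 = 510_000_000  # surface area quoted in the comment above


def horizontal_columns(spacing_km: float) -> float:
    """Number of horizontal grid columns, assuming uniform square cells."""
    return EARTH_SURFACE_KM2 / spacing_km ** 2


def grid_nodes(spacing_km: float, vertical_levels: int) -> float:
    """Total 3-D nodes: horizontal columns times vertical levels."""
    return horizontal_columns(spacing_km) * vertical_levels


def unknowns(spacing_km: float, vertical_levels: int,
             vars_per_node: int = 6) -> float:
    """Simultaneous unknowns per time step.

    The default of 6 variables (vx, vy, vz, P, T, water vapor) is the
    commenter's estimate, not a documented property of SCREAM.
    """
    return grid_nodes(spacing_km, vertical_levels) * vars_per_node


# 1 km horizontal target with the 128 vertical levels cited for SCREAM.
print(f"nodes:    {grid_nodes(1.0, 128):,.0f}")
print(f"unknowns: {unknowns(1.0, 128):,.0f}")
```

With those inputs the node count comes to about 65 billion and the unknown count to about 392 billion, which matches the "approx. 400 billion" in the correction.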

I’ll add that the grid-Courant-number limit on accurate computations constrains time-step size to possibly less than 1/100 of an hour (for up to 100 km/h winds over a 1 km grid), that is roughly 36 seconds, and means approximately 1 million time steps per year (plus nonlinearity iterations). Shorter time steps (and more of them per year) would be needed for higher maximum wind speeds (in conventional FDM/FEM).
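As a rough illustration of that Courant-number argument, the sketch below computes the largest time step for a given grid spacing and wind speed, and the resulting number of steps per simulated year. It assumes a Courant number of 1 and a 365-day year; the function names are hypothetical, and real models use more elaborate time-stepping schemes than this simple bound.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600  # 365-day year, an assumption for simplicity


def max_time_step_seconds(spacing_km: float, wind_kmh: float,
                          courant: float = 1.0) -> float:
    """Largest time step satisfying wind * dt / spacing <= courant."""
    return courant * spacing_km * 3600.0 / wind_kmh


def steps_per_year(dt_seconds: float) -> float:
    """Number of time steps needed to cover one simulated year."""
    return SECONDS_PER_YEAR / dt_seconds


# The comment's case: 100 km/h winds over a 1 km grid.
dt = max_time_step_seconds(1.0, 100.0)
print(f"time step: {dt:.0f} s, steps per year: {steps_per_year(dt):,.0f}")
```

For the comment's numbers the bound works out to 36 seconds and about 876,000 steps per year, which is the "approximately 1 million" cited; doubling the peak wind speed halves the step and doubles the count.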

Updated to add (sadly): Just 2 days before this article was published, Dr. Ivo Babuška, pioneer of the numerical solution of CFD equations, and of a-posteriori error analysis for mesh refinement, passed away (April 12, 2023), at the age of 97. He was a pre-former colleague who retired from UMCP in 1994 or 1996 (a couple years before I got onboard). He uncovered the formerly unknown eponymous Babuška–Brezzi (“inf-sup”) condition required for solving Navier-Stokes (and other saddle-point systems) whereby velocities need to be discretized at a higher order (e.g. 2nd-order) than pressure (e.g. 1st-order) to prevent wild checkerboard oscillations in the solution. He would probably get a kick out of knowing that, because of him, more than 400 billion simultaneous equations will now need to be solved by the SCREAM model!

It is a nice article but it seems to make the false claim that forecasts can be (close to) perfect as long as you have sufficient compute power. The atmosphere and the complete earth system are highly chaotic and cannot be deterministically described by physical equations. Yes, forecast errors will gradually reduce but will never go away completely.

Great point! Chaos theory means sensitivity to initial conditions (via positive Lyapunov exponents), and therefore, the more accurately one knows current conditions everywhere in a domain (vx, vy, vz, T, P, H2O vapor, at time=0), the farther into the future one can produce accurate predictions for the subsequent temporal evolution of the system (e.g. predict weather accurately for 10 days into the future). However, even without “infinitely” accurate ICs (initial conditions), solution of the system for a substantial time span has the potential to resolve the phase-space attractor of the system (e.g. the Lorenz attractor) which determines all of its possible future states, and hence every possible weather that can occur in the target domain, which is still mighty useful (and awesome)!

It’s not pure unadulterated chaos thanks to the fluid viscosity of air (or its eddy viscosity computed from appropriate turbulence closures) which makes the flow dissipative on the whole: tornadoes, hurricanes, monsoons, and the like, eventually die out as a result. But their frequency of occurrence, and their intensity, may increase or decrease depending on global conditions of warming, cooling, and mis-exfoliation (eh-eh-eh!).

A turning point in GPU power, IMO, is the recent introduction, at last, of chiplets to graphics processors as well.

We have seen the radical effect AMD’s chiplets have had on the economics & performance of CPUs since Zen arrived in 2017. I am confident of similar gains as GPU chiplets evolve now the ice is broken.