Lawrence Livermore National Laboratory, Sandia National Laboratories, and Los Alamos National Laboratory are known by the shorthand “Tri-Labs” in the HPC community, but these HPC centers perhaps could be called “Try-Labs” because they historically have tried just about any new architecture to see what promise it might hold in advancing the missions of the US Department of Energy.

Sandia, which is where the Vanguard program to test out novel architectures is hosted, is coming back for seconds with the third generation of waferscale systems from Cerebras Systems in the hopes of pushing the performance barriers of traditional HPC codes on a machine that is actually designed to run AI training and inference.

Two years ago, Sandia acquired an unknown number of CS-2 systems from Cerebras, each of which has a CPU host and a WSE-2 waferscale processor, with the idea of offloading some matrix-dense HPC calculations to the 16-bit floating point cores on the WSE-2 engine.

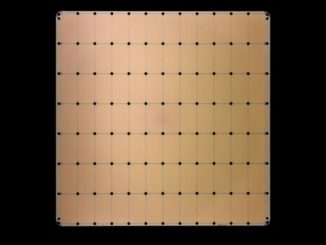

Why would Sandia even think about cutting the precision of its calculations from either 64-bit or 32-bit formats by a factor of four or two? Because, as we detailed back in March 2022, each WSE-2 engine crams 850,000 cores, along with 40 GB of on-chip SRAM to feed them, into 2.6 trillion transistors etched on a square of silicon the size of a dinner plate. That wafer has 20 PB/sec of memory bandwidth and delivers 6.25 petaflops of oomph on dense matrices and 62.5 petaflops on sparse matrices.
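To make the precision trade-off concrete, here is a back-of-envelope sketch using the WSE-2 figures above. It works out how much SRAM each core gets and how many values of each format fit in that share. It assumes decimal gigabytes and an even split of the 40 GB across all 850,000 cores, which is a simplification. The helper names are our own.

```python
# Back-of-envelope arithmetic using the WSE-2 figures quoted above.
# Assumes decimal units (1 GB = 1e9 bytes) and an even split of SRAM
# across cores; real per-core allocations may differ.

SRAM_BYTES = 40e9        # 40 GB of on-chip SRAM
CORES = 850_000          # WSE-2 core count

BYTES_PER_VALUE = {"FP64": 8, "FP32": 4, "FP16": 2}

def sram_per_core(sram_bytes: float, cores: int) -> float:
    """Average SRAM available to each core, in bytes."""
    return sram_bytes / cores

def values_per_core(fmt: str) -> int:
    """How many values of a given format fit in one core's share of SRAM."""
    return int(sram_per_core(SRAM_BYTES, CORES) // BYTES_PER_VALUE[fmt])

if __name__ == "__main__":
    print(f"SRAM per core: {sram_per_core(SRAM_BYTES, CORES) / 1e3:.1f} KB")
    for fmt in BYTES_PER_VALUE:
        print(f"{fmt}: {values_per_core(fmt):,} values per core")
    # The precision cuts from the text: 64 -> 16 bits and 32 -> 16 bits.
    print("FP64 -> FP16 factor:", BYTES_PER_VALUE["FP64"] // BYTES_PER_VALUE["FP16"])
    print("FP32 -> FP16 factor:", BYTES_PER_VALUE["FP32"] // BYTES_PER_VALUE["FP16"])
```

On these assumptions each core has roughly 47 KB to work with. That is room for about 5,900 FP64 values or about 23,500 FP16 values.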

The thinking was that for certain kinds of workloads, having a calculation live on a single, massive device could make an HPC simulation run a whole lot faster, provided that the problem fit into memory or could be broken down into pieces spread across those cores.

A team of Sandia researchers proved this point earlier this year when a single CS-2 system was able to beat the “Frontier” supercomputer at Oak Ridge National Laboratory on a certain molecular dynamics simulation.

The bigger Frontier machine, which has 37,632 AMD “Aldebaran” MI250X GPU accelerators, can simulate a large number of atoms in a crystal lattice. But because the clustered GPUs scale poorly when a fixed-size problem is spread across more of them (poor strong scaling), it cannot simulate atoms wiggling about for long periods of time. The latency between nodes makes this impossible.

But using a modified LAMMPS molecular dynamics simulation, Sandia set up a test with lattices of tungsten, copper, and tantalum composed of a fixed number of atoms – 801,792, so that each WSE-2 core could hold the data for one atom – and then simulated these lattices being whacked with radiation. The number of timesteps per second that one WSE-2 compute engine could process in the LAMMPS simulation done at Sandia was 109X higher for copper, 96X higher for tungsten, and 179X higher for tantalum than on the GPUs in the massive Frontier system. This yielded tens of milliseconds of simulated time on the Cerebras iron and, as we pointed out back then, enough time to actually see what happens to the lattice when you poke it with energy.
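The link between timestep rate and simulated time is simple multiplication. Simulated time equals steps per second, times wall-clock seconds, times the physical length of each timestep. So for the same wall-clock budget, a 179X faster timestep rate buys a 179X longer simulated window.

The sketch below shows this. The 1 femtosecond timestep, the baseline GPU rate and the one-day budget are hypothetical placeholders, not values from the Sandia study.

```python
# Sketch: how a higher timestep rate turns into a longer simulated window.
# The 1 femtosecond timestep, baseline rate, and wall-clock budget below are
# hypothetical placeholders, not values from the Sandia study.

FS = 1e-15  # one femtosecond, in seconds

def simulated_time(steps_per_second: float, wall_seconds: float,
                   timestep_s: float = 1.0 * FS) -> float:
    """Physical time covered by an MD run, in seconds."""
    return steps_per_second * wall_seconds * timestep_s

# Speedups of one WSE-2 over Frontier's GPUs quoted in the text.
SPEEDUP = {"copper": 109, "tungsten": 96, "tantalum": 179}

if __name__ == "__main__":
    baseline_rate = 1_000.0          # hypothetical GPU steps/second
    budget = 24 * 3600.0             # hypothetical one-day run, in seconds
    base = simulated_time(baseline_rate, budget)
    for metal, factor in SPEEDUP.items():
        wse = simulated_time(baseline_rate * factor, budget)
        print(f"{metal}: {wse / base:.0f}X the simulated time "
              f"({wse * 1e9:.1f} ns vs {base * 1e9:.1f} ns)")
```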

On the Frontier machine, the scaling of this application petered out at 32 GPUs, which was disappointing and just goes to show you that it is hard to scale outside of a single compute device for certain kinds of applications.
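A toy model shows why a fixed-size problem stops scaling. The compute per timestep shrinks as it is split across more devices, but the inter-node latency paid on every step does not. The timestep rate therefore flattens out toward a ceiling of one over the latency.

This is a generic strong-scaling sketch with made-up costs, not a model of LAMMPS or Frontier.

```python
# Toy strong-scaling model for a fixed-size MD problem (hypothetical numbers).
# Each timestep costs compute work divided across N devices plus a fixed
# communication latency that does not shrink as N grows.

def step_time(n_devices: int, compute_s: float, latency_s: float) -> float:
    """Wall-clock seconds per timestep on n_devices."""
    comm = latency_s if n_devices > 1 else 0.0  # no network hop on one device
    return compute_s / n_devices + comm

def steps_per_second(n_devices: int, compute_s: float, latency_s: float) -> float:
    """Timestep throughput implied by step_time."""
    return 1.0 / step_time(n_devices, compute_s, latency_s)

if __name__ == "__main__":
    COMPUTE_S = 2e-3   # hypothetical: 2 ms of work per step on one device
    LATENCY_S = 50e-6  # hypothetical: 50 us of inter-node latency per step
    for n in (1, 2, 4, 8, 16, 32, 64, 128):
        rate = steps_per_second(n, COMPUTE_S, LATENCY_S)
        print(f"{n:4d} devices: {rate:8.0f} steps/s")
    # The rate can never exceed 1 / LATENCY_S no matter how many devices.
    print(f"ceiling: {1 / LATENCY_S:.0f} steps/s")
```

In this toy model, doubling from 32 to 64 devices buys well under 2X. Keeping the whole problem on one device removes the latency term entirely, which is the waferscale bet.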

At the time, we conjectured that Sandia could buy some more time – simulation time, that is – if it moved up to the WSE-3 compute engines in the CS-3 systems that were launched in March of this year. With the WSE-3 engines, Cerebras shrank the transistors to 5 nanometers, compared to 7 nanometers with the WSE-2, boosted the core count to 900,000, and moved to an eight-wide FP16 SIMD unit, twice the width of the SIMD unit used in the WSE-2 and WSE-1 engines. We think the clock speed went up about 5 percent on the WSE-3s, and when you multiply out the clock crank, the core jump, and the SIMD bump, that is roughly how you get 2X the performance out of the WSE-3 compared to the WSE-2.
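Multiplying out those three factors is quick. Note that the 5 percent clock bump is our estimate, not a published Cerebras spec. The product lands a bit above 2X, at about 2.2X.

```python
# Multiply out the generation-over-generation factors described above.
# The ~5 percent clock bump is an estimate, not a published spec.

WSE2_CORES = 850_000
WSE3_CORES = 900_000
SIMD_WIDTH_RATIO = 8 / 4      # eight-wide FP16 SIMD vs four-wide
CLOCK_RATIO = 1.05            # estimated clock increase

core_ratio = WSE3_CORES / WSE2_CORES
speedup = core_ratio * SIMD_WIDTH_RATIO * CLOCK_RATIO

print(f"cores: {core_ratio:.3f}X, SIMD: {SIMD_WIDTH_RATIO:.0f}X, clock: {CLOCK_RATIO:.2f}X")
print(f"combined: {speedup:.2f}X")
```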

Our guess is that this kind of performance boost might increase the simulation window on the tantalum lattice irradiation from 40 milliseconds to 80 milliseconds by moving from the WSE-2 to the WSE-3. Those nodes on the Frontier machine were simulating around 200 nanoseconds, by comparison.

Well, it looks like the folks at Sandia want to get their hands on some WSE-3 compute engines and find out. And we also strongly suspect that they want to figure out if they can scale that simulation up over multiple wafers and break through the 1 second simulation barrier.

Perhaps to that end as well as others, Sandia and Cerebras have started building a system nicknamed “Kingfisher,” which will start out with four CS-3 systems and which will be expanded to eight systems at some point in the future. The Kingfisher cluster will be double-timing it on both traditional HPC simulation work and AI work – generative AI, of course, but not necessarily restricted to this – that can augment the processing that the Tri-Labs do under the auspices of the National Nuclear Security Administration, which funds the Tri-Labs to manage the nuclear weapons stockpile of the US military. To be specific, Kingfisher is being funded by the Advanced Simulation and Computing Artificial Intelligence for Nuclear Deterrence program.

In a statement announcing the Kingfisher system, James Laros, one of the researchers who has been spearheading the work on the CS-2 systems at Sandia, said that the lab was exploring the feasibility of using future versions of the WSE compute engines “for a combination of Mod-Sim and AI workloads.” We have joked in the past with Cerebras co-founder and chief executive officer Andrew Feldman that what the world really needed was a WSE that had a 64-bit SIMD engine that could scale up to FP64 precision and maybe down to FP4 precision, and do it on the fly, perhaps in different blocks on the wafer or perhaps truly on the fly as code is running, so any of the codes at HPC centers could, in theory, run on Cerebras iron.
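Why HPC codes cling to FP64 is easy to see with a small experiment. It adds 0.1 ten thousand times, rounding the running total to IEEE half precision after every addition, via the Python standard library's `struct` format `"e"`.

This is a deliberately worst-case sketch with no wider accumulator. Many real FP16 units accumulate in FP32, which softens the effect considerably.

```python
import struct

def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def accumulate_fp16(value: float, count: int) -> float:
    """Sum `value` `count` times, rounding to FP16 after every addition."""
    step = to_fp16(value)
    total = 0.0
    for _ in range(count):
        total = to_fp16(total + step)  # exact double sum, then one FP16 rounding
    return total

def accumulate_fp64(value: float, count: int) -> float:
    """The same sum carried in ordinary double precision."""
    total = 0.0
    for _ in range(count):
        total += value
    return total

if __name__ == "__main__":
    n = 10_000
    print("FP64:", accumulate_fp64(0.1, n))   # very close to 1000
    print("FP16:", accumulate_fp16(0.1, n))   # stalls far below 1000
```

The FP16 total gets stuck at 256. At that magnitude, adjacent half-precision values are 0.25 apart, so adding roughly 0.1 rounds straight back to the same number. Long-running simulations that accumulate many small updates are exactly where this bites.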

If enough of us say it, and enough of you fund it, perhaps this could happen. None of this sounds kookaburra to us. Nvidia is not focusing on FP64 performance with its GPU generations anymore.

The cost of the Kingfisher system was not announced, but we know that at list price – whatever that is in the HPC space – a CS-2 system with a single WSE-2 cost about $1.6 million last year when G42 started working with Cerebras to build out a fleet of “Condor Galaxy” clusters based on the CS-2 machines. Perhaps that means a 1.5X price increase to get to the CS-3, or perhaps it means 2X. In a world that demands better bang for the buck each generation, $2.3 million to maybe $2.5 million might be justified. In a world where you need more performance and have few options, $3.2 million for a CS-3 node makes sense. Either way, we think that Sandia is not going to pay anywhere near list price for a machine, but at the same time it wants to help fund the companies that might help it run its simulations better. What we can tell you for sure is that the 32-GPU section of Frontier only cost about $425,000, but it could only scale so far.
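The two pricing scenarios can be checked with a little price/performance arithmetic. The sketch assumes the roughly 2X WSE-3 uplift and normalizes CS-2 performance to 1.0. Actual negotiated prices are unknown, so these are list-price scenarios only.

```python
# Price/performance arithmetic for the list-price scenarios discussed above.
# Performance is normalized to CS-2 = 1.0 and assumes a roughly 2X CS-3
# uplift; actual negotiated prices are unknown.

CS2_PRICE_M = 1.6            # $ millions, CS-2 list price cited above
CS3_PERF = 2.0               # relative to CS-2

def perf_per_million(perf: float, price_m: float) -> float:
    """Relative performance per million dollars."""
    return perf / price_m

if __name__ == "__main__":
    base = perf_per_million(1.0, CS2_PRICE_M)
    for multiplier in (1.5, 2.0):
        price = CS2_PRICE_M * multiplier
        gain = perf_per_million(CS3_PERF, price) / base
        print(f"CS-3 at ${price:.1f}M ({multiplier}X): {gain:.2f}X perf per dollar")
```

At a 1.5X price ($2.4 million), the CS-3 improves bang for the buck by about a third. At 2X ($3.2 million), performance per dollar is flat and you are paying purely for the extra speed.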

So, what is scaling up the number of atoms and scaling up the time simulated worth to the NNSA? Probably a lot.

We look forward to learning more about what Kingfisher does, and how it does it.

One last thing: the work that the Sandia team did on molecular dynamics on the Cerebras waferscale systems is up for a Gordon Bell Prize this year. We hope they get it, just to keep the GPU vendors on their toes.

If this works out, Sandia and Cerebras may single-handedly reinvigorate 450 mm wafers. Imagine what those could do. Probably not, but one can dream!

We may even get nuclear fusion power in 47 instead of 50 years. And then use that power to bootstrap HPC for running Ansys, I mean, developing even better chips.

Thank you for the chuckles. . . .

Strong scaling is difficult, and it is needed for dynamical systems when the time window is increased rather than the size of the phase space. Since the scaling on Frontier is limited to 32 GPUs, that is only 8 nodes. So forget about Ultra Ethernet; will CXL on the next-generation PCIe fabric allow hundreds of GPUs to sit in the same node?

I’m as skeptical about latency when clustering lots of wafer-scale engines together as I was when Los Alamos National Laboratory built a huge cluster of Raspberry Pi computers to test fault-tolerant scheduling and error recovery. On the other hand, since new functionality doesn’t evolve when environmental pressures are too high, maybe low-stakes hobby projects are needed for high-stakes gains.

Great work by the Tri-Labs Vanguard and Cerebras … and yes to wafer-scale FP64 dataflow SIMDs (for the future)! Breaking through “the 1 second simulation barrier” would be revolutionary and I hope they can make it.

But the six Gordon Bell Prize finalists for 2024 ( https://sc24.supercomputing.org/2024/10/presenting-the-finalists-for-the-2024-gordon-bell-prize/ ) include a team from the University of Maryland College Park (with Max Planck and Berkeley) … so, ahem, I might have to give them my vote (if I had one, that is)!

The Maryland-Berkeley entry is titled “Open-source Scalable LLM Training on GPU-based Supercomputers.”

Using a computer designed for science to train AI to me seems the opposite of using a computer designed for AI to do science. Does the wolf dwell with the lamb? It seems all mixed up. Luckily I don’t have a vote either.

I believe this article has significant implications for us.

In my view, utilizing transformer models for scientific advancement could create more value than their application in assistant-like functions. However, I think computational accuracy is crucial for this purpose. The current FP16 or lower precision used in LLMs may result in lower accuracy of generated molecular structures or materials.

If we were to increase the data format to FP32 or FP64, I believe we would see a dramatic decrease in floating-point operations per second due to the bottleneck between GPU and memory. While a wafer-scale AI accelerator could be one approach to minimizing these negative effects, I think another viable alternative would be a structure that connects SRAM to memory components like DRAM/NAND and Processing Elements (PEs) through packaging.
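The memory-bottleneck argument is essentially the roofline model. For a memory-bound kernel, attainable FLOP/s is the memory bandwidth times the arithmetic intensity (FLOPs per byte moved), capped by the compute peak. Quadrupling the bytes per value from FP16 to FP64 therefore cuts attainable throughput by 4X.

The sketch below uses an `a*x + y` vector update as the example kernel. The bandwidth and peak figures are hypothetical, not any specific device. It models only the memory side; FP64 compute peaks are often lower too.

```python
# Roofline-style sketch: for a memory-bound kernel, attainable FLOP/s is
# bandwidth times arithmetic intensity (FLOPs per byte moved), capped by peak.
# The bandwidth and peak figures are hypothetical, not any specific device.

BYTES = {"FP16": 2, "FP32": 4, "FP64": 8}

def attainable_flops(bandwidth_bps: float, peak_flops: float,
                     flops_per_elem: float, elems_moved: int, fmt: str) -> float:
    """Roofline bound: min(compute peak, bandwidth * arithmetic intensity)."""
    intensity = flops_per_elem / (elems_moved * BYTES[fmt])  # FLOPs per byte
    return min(peak_flops, bandwidth_bps * intensity)

if __name__ == "__main__":
    BW = 3e12      # hypothetical 3 TB/s memory bandwidth
    PEAK = 1e15    # hypothetical 1 PFLOP/s peak, not reached by this kernel
    # y = a*x + y: 2 FLOPs per element; read x and y, write y = 3 elements.
    for fmt in BYTES:
        f = attainable_flops(BW, PEAK, 2, 3, fmt)
        print(f"{fmt}: {f / 1e12:.2f} TFLOP/s")
```

Raising the bandwidth term, for instance by keeping operands in on-wafer or closely packaged SRAM, lifts every row of that table by the same factor.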