With all of the hyperscalers and major cloud builders designing their own CPUs and AI accelerators, the heat is on those who sell compute engines to these companies. That includes Intel, AMD, and Nvidia, of course. And it also includes Arm server chip upstart Ampere Computing, which is taking them all on in CPUs, and soon, in AI processing.

When it comes to server CPUs, the “cloud titans” account for more than half the server revenues and more than half the shipments, and with server GPUs, which are by far the dominant AI accelerators, these companies probably account for 65 percent or maybe even 70 percent or 75 percent of revenues and shipments. (We have not seen any data here, hence the wide error bars on that statement.) As GenAI goes more mainstream and supplies of GPUs become more readily available – these two go hand in hand – the share of revenues and shipments of datacenter GPUs and other kinds of AI accelerators should come to mirror that for server CPUs. At some point, as we have talked about before, half of the server revenues in the world will be for AI accelerated iron, and half will be for general purpose CPU machines.

Unless, that is, what is happening with the AI PC also happens with the datacenter server. Server CPUs could get much beefier AI calculating capabilities for localized AI processing, close to the applications that need it, much as our PCs and phones are getting embedded neural network processing right now. We happen to believe – and have always believed – that a large portion of AI inference would be done on server CPUs, but we did not see the enormous parameter counts and model weights of the large language models that support GenAI coming. They just got bigger faster than we expected – and we are not the only ones that happened to.

And that means the AI processing integrated into server CPUs has to get bigger faster than what we expected, and Jeff Wittich, chief product officer at Ampere Computing, talked with us about this back in April when the company did a tiny reveal on its AmpereOne CPU roadmap.

Today, Wittich is doing a much bigger reveal as Ampere Computing unveils plans to put into the field an Arm server CPU, dubbed “Aurora,” that combines homegrown Arm cores, a homegrown mesh interconnect, and now a homegrown, integrated AI accelerator. Aurora is probably going to come out in late 2025 or early 2026.

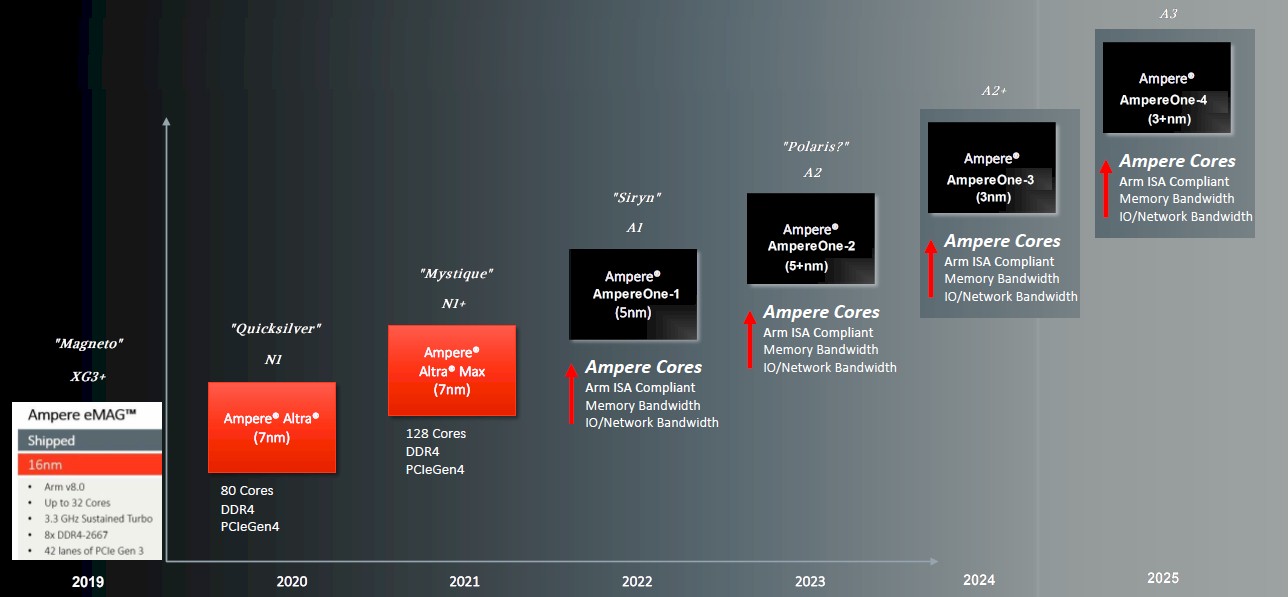

The new 2024 roadmap from Ampere Computing is consistent with the augmented roadmap that we put together back in 2022 based on everything we knew about at the time:

It is important to note that the calendar dates shown above are for when Ampere Computing announced the chips, not when they started shipping.

Because Ampere Computing did not have a codename for its second generation AmpereOne chip, we invented one consistent with the X-Men theme that the company used for many years and named it “Polaris,” after the daughter of Magneto, who has also gone by the name “Magnetrix.”

Here is the updated roadmap, which doesn’t show any more iterations than the one above, but names the fourth generation AmpereOne chip “Aurora,” after a member of Alpha Flight, the Canadian X-Men crew, and adds some details on the architecture of future Ampere Computing Arm CPUs:

This roadmap tells us the current state of past, present, and future Altra and AmpereOne processors. We added the codenames as well as designations for the Arm Neoverse cores used in the Altra line and our own designations for the homegrown Ampere Computing cores used in the AmpereOne line. (The company does not name its cores this way, and we are doing it in the hope of encouraging the practice.)

We talked about the 192-core, eight-channel AmpereOne chip a number of times over the past year, and back in April we talked about the variant of this chip with twelve DDR5 memory channels and the kicker chip with 256 cores and twelve memory channels (which we are calling Magnetrix, consistent, we suppose, with the company’s MX designation).

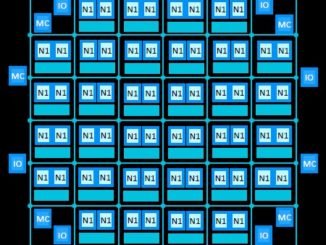

The big change with Aurora is that Ampere Computing is adding its own homegrown AI engine, presumably a tensor core that is more flexible than a flatter matrix multiplication engine, but Wittich is not saying. This Aurora chip will have up to 512 cores and presumably at least 16 memory channels, though it sounds like 24 channels would be better for balance. (A mix of HBM4 and DDR6 memory is possible but not likely given the high cost of adding HBM memory to a CPU.) This chip will also feature a homegrown mesh interconnect to hook CPU cores and AI cores together.
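One quick way to see why 24 channels would balance better is to compare how many cores share each memory channel across the lineup. This is a back-of-the-envelope sketch using the core and channel counts in this article; the two Aurora channel counts (16 and 24) are our speculation, not anything Ampere Computing has announced:

```python
from fractions import Fraction

# Core and channel counts for the AmpereOne line as described in this article.
# The two Aurora entries use speculative channel counts (16 and 24); Ampere
# Computing has not announced Aurora's memory configuration.
CHIPS = {
    "AmpereOne (192 cores, 8 channels)": (192, 8),
    "AmpereOne M (192 cores, 12 channels)": (192, 12),
    "AmpereOne MX (256 cores, 12 channels)": (256, 12),
    "Aurora, assumed 16 channels (512 cores)": (512, 16),
    "Aurora, assumed 24 channels (512 cores)": (512, 24),
}


def cores_per_channel(cores: int, channels: int) -> Fraction:
    """Return the exact number of cores sharing each DDR memory channel."""
    return Fraction(cores, channels)


if __name__ == "__main__":
    for name, (cores, channels) in CHIPS.items():
        ratio = cores_per_channel(cores, channels)
        print(f"{name}: {float(ratio):.1f} cores per channel")
```

With 24 channels, Aurora would land at the same ratio as the 256-core, twelve-channel AmpereOne MX (about 21.3 cores per channel). With 16 channels, each channel would be shared by 32 cores, a third more than even the original eight-channel AmpereOne. Exact fractions are used so that the two equal ratios compare as equal.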

We also think that the future A2+ and A3 cores, as we call them, will be getting more vector units, but probably not fatter ones. (AMD has decided to go with four 128-bit units in its “Genoa” Epyc 9004 series rather than one 512-bit vector or two 256-bit vectors.) AmpereOne and AmpereOne M have two 128-bit vectors for each core, and it would not be surprising to us to see four 128-bit vectors in each core for AmpereOne MX and AmpereOne Aurora. (See why we use codenames? That’s a lot of repetition there.) So there will be different levels of AI acceleration in these chips, and with Aurora, we do not think the vector units will be taken away from the A3 core to make room for more cores on a chiplet in a given process.
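To put numbers on “more vector units, but not fatter ones,” here is a rough tally of 32-bit floating point lanes per core and per chip. It is a sketch under our own assumptions: every vector unit is 128 bits wide, we count FP32 lanes only (128 / 32 = 4 per unit), and the four-unit configurations for AmpereOne MX and Aurora are our guesses, not announced specs:

```python
# Rough SIMD width tally for the vector configurations discussed above.
# Assumptions (ours, not Ampere's): 128-bit vector units, FP32 lanes only,
# and four units per core for AmpereOne MX and Aurora is speculative.
FP32_BITS = 32
UNIT_BITS = 128


def fp32_lanes_per_core(units: int, unit_bits: int = UNIT_BITS) -> int:
    """Total FP32 lanes across all of a core's vector units."""
    return units * unit_bits // FP32_BITS


def fp32_lanes_per_chip(cores: int, units: int) -> int:
    """Total FP32 lanes across every core on the chip."""
    return cores * fp32_lanes_per_core(units)


CONFIGS = [
    ("AmpereOne, 2 x 128-bit", 192, 2),
    ("AmpereOne MX, 4 x 128-bit (speculative)", 256, 4),
    ("Aurora, 4 x 128-bit (speculative)", 512, 4),
]

if __name__ == "__main__":
    for name, cores, units in CONFIGS:
        print(f"{name}: {fp32_lanes_per_core(units)} lanes/core, "
              f"{fp32_lanes_per_chip(cores, units)} lanes/chip")
```

Four 128-bit units add up to the same 512 bits of width as a single 512-bit unit; splitting that width into narrower units trades width per instruction for more independent vector instructions in flight, which is the usual argument for the narrower design.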

And perhaps most interestingly, the Aurora chip will remain air-cooled, even with a big fat AI engine (probably in a discrete chiplet) inside the package.

“These solutions have to be air cooled,” Wittich explained during a prebriefing on the roadmap and the microarchitecture of the Polaris chip, which we will cover separately. “These need to be deployable in all existing datacenters, not just a few datacenters operating at a couple of locations, and they have to be efficient. There’s no reason to back off of the industry’s climate goals. We can do both – we can build out AI compute and we can meet our climate goals.”

This is a tough challenge, as Wittich went over in his briefing. According to the Uptime 2023 Global Datacenter Survey, 77 percent of datacenters have a maximum power draw per rack of less than 20 kilowatts, and 50 percent have a maximum power draw of under 10 kilowatts. A rack of GPU accelerated systems, which has to be liquid cooled, comes in at around 100 kilowatts these days, and people are talking about pushing densities to drive down latencies between devices and nodes, which will drive that up to 200 kilowatts. Wittich has been in serious discussions with companies that want to drive 1 megawatt per rack and somehow cool it.
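To put that gap in perspective, here is the arithmetic of how many 10 kilowatt rack budgets (the ceiling for half of the datacenters in the survey) each of those dense racks would eat. It is a back-of-the-envelope sketch that ignores cooling overhead and power distribution losses, and the labels are ours:

```python
# How many cap-limited rack budgets one dense rack consumes.
# The 10 kW cap is the survey figure cited above (half of datacenters sit
# under it); the dense rack power figures are the ones mentioned in the text.
TYPICAL_RACK_CAP_KW = 10

DENSE_RACKS_KW = {
    "GPU rack today": 100,
    "denser GPU rack": 200,
    "rack under discussion": 1_000,  # 1 megawatt
}


def typical_racks_equivalent(rack_kw: float,
                             cap_kw: float = TYPICAL_RACK_CAP_KW) -> float:
    """Number of cap-limited racks whose combined budget equals one dense rack."""
    return rack_kw / cap_kw


if __name__ == "__main__":
    for label, kw in DENSE_RACKS_KW.items():
        ratio = typical_racks_equivalent(kw)
        print(f"{label}: {kw} kW = {ratio:.0f}x a {TYPICAL_RACK_CAP_KW} kW rack")
```

A single 100 kilowatt GPU rack needs the power budget of ten such racks, and a 1 megawatt rack would need a hundred, which is why an air-cooled part that fits existing rack budgets matters to most datacenters.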

We live in interesting times. But most of the corporate IT world will have none of that, and as we have pointed out before, Ampere Computing might have started out trying to make Arm server processors that the hyperscalers and cloud builders would buy, but it may end up selling most of its processors as a backup to homegrown efforts and to enterprises that can neither afford to design their own chips nor have the skills to do so. Ampere Computing can position itself as the safe Arm server chip choice for those who cannot do their own Arm chips but who still want the benefits of a cloud-native Arm architecture.

Aurora might be just the right kind of chip for a lot of companies needing to do cheaper computing and AI on the side.

One last thing. Ampere Computing has a lot of ex-Intel people among the top brass, and wouldn’t it be funny if Intel Foundry rather than Taiwan Semiconductor Manufacturing Co ended up etching the Aurora chip?
