When people think of supercomputers, they think of a couple of different performance vectors (pun intended), but usually the first thing they think of is the performance of a big, parallel machine as it runs one massive job scaling across tens of thousands to hundreds of thousands of cores working in concert. This is what draws all of the headlines, such as simulating massive weather systems or nuclear explosions or the inner workings of a far-off galaxy.

But a lot of the time, supercomputers are not really used for such capability-class workloads, but rather are used to run lots and lots of smaller jobs. Sometimes these groups of simulations are related, as in doing weather or climate ensembles that tweak initial conditions of their models a bit to see the resulting statistical variation in the prediction. Sometimes the jobs are absolutely independent of each other and at a modest scale – on the order of hundreds to thousands of cores – and the supercomputer is more like a timesharing number-crunching engine, with job schedulers playing Tetris with hundreds of concurrent simulations, all waiting their turn on a slice of the supercomputer.

This latter scenario is just as important as the former in the HPC space, and a lot of important science is done at this more modest scale, which does not correlate to the complexity of the models being run. One such job at the National Center for Atmospheric Research in the United States is called the Community Earth System Model, the follow-on to the Community Climate Model for atmospheric modeling that NCAR created and gave away to the world back in 1983. Over time, land surface, ocean, and sea ice models were added to this community model, and other US government agencies, including NASA and the Department of Energy, got involved in the project. These days, the CESM application is funded mostly by the National Science Foundation and is maintained by the Climate and Global Dynamics Laboratory at NCAR.

NCAR, of course, is one of the pioneers of supercomputing as we know it, the history of which we discussed at length more than six years ago when the organization announced the deal with Hewlett Packard Enterprise to build the 5.34 petaflops “Cheyenne” supercomputer in the datacenter outside of the Wyoming city of the same name. NCAR was an early adopter of supercomputers from Control Data Corporation (of which in 1957 Seymour Cray was a co-founder with William Norris after both got tired of working at Sperry Rand) and Cray Research (which was created in 1972 when the father of supercomputing got tired of not running his own show), and so it is entirely within character for NCAR to be looking at cloudy HPC and seeing what it can and cannot do.

And to make a point that cloud HPC can offer benefits over on-premises equipment, Brian Dobbins, a software engineer at the Climate and Global Dynamics Laboratory, presented some benchmark results running CESM on both the internal Cheyenne system and on the Microsoft Azure cloud, which as much as anything else shows the benefits of having more modern iron run applications as well as proving that the cloud can be used for running complex climate models and weather simulations.

As we have pointed out a number of times, HPC and AI have a lot of similarities, but there is one key difference that always shas to be considered. HPC takes a small dataset and explodes it into a massive simulation of some physical phenomenon, usually with visualization because human beings need this. AI, on the other hand, takes an absolutely massive amount of unstructured, semi-structured, or structured data, mashes it up, and uses neural networks to sift through it to recognize patterns and boils that all down to a relatively simple and small model through which you can run new data. So, it is “easy” to move HPC up to the cloud (in this one sense), but the resulting simulations and visualizations can be quite large. And, to do AI training, if your data is in the cloud, that’s where you have to do the training because the egress charges will eat you alive. Similarly, if the results of your HPC simulations are on the cloud, they are probably going to stay there and you will be doing your visualizations there, too. It is just too expensive to move data off the cloud. Either way, HPC or AI, organizations have to be careful about where the source and target data is going to end up and how big and expensive it is going to be to keep it and move it.

None of this was part of the benchmark discussion that Dobbins gave, of course, as part of the Microsoft presentation on running HPC on Azure.

On the Cheyenne side, the server nodes are equipped with a pair of 18-core “Broadwell” Xeon E5-2697 v4 processors running at 2.3 GHz; the nodes are connected through 100 Gb/sec EDR InfiniBand switching from Mellanox (now part of Nvidia).

The Azure cloud setup was based on the HBv3 instances, one of several configurations of the H-Series instances available from Microsoft and aimed at HPC workloads. The HBv3 instances are aimed at workloads that require high bandwidth and have 200 Gb/sec EDR InfiniBand ports on their nodes, which top out with a two-socket machine based on a pair of AMD “Milan” Epyc 7003 series chips (we don’t know which one), each with 60 cores activated, running at a peak 3.75 GHz (according to Microsoft) but we think that is only max boost speed with one core activated and with all cores running you are looking at more like 2 GHz. Microsoft did say in its presentation that the HBv3 node delivered 8 teraflops of raw FP64 performance across those two sockets. Unless there is some overclocking going on, this 8 teraflops across the node seems a bit high to us – we expected somewhere around 7.68 teraflops with 120 cores at 2 GHz and 32 FP64 operations per clock per core. Anyway, when we do the math on those old Broadwell chips inside of Cheyenne, which can do 16 FP64 operations per clock per core, we get a mere 1.32 teraflops of peak theoretical performance per node.

So, yeah, the Azure stuff had better do a lot more work. Oddly enough, it doesn’t do as much as you might think based on these raw specs. Which just goes to show you that it always comes down to how code can see and use the features of the hardware – or can’t without some tuning.

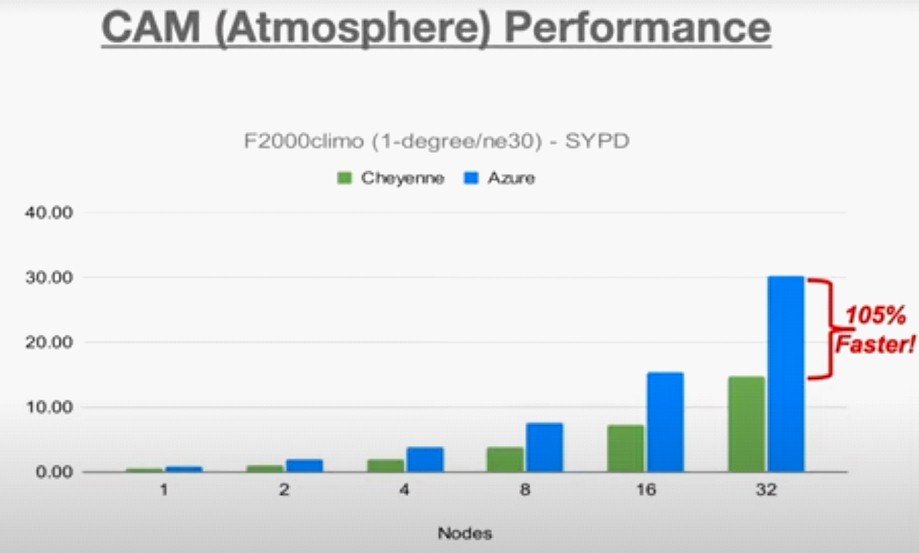

Here is the relative performance of the Community Atmosphere Model, which Dobbins says is one of the most computationally intensive parts of the CESM stack:

The ne30 in that chart refers to a one degree resolution in the model, and F2000climo means running from 1850 (before the rise of the Industrial Revolution) to 2000 (which is apparently the Rise of the Machines, but we don’t know for sure yet).

The X axis shows the number of Cheyenne nodes or Azure HBv3 instances (which are also nodes in this case) running the climate model, and the resulting number of simulated years per day that each set of machines can do. In all cases – big surprise – Azure beats Cheyenne. With 2X the interconnect bandwidth and 5.8X the raw 64-bit floating point compute, Azure had better beat Cheyenne. But it only beats it by a factor of 2X or so, but Dobbins says that NCAR is pushing the CAM model within CESM up to 35 simulated years per day running on a larger number of Azure nodes. He didn’t say how many more, but our eye says around 40 Azure nodes should do it. And our eye also sees that 80 modes isn’t going to give you 70 simulated years per day, either. CAM doesn’t scale that way – at least not yet.

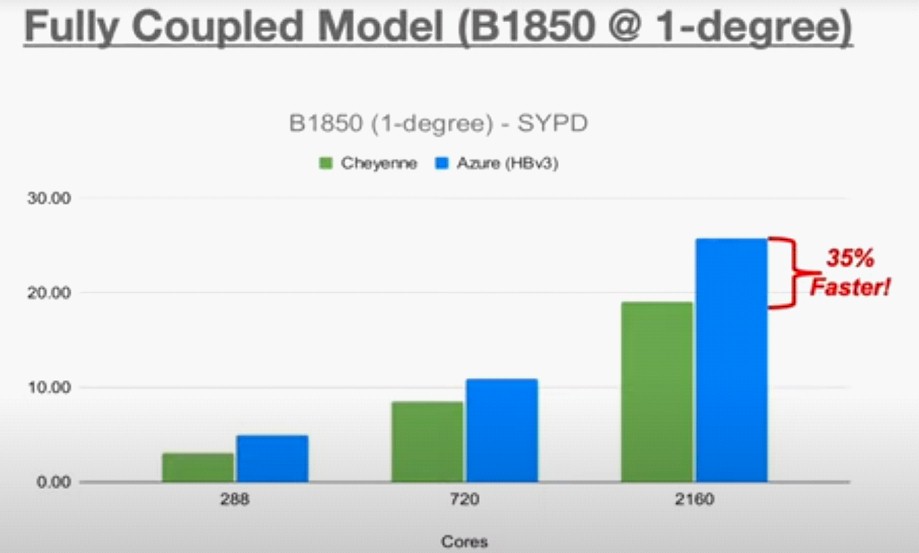

Simulating one part of the climate, such as the atmosphere, is one thing, but being able to run all kinds of models at the same time for different Earth systems is where the real sweat starts coming out of the systems, and on such a test, Cheyenne doesn’t do nearly as badly as you might expect:

This B1850 simulation, which means having 1850 as the initial start condition to the climate model, brings atmosphere, ocean, land, sea, ice, and river runoff models all together.

“It’s way complex and load balancing this is a big challenge, and so for the moment we ran identical configurations on Cheyenne and Azure to see how it faired,” Dobbins explained. “These results represent a low water mark, and with tuning we expect we will be able to run Azure faster.”

As you can see, as you scale the number of cores up, both machines scale their performance on the full CESM model, and with 2,160 cores, the Azure setup does 35 percent more work (in terms of years simulated per day of runtime) compared to the Cheyenne system. It is hard to say how hard the tuning can be pushed. Each part of the CESM simulation is different, and obviously did not, in the aggregate, behave like the CAM portion did.

We are not for or against any particular platform here at The Next Platform, and we love them in the past, in their time, as much as we love the ones coming down the pike. What we certainly are for is the wise spending of government funds and we are definitely for more accurate climate models and weather forecasts. So inasmuch as Azure can help NCAR and other weather centers and government organizations in accomplishing this, great.

But none of this comparison talks about money, and that is a problem. There is no easy way from the outside to compare the cost of the runs on Cheyenne to the cost of the runs on Azure because we don’t have any of the system, people, and facilities cost data attributable to Cheyenne over its lifetime to try to figure out the cost per hour of compute. But make no mistake: It would be fascinating to see what the per-minute cost of running an application on Cheyenne really is, with all of the overheads burdened in, compared to the cost of lifting and shifting the same job to Microsoft Azure, Amazon Web Services, and Google Cloud. This is the real hard math that needs to be done, and such data for any publicly funded infrastructure should be freely and easily available so we can all learn from this experience.

Thanks for the article on this, Timothy. You covered everything pretty well, but I’m happy to answer any questions people might have. And, as you pointed out, when we get around to tuning it, I expect performance to increase even further. We hit the 35+ simulated years per day (SYPD) mark on the CAM-SE ne30 run at 45 nodes, but in theory we can scale this out to 90 nodes, and I intend to try at some point.

Right now, though, our main focus is actually on enabling the wider community outside of NCAR to also run CESM in the cloud easily. The model gets a lot of use within NCAR, obviously, but it also gets a lot of use in research and industry across the world, and often times that use is more sporadic vs the constant use on our systems, and sporadic use is often a good fit for the cloud. We’re a bit further ahead with our AWS efforts here, but Azure will be supported too. Like you, we at NCAR like ALL platforms and supporting multiple clouds is part of that. The more science, the better!

In terms of cost, there are two different aspects that warrant focus – first, we intend for our cloud tools to show real-time pricing of runs, and by default, will launch systems into vendors & regions that offer the best pricing. That helps with competition between cloud vendors, but can still be a challenge if data needs to be moved later. But what about on-prem vs cloud? Realistically, the cloud is not competitive with NCAR’s systems there. If you want to do a back-of-the-envelope calculation, public sources say our incoming system, Derecho, cost between $35M – $40M. It’s part CPU, part GPU, and I don’t even know the breakdown of the costs, but let’s assume 90% of the cost is CPU nodes, and add a bit for power, and estimate a lifetime of 5 years, and divide the cost per hour by the number of cores, and you’ll get something hovering around $0.003 per hour. The advertised AMD Zen3 cloud nodes, from both AWS and Azure, are about 10x that with on-demand pricing, at a still-not-bad $0.03/core-hour. That’s CPU alone, and doesn’t include people or facilities, but it’s not enough to make the cloud competitive with our own systems. Over time, as our systems age and clouds bring new hardware online, it gets closer, but the edge is still very much given to in-house systems under constant, full load.

That said, thankfully we don’t need to have the cloud be competitive to our internal systems. We view the cloud as complementary to them, not a replacement for them. Our systems are free to use for NSF-funded earth system research -no charging for time!- but there’s a whole community of users out there who don’t fit into that category, or have ‘burst’ usage patterns, or need the immediate, on-demand nature of the cloud. Those are where I see it being useful for CESM, with the added bonus of the cloud enabling preconfigured platforms – just launch and you’re ready to run CESM, no porting or complex configuration needed.

If anyone has questions, I’m happy to answer them. We’ve actually been working with both AWS and Azure, and both teams have been incredibly helpful. We’re looking forward to helping the research community easily use their platforms for science.

Now that, Brian, is a freaking comment. People, that is how it is done. It is what I expected, and you saved me a lot of hassle with my own estimating, which I just did not have the energy for today thanks to a blasted headcold. My math has shown consistently that cloud — I can’t bring myself to say public cloud anymore — costs something like 5X to 10X more than on premises gear and that is if you can keep clusters busy on premises enough to drop the price. Run it at 50 percent utilization, and it is really costing you twice as much.

Formatting for that prior comment got all messed up. Oops. If it can be edited, great.

This is an awesome article and Thanks, Brian, for an awesome postscript comment. I wish I had taken that fluid dynamics class in school but I least I know what a Reynolds number is.