Sponsored Post: As in so many other aspects of life, not all compute workloads are created equal. Getting the best out of them takes a more nuanced approach, one that brings a more potent balance of hardware and software into the mix.

That means the international race to pack in ever more CPU cores could be over, because cores alone no longer cut it when it comes to meeting datacenter, network and edge processing requirements. Simply throwing more of them at the problem means that at some point performance and performance per watt will plateau, and neither can be raised further without the additional boost provided by dedicated accelerators.

Nor can clock frequency be relied on as the primary indicator of which processor delivers the best performance on today's CPU-intensive workloads. For certain real-world applications, particularly those involving AI/ML, you could get more sustained performance from a CPU running at a lower clock frequency, because its architecture is built to do more work per clock cycle. It accomplishes more even with fewer cycles per second.
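As a rough first-order model (an assumption here that ignores memory stalls, turbo behaviour and other real-world effects), sustained throughput is clock frequency multiplied by the useful work completed per cycle. The figures below are hypothetical, chosen only to show the arithmetic, and are not measurements of any Xeon part:

```latex
\text{throughput} \approx f_{\text{clock}} \times W_{\text{cycle}}

\begin{aligned}
\text{CPU A: } & 3.5\ \text{GHz} \times 16\ \text{ops/cycle} = 56 \times 10^{9}\ \text{ops/s} \\
\text{CPU B: } & 2.5\ \text{GHz} \times 32\ \text{ops/cycle} = 80 \times 10^{9}\ \text{ops/s}
\end{aligned}
```

Under these made-up numbers, CPU B runs about 29% slower in clock terms yet completes roughly 43% more work per second, because it doubles the work done per cycle.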

Imagine digging sand out of a pit by hand, for example. If you get a shift on, you can manage about ten scoops per minute. But when a commercial excavator rolls in, its much bigger bucket carries a massive payload per scoop, so even at fewer scoops per minute it moves far more tons of sand.

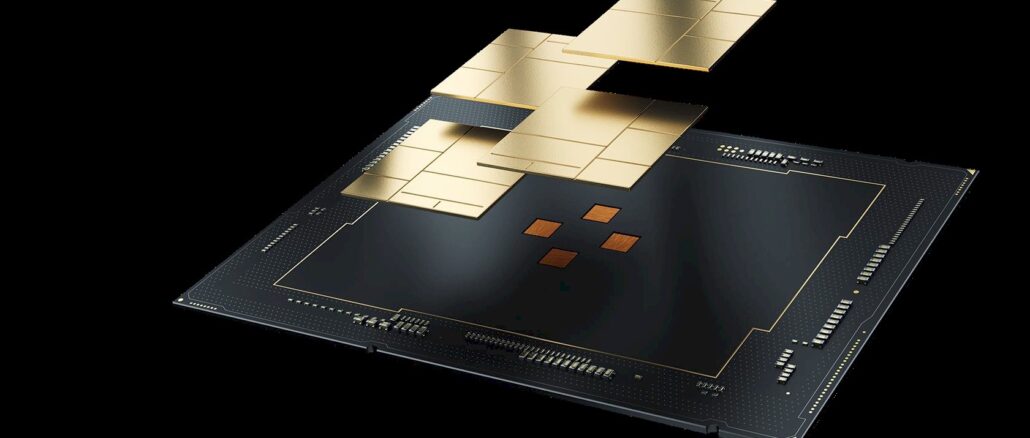

This is broadly the direction Intel has taken with the evolution of its 3rd and 4th Gen Xeon CPUs over the last few years, and the company will continue that workload-first approach to innovation, design and delivery in future processors too. It's no longer so much about individual parameters like core count or clock frequency as about a combination of workload performance, power efficiency, sustainability, total cost of ownership and performance management.

The metric you use to measure efficiency makes all the difference. Whether it's workload performance per watt, inferences per second per watt (or per dollar), or inferences per second per mm² of silicon, each is a valid calculation, and choosing hardware against the right one can make a huge difference to overall application speed.
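To make the point concrete, here is a minimal Python sketch that ranks three configurations by each of the metrics above. Everything in it is hypothetical: the `Config` class, the configurations A, B and C, and their throughput, power, price and die-area figures are invented for illustration and describe no real Xeon SKU. The only thing it demonstrates is that the "best" choice can change with the metric.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Config:
    """A hypothetical processor configuration; all figures are made up."""
    name: str
    inferences_per_sec: float
    watts: float
    price_usd: float
    die_area_mm2: float


def per_watt(c: Config) -> float:
    """Inferences per second per watt."""
    return c.inferences_per_sec / c.watts


def per_dollar(c: Config) -> float:
    """Inferences per second per dollar of hardware cost."""
    return c.inferences_per_sec / c.price_usd


def per_mm2(c: Config) -> float:
    """Inferences per second per mm^2 of silicon."""
    return c.inferences_per_sec / c.die_area_mm2


METRICS = {"per watt": per_watt, "per dollar": per_dollar, "per mm2": per_mm2}


def best_by_metric(configs: list[Config]) -> dict[str, str]:
    """Return, for each metric, the name of the config that scores highest."""
    return {label: max(configs, key=fn).name for label, fn in METRICS.items()}


# Invented example inputs, chosen so that each metric picks a different winner.
configs = [
    Config("A", inferences_per_sec=1000, watts=250, price_usd=5000, die_area_mm2=280),
    Config("B", inferences_per_sec=1500, watts=350, price_usd=9000, die_area_mm2=450),
    Config("C", inferences_per_sec=1200, watts=300, price_usd=4000, die_area_mm2=600),
]

if __name__ == "__main__":
    for label, name in best_by_metric(configs).items():
        print(f"best {label}: {name}")
```

With these made-up inputs, B wins on inferences per second per watt, C on inferences per second per dollar, and A on inferences per second per mm², which is why it pays to settle on the metric that matters for a given deployment before comparing processors.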

Stop focusing on core count alone and pay more attention to the other components that boost performance, such as the workload accelerators built into Intel Xeon processors.

You can read more about how the accelerators on Xeon CPUs are supplementing the cores to enhance workload performance here.

Sponsored by Intel.

It would be great to see some hard performance and efficiency numbers from Sunspot and Borealis on standard HPC workloads (HPL, HPCG, etc., scaled appropriately), with and without acceleration, to get a better handle on these "excavators": something beyond (more detailed than) the 1.56x, 7.3x and 4.1x of the product brief, if at all possible.

I would suggest the paper by Shipman, Swaminarayan, Grider, Lujan, and Zerr, on “Early Performance Results on […] Sapphire Rapids with HBM”, available at arxiv.org.