As more enterprises embrace hybrid and multicloud strategies and begin to extend their IT reach out to the edge, scale becomes an issue. Red Hat, a longtime player in the datacenter thanks to its Linux distribution and related systems software, has worked hard over the past decade to establish itself in the hybrid cloud world. The effort worked well enough to compel IBM to spend $34 billion in 2019 to buy the company in a bid to change its own future.

Red Hat customers that are moving into the cloud are at different stages, but many are trying to figure out how to manage their way through a world of clouds and edges, containers and Kubernetes.

“The majority of them are now saying they adopted containers first … then some of them might have directly gone to Kubernetes at some point,” Tushar Katarki, director of product management for OpenShift Core Platform at Red Hat, tells The Next Platform. “Now it’s all good. ‘We get the application portability, we get the agility with containers and Kubernetes. We can optimize our resources, we get to deploy it on various clouds. But now we have a proliferation of this. We have had some good success with this. So that means I have to scale everything. I have to scale my clusters. I have to scale the number of users. I have to scale the number of applications. I have to scale our operations.’ We have been on this journey to help with that. … ‘How do I scale operations? How do I scale applications? How do [I] onboard more applications in this so-called digital transformation and modernization in more cloud-native apps?’”

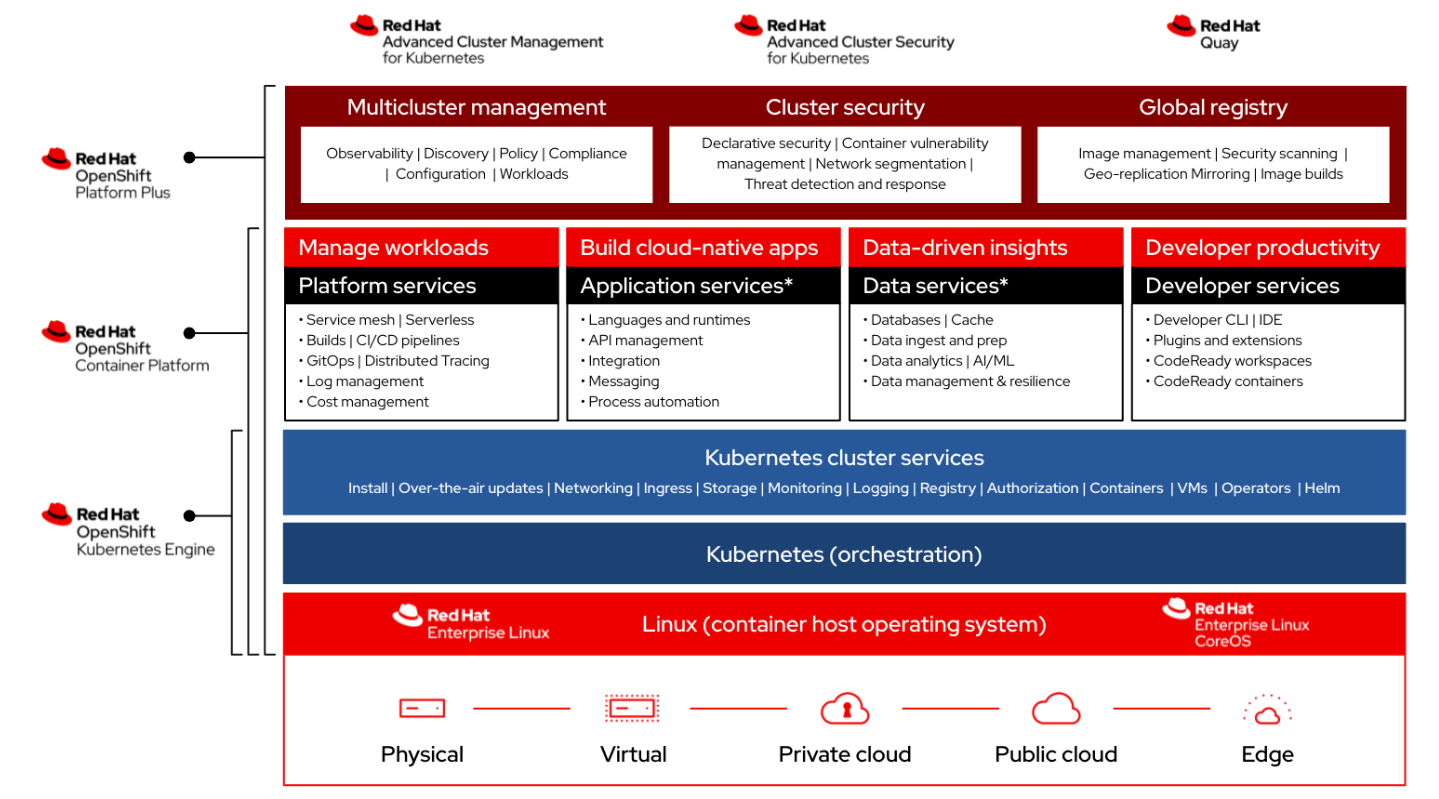

A year ago, the vendor introduced OpenShift Platform Plus, offering enterprises looking to wrangle their rapidly expanding IT environments what the name implies: a single hybrid cloud platform that brings together various OpenShift features so organizations can more easily run their applications in the cloud and at scale. It includes not only Red Hat’s OpenShift Kubernetes platform but also Advanced Cluster Management (ACM); Advanced Cluster Security (ACS, technology inherited from Red Hat’s acquisition of StackRox last year); OpenShift Data Foundation, which provides software-defined storage and data services optimized for OpenShift; and Quay, a central container registry.

It touched on everything, Katarki says.

“OpenShift Platform Plus really was about how you manage and secure and be compliant at a scale when I talk about OpenShift clusters, if I’m the admin, if I’m a compliance officer, if I am a security officer,” he says. “But it’s also as an application tool that I would like [to] deploy and manage at scale.”

This week Red Hat is unveiling the latest iteration of OpenShift Platform Plus, which includes updates to OpenShift (version 4.11), ACM (2.6) and OpenShift Data Foundation (4.11). Much of the focus is on enabling organizations to keep scaling their IT operations even as they stretch out to the cloud and the edge. The edge is increasingly a key factor in enterprise IT as more infrastructure, applications and technologies (think AI and analytics) are pushed out to where the data is generated, whether in mobile devices, far-flung sensors or other systems well beyond the walls of the core datacenter.

For some time, Red Hat has offered a three-node cluster, the smallest supported cluster size that combines control plane and worker nodes. Last year, with OpenShift 4.9, the company introduced a full OpenShift deployment on a single node, giving organizations a third option for the edge alongside the three-node cluster and remote worker topologies. Red Hat had said that ACM could create and manage up to 2,500 single-node OpenShift clusters at the edge using zero-touch provisioning, and it is now making that capability generally available, Katarki says.

Scale also refers to the range of workloads that can run on the platform, he says. In that sense, Red Hat is helping Nvidia expand the reach of its AI Enterprise software suite, which was unveiled last year and which the chip maker describes as a complete portfolio of AI and machine learning tools optimized to run on its GPUs and other hardware. Earlier this year, Red Hat and Nvidia talked about strengthening their relationship and the integrations between OpenShift and Nvidia’s AI Enterprise 2.0.

“That was only available on bare-metal and on-prem environments,” Katarki says. “Now, with 4.11, this is being extended to public cloud. This, again, is about scale in a different way. This also ties into that hybrid cloud. OpenShift has always been about, no matter where – on-prem or on any public cloud – you will have OpenShift and now you will have Nvidia AI Enterprise that is certified and it really doesn’t matter whether you run it … your data scientists are going to see the same experience from an AI perspective on those platforms.”

Similarly, OpenShift can now be installed directly from the Amazon Web Services and Azure marketplaces, giving enterprises more options for accessing it and paying for it. Red Hat expects to extend that availability to other public clouds, including Google Cloud, he says.

As IT becomes more distributed and data and applications are created and accessed outside datacenter firewalls, security becomes an increasingly important factor that also needs to scale. The “shift-left” movement in software development means addressing security earlier in the development cycle. OpenShift 4.11 includes pod security admission integration, which lets organizations define isolation levels for Kubernetes pods so that pod behavior is more consistent. It also supports sandboxed containers on more architectures, including on AWS and on single-node OpenShift. Sandboxed containers add isolation layers for workloads, even at the edge.
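The article does not show any configuration, but in upstream Kubernetes, pod security admission is driven by labels on a namespace: each label names a mode (enforce, audit or warn) and a Pod Security Standards level (privileged, baseline or restricted). As a minimal sketch, assuming a plain upstream Kubernetes setup, the Python below builds such a Namespace manifest. The helper `namespace_with_psa` and the namespace name `edge-apps` are hypothetical, and OpenShift layers its own security context constraints on top, which this sketch ignores.

```python
import json

# Pod Security Standards levels recognized by Kubernetes pod security admission,
# ordered from least to most restrictive.
PSA_LEVELS = ("privileged", "baseline", "restricted")

# Admission modes: enforce rejects violating pods, audit records violations
# in the audit log, warn returns a warning to the client.
PSA_MODES = ("enforce", "audit", "warn")


def namespace_with_psa(name: str, level: str, version: str = "latest") -> dict:
    """Build a Namespace manifest labeled for pod security admission.

    Hypothetical helper for illustration: applies the same level in every
    mode and pins each mode to the given policy version.
    """
    if level not in PSA_LEVELS:
        raise ValueError(f"unknown pod security level: {level!r}")
    labels = {}
    for mode in PSA_MODES:
        labels[f"pod-security.kubernetes.io/{mode}"] = level
        labels[f"pod-security.kubernetes.io/{mode}-version"] = version
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": name, "labels": labels},
    }


if __name__ == "__main__":
    manifest = namespace_with_psa("edge-apps", "restricted")
    # kubectl accepts JSON manifests, so this output can be piped to `kubectl apply -f -`.
    print(json.dumps(manifest, indent=2))
```

Applying one level across all three modes keeps the sketch short; in practice teams often warn and audit at a stricter level than they enforce while migrating workloads.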

“Security is not just about at runtime, but it is about how to think about security at all stages of your software production and bringing that to as a service,” Katarki says. “A practical example of that is it’s no longer enough to see that you have a private network for your organization and ingress and egress to that network has been secure. The other change that I’ve seen as containers and Kubernetes [have become commonplace is] those security officers and others had to learn about this new technology. They grew up in the age of Unix or they maybe have grown up with the age of a VM server, but what does it mean in terms of containers and Kubernetes and how do I look at it? How does this look the same as before so I can use some of this experience? How is it different? I get a lot of questions about awareness, the desire to know how Kubernetes and OpenShift is secured. The multi-dimensionality of it, the shifting left of security and security is everywhere; it’s everybody’s job to be secure.”

Along with that comes automation, he says. Organizations now have hundreds of clusters and thousands of containers and machines; managing all that manually is impossible.

“The idea really is, how do you observe it? How do you secure it? How do you do this at scale?” he says. “We introduced this concept of a Compliance Operator and that’s where ACM and ACS and this idea that such a platform provides this security and governance at scale is one of the major things. We talk about 2,500 nodes [being managed by ACM]. How do you secure them? Those are all tied together with OpenShift Platform Plus.”
