Nvidia and VMware have forged a tight partnership when it comes to bringing AI to the enterprise, which stands to reason given the prevalence of VMware’s ESXi hypervisor and vSphere management tools across more than 300,000 companies worldwide. But there is another important server virtualization and container platform provider: Red Hat.

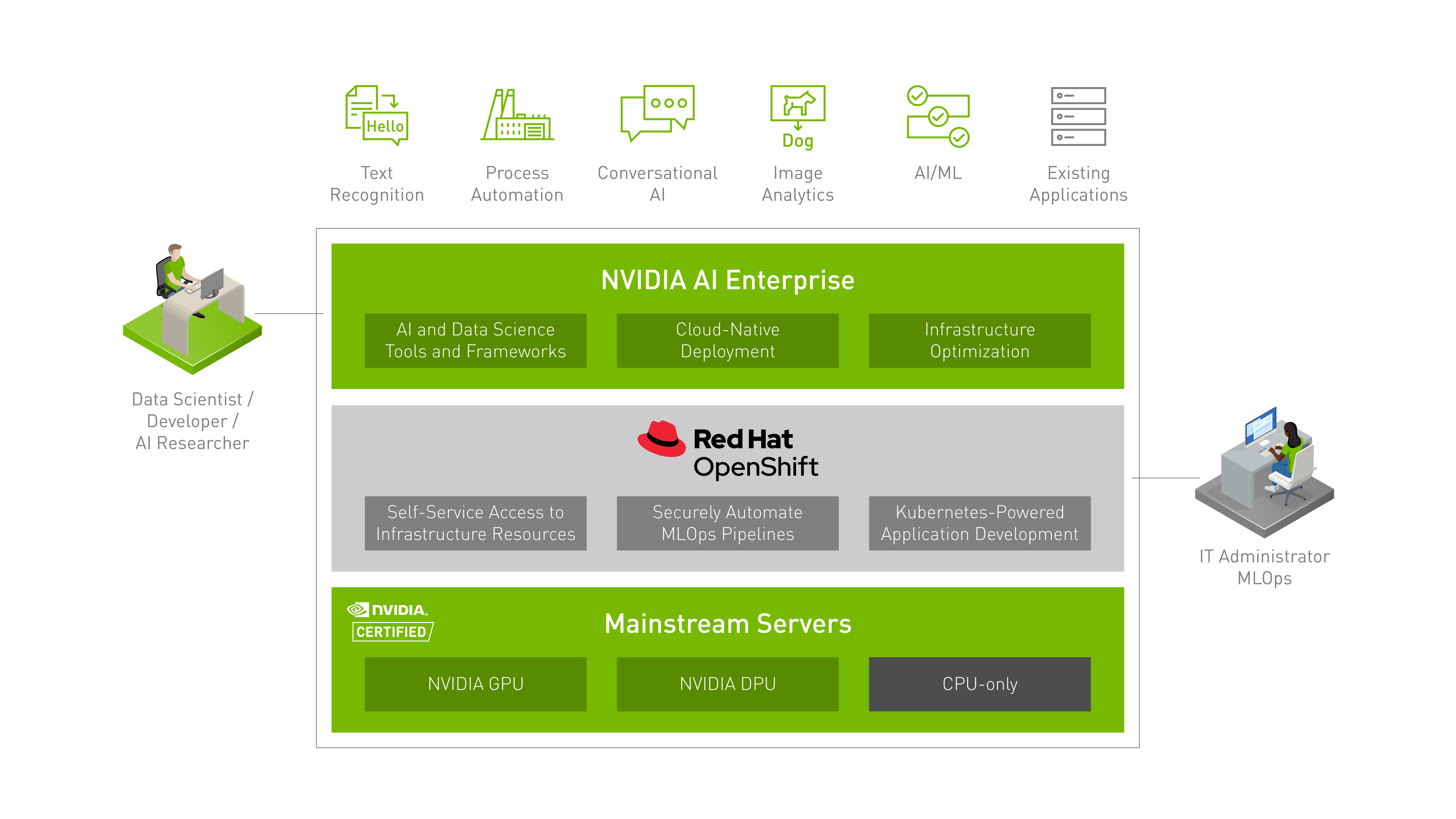

If you are a tech industry giant that wants to put a greater spotlight on what your technologies can do for enterprises that want to run AI workloads, you could do worse than make those statements at Nvidia’s biannual GPU Technology Conference. That is what Red Hat is doing this week. It is highlighting tighter integrations between its OpenShift Kubernetes platform and Nvidia’s AI Enterprise 2.0, the software suite Nvidia introduced last year to make AI capabilities available to a wider range of enterprises, and it is introducing the latest release of OpenShift. Making AI and machine learning technologies available to more companies, rather than only to research labs, educational institutions and the largest organizations, has been an ongoing effort for many in IT over the past few years.

Red Hat is among those vendors.

“There has been a lot of adoption, but when we talk about adoption, if you think about these early adopters, these are the innovators,” Tushar Katarki, director of product management for OpenShift Core Platform at Red Hat, tells The Next Platform. “The work now is trying to get the technology mainstream. People see the advantages of it. As we try to get to mainstream, one of the biggest challenges [is one] that any typical enterprise will face in regards to software. It has to scale, it has to be secure, it has to be compliant. Then they have to integrate it into existing processes. Then there is AI and machine learning itself, which is in some ways unique in that it requires a lot of compute. There are data scientists who want services that are easy to use.”

Red Hat points to Gartner numbers showing that worldwide AI software revenue will hit $62.5 billion this year, a 21.3 percent year-over-year increase, and notes that enterprises increasingly want to integrate AI and machine learning capabilities into their cloud-native applications. OpenShift is the kind of scalable and flexible platform needed to deploy these applications quickly, the company says.

Combining OpenShift more tightly with the work Nvidia has done over the years (not only with its GPUs but also with its CUDA software platform, its RAPIDS software libraries, hardware offerings such as the DGX A100 AI-optimized system, and AI Enterprise 2.0) is what lets these workloads scale. AI Enterprise already supports VMware’s Tanzu Kubernetes offering.

“Once you have trained your model, once you have played with your data, trained your model, your hyper-parameter tuning, what have you, now you want to express it,” Katarki says. “You want to make it available as a tool to the developers so that they can incorporate it into their applications. What is the cloud-native way to do it? It’s to basically make it a cloud-native application with the REST API. All of this runs on top of OpenShift with [Nvidia AI Enterprise]. … That is certified, that is tested and that is joint support for customers.”

OpenShift is certified and supported with AI Enterprise 2.0, and the software suite is optimized for the Kubernetes platform. With OpenShift now supported on the DGX A100 server, organizations can consolidate their machine learning operations (data engineering, analytics, training, software development and inference) onto a single system.

All of this builds on the work the two vendors have already done together, including enabling Nvidia GPUs to support Red Hat’s OpenShift Data Science cluster and the planned extension of the OpenShift Data Center Cloud Service to include Nvidia’s AI Enterprise.

“This allows the AI and machine learning to scale,” Katarki says. “OpenShift itself is a cloud platform, is a DevOps platform, is a container platform, depending on what abstraction level you [are] at. You are managing the best of data science and the best of cloud, and DevOps and cloud-level development, and you’re bringing that to our customers.”

At the same time, Red Hat is adding functionality to the latest release of OpenShift, version 4.10, that is designed to make it easier to run AI and machine learning workloads, including in hybrid cloud environments. The release also includes two further steps with Nvidia. The first is making OpenShift available on Nvidia’s LaunchPad, a private AI infrastructure platform introduced last summer and expanded in November that gives organizations short-term access to Nvidia AI tools. LaunchPad essentially offers Nvidia’s GPUs and AI Enterprise on OEM systems as a service. With OpenShift on board, LaunchPad users can now work with the Kubernetes platform as well.

For hybrid clouds, OpenShift can now run on Arm processors, both on Amazon Web Services (AWS), using the full-stack automated installer-provisioned infrastructure (IPI) method, and on bare metal, using user-provisioned infrastructure (UPI) on existing infrastructure. OpenShift on Arm offers a good price-performance story, but that is not its only benefit, Katarki says.

“What we’re introducing with 4.10 is OpenShift on Arm on AWS and OpenShift on Arm on bare metal,” he says. “That really is a hybrid cloud play. It is going from something that you might develop in the core, on AWS, and then you might want that workload to run as a container on the edge. That edge device is typically what I would call bare metal. It could be virtualized, too, but that’s how we think about the hybrid cloud. But OpenShift is a platform. That becomes a major way in which we are tying machine learning, hybrid cloud and edge concepts together.”

OpenShift 4.10 also adds IPI support for Microsoft Azure Stack Hub and IBM Cloud, as well as for Alibaba Cloud as a technology preview. With IPI, enterprises get a fully automated, integrated, single-click installation of OpenShift 4.
