Adam Selipsky spent 11 years helping Andy Jassy build Amazon Web Services from a fledgling compute and storage utility to the world’s largest public cloud services provider before leaving in 2016 to become CEO of analytics software maker Tableau Software. When Selipsky returned in May as AWS CEO – replacing Jassy, who replaced Jeff Bezos as chief executive officer of Amazon – Selipsky took the reins of a behemoth that in the most recent financial quarter accounted for almost all of Amazon’s profits and continues to reign at the top of an increasingly competitive public cloud market.

In the quarter that ended in September, AWS saw sales jump year-over-year by 38.9 percent – to $16.11 billion – and operating income hit $4.88 billion, up 38.1 percent. While the mother ship was rocked by costs associated with the COVID-19 pandemic, AWS – like most cloud services providers – saw business accelerate, given the almost overnight shift to remote work and enterprises ramping up their shift to the cloud. It is a business with more than 200 services and more than 475 types of cloud instances.

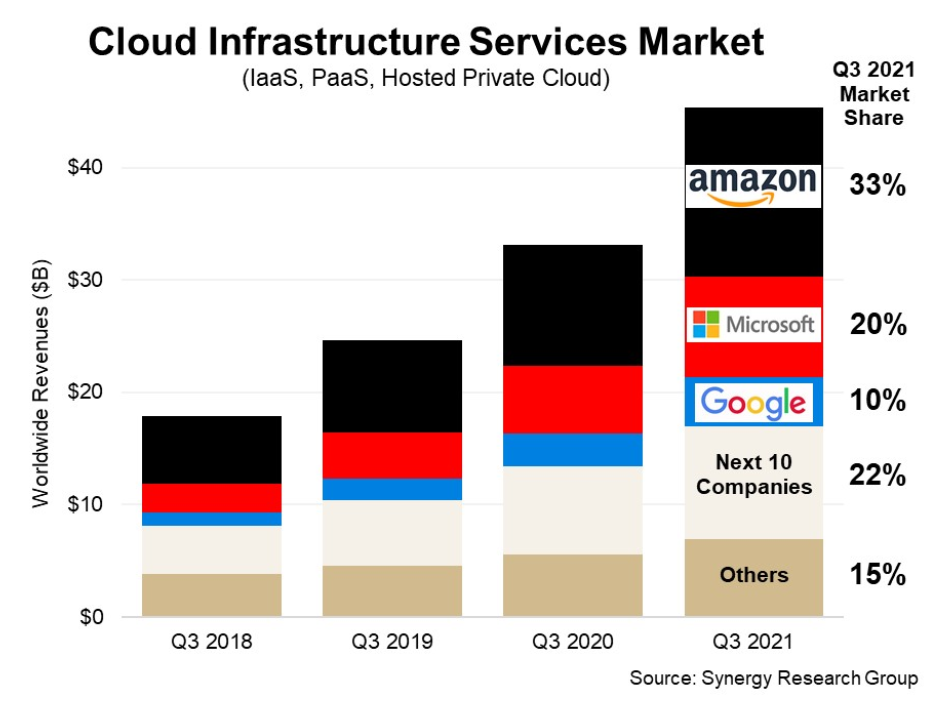

That could be seen in the overall numbers for enterprise spending on cloud infrastructure services in the third quarter, which passed the $45 billion mark and grew 37 percent from the same period in 2020, according to Synergy Research Group. AWS kept hold of 33 percent of the market, followed by Microsoft Azure with 20 percent and Google Cloud at 10 percent. Even as the top three continued to bring in more market share, there was still growth to be had in the space, with the next 10 largest cloud providers seeing 28 percent revenue growth and revenue for small to midsize providers jumping 25 percent.

It was against this backdrop that Selipsky this week took the stage at the AWS re:Invent conference for the first time as CEO. Much of what he touched upon was a continuation of what the cloud giant had been doing under Jassy, such as extending its reach into enterprise datacenters to essentially play on both sides of the hybrid cloud fence and to begin stretching its technologies and services into the fast-growing edge space.

However, he also began to put his own mark on the company and its future. During interviews leading up to the event, Selipsky talked about steps AWS needed to take to meet enterprise demand, including offering higher-level services tailored to specific industries. AWS already has started down this road – Selipsky pointed to AWS Cloud Contact Center for call centers, Amazon HealthLake for data in the healthcare field and AWS for Industrial, launched last year to improve operations and supply chains in that sector.

More of that is coming, he said during the keynote, in which guest speakers included executives with such major companies as 3M, Nasdaq, and United Airlines.

“Over the past couple of years, we’ve built abstractions or higher-level services that make it even easier and even more accessible for people to consume the cloud and interact with AWS across a wide range of industries, from health care, where we’ve launched targeted services like Amazon HealthLake, to financial services or manufacturing or automotive,” he said.

The heightened focus on services optimized for specific industries comes as Microsoft promotes Azure as a public cloud for major corporations – about 90 percent of Fortune 500 companies use Azure – and as Google Cloud continues the push started when Thomas Kurian took over as CEO in 2019 to bring more enterprises to the business.

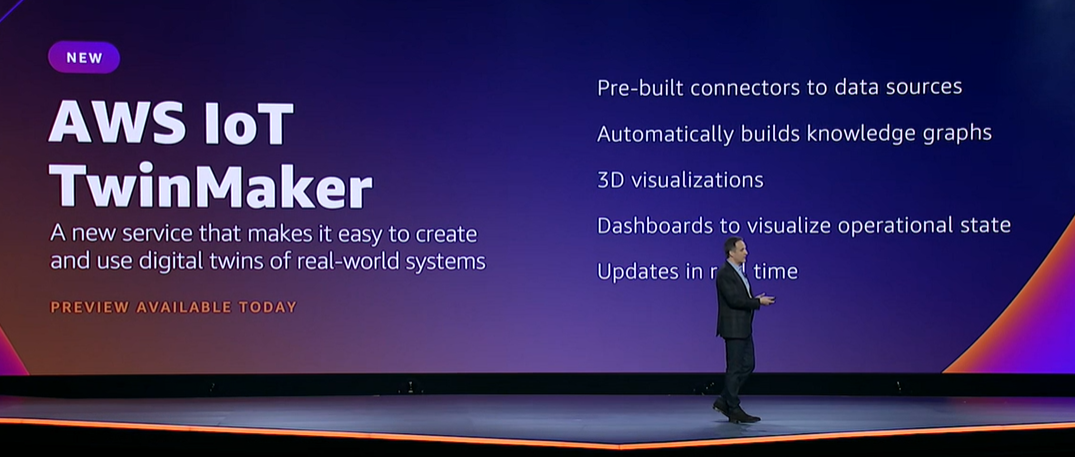

At re:Invent, Selipsky unveiled a partnership with Goldman Sachs to create Goldman Sachs Financial Cloud for Data to enable financial services organizations and banks to leverage data, software tools, and cloud services the two companies have developed together over the last several years. AWS IoT TwinMaker is aimed at the industrial sector to make it easier to create and manage digital twins, using virtual representations rather than physical models for such tasks as designing factories, equipment and production lines.

For the automotive industry, Selipsky introduced AWS Automotive, which pulls together a range of AWS products and services into a single place, and AWS FleetWise, a service that lets automakers more easily and quickly collect sensor and telemetry data from automobiles and other vehicles.

“What all of these capabilities have in common – that are purpose-built for specific industries or specific business functions or use cases – is that they put the power of AWS cloud in the hands of more users, and we’re going to continue to build more of these abstractions on top of our existing foundational services, collaborating with industry leaders on new offerings or building brand-new applications,” Selipsky said.

The CEO said AWS also will expand its presence in on-premises datacenters as the IT world continues to shift to hybrid clouds. Cloud providers and traditional IT infrastructure vendors alike are looking to establish themselves both on-premises and in the cloud. AWS is doing it through both hardware – its Outposts systems and Graviton processors – and its services, which can be housed and accessed via Outposts.

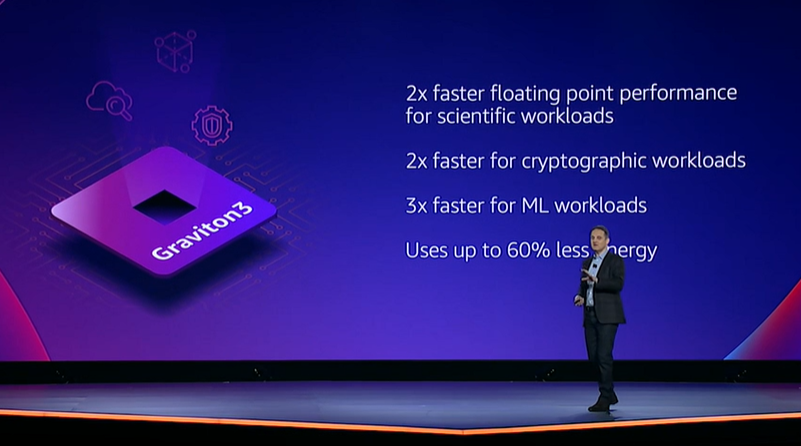

Selipsky also announced Graviton3, the company’s third-generation Arm-based processor, which he said is 25 percent faster running general compute workloads than its predecessor and is even better for some specialized workloads. Graviton3, available in preview, offers twice the floating-point performance for scientific workloads and twice the speed for cryptographic workloads and is three times faster running machine learning workloads. It also uses up to 60 percent less energy for the same performance.

Also unveiled were the new EC2 C7g cloud instances based on Graviton3, which chief evangelist Jeff Barr wrote in a blog post will be available in a range of sizes – including bare metal – and will be equipped with DDR5 memory, which draws less power and provides 50 percent more bandwidth than DDR4.

“These instances are going to be a great match for your compute-intensive workloads: HPC, batch processing, electronic design automation (EDA), media encoding, scientific modeling, ad serving, distributed analytics, and CPU-based machine learning inferencing,” Barr wrote.

AWS also is pushing forward with its custom chips for machine learning workloads. The company in 2019 introduced its first Inferentia processor for machine learning inference tasks and the first cloud instance built on the chip, the Inf1, which Selipsky said offers 70 percent lower cost-per-inference than comparable GPU-based EC2 instances.

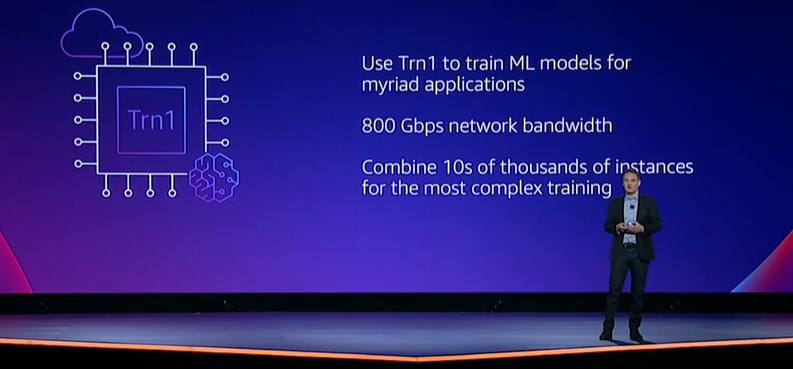

A year later, AWS introduced Trainium, a processor for training deep learning models. The CEO announced the Trn1 instance powered by Trainium and aimed at such workloads as natural language processing, object detection, image recognition, recommendation engines and intelligent search. Each instance can support up to 16 Trainium chips, up to 800 Gb/sec of EFA networking throughput – double the bandwidth available in GPU-based instances – and high-speed intra-instance connectivity.

The instances will be deployed in EC2 UltraClusters, which can scale to tens of thousands of Trainium chips interconnected with petabit-scale networking. The Trn1 UltraClusters are 2.5 times larger than previous EC2 UltraClusters, according to AWS.

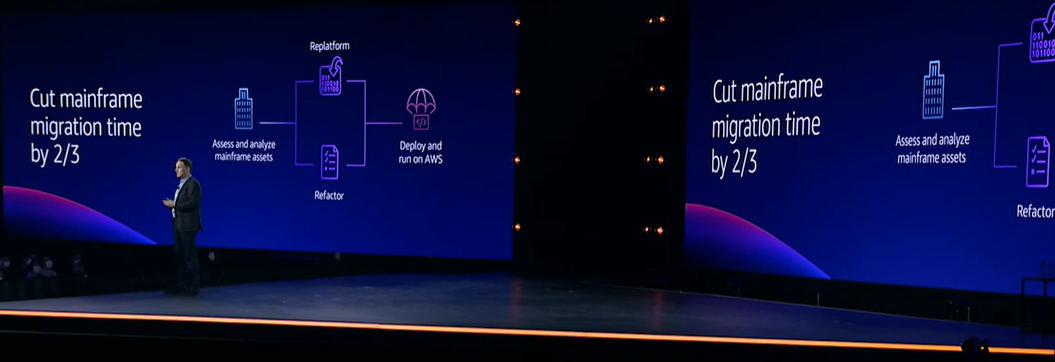

AWS also is reaching out to mainframe users with its new AWS Mainframe Modernization service, designed to enable enterprises to more quickly move workloads off the big iron and into the cloud. Selipsky said that organizations currently have two approaches for doing this: either lifting and shifting an application to migrate it essentially as is, or refactoring it and breaking it down into microservices in the cloud.

“Neither road is as easy as customers would like, and in fact, whichever way you go can take months, even years,” he said. “You have to evaluate the complexity of the application source code, understand the dependencies on other systems, convert or recompile the code and then you still have to test it to make sure it all works. While we have lots of partners who can help, even then, it’s a lot of time.”

With the service, the time needed to move mainframe workloads to the cloud can be cut by as much as two thirds. It includes development, test, and deployment tools and a mainframe-compatible runtime environment. If an enterprise wants to re-platform an application with minimal code changes, it can use the compilers in the service to convert the code without losing functionality before migrating. If an organization wants to re-compose the applications, the service can automatically convert the COBOL code to Java.

Reaching out to the edge, the company introduced AWS Private 5G, a fully managed service enterprises can use to deploy a private 5G network. With significant increases in speed and capacity, 5G is becoming a key enabler of edge deployments, and the new service is designed to let organizations set up and scale a private mobile network in days.

“You get all the goodness of mobile technology without the pain of long planning cycles, complex integrations and the high upfront costs,” the CEO said. “You tell us where you want to build your network and specify the network capacity, we ship you all the required hardware, the software and the SIM cards. Once they’re powered on, a private 5G network just simply auto-configures and sets up a mobile network that can span anything from your corporate office to a large campus, the factory floor or a warehouse. You just pop the SIM cards into your device and, voila, everything’s connected.”

For analytics, a key service for AWS and other cloud providers, the company is now offering serverless options for its Redshift, EMR and MSK services and an on-demand option for Kinesis.

“There are some people who want these benefits without having to touch any infrastructure at all,” Selipsky said. “They don’t want to tune and optimize clusters. They don’t need access to all the knobs and dials and servers. Others don’t want to have to deal with forecasting the infrastructure capacity that their applications need. We already eliminated the need for infrastructure and capacity management with Athena and Glue. We asked ourselves whether we could take infrastructure completely out of the equation for our other analytics services.”
