We don’t use the first person a lot here at The Next Platform, but I am going to make an exception. And I ask your forbearance because the point I want to make is interesting and relates directly to what I am thinking about Amazon Web Services as we are in the midst of a three-week core dump that is called the re:Invent conference.

Way back in the dawn of time, when I was a cub reporter with sharp teeth but not very big jawbones or strong jaw muscles, I managed somehow to get an interview with the world’s highest paid and first significant chief information officer. In fact, that term was coined back in the late 1980s to describe DuWayne Petersen, who was executive vice president of operations, systems, and telecommunications at Merrill Lynch, which at the time was a freestanding brokerage firm and the largest one at that with a very rich and complex IT infrastructure.

It was around this time that people were starting to call what had always been referred to as data processing by a new name: information technology. And this was a very big deal for me. So I put on my gray flannel suit and wing tips, grabbed my notebook and tape recorder, and headed down to the American Express building within the World Trade Center complex to meet the world’s first million-dollar CIO and to do my first big interview.

In this business, you always depend on the kindness of strangers and the insights that they are willing to share. That is, in fact, the currency that we trade, that they trust we will help the IT sector better understand itself and evolve in a positive direction. It’s an active role we were taught and have enthusiastically embraced. And my interview with Peterson was the first big example of such openness and kindness that I experienced, and it made me believe that possibly, just possibly, this career that I had chosen only a year at that time – to try to understand the IT realm and say something meaningful – was not going to be so terrible after all.

Peterson got his bachelors degree in industrial engineering from MIT about the time I was born it looks like, and then got an MBA from the University of California at Los Angeles. He eventually rose to be the vice president of MIS at RCA Corp in 1973, and in 1977 he moved to be the executive vice president of IT at Security Pacific Corp, which is now part of the Bank of America behemoth as Merrill Lynch is. During his tenure, the bank spun out its IT department into a separate service bureau called Security Pacific Automation Co, and Peterson was its chairman and CEO. In 1986, he got the CIO job at Merrill Lynch, about a year before the October 1987 stock market crash.

The day I met Peterson was memorable because it was August 2, 1990, and Iraq was invading Kuwait that morning. When I got to his office, we started talking about the giant IBM mainframe cluster Merrill Lynch operated, and the fiber optic network that the brokerage had laid between its datacenters on Staten Island and the New York Stock Exchange to reduce the latencies between Wall Street and its systems. We also talked about the prospect of using Unix systems, then coming into vogue from Sun Microsystems, Hewlett Packard, and others, and particularly so with IBM’s announcement of its Power-based RS/6000 line earlier in February of that year. Merrill Lynch was implementing a client/server architecture for its traders, based on IBM’s and Microsoft’s OS/2 platform on the client side, with lots of local processing there to make the load less heavy on the mainframe and to make the application less dependent on the network between users and those mainframes. (The whole idea of client/server in the enterprise had not yet really taken off, and Merrill Lynch was on the bleeding edge of that idea to try to cut its IT infrastructure costs.) Peterson was also big on datacenter consolidation, leveraging big systems and fast networks to replace many scattered systems that were running at lower utilization.

To show me how this client/server thing worked, Peterson asked me to come around to his side of the desk. He showed me his own portfolio as well as all of the Wall Street tickers and such operating across those screens, plus all the system and network monitoring he could do, and I remember him losing many more times money than I made in a year in the course of our interview, and Peterson never broke a sweat for either the systems handling the stock selloff that day or his own portfolio.

What I remember most was Peterson telling me that as a matter of research and development, Merrill Lynch had one of everything, and sometimes more, because it was only by truly putting your hands on something and trying it out could you really bank on it. Such diversity was a kind of insurance against difficulties in the future, and it gave Merrill Lynch a kind of vertical as well as horizontal mobility within its stack. This approach was not free, Peterson explained, but the incremental cost was offset by the leverage Merrill Lynch could get from understanding competing technologies and being able to deploy them. This included, by the way, the outsourcing of technologies as well as keeping some on premises, and Peterson famously outsourced Merrill Lynch’s telecom network to MCI (the rival to AT&T in the long distance market in the 1980s who compelled the Federal government to break up Ma Bell in 1984) to save money and improve that network.

In a sense, what Peterson did at Merrill Lynch was build an IT infrastructure with hybrid vigor, both on premises and outsourced – although because the public cloud utility was not yet in existence because the public Internet had not yet been developed, all he could do was offload processing from mainframes to rings of servers and then desktops. The point was, the infrastructure was always evolving, taking advantage of lower cost approaches. And the other point today is, these are not new ideas, but old ones, as we see companies like AWS revisit them.

This is key, and an old lesson that reverberates as we contemplate the announcements by Andy Jassy, chief executive officer at Amazon Web Services, during the first week of the re:Invent conference.

Jassy, more than anyone else, has espoused the idea that “in the fullness of time,” as he often puts it, all workloads would move to the public cloud. But things are not that simple, and we are nearly a decade and a half into the revolution that AWS caused by creating a credible cloud computing and storage service – EC2 and S3 – and then expanding it to do more and more things with a steady drumbeat of technological innovation that has been bewildering to watch and mirrors the kind of innovation that Merrill Lynch and companies like it had to do for themselves, and largely by themselves, in decades gone by.

What is different about AWS is that there is now a big company that was initially trying to keep itself on the leading edge of technology for its own parent company – Amazon, the online retailer – and it thought it might sell some slices of itself to cover its costs. We all could benefit from that innovation that Amazon itself needed. But now the virtuous feedback loop is so much larger. AWS is now at the point where the collective needs of its millions of customers are driving the engineering requirements of AWS itself a lot more than that parent company, and all AWS customers (including Amazon) benefit directly from that; those on other big public clouds benefit indirectly because of the competitive pressure and response of those other big public clouds – mostly Google and Microsoft in the United States and Alibaba, Baidu, and Tencent in China, but there are others.

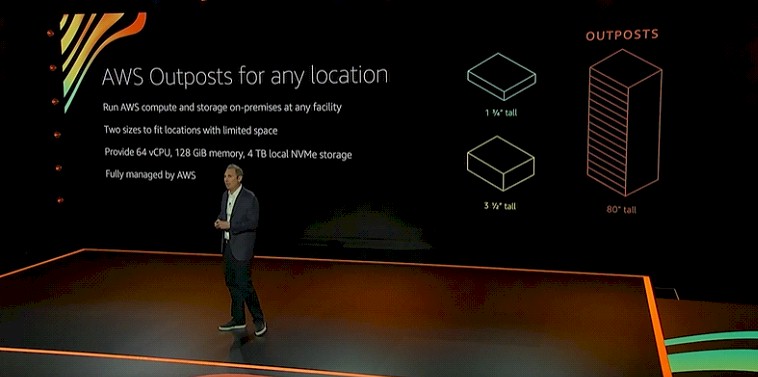

The hybrid vigor that AWS has is multidimensional, and AWS is adding more dimensions as the years ago by. This includes AWS backtracking on its original position that its customers should be on the public cloud and releasing its Outposts variant of its infrastructure that companies can install on premises and run like AWS infrastructure up in the cloud. Now, AWS is rolling out 1U and 2U Outpost server instances that can be deployed in edge cases like remote offices and facilities – something we are certain that Jassy would never think was necessary only a few years ago.

The Elastic Kubernetes Service is being rolled up as a distro that anyone can deploy and the Elastic Container Service is also being extended down from the AWS cloud into on premises datacenters to manage Kubernetes.

Amazon is similarly taking a hybrid approach with compute.

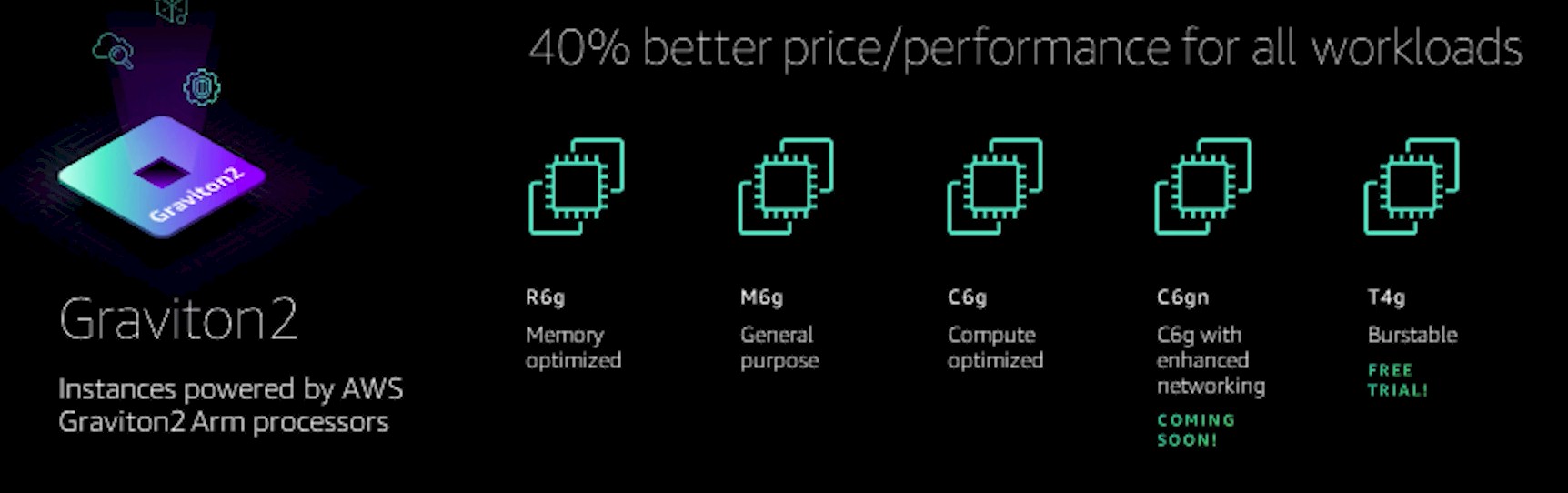

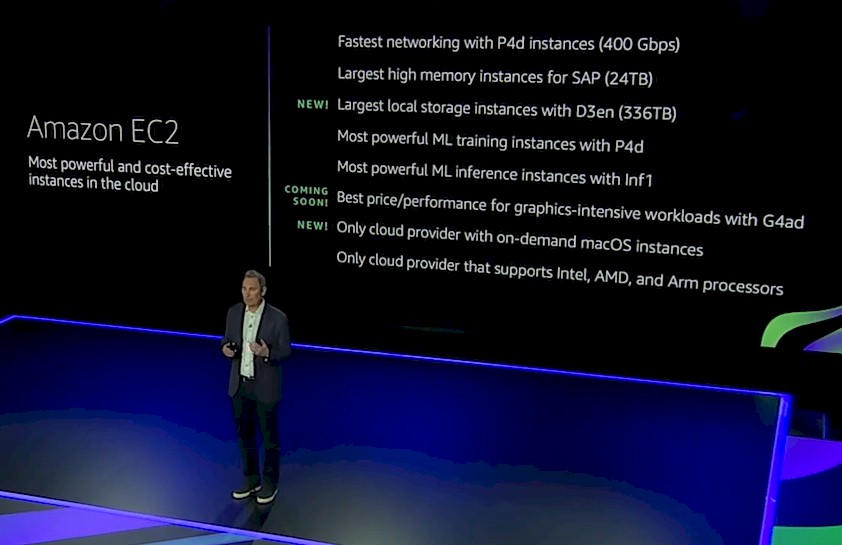

For CPU compute, AWS has instances based on the latest Intel Xeon SP and AMD Epyc processors as well as its own Graviton2 Arm processors, which are the foundation of its “Nitro” DPUs but which can offer 40 percent better bang for the buck compared to its X86 instances, according to Jassy.

“That is a big deal – think about what you can do for your business if you have 40 percent better price/performance on your compute,” said Jassy, launching a new Graviton2 instance that has 100 Gb/sec networking into and out of the device. The Graviton2 was launched a year ago, and we think it has 64 cores with no threading on the cores, and Jassy says to expect more on this front.

For specialized machine learning compute, AWS has its Inferentia home-grown matrix engine, announced this time last year, and Amazon itself uses Inferentia to spit out million of inferences per hour for its Alexa service, according to Jassy. And the move to this homegrown Inferentia chip, instead of using CPU or GPU or even FPGA instances, to run the inference has lowered the cost of inference by 30 percent and reduced the latency of inference by 25 percent. (Interestingly, only 80 percent of the inference workloads for the Alexa application have been moved to Inferentia.) And here is the important bit that Jassy divulged: “It turns out that 90 percent of your costs is not in the training, but in the inference.” He also said that nobody was trying to help customers save money there, which is a ridiculous thing to say considering how many startups there are and how much low-precision math has been added to CPUs, GPUs, and FPGAs in the past several years.

For machine learning training, AWS has been investing as well, and at re:Invent this year, it spoke a little bit about a training chip, presumably a big, bad version of Inferentia, that it has created called Trainium. Which could be the second-best product name of 2020 – the TeraPHY silicon photonics chip from Ayar Labs is winning that right now, we think. (It does sound a bit too much like Intel’s ill-fated Itanium, though. . . . What could go wrong with that?)

Anyway, back to machine learning training. In the first half of next year, just to hedge its bets even further, AWS will offer instances on its EC2 cloud equipped with the Gaudi neural network accelerators from Intel – these are from Habana Labs, not the Nervana Systems acquisition in 2016, which was mothballed in the wake of the Habana deal this time last year. Again, Jassy said that these Habana instances will offer about 40 percent better machine learning training price/performance than its current EC2 P4 and P4d GPU instances, which employ Nvidia’s “Ampere” A100 GPUs, and will support the PyTorch (Facebook) and TensorFlow (Google) machine learning frameworks.

But, AWS wants to experiment, and perhaps strike a little fear into the hearts of those making custom machine learning silicon or GPUs for accelerating machine learning training, which are by design created to be more general purpose than chips like Intel’s Gaudi.

“Like with generalized compute, we know that if we want to keep pushing that price/performance envelope on machine learning training, we are going to have to invest in our own chips as well,” explained Jassy. “It is a custom chip from AWS to offer the most cost/effective training in the cloud. Trainium will be even more cost effective than the Habana chip I mentioned and it will support all of the major frameworks – TensorFlow, PyTorch, and MXNet – and it will use the same Neuron SDK as our Inferentia customers use.” MXNet is the Microsoft framework, and if you have that and the other two, you have covered a lot of ground. Other frameworks will no doubt follow.

The Trainium chip will have the “most teraflops of any ML instance in the cloud,” according to AWS and will be available in the second half of 2021 as an EC2 instance for raw compute or as part of the SageMaker machine learning software service.

That AWS needs to invest in its own chip development may be literally true given the state of development in machine learning training chips today and the prices that these companies want to charge for their devices and the risks of depending on them to make the deliveries they promise at the time they promise them on their roadmaps. But it is not necessarily true that AWS has to do this. And in fact, one could argue – as Google has in the past with us for all manner of compute – that it should not be true if the semiconductor industry is working properly. But companies in the chip racket have experienced an unusually high profit margin in the past decade or so – and Intel has benefited most from this as workloads moved off other architectures to its Xeons or started there from scratch, and Nvidia has benefitted from GPU compute in much the same way. But those profits – mark our words – on a per unit basis are going away, and if chip makers can’t make it up in volume, they are going to go away in an absolute sense year-on-year, too.

And AWS, as one of the largest consumers of compute in the world, is going to deal with its risk by creating CPUs and accelerators, and maybe even GPUs at some point of it comes to that, and it will buy up parts by the palettes from Intel, AMD, and Nvidia to give cloud customers those choices, too. Companies that want AMD CPUs and GPUs, or Intel CPUs and GPUs, or even Nvidia CPUs and GPUs, will be able to start with them in their own datacenters and then move workloads over to AWS. But then there will be the AWS homegrown alternatives, which will push the price/performance envelope not because AWS is any smarter than Intel, AMD, or Nvidia when it comes to chip design, but because it doesn’t have to command all of those margins that Wall Street expects of them and still does not expect from Amazon, the parent company. AWS will be able to make it up in volume, and also use its leverage as a big chip buyer to get very highly discounted devices from the companies it is competing with. And conversely, the AWS chip teams will be under pressure to deliver something that is always cheaper than what AWS can do with some other company’s device.

The point is, by supporting all of the relatively high volume compute types and brands, in the cloud or on premises, using the same compute abstraction framework and tools, with millions of customers driving requirements, AWS will have a kind of hybrid vigor like the IT world has never seen.

Good luck competing against that, everyone.

Great article!

I think you meant “kind” here:

“… mirrors the kid of innovation…”

Yes, thank you. Sometimes the dyslexia is running high.

Even better: “buy up parts by the palettes”

From hitchhiker’s guide – “so, if people want fire that can be fitted nasally, what color should it be, smarty pants?”.

Humor is always good.