The cloud and the related edge are already rapidly influencing almost every aspect of IT, from the technology that is being adapted and created to how that technology is consumed. This is illustrated by the growing number of established hardware vendors – including Hewlett Packard Enterprise, Dell, and Cisco Systems – that now offer more of their portfolios as services. Software companies like VMware, which became major players over the years for what they could do in the datacenter, are now remaking themselves for the cloud. And cloud service providers like Amazon Web Services, Microsoft Azure and Google Cloud are becoming the largest and, thus, most influential consumers of datacenter hardware.

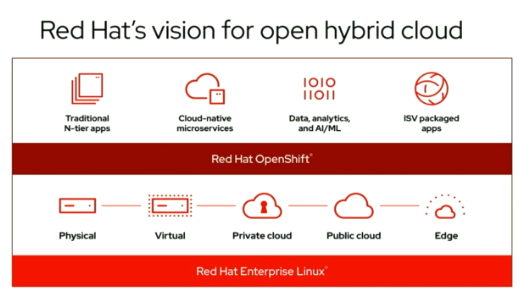

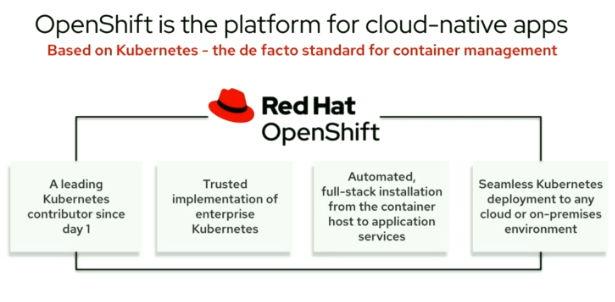

This push to the rapidly expanding hybrid cloud and now the edge has not escaped Red Hat or its owner, IBM. IBM spent $34 billion two years ago to buy Red Hat and make it and its technologies – particularly the OpenShift container and Kubernetes platform – the central piece of its strategy to make cloud and artificial intelligence (AI) the cornerstones of its future.

And Red Hat, which over the past two-plus decades had become the preeminent open source technology provider in the datacenter, is now sharply focused on hybrid cloud and the edge. The news coming out of this week's virtual Red Hat Summit reflected that evolution. The company unveiled OpenShift Platform Plus, which bundles the features developed for the three editions of OpenShift with added security (Advanced Cluster Security for Kubernetes, built on technology from its acquisition of StackRox in February), management (Cluster Management for Kubernetes) and a container registry (Red Hat Quay).

The company also expanded Red Hat Insights, its predictive analytics tool, across hybrid clouds with Insights for OpenShift and Ansible Automation Platform and with greater capabilities for Red Hat Enterprise Linux, all designed to give enterprises more visibility across their multiple clouds. Red Hat is also offering OpenShift API Management, OpenShift Streams for Apache Kafka and OpenShift Data Science as fully managed cloud services, and it launched RHEL 8.4 as a foundational element of its Red Hat Edge initiative, which is aimed at ensuring the company's technology can reach into this fast-growing part of the IT space.

Red Hat studies indicate that 72 percent of IT leaders surveyed said open source will help drive the adoption of edge computing – which the company sees as an extension of the hybrid cloud environment – over the next two years, and that by 2025 the Internet of Things (IoT) and edge-related devices will generate as much as 90 zettabytes of data. OpenShift can already support three-node clusters for space- or resource-constrained locations, and the company is adding more edge-friendly capabilities in RHEL 8.4.

The cloud's effect on IT will continue well into the future. During a session at Red Hat Summit, Chris Wright, the company's senior vice president and CTO, said there is an ongoing shift from delivering software as packages to delivering it as services. As a result, Red Hat is embracing the concept of "Operate First," an extension of the vendor's "Upstream First" philosophy of doing its work in the open, even for Red Hat products.

"Historically, our model for delivering packaged software has focused on the Upstream First model," Wright said. "We get our code and features into the upstream open source communities, where they are maintained by those communities – of which we're a part – and they float down into our products from there. This is very important for sustainability and the quality of those contributions. The process is democratized and benefits from the meritocracy of ideas and code."

However, packaged software is usually operated by a particular company, and that operational expertise doesn't flow back to the open source communities that helped develop the technology. With Operate First, the idea is to run open source projects in a production cloud so that the cloud's operators can provide feedback on the operational considerations of the effort. That shifts the focus from making software available to operating it as a service, which matters all the more as software becomes such a large part of every business.

"We'd love to bring the community power that we see in creating the open-source software to the operationalization of that same software and have it all happening within the same open-source project that can include things around automation for how you run the software," Wright said. "But it could also be architectural. For example, how do you produce software that's easy to operate? How should we be designing differently for scale, telemetry and visibility? Another dimension is, how do you validate, as you're making changes in the software, that you're not introducing complexity for those operating the software? There's a gap between those with operational experience and the open-source community. There's a great opportunity for us to fill that gap with community efforts around operationalizing software projects that we all depend on."

During the Red Hat Summit, the company announced it was donating $551.9 million in software subscriptions to Boston University to fuel several open source projects that form an open research cloud initiative that includes the Massachusetts Open Cloud (MOC). It also said it will spend another $20 million to continue the research work being done at the Red Hat Collaboratory at BU. The aim of both efforts is to help drive innovation in the hybrid cloud and operations at scale through open research.

Wright pointed to Red Hat's own work as an example of how the Operate First model works. The company had been working to drive data processing in OpenShift, an effort that led to the open-source Open Data Hub project. Red Hat needed to decide which projects to pull together to enable data science workflows on OpenShift and how to automate the deployment and management of a service that could be included in the Open Data Hub. The company focused on building Kubernetes operators with the Operator SDK, and it chose the MOC to host a publicly available deployment of the service.

“Bringing together the teams that were responsible for running the services, the projects themselves, the automation around running the services and the infrastructure to support those services in the MOC is an example of bringing all the key ingredients together to create an open and Operate First experience for us,” he said. “It’s been quite informative and it’s helped us improve the software that we’re delivering in Open Data Hub.”

As the cloud evolves, the edge will take on an increasingly large role. As more compute, storage and software capabilities are pushed closer to the users of devices and applications and to where data is being generated, there will be more "laws-of-physics challenges," Wright said. Those include everything from latency to the amount and types of data being produced. The edge is an evolution of the cloud, just with compute in a more distributed form.

"The cloud itself has changed our expectations around how we consume applications," Wright said. "We now have this expectation that we consume applications as services, and that doesn't just disappear because the application happens to run at the edge. It's a matter of being able to deliver on that expectation by extending our existing capabilities out to edge deployments. Edge is going to add complexity, whether it's due to running applications on small footprints or trying to manage many, many smaller deployments, and the best way to manage complexity is with automation, software and tooling. Humans don't scale as well as computers scale, so Kubernetes and services built using Kubernetes operators are more relevant now than ever."

That's going to force changes in the technology being used. For high-end telco 5G, manufacturing or machine learning use cases, enterprises will need systems with Xeon-class CPUs with 24 or more cores, 128GB of RAM and 40 Gigabit Ethernet. However, low-end use cases like IoT gateways, smart displays or robotics will use Intel Atom- or Arm Cortex-class CPUs with one to four cores, 1GB to 16GB of RAM and a 1GbE connection. "That's quite a diversity of hardware capabilities and processor architectures that you need to support to cover all these use cases," Wright said.

Red Hat is working to ensure that products like RHEL can address these varied hardware environments, he said. The company has an emerging technology project called MicroShift that is exploring how OpenShift, which is built on RHEL, can be used in deployments optimized for small footprints with limited resources, and what capabilities those deployments will need. Red Hat is experimenting with highly integrated single-board systems like Nvidia's Jetson TX2 and Xilinx's Zynq UltraScale modules.

The deployment topology in these environments also is different.

“Often you have only one host available to deploy on and that host can have intermittent network connectivity with latencies of 200 to 500 milliseconds and bandwidth of maybe 200 to 400 megabits per user,” the CTO said. “Not only that, but the bandwidth is expensive because it’s coming from a satellite. This really causes you to think hard about what capabilities you need from your operating system to support deployment and updates to both the OS and the application in those kinds of environments. Once you figure that out, you have lots and lots of these deployments and you need to figure out how you’re going to consistently manage all those. Physical access security is also a concern. A lot of devices are in places where they can be more easily exploited than servers in a traditional datacenter.”

Tech vendors are under pressure to make the right decisions in response to the emerging challenges presented by the cloud and the edge. Both will play a central role in IT in the future, and a wrong move can cause a company to lose ground in what promises to be a highly competitive area.
