No matter what kind of traditional HPC simulation and modeling system you have, no matter what kind of fancy new machine learning AI system you have, IBM has an appliance that it wants to sell you to help make these systems work better – and work better together if you are mixing HPC and AI.

It is called the Bayesian Optimization Accelerator, and it is a homegrown statistical analytics stack that runs on one or more of Big Blue’s “Witherspoon” Power AC922 hybrid CPU-GPU supercomputer nodes – the ones that are used in the “Summit” supercomputer at Oak Ridge National Laboratory and the “Sierra” supercomputer at Lawrence Livermore National Laboratory.

IBM has been touting the ideas behind the BOA system for more than two years now, and it is finally being commercialized after some initial testing in specific domains that illustrate principles that can be modified and applied to all kinds of simulation and modeling workloads. Dave Turek, now retired from IBM but for many years the executive steering the company’s HPC efforts, walked us through the theory behind the BOA software stack, which presumably came out of IBM Research, way back at SC18 two years ago. As far as we can tell, this is still the best English-language description of what BOA does and how it does it. Turek gave us an update on BOA at our HPC Day event ahead of SC19 last year, focusing specifically on how Bayesian statistical principles can be applied to ensembles of simulations in classical HPC applications to do better work and get to results faster.

In the HPC world, we tend to throw more hardware at the problem and then figure out how to scale up frameworks to share memory and scale out applications across the more capacious hardware, but BOA is different. Its ideas can be applied to any HPC system, regardless of vendor or architecture. This is transformational for IBM not only because BOA feels more like a service encapsulated in an appliance, one that could generate an annuity-like revenue stream across many thousands of potential HPC installations. It is also important because the next-generation exascale machines at the US national labs where IBM won the big deals for Summit and Sierra are not based on the combination of IBM Power processors, Nvidia GPU accelerators, and Mellanox InfiniBand interconnects. The follow-on “Frontier” and “El Capitan” systems at these labs instead use AMD CPU and GPU compute engines, with Infinity Fabric for in-node connectivity and Cray’s Slingshot Ethernet interconnect (Cray is now part of Hewlett Packard Enterprise) for lashing nodes together. Even these machines might benefit from BOA, which gives Big Blue some play across the HPC spectrum, much as its Spectrum Scale (formerly GPFS) parallel file system is often used in systems where IBM is not the primary contractor. BOA is even more “open” in this sense, although the software stack underneath the BOA appliance is no more open source than GPFS is. That is very unlikely to change, even with IBM having acquired Red Hat last year and becoming the largest vendor of support contracts for tested and integrated open source software stacks in the world.

So what is this thing that IBM is selling? As the name suggests, it is based on Bayesian optimization, a technique for optimizing black-box functions that Jonas Mockus pioneered in the 1970s and that has since been applied in all kinds of algorithms, including various kinds of reinforcement learning systems in the artificial intelligence field. It is important to note that Bayesian optimization does not itself involve machine learning based on neural networks; what IBM is doing is using Bayesian optimization and machine learning together to drive ensembles of HPC simulations and models. This is the clever bit.

With Bayesian optimization, you know there is a function out in the world, but it sits in a black box (mathematically speaking, not literally). You can feed it a set of inputs and see how it behaves through its outputs. The optimization part is to build a database of inputs and outputs, statistically infer something about what is going on between the two, and then make a mathematical guess about what a better set of inputs might be to get a desired output. The trick is to train a statistical model on that database of inputs and outputs, and then use that model to infer what the next set of inputs should be. In the case of HPC simulations, this means you can figure out what should be simulated instead of trying to simulate all possible scenarios, or at least a very large number of them. BOA doesn’t change the simulation code one bit, and that is important. It is given a sense of the desired goal of the simulation (that’s the tricky part, which requires the domain expertise that IBM Research can supply), watches the inputs and outputs of simulations, and offers suggested inputs.
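To make that loop concrete, here is a minimal, generic Bayesian optimization sketch. It is not BOA’s code, which IBM has not published; it assumes a one-dimensional black box, a Gaussian process surrogate with a squared-exponential kernel, and the expected-improvement acquisition function, which are common textbook choices. The names `bayes_opt`, `gp_posterior`, and `expected_improvement` are hypothetical, and the toy function stands in for an expensive simulation.

```python
import math
import numpy as np

def rbf_kernel(a, b, lengthscale=1.0):
    """Squared-exponential kernel between two 1-D arrays of inputs."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / lengthscale) ** 2)

def gp_posterior(x_train, y_train, x_query, noise=1e-6):
    """Exact GP posterior mean and std at x_query, after standardizing y."""
    y_mu = y_train.mean()
    y_sd = y_train.std() or 1.0
    y = (y_train - y_mu) / y_sd
    k = rbf_kernel(x_train, x_train) + noise * np.eye(len(x_train))
    k_s = rbf_kernel(x_train, x_query)
    mean = k_s.T @ np.linalg.solve(k, y)
    var = 1.0 - np.sum(k_s * np.linalg.solve(k, k_s), axis=0)  # prior var is 1
    std = np.sqrt(np.maximum(var, 1e-12))
    return mean * y_sd + y_mu, std * y_sd

_erf = np.vectorize(math.erf)

def expected_improvement(mean, std, best, xi=0.01):
    """Expected amount by which each candidate beats the best output so far."""
    z = (mean - best - xi) / std
    cdf = 0.5 * (1.0 + _erf(z / math.sqrt(2.0)))
    pdf = np.exp(-0.5 * z ** 2) / math.sqrt(2.0 * math.pi)
    return (mean - best - xi) * cdf + std * pdf

def bayes_opt(black_box, lo, hi, n_init=3, n_iter=12, seed=0):
    """Maximize black_box on [lo, hi] using only n_init + n_iter evaluations."""
    rng = np.random.default_rng(seed)
    xs = list(rng.uniform(lo, hi, n_init))   # a few random starting inputs
    ys = [black_box(x) for x in xs]          # database of inputs and outputs
    grid = np.linspace(lo, hi, 501)          # candidate inputs to score
    for _ in range(n_iter):
        mean, std = gp_posterior(np.array(xs), np.array(ys), grid)
        ei = expected_improvement(mean, std, max(ys))
        x_next = float(grid[np.argmax(ei)])  # the suggested next input
        xs.append(x_next)
        ys.append(black_box(x_next))         # run one more "simulation"
    i = int(np.argmax(ys))
    return xs[i], ys[i], len(ys)

# Toy black box with its maximum at x = 2; a real run would call a simulator.
x_best, y_best, n_runs = bayes_opt(lambda x: -(x - 2.0) ** 2, 0.0, 5.0)
```

The point of the design is that the surrogate is cheap to query thousands of times, so all of the "where should we look next?" reasoning happens on the model, and the expensive black box is called only 15 times here.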

The net effect of BOA is that, over time, you need less computing to run an HPC ensemble, and you can also converge to the answer in less time. Or, more of that computing can be dedicated to driving larger or more fine-grained simulations because the number of runs in an ensemble is a lot lower. We all know that time is fluid money and that hardware is frozen money, depreciated one little trickle at a time through use; add them together and there is a lot of money that can potentially be saved.

Matt Drahzal, offering manager for HPC cloud for Power Systems at IBM, walked us through how BOA is being commercialized and some of the data from the early use cases where BOA was deployed.

One of the early use cases was at the Texas Advanced Computing Center at the University of Texas at Austin, where Mary Wheeler, a world-renowned expert in numerical methods for partial differential equations as they apply to oil and gas reservoir models, used the BOA appliance in some simulations. To be specific, Wheeler’s reservoir model is called the Integrated Parallel Accurate Reservoir Simulator, or IPARS, and it has a gradient descent/ascent method built into it. Using their standard technique for maximizing the oil extraction from a reservoir with the model, it would take on the order of 200 evaluations of the model to get what Drahzal characterized as a good result. But by injecting BOA into the flow of simulations, they could get the same result with only 73 evaluations. That is a 63.5 percent reduction in the number of evaluations performed.

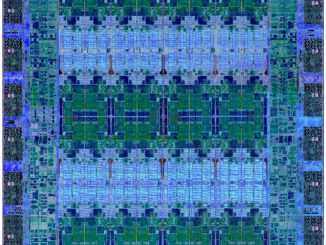

IBM’s own Power10 design team also used BOA in its electronic design automation (EDA) workflow, specifically to check the signal integrity of the design. Doing so with the raw EDA software took over 5,600 simulations, and IBM did all of that work as it normally would. But then IBM added BOA to the stack, redid all of the work, and got to the same level of accuracy in analyzing the signal integrity of the Power10 chip’s traces with only 140 simulations. That is a 97.5 percent reduction in the computing needed, or a 40X speedup if you want to look at it that way. (Drahzal warns that not all simulations will see this kind of huge bump.)
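The percentages and the speedup quoted for the two use cases come from simple arithmetic on run counts, which this small sketch reproduces. The helper name `reduction_stats` is hypothetical, and because the article says “over 5,600” simulations for Power10, 5,600 is treated as a lower bound, so the real reduction may be slightly larger.

```python
def reduction_stats(baseline_runs, boa_runs):
    """Percent fewer runs and the equivalent speedup factor."""
    reduction_pct = 100.0 * (baseline_runs - boa_runs) / baseline_runs
    speedup = baseline_runs / boa_runs
    return reduction_pct, speedup

cases = [
    ("IPARS reservoir model", 200, 73),
    ("Power10 signal integrity", 5600, 140),  # 5,600 is a lower bound
]
for name, before, after in cases:
    pct, factor = reduction_stats(before, after)
    print(f"{name}: {pct:.1f}% fewer runs, {factor:.1f}X speedup")
```

The two views are the same number seen differently: a 97.5 percent cut leaves 2.5 percent of the work, and 1 / 0.025 is the 40X factor, while IPARS’s 63.5 percent cut works out to roughly a 2.7X speedup.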

In a third use case, a petroleum company that creates industrial lubricants, which Drahzal could not name, was creating a lubricant that had three components. There are myriad different proportions in which to mix them to get a desired viscosity and slipperiness, and the important factor is that one of these components was very expensive and the other two were not. Maximizing the performance of the lubricant while minimizing the amount of the expensive component was the task in this case, and the company ran the simulations first without and then with the BOA appliance plugged in. Here’s the fun bit: BOA found a totally unusual configuration that the company’s scientists would never have thought of, and it found the right mix with four orders of magnitude more certainty than prior ensemble simulations while running one-third as many simulations to get to the result.
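One common way to hand a two-sided goal like this to an optimizer is to fold it into a single number over mixtures whose proportions sum to one. The sketch below shows that framing only; it is not how IBM or the unnamed company set up the problem. `simulate_performance` is a made-up stand-in for the real lubricant simulation, and the component ordering, target proportions, and `cost_weight` penalty are all hypothetical.

```python
import numpy as np

def simulate_performance(mix):
    """HYPOTHETICAL stand-in for the lubricant simulation: higher is better."""
    a, b, c = mix  # a = expensive component; b and c = cheap ones (assumed)
    return 1.0 - (a - 0.3) ** 2 - (b - 0.5) ** 2 - 0.5 * (c - 0.2) ** 2

def scalarized_objective(mix, cost_weight=0.5):
    """Trade simulated performance against the share of the expensive part."""
    return simulate_performance(mix) - cost_weight * mix[0]

def sample_mixtures(n, seed=0):
    """Draw n mixtures uniformly from the simplex (proportions sum to 1)."""
    rng = np.random.default_rng(seed)
    return rng.dirichlet(np.ones(3), size=n)

# Brute-force scoring of random mixtures, for illustration only.
candidates = sample_mixtures(5000)
scores = np.array([scalarized_objective(m) for m in candidates])
best_mix = candidates[np.argmax(scores)]
```

Scoring thousands of random mixtures is exactly what becomes unaffordable when each score is a real simulation; a Bayesian optimizer would instead pick a few mixtures at a time to simulate, guided by a model of this objective.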

These are dramatic speedups, and demonstrate the principle that changing algorithms and methods is as important as changing hardware to run older algorithms and methods.

IBM is being a bit secretive about what is in the BOA software stack, but it is using PyTorch and TensorFlow as machine learning frameworks in different stages and GP Pro for sparse Gaussian process analysis, all of which have been tuned to run across the IBM Power9 processors and Nvidia V100 GPU accelerators in a hybrid (and memory coherent) fashion. The BOA stack could, in theory, run on any system with any CPU and any GPU, but it is really tuned for the Power AC922 hardware.
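IBM has not described GP Pro, so the following is not its API. As a generic illustration of what “sparse Gaussian process” usually means, this sketch implements the textbook subset-of-regressors (SoR) approximation, in which m inducing inputs summarize n training points so the model solves an m-by-m system instead of an n-by-n one. The function names, the kernel, and the noise setting are assumptions chosen for the example.

```python
import numpy as np

def rbf(a, b, lengthscale=1.0):
    """Squared-exponential kernel between two 1-D arrays of inputs."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / lengthscale) ** 2)

def sor_predict_mean(x, y, z, x_star, noise_var=0.01, jitter=1e-10):
    """Subset-of-regressors sparse GP predictive mean.

    x, y   : n training inputs and outputs
    z      : m inducing inputs (m much smaller than n) summarizing the data
    x_star : query inputs
    Cost is O(n m^2) rather than the O(n^3) of an exact GP.
    """
    k_uu = rbf(z, z) + jitter * np.eye(len(z))
    k_uf = rbf(z, x)                       # m x n
    k_su = rbf(x_star, z)                  # queries x m
    a = k_uf @ k_uf.T + noise_var * k_uu   # m x m system instead of n x n
    return k_su @ np.linalg.solve(a, k_uf @ y)

def exact_predict_mean(x, y, x_star, noise_var=0.01):
    """Exact GP predictive mean, for comparison."""
    k = rbf(x, x) + noise_var * np.eye(len(x))
    return rbf(x_star, x) @ np.linalg.solve(k, y)

# 2,000 noisy observations summarized by 30 inducing points.
rng = np.random.default_rng(0)
x_big = np.linspace(0.0, 10.0, 2000)
y_big = np.sin(x_big) + 0.1 * rng.standard_normal(x_big.size)
z = np.linspace(0.0, 10.0, 30)
pred = sor_predict_mean(x_big, y_big, z, np.linspace(0.0, 10.0, 50))
```

When the inducing points are the training points themselves, the SoR mean reduces algebraically to the exact GP mean, which is a handy sanity check; the savings come from choosing far fewer inducing points than data points.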

At the moment, IBM is selling two different configurations of the BOA appliance. One has two V100 GPU accelerators, each with 16 GB of HBM2 memory, and two Power9 processors with a total of 40 cores running at a base speed of 2 GHz and a turbo boost speed of 2.87 GHz, with 256 GB of their own DDR4 memory. The second BOA hardware configuration has a pair of Power9 chips with a total of 44 cores running at a base speed of 1.9 GHz and a turbo boost speed of 3.1 GHz, with their own 1 TB of memory, plus four V100 GPU accelerators with 16 GB of HBM2 memory each.

IBM is not providing pricing for these two machines, or for the BOA stack on top of them, but Drahzal says that it is sold under an annual subscription that runs to “hundreds of thousands of dollars per server per year.” That may sound like a lot, but considering the cost of an HPC cluster, which runs from millions of dollars to hundreds of millions of dollars, this is a small percentage of the overall cost, and it can help boost the effective performance of the machine by an order of magnitude or more.

The BOA appliance became available on November 27. Initial target customers are in molecular modeling, aerospace and auto manufacturing, drug discovery, and oil and gas reservoir modeling and a bit of seismic processing, too.
