Over the next year, we should get a good sense of how the Cerebras CS-1 system performs for dual HPC and AI workloads between installations at Argonne and Lawrence Livermore labs, EPCC, and the Pittsburgh Supercomputing Center (PSC).

While HPC/AI blended workloads are at the top of R&D priorities at several supercomputing sites and national labs, it is the PSC installation that we are most keen to watch. This is because the Neocortex system the center was purpose-built to explore the convergence of traditional modeling and simulation and AI/ML with the Cerebras architecture as the foundation of the HPE-build system.

This week, PSC announced that the Neocortex supercomputer is open for wider end user applications, which means we can expect to see a fresh wave of published results in areas ranging from drug discovery, molecular dynamics, CFD, signal processing, image analysis for climate and medical applications, in addition to other projects. While there are no other accelerators on board the Neocortex system for direct benchmarking, work on the PSC system will yield insight into the competitive advantages of a wafer-scale systems approach.

All of this comes at a time when it is unclear which non-GPU accelerator for AI/ML applications on HPC systems will start grabbing share. At the moment, there is a relatively even distribution of AI chip startups at several labs in the U.S. in particular with Cerebras and SambaNova sharing the highest count (of public systems) and Graphcore gaining traction with recent wins in Europe in particular, including systems at the University of Bristol for shared work with CERN.

Despite progress getting novel accelerators into the hands of research centers, it is a long road ahead to challenge GPUs for supercomputing acceleration. Nvidia has spent well over a decade porting and supporting HPC applications and has plenty of hooks for AI/ML. In short, it is going to take massive power and performance gains to get some centers to buy into novel architectures for AI acceleration anytime soon after years of GPU investments. Nonetheless, what we are watching is what architecture plays the HPC/AI convergence game best. And PSC seems to think it has picked a winner in Cerebras via the (relatively small, $5 million) Neocortex machine.

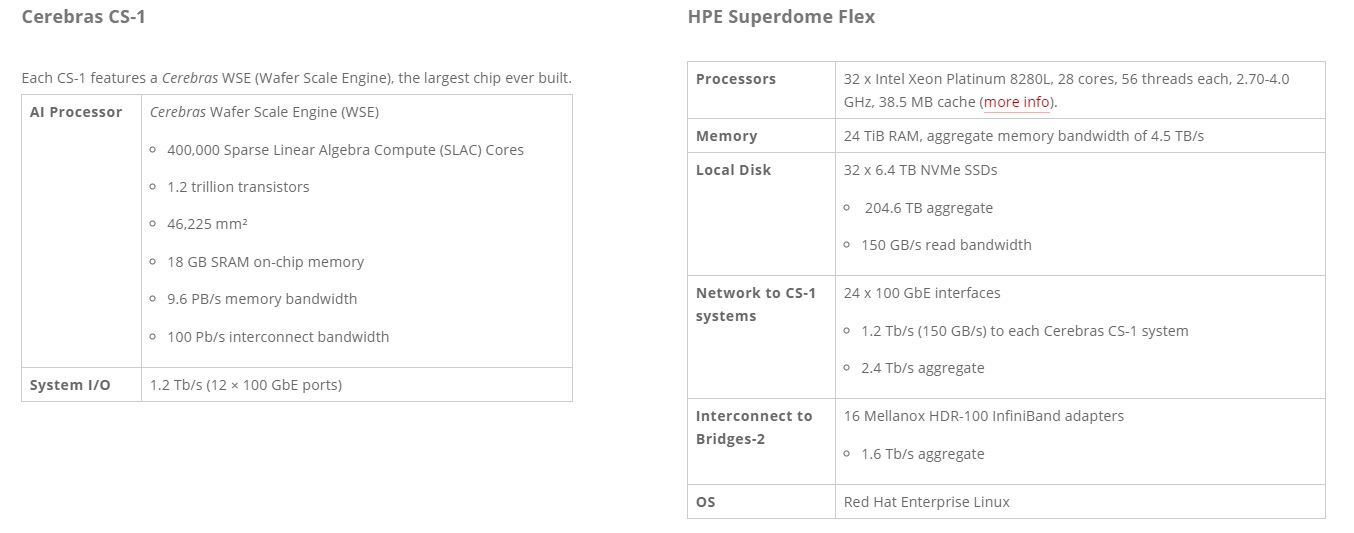

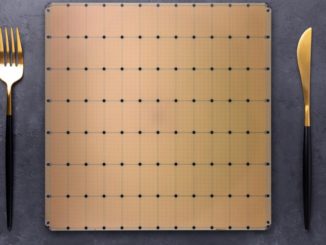

Each Cerebras CS-1 is powered by one Cerebras Wafer Scale Engine (WSE) processor, designed to accelerate training and inference with results emerging on real-world HPC applications (albeit those translated to TensorFlow). The Cerebras WSE is the largest computer chip ever built, containing 400,000 AI-optimized cores implemented on a 46,225 square millimeter wafer with 1.2 trillion transistors (versus billions of transistors in high-end CPUs and GPUs).

Neocortex will use the HPE Superdome Flex as the front-end for the Cerebras CS-1 servers. HPE’s line on this is that specially designed system will enable flexible pre- and post-processing of data flowing in and out of the attached WSEs, preventing bottlenecks and taking full advantage of the WSE capability. The HPE Superdome Flex itself will have 24 terabytes of memory, 205 terabytes of flash storage, 32 Intel Xeon CPUs, and 24 network interface cards for 1.2 terabits per second of data bandwidth to each Cerebras CS-1.

“The Neocortex program introduces a spectacular cutting-edge supercomputer resource to accelerate our research to combat breast cancer,” said Dr. Shandong Wu, Associate Professor of Radiology and Director of the Intelligent Computing for Clinical Imaging (ICCI) Lab at the University of Pittsburgh, who leads a team employing deep learning on Neocortex for high-resolution medical imaging analysis for breast cancer risk prediction. “The program also has a very responsive support team to help us resolve issues and has enabled great progress of our work.”

With Neocortex, users will be able to apply more accurate models and larger training data, scale model parallelism to unprecedented levels and, according to PSC, avoid the need for expensive and time-consuming hyperparameter optimization. A second initiative will focus on development of new algorithms in machine learning and graph analytics.

Dr. John Wohlbier of the Emerging Technology Center at the CMU Software Engineering Institute, another early user, says “As early users, we are working to use Neocortex to train novel graph neural algorithms for very large graphs, such as those found in social networks. We are excited to explore to what extent will AI-specific ASICs, such as the one in Neocortex, enable model training and parameter studies on a much faster timescale than resources found in a typical datacenter or the cloud.”

Over the course of 2021 we’ll be tracking published results for comparison between Cerebras CS-1, SambaNova’s DataScale systems, and Graphcore’s MK2 and its various installations. The opening of Neocortex is the first step in having another productive point of comparison.

Be the first to comment