Big Blue shelled out an incredible $34 billion to buy open source infrastructure software juggernaut Red Hat, and it is determined not to just tend and grow that business, which brought in around $3.85 billion in sales in 2019 as the deal closed and probably somewhere around $4.6 billion in 2020. That’s not enough. IBM wants to expand the use of Red Hat Enterprise Linux and the OpenShift implementation of the Kubernetes container orchestrator created by Google on its Power Systems and System z machinery and to convince customers who might otherwise just buy servers based on the Intel Xeon SP or AMD Epyc processors to adopt IBM’s platforms.

This is a tall order, and particularly so since IBM cannot in any way deprecate that Linux stack running on X86 or more aggressively tune up that stack running on Power or z processors. The IBM hardware has to live by its own merits and through its own total cost of ownership calculations. And, believe it or not, some very credible arguments can be made for using Power or z machinery to support modern, containerized and possibly virtualized workloads atop IBM’s indigenous systems.

It may not be the popular choice out there in the datacenters of the world, but IBM has a big base to work with: somewhere between 175,000 and 200,000 Power Systems customers – even IBM is not sure of the number because so much of its iron goes through the reseller channel and so much is vintage iron that is just humming along after being installed a long time ago – and around 5,000 of the largest companies in the world, in all of the key industries, that still use mainframes for back-end systems of record as well as analytics of various kinds. These companies have lots of X86 iron running Linux and Windows Server applications, and if IBM can convince even a small part of them to convert Windows to Linux and then pull Linux onto its centralized systems – which can be distributed computing clusters with two sockets per node, not just big, fat NUMA machines with from four to sixteen sockets in the case of Power iron – then it can have a tidy systems business that might start growing again. We analyzed this possibility from a financial perspective back in January when going over IBM’s financial results for 2020 and forecasting trends out a decade. It is not out of the realm of possibility.

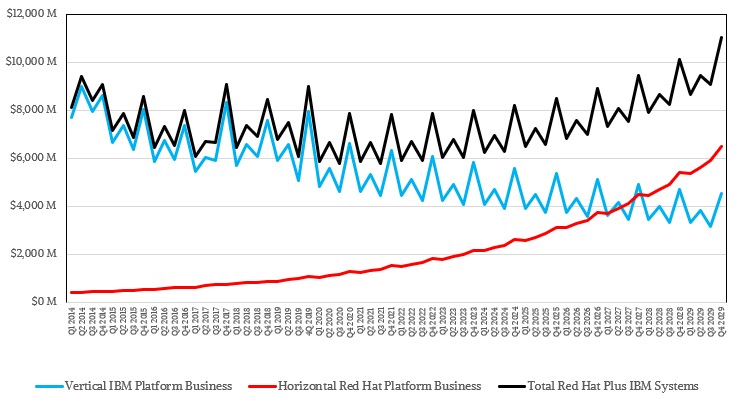

The chart above sums that up; read the detailed coverage to see how we made it. And once again, this is not what we definitely think is going to happen, but rather what it might look like if present conditions in the underlying IBM systems business (Power and z together, including hardware, software, and services) and the overlay of the Red Hat infrastructure software business (RHEL, the RHEV implementation of the KVM hypervisor, the OpenShift implementation of Kubernetes, the Ansible system administration and management tools that compete with Puppet, Chef, and homegrown scripts, the JBoss application server, and possibly the OpenStack cloud controller where virtualization control is needed) are simply added up.

IBM clearly wants to stop the decline in its Power Systems business and shore up its System z business, and that is going to take some technical feats as well as the addition of Red Hat to the mix. Red Hat is definitely the egg here that IBM wants to hatch to make hybrid Red Hat-Power and Red Hat-z chickens.

Recently, IBM has done a bunch of things that will help the company make the case for running the Red Hat stack on Power, and the company is also touting the much better TCO Power Systems offer running OpenShift. We expect IBM to make a lot of noise later this year and early next year when Power10 systems start rolling out, too. (More on that in a moment.)

Two years ago, IBM finally – after much poking and prodding – launched Power-based instances on its IBM Cloud, which it calls Power Virtual Server, and the capacity of that Power cloud has been growing as well as expanding around the globe. IBM has Power Virtual Server instances based on two-socket Power S922 servers and sixteen-socket Power E980 servers in its datacenters in Dallas, Texas and Washington DC in the United States, in Montreal in Canada, in São Paulo, Brazil, in two separate datacenters in Sydney, Australia, in Osaka and Tokyo in Japan, in Frankfurt, Germany, and in two datacenters in London, England. IBM has been banging the drum about hybrid computing, and it wants to be the platform for both sides of that hybrid for its IBM i, AIX, and Linux customers on Power.

Being hybrid needs a bunch of things, and it is not just a matter of having PowerVM server virtualization hypervisors and OpenShift container orchestration on both on-premises and cloud gear. One of the things that IBM is working with the independent software vendor community to deliver is a virtual serial number, which will allow a virtual machine to be configured and isolated and treated like a distinct piece of hardware for the purposes of application and systems software licensing. Only by having this can that portion of IBM’s Power Systems base that does not code its own applications and databases – and that is a big portion of the base – actually employ hybrid cloud.

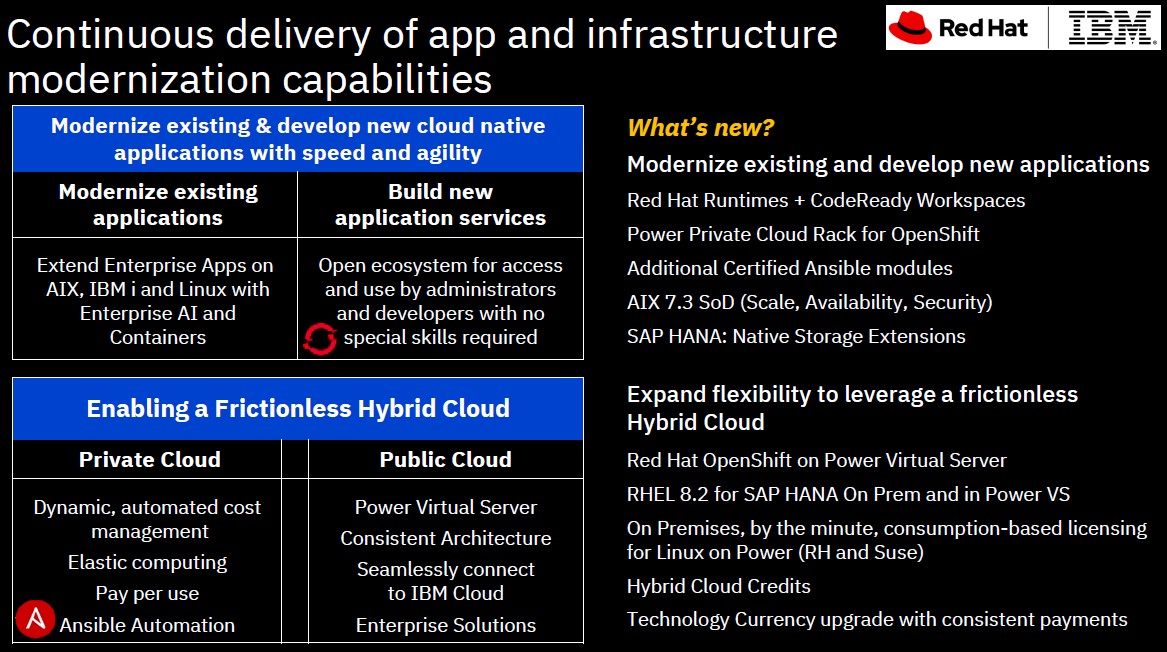

The important new thing above is that the Red Hat Runtimes, a kind of superset of JBoss with cloud native runtimes built in, and CodeReady Workspaces, a containerized implementation of the Eclipse Che integrated development environment aimed at OpenShift, are now running natively on Power platforms with RHEL. There are also, says Sibley, 102 Ansible modules that are available for Power Systems platforms (about 40 percent of them are for Big Blue’s proprietary IBM i operating system, interestingly) and out there on GitHub, they have been downloaded 13,000 times.

Another thing that IBM has been working on is cloudy metered pricing that is available both with Power Virtual Server cloud instances and with on-premises gear. This can’t be perfectly aligned, but IBM is trying to get closer than you can get by leasing iron on site and paying by the month and buying it on the cloud by the hour. The way the onsite cloudy pricing for Power Systems works is that IBM charges a base amount for the hardware, and then processing cores, memory capacity, and storage capacity latent in the machine can be turned on and off on an hourly basis at the same costs as that capacity on the IBM Cloud.

“It is a pretty interesting dynamic to try to come up with that,” Steve Sibley, vice president and global offering management for Cognitive Systems at IBM, tells The Next Platform. “We think we have pretty good and aligned pricing, although we have not rolled out the specifics quite yet. If anything, we are going to price it to encourage a little bit of usage on the cloud just to encourage companies to get started.”

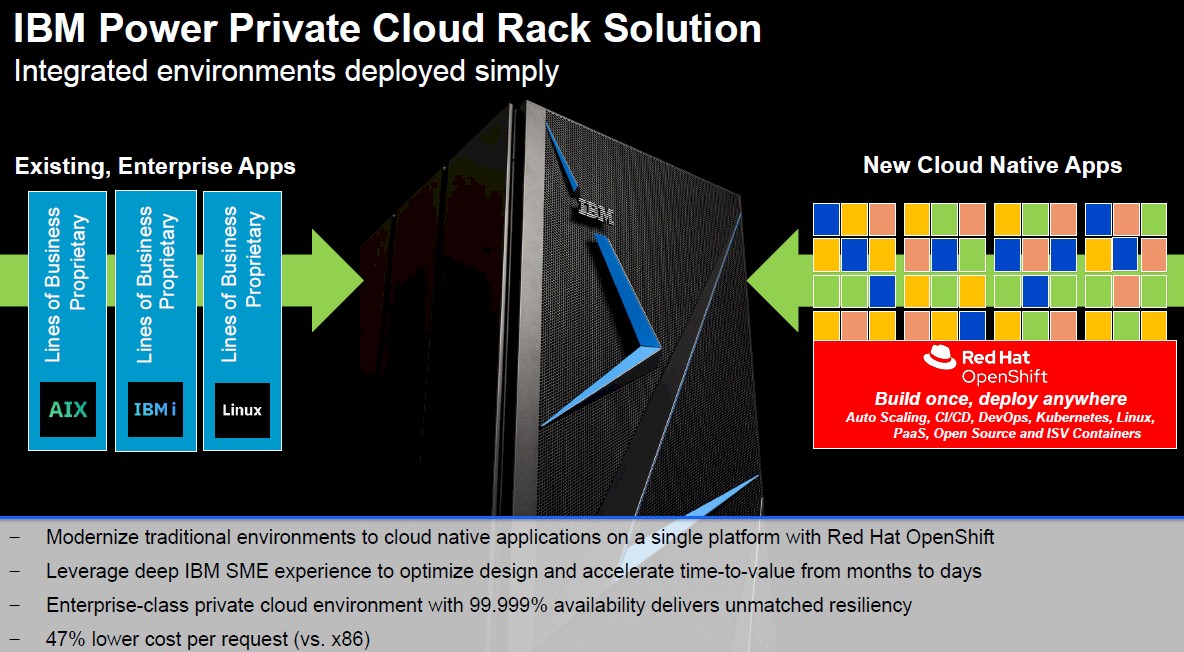

To make life a bit easier for those AIX and IBM i customers contemplating the move to microservices and containers for greenfield applications – and let us assure you that they are all looking at this because of their aging programmer and system administrator populations on those legacy AIX and IBM i platforms – Big Blue has recently fired up something called the IBM Power Private Cloud Rack Solution, which you can think of as a preconfigured OpenShift setup akin to what Google is doing with its Anthos stack. (We would have called it the Red Hat Power Stack, but IBM’s marketing people would never do that.)

The big item that will catch the eye of Big Blue’s AIX and IBM i customers is that it says that this stack, including a consulting engagement from its Lab Services division of its Global Services behemoth, can take an eight-week deployment of Power Systems clusters and the Red Hat OpenShift stack and condense it down into eight hours. The minimum configuration of this preconfigured cluster is three Power S922 servers, each with a pair of ten-core Power9 processors, 256 GB of memory, and 3.2 TB of NVM-Express flash. These machines are linked with Fibre Channel switches to a shared FlashSystem 5600 all-flash array with 9.6 TB of capacity. The nodes have RHEL 8 atop IBM’s PowerVM hypervisor; RHEL CoreOS is used on the virtual machines supporting OpenShift. This will be available on March 12.

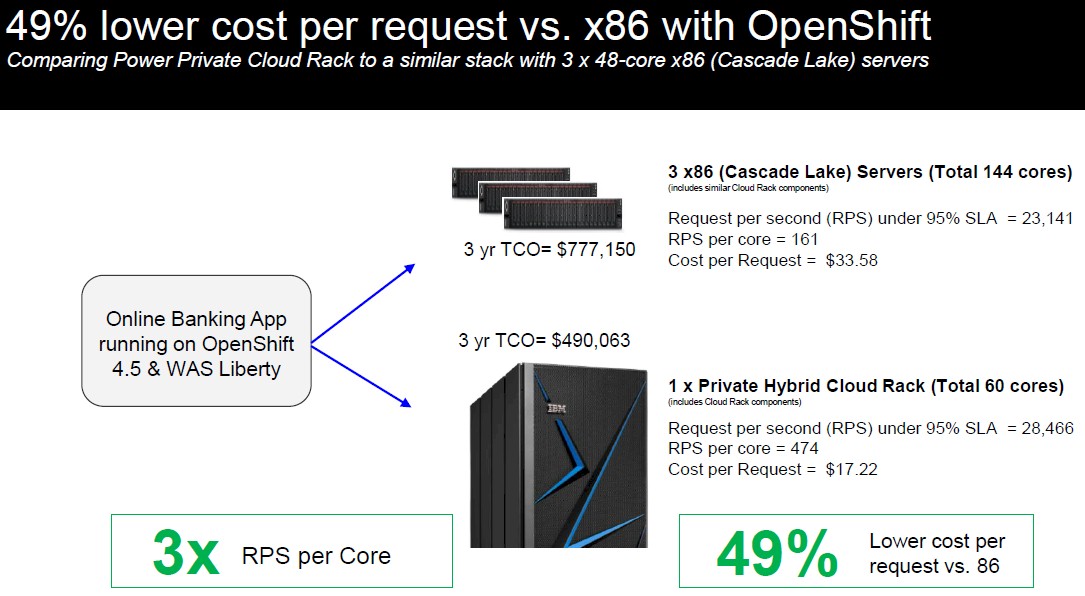

What existing AIX and IBM i customers and potential greenfield Red Hat on Power customers will be looking at, ultimately, is the TCO of Power clusters versus X86 clusters for running OpenShift. To give a sense of this, IBM took an actual set of online banking applications from one of its customers, which was running atop the Liberty freebie edition of IBM’s WebSphere Application Server (akin to Red Hat’s JBoss), and measured the responses per second of the online app running on a set of Power machinery and on a set of servers using the “Cascade Lake” Xeon SP processors from Intel. The IBM Power9 chips have eight threads per core across 60 cores for a total of 480 threads, while the Cascade Lake machines had a total of 144 cores and 288 threads. (To be precise, the IBM machines used Power9 chips with ten cores each and the Intel machines used Xeon SP chips with 24 cores each, and there were three machines each.)
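The core and thread totals quoted here are simple multiplication, and it can help to see them laid out. What follows is a minimal sketch in Python, assuming two sockets per node on both sides and two threads per core on the Xeon SPs (which is what the 144-core, 288-thread total implies); the function name is ours and purely illustrative.

```python
def cluster_totals(nodes, sockets_per_node, cores_per_socket, threads_per_core):
    """Return (total cores, total hardware threads) for a cluster of identical nodes."""
    cores = nodes * sockets_per_node * cores_per_socket
    return cores, cores * threads_per_core

# Three Power S922 nodes, two ten-core Power9 chips each, SMT8 enabled.
power_cores, power_threads = cluster_totals(3, 2, 10, 8)

# Three Cascade Lake nodes, two 24-core Xeon SP chips each.
# Two threads per core is an assumption inferred from the quoted totals.
x86_cores, x86_threads = cluster_totals(3, 2, 24, 2)

print(f"Power9: {power_cores} cores, {power_threads} threads")
print(f"Xeon SP: {x86_cores} cores, {x86_threads} threads")
```

So the Power cluster has well under half the cores of the X86 cluster but about 1.67X the hardware threads.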

Here is the fine print that was at the bottom of this chart, which you would never be able to read if we didn’t extract it:

“This is an IBM internal study designed to replicate multitier banking OLTP workload usage in the marketplace of an IBM E950 (40 core Model 9040 MR9) with a total of 1 TB memory extrapolated (based on IDC QPI performance metric) to 60 cores running on 3 nodes of IBM S922 (20 core Model 9009 22G) with a total of 768 GB memory. The OpenShift cluster consisted of three master nodes and two worker nodes using OpenShift version 4.5.5 and Red Hat Enterprise Linux CoreOS (RHCOS) for IBM Power across five PowerVM LPARs. A sixth PowerVM LPAR on the system ran the OpenShift load balancer. SMT8 mode was enabled across all Power LPARs. Results are based on an extrapolation to 3 servers from an X86 cluster configuration comprised of two servers running VMware ESXi 6.7 with eight VM guests (three masters, four workers, and one load balancer) using OpenShift version 4.5.6. Each worker node guest had access to all vCPUs on the physical server on which it was running. Compared X86 models for the cluster were 2 socket Cascade Lake servers containing 48 cores and 512 GB each for a total of 96 cores and 1 TB of memory. Both environments used JMeter to drive maximum throughput against four OLTP workload instances using a total of 500 JMeter threads. The results were obtained under laboratory conditions, not in an actual customer environment. IBM’s internal workload studies are not bench mark applications. Prices, where applicable, are based on US prices as of 02/13/2021 and X86 hardware pricing is based on IBM analysis of US prices as of 09/20/2020 from IDC. Price comparison is based on a 3 year total cost of ownership including HW, SW, networking, floor space, people, energy/cooling costs and three years of service & support for production and non production (dev, test and high availability) environments.”

As you can see, the IBM cluster processed 28,466 requests per second, compared to 23,141 requests per second for the Intel cluster, which gave IBM a 23 percent performance advantage. (Obviously, this banking application loved threads and memory bandwidth.) With the Intel cluster costing 58.6 percent more over three years ($777,150 for the X86 cluster versus $490,063 for the IBM cluster), that works out to a 48.7 percent lower cost per request. Moving to Linux is going to happen either way, and a 20 percent bang for the buck advantage should be enough to raise eyebrows and get out more than a few checkbooks. This is roughly 2.5X that level of advantage.
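For anyone who wants to check the arithmetic, here is a small Python sketch that recomputes the comparison from the four figures IBM supplied. The variable names are ours; the inputs are the requests per second and three-year TCO numbers quoted above, and with these inputs the X86 cost premium comes out at roughly 58.6 percent.

```python
# Figures from IBM's chart for the two three-node clusters.
power_rps = 28_466    # requests per second, Power9 cluster
x86_rps = 23_141      # requests per second, Cascade Lake cluster
power_tco = 490_063   # three-year TCO in US dollars, Power9 cluster
x86_tco = 777_150     # three-year TCO in US dollars, Cascade Lake cluster

# Throughput advantage of the Power cluster over the X86 cluster.
perf_advantage = power_rps / x86_rps - 1

# How much more the X86 cluster costs over three years.
x86_cost_premium = x86_tco / power_tco - 1

# Three-year dollars spent per request per second of throughput.
power_cost_per_rps = power_tco / power_rps
x86_cost_per_rps = x86_tco / x86_rps

# How much lower the Power cost per request is, relative to X86.
cost_per_request_saving = 1 - power_cost_per_rps / x86_cost_per_rps

print(f"Power performance advantage: {perf_advantage:.1%}")
print(f"X86 cost premium:            {x86_cost_premium:.1%}")
print(f"Power cost per req/sec:      ${power_cost_per_rps:,.2f}")
print(f"X86 cost per req/sec:        ${x86_cost_per_rps:,.2f}")
print(f"Lower cost per request:      {cost_per_request_saving:.1%}")
```

The cost per request figure combines both effects: the Power cluster does about 23 percent more work for about 37 percent less money, which is how a 23 percent performance edge turns into a price/performance gap of nearly 49 percent.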

It would be interesting to see how this banking app does on impending “Ice Lake” Xeon SP clusters, or impending AMD “Milan” Epyc 7003 clusters. Those gaps will close, and then later this year IBM will deliver its own Power10 big NUMA machines with four or more sockets, followed early next year by entry machines with one or two sockets based on Power10. And as we have pointed out, the Power10 chips and their memory area network present some very interesting possibilities, architecturally speaking, that neither Intel nor AMD can match. OpenShift will be able to do things on this Power10 iron that Xeon SPs and Epycs just cannot do – and that will be because of hardware advancements that IBM has made, and therefore Big Blue cannot be accused of giving preferential treatment to its Power Systems. They will have earned that preferential treatment by virtue of engineering and nothing else.

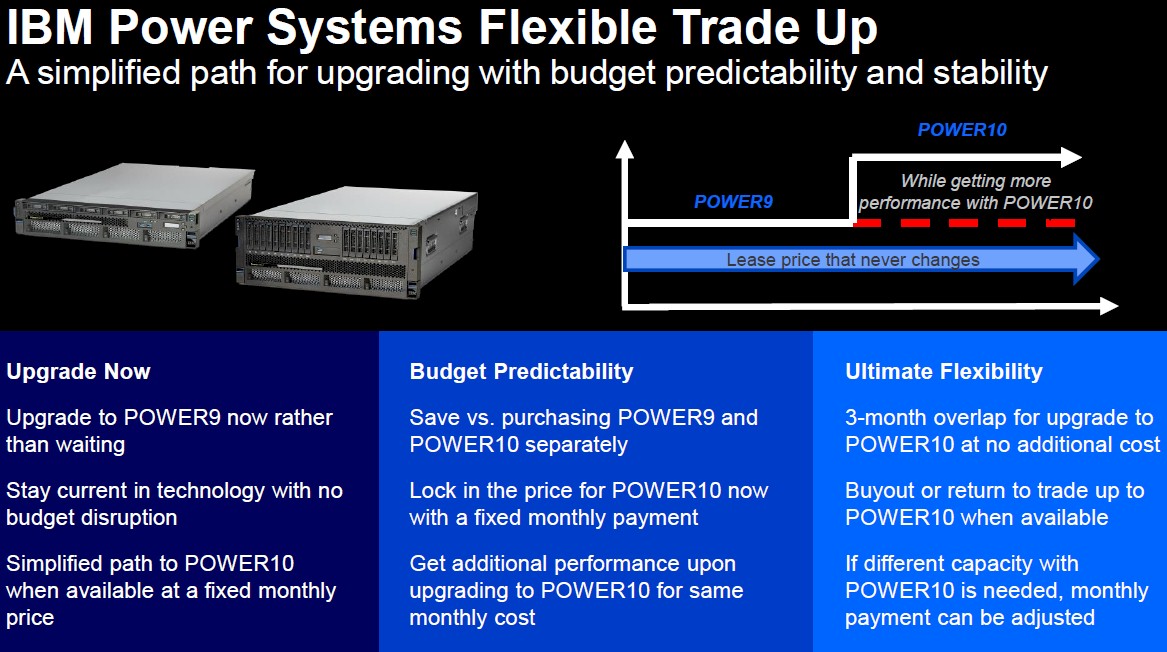

For those customers who want to buy now and can’t wait for Power10 to launch, IBM has one final deal sweetener, called the Flexible Trade Up:

Under this deal, Sibley explains, IBM will give customers a five-year or six-year lease on the machinery if they buy now. This is particularly important to customers looking to build clusters with fairly modest one-socket or two-socket machines. Halfway through the lease, IBM will give customers a 30 percent to 40 percent performance bump, the amount varying depending on the initial configuration and where the Power10 chips end up in terms of performance per thread and per core, and the lease payments stay the same. If customers decide that they don’t need the extra performance in the middle of the lease, then they can pay out the lease, own the machinery, and drop their payments by that 30 percent to 40 percent.
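One way to read the first option is in terms of cost per unit of performance. This is our own back-of-the-envelope illustration, not an IBM calculation: if the lease payment stays flat and performance rises by 30 percent to 40 percent, the cost per unit of performance falls by a smaller percentage than the bump itself. The function name below is hypothetical.

```python
def cost_per_performance_drop(performance_bump):
    """Fractional drop in cost per unit of performance when the payment stays
    flat and performance rises by performance_bump (0.30 means 30 percent)."""
    return 1 - 1 / (1 + performance_bump)

# The range of performance bumps quoted for the Flexible Trade Up.
for bump in (0.30, 0.40):
    drop = cost_per_performance_drop(bump)
    print(f"{bump:.0%} more performance, same payment: "
          f"{drop:.1%} lower cost per unit of performance")
```

On that reading, a 30 percent bump cuts the cost per unit of performance by about 23 percent, and a 40 percent bump cuts it by about 29 percent, for the back half of the lease.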

“With the Intel cluster costing 58.5 percent more over three years ($77,150 for the X86 cluster versus $490,063 for the IBM cluster), that works out to a 48.7 percent lower cost per request. ”

You may want to look for a dropped digit in the calculation, there’s an order of magnitude discrepancy within even the reported numbers quoted above.

The chart is correct. I dropped a 7 in $777,150. All fixed.