When it comes to data-intensive supercomputing, few centers face the challenges that Pawsey Supercomputing Centre does. While the center handles its fair share of scientific workloads for Australian research, the radio astronomy user base, which accounts for about a quarter of its compute cycles and nearly all of its archival storage, is what really drives Pawsey to be at the leading edge of data management and I/O.

Most of the center’s researchers run single-core jobs, with four-node jobs being the “sweet spot” in terms of usage, according to David Schibeci, head of supercomputing and technical manager for the newly announced HPE-built, all-AMD (CPU and GPU) supercomputer. The exception to the rule at Pawsey is the data processing required by the various radio astronomy sites, which do a large amount of processing on Pawsey’s existing systems, and the new machine is expected to continue that trend.

The other “sweet spot” Pawsey found is in its I/O architecture, which balances interconnect, NVMe, and smart orchestration. While the requirements driven by radio astronomy in particular might not match the more general purpose compute needed elsewhere, what they decided to do I/O-wise on their latest Cray-HPE machine is noteworthy in that it takes advantage of some new system and software features in HPC.

“Because of radio astronomy, memory bandwidth and I/O are important; data is a more important part of the workflow than compute,” Schibeci says. He points to the Slingshot interconnect in the Cray-HPE “Shasta” based system as central to the machine’s value. Because Slingshot is Ethernet based, they can plug it into the rest of the center’s network to provide access to long-term storage (which includes two massive SpectraLogic tape libraries) or their custom-built cloud network. The goal is to give researchers a more seamless way to move data to different parts of the system without major overhead.

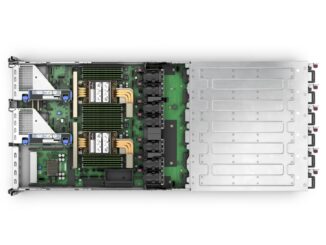

Pawsey skipped the HPC-specific storage vendors entirely, including those that specialize in NVMe-oF approaches, and went with HPE for the whole environment. That environment includes something a bit different: 400 TB of what Schibeci refers to as “rPool” storage, a collection of NVMe servers that will let the center provide file systems on demand. They were compelled in this direction because the HPE servers they will deploy don’t have local storage, which wouldn’t suit the wider base of researchers who want fast, temporary storage they don’t have to share. Further, this NVMe pool approach gives Pawsey a middle ground of sorts: a local-like file system on the fabric that is dynamic and can be carved up into temporary pieces, then released back into the larger pool.
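The carve-and-release idea behind the NVMe pool can be illustrated with a toy model. This is only a sketch of the bookkeeping involved, not HPE’s or Pawsey’s software: the `NvmePool` class, its method names, and the job names are all hypothetical, and only the 400 TB figure comes from the article.

```python
class NvmePool:
    """Toy model of a shared NVMe pool that hands out temporary file systems.

    Hypothetical illustration only; it tracks capacity, not real devices.
    """

    def __init__(self, capacity_tb):
        self.capacity_tb = capacity_tb
        self.allocations = {}  # job id -> TB carved out for that job

    @property
    def free_tb(self):
        # Capacity not currently carved out for any job.
        return self.capacity_tb - sum(self.allocations.values())

    def carve(self, job_id, size_tb):
        """Reserve a private slice of the pool for one job."""
        if job_id in self.allocations:
            raise ValueError(f"job {job_id!r} already holds an allocation")
        if size_tb <= 0:
            raise ValueError("size must be positive")
        if size_tb > self.free_tb:
            raise RuntimeError(
                f"only {self.free_tb} TB free, cannot carve {size_tb} TB"
            )
        self.allocations[job_id] = size_tb

    def release(self, job_id):
        """Return a job's slice to the pool and report its size in TB."""
        return self.allocations.pop(job_id)


if __name__ == "__main__":
    pool = NvmePool(capacity_tb=400)  # pool size quoted in the article
    pool.carve("astro-job", 120)      # hypothetical job names and sizes
    pool.carve("bio-job", 30)
    print(pool.free_tb)               # 250
    pool.release("astro-job")
    print(pool.free_tb)               # 370
```

The point of the model is that each job sees storage it does not share, yet nothing is permanently tied to a node: once a job finishes, its slice is available to the next request.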

Overall, the storage environment goes well beyond the pools. “We’ll have just above a petabyte of flash and about 8.8 petabytes of spinning disk. Those two tiers for research means they get access to fast NVMe based flash, then can seamlessly tier to spinning disk, so we get the best balance between capacity and speed. This is why HPE was more interesting than the other providers,” Schibeci explains.

“We could have provided users with the option to have an all-flash file system, which meant you got a very fast but very small file system. That doesn’t provide enough capacity for researchers and so for HPC to provide the best of both worlds we now get fast flash storage but capacity from the hard drive to keep within budget.”
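To make the flash-plus-disk trade-off concrete, here is a minimal sketch of two-tier placement. It assumes a least-recently-used demotion policy, which is an assumption for illustration; the article does not say how the HPE system decides what moves from flash to disk. The `TwoTierStore` class, the file names, and the round 1,000 TB flash capacity are likewise hypothetical.

```python
from collections import OrderedDict


class TwoTierStore:
    """Toy two-tier store: a small fast flash tier in front of a large disk tier.

    Assumed policy: new writes land on flash, and the least recently used
    files are demoted to disk whenever flash would overflow.
    """

    def __init__(self, flash_capacity_tb):
        self.flash_capacity_tb = flash_capacity_tb
        self.flash = OrderedDict()  # name -> size in TB, least recent first
        self.disk = {}              # name -> size in TB

    def flash_used_tb(self):
        return sum(self.flash.values())

    def write(self, name, size_tb):
        if size_tb > self.flash_capacity_tb:
            raise ValueError("file is larger than the whole flash tier")
        # Drop any stale copy, then place the new data on flash.
        self.disk.pop(name, None)
        self.flash.pop(name, None)
        self.flash[name] = size_tb
        self._demote_until_fits()

    def read(self, name):
        """Return which tier served the read."""
        if name in self.flash:
            self.flash.move_to_end(name)  # mark as most recently used
            return "flash"
        if name in self.disk:
            return "disk"
        raise KeyError(name)

    def _demote_until_fits(self):
        # Move least recently used files to disk until flash fits again.
        while self.flash_used_tb() > self.flash_capacity_tb:
            victim, size = self.flash.popitem(last=False)
            self.disk[victim] = size


if __name__ == "__main__":
    store = TwoTierStore(flash_capacity_tb=1000)  # roughly a petabyte
    store.write("obs-a", 600)
    store.write("obs-b", 300)
    store.read("obs-a")         # obs-a is now the most recently used
    store.write("obs-c", 400)   # flash would hold 1300 TB, so obs-b is demoted
    print(store.read("obs-b"))  # disk
    print(store.read("obs-a"))  # flash
```

Under this assumed policy, users always write at flash speed while total capacity is bounded by the much larger disk tier, which is the balance between speed and budget that the quote describes.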

Another challenge Pawsey has with balancing traditional HPC and radio astronomy (with some extra footwork for other big users of their systems in bioinformatics and medical applications) is dealing with mixed workloads. Historically, this isn’t something Lustre, their file system of choice, has been good at. “Most of our scratch storage is Lustre, we’ve been using it for 11 years but the big difference with this new system is the mix of flash and local disk. Lustre has improved in this regard over the last couple years, especially with metadata performance and overall I/O. Having the rPool as another option adds another tool to the box, as do containers.” He says they’ve been using the HPC-native Singularity container approach for almost a year with particular success in the resource-intensive radio astronomy codes. “In a lot of ways there are software solutions to hardware problems,” Schibeci adds.

Radio astronomy accounts for about 25% of compute resources at Pawsey but 85% of its storage capacity. The center has two large SpectraLogic tape libraries almost entirely dedicated to it. “That’s always been the issue with radio astronomy, it’s a data-driven problem. That’s one of the reasons why going to something like Slingshot on the Cray-HPE system makes sense, we can move data in and out of the system easier.”

Schibeci adds that one of the longer-term goals is to look at object storage more seriously to keep important data at the ready. All things are possible with a single fabric, which was something they didn’t have in previous Cray machines; previously, there was a high-speed internal fabric and a separate storage fabric. Another “pie in the sky” goal is to be able to jump seamlessly between cloud and supercomputing resources. This is something they’ve been working toward with containers, and it is now a capability of the HPE-Cray Shasta management system, which makes it possible to orchestrate containers across a system. This also allows more complicated workflows without users needing to be heavily aware of the complexity on the infrastructure side.

Much of their orchestration, aside from HPE-Cray native tools, is built around Nextflow, which has caught on at several major supercomputing centers. Schibeci says the radio astronomy and bioinformatics users found this especially useful for complicated workflows. In addition, Pawsey is exploring Kubernetes as an option that’s now part of their HPE stack via Kubernetes Pods.

Pawsey has taken quite a leap already by moving to an all-AMD heterogeneous system, but like many other HPC sites with demanding workloads, they’re always doing the price/performance dance. Schibeci says that even though the radio astronomy segment of their user base is demanding from a resource allocation standpoint, having an outlier set of workloads and users keeps the center at the forefront of what’s new. “It forces us to keep close to the cutting edge and lifts all the other researchers up at the same time.”
