If there is one thing that is absolutely immune from inflationary curbs and that is, to a certain degree, also contributing to inflationary pressures in the global economy, it is generative AI. In fact, from the limited data we have about server and storage spending in the world right now, it looks a lot like AI infrastructure spending is propping up the revenue streams for servers and storage while the underlying spending for datacenter gear for other workloads has gotten even weaker than it was at the beginning of the year.

To put it bluntly, the core server and storage markets are in recession as enterprises take a pause and hyperscalers “consume” the infrastructure they have already bought late last year, but spending on expensive AI systems has absolutely exploded.

This is the kind of explosion in “high performance computing” that the traditional HPC simulation and modeling community has always dreamed was possible but which has never quite materialized. Which is sad, we would argue, because there is a fair argument to be made that HPC does more useful stuff in terms of helping people create things or fix things than generative AI does, and there is every expectation that generative AI will eliminate whole classes of work from the global economy “in the fullness of time” as Andy Jassy, Amazon chief executive officer, is fond of saying when he thinks about long term trends.

But, no one wants to talk about that. So we will move on and set the stage for our analysis.

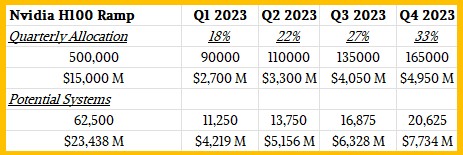

We were taught by our physics professor in college to make a rough estimate of our answer before we start to solve the problem, thereby doing error correction as we go along. So let’s start with the rumor going around earlier this summer that Nvidia can only make around 500,000 “Hopper” H100 GPUs in calendar 2023.

If you assume that Nvidia’s partners can sell them at an average of $30,000 a pop, then those H100 sales alone generate $15 billion, which feeds into the revenues that companies like IDC count each quarter. If you assume a gradually increasing distribution of those 500,000 H100 GPUs as 2023 progresses – 18 percent in Q1, 22 percent in Q2, 27 percent in Q3, and 33 percent in Q4 seem like a reasonable guess for a ramp – then in Q2 2023 alone, those GPUs drove $3.3 billion in sales. Now, if the average machine has eight of these GPUs and is loaded up similarly to an Nvidia DGX H100 with memory, flash, and network interfaces, then at the system level these GPU-laden systems based on the H100s accounted for around $5.2 billion in sales. Add in other accelerators from Nvidia as well as those from anyone else who is selling a box that can do matrix math at scale, and maybe you have something around $7 billion in revenues just from the AI boom.
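The arithmetic behind that rough estimate can be sketched in a few lines of Python. This is only a back-of-envelope check using the figures quoted above; the variable and function names are ours, and the implied system price is simply what falls out of dividing the $5.2 billion system estimate by the number of eight-GPU machines, not a number from IDC or Nvidia.

```python
# Back-of-envelope H100 revenue estimate, using only figures quoted in the text.
H100_UNITS_2023 = 500_000        # rumored 2023 supply of H100 GPUs
AVG_GPU_PRICE_USD = 30_000       # assumed average selling price per GPU
GPUS_PER_SYSTEM = 8              # DGX H100-style machine
Q2_SYSTEM_REVENUE_USD = 5.2e9    # system-level Q2 estimate from the text

# Assumed shipment ramp across 2023, as fractions of full-year units.
QUARTERLY_SHARE = {"Q1": 0.18, "Q2": 0.22, "Q3": 0.27, "Q4": 0.33}


def gpu_revenue_by_quarter(units, price, shares):
    """Split full-year GPU revenue across quarters according to the ramp."""
    full_year = units * price
    return {quarter: full_year * share for quarter, share in shares.items()}


gpu_revenue = gpu_revenue_by_quarter(
    H100_UNITS_2023, AVG_GPU_PRICE_USD, QUARTERLY_SHARE
)

# Derived quantities for Q2 2023.
q2_gpus = H100_UNITS_2023 * QUARTERLY_SHARE["Q2"]
q2_systems = q2_gpus / GPUS_PER_SYSTEM
implied_system_price = Q2_SYSTEM_REVENUE_USD / q2_systems
system_uplift = Q2_SYSTEM_REVENUE_USD / gpu_revenue["Q2"]

for quarter, revenue in gpu_revenue.items():
    print(f"{quarter}: ${revenue / 1e9:.2f} billion in GPU revenue")
print(f"Q2 systems (8 GPUs each): {q2_systems:,.0f}")
print(f"Implied average system price: ${implied_system_price:,.0f}")
print(f"Q2 system revenue / GPU revenue: {system_uplift:.2f}x")
```

Under these inputs, Q2 GPU revenue comes out at $3.3 billion, matching the text, and the $5.2 billion system figure corresponds to roughly 13,750 eight-GPU machines at about $378,000 apiece. Change the ramp or the price and the whole chain moves with it, which is the point of estimating first.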

Here is our rough estimate table; pick at it as you will:

Before this generative AI boom, the average selling price of a server, according to our historical IDC data, was somewhere around $7,000 – and that was with a pretty hefty machine learning AI cycle happening, some big HPC installations, and a mainframe and Power Systems ramp happening at IBM at the same time in early 2021. (This is the last time IDC put out its quarterly server tracker to the public.)

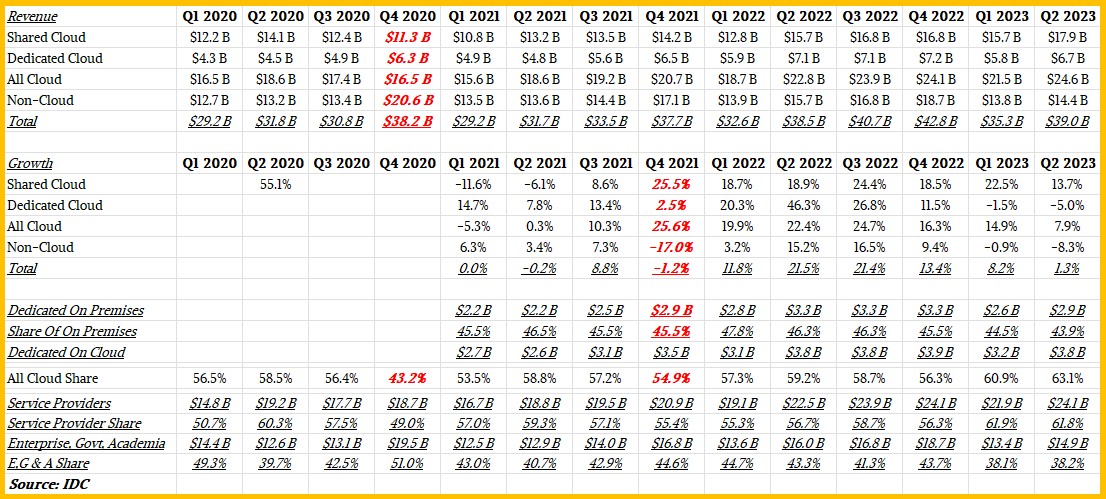

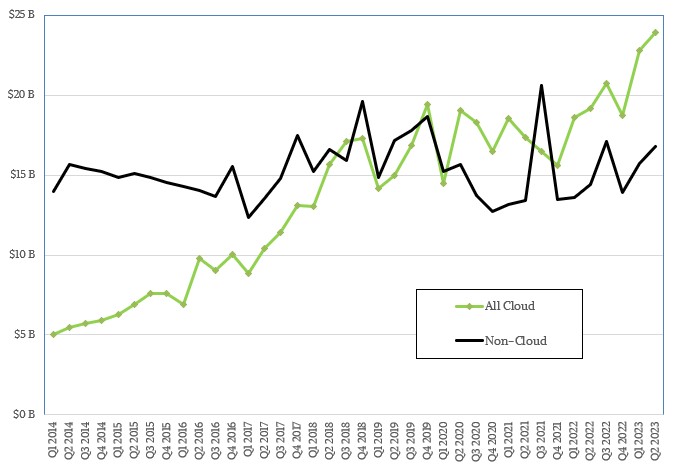

After dicing and slicing the data from IDC for datacenter infrastructure spending in Q2 2023, which was just released, we think a big portion of the $39 billion in spending was driven by AI systems. If it is around $7 billion, as we estimated above, then only $32 billion was for infrastructure to support other kinds of workloads. Even with that AI boost, spending for all server and storage infrastructure was only up by 1.3 percent in Q2 2023, which is much more anemic than the 8.2 percent growth that IDC reported for Q1 back in April and that we commented upon here. There was sequential growth between Q1 and Q2, which is good, with spending rising from $35.8 billion in the first quarter. We are approaching the $40.7 billion in spending seen in Q3 2022 and looking towards the peak $42.8 billion in spending seen in Q4 2022, just as the GenAI revolution was building up steam.

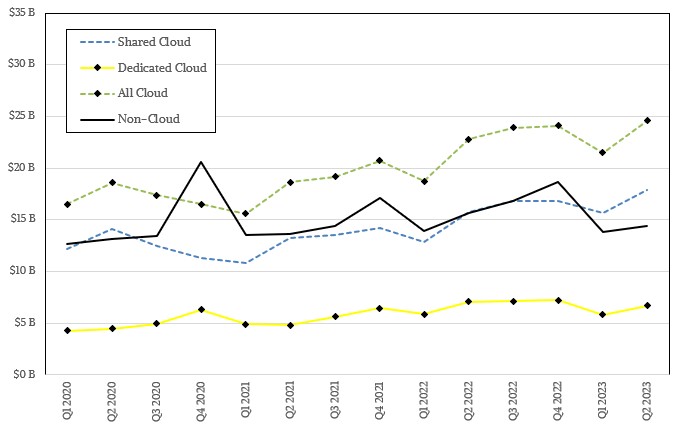

Here is our summary data of the datacenter server-storage model that IDC put out in 2021 to get rid of double counting of storage in its independent server and storage trackers. For hyperscalers and cloud builders, storage is just a skinny server CPU motor and a lot of disk or flash storage in a big fat box. How do you count that? As a server or as storage? IDC did both, which meant you could not reconcile the two except in the way IDC has done it in its current methodology, by counting everything at the same time.

Here is the data for cloud and non-cloud infrastructure spending using this new methodology from Q1 2020 through Q2 2023:

As usual, anything in red bold italics is an estimate by us to fill in gaps in the data.

We just finished the third quarter of 2023 last week, so data is not available for that, and it takes IDC a while to reconcile all of the financials from the public companies and build its datacenter spending model. So we probably won’t see the third quarter numbers until January 2024.

In the second quarter of this year, spending on shared cloud infrastructure – again, servers and storage but not networking – by the hyperscalers and cloud builders rose by 13.7 percent to $17.9 billion, but spending on dedicated cloud infrastructure – which means stuff that runs in corporate datacenters as well as outposts of the big clouds that run either in co-location facilities or in corporate datacenters – was down by a little more than 5 percent year on year to $6.7 billion. In Q1, spending on dedicated cloud was down 1.5 percent to $5.8 billion. Two consecutive quarters of decline is a recession.

Spending on non-cloud infrastructure – things like big X86, Power, or mainframe systems and their SAN storage that supports relational databases and ERP, supply chain management, warehouse management, and customer relationship management applications – fell by 8.3 percent to $14.4 billion. Again, there has been sequential growth in this non-cloud segment, which is good, from $13.8 billion in Q1 2023. But non-cloud spending was down 0.9 percent in Q1 and is down 8.3 percent in Q2, and two consecutive quarters of decline is a recession.

Here is the thing. The intense GPU shortage means that the biggest players in GenAI – Microsoft, Google, and Amazon Web Services – are getting preferential allocations from Nvidia, and this in turn is accelerating the adoption of infrastructure rental from the cloud builders. As long as there are shortages, this will be the case. But with Nvidia reportedly being able to ramp to 1.5 million to 2 million H100 GPU units in 2024, perhaps the scarcity will abate some and the prices will come down and the server market will normalize. That certainly happened after the server memory spikes of 2018 and 2019, when DRAM prices doubled and also drove up server average selling prices.

The point is, when you need 20,000 to 25,000 GPUs to train a state of the art GenAI model, 500,000 units is only on the order of 20 to 25 clusters. There are more than 20 to 25 organizations worldwide who want to do this, and given it takes months to train these models with trillions of parameters to sift through and trillions of data tokens to chew on, that means that maybe you can have hundreds of organizations share that infrastructure. Even 2 million units at this scale is only several hundreds of customers sharing the infrastructure – and that is not going to necessarily meet the GenAI demand.
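To make the cluster arithmetic concrete, here is a small Python sketch. The cluster sizes and GPU supply figures come from the text; the `training_months` parameter is purely our assumption (the text only says training takes "months"), so treat the tenants-per-year numbers as illustrative rather than a forecast.

```python
def cluster_range(total_gpus, min_cluster=20_000, max_cluster=25_000):
    """Return (fewest, most) full clusters that a GPU supply can form."""
    return total_gpus // max_cluster, total_gpus // min_cluster


def tenants_per_year(clusters, training_months):
    """Organizations served per year if each books a cluster for one run.

    training_months is a hypothetical input, not a figure from the text.
    """
    runs_per_cluster = 12 // training_months
    return clusters * runs_per_cluster


# GPU supply scenarios quoted in the text.
SUPPLY = {
    "2023 (500K)": 500_000,
    "2024 low (1.5M)": 1_500_000,
    "2024 high (2M)": 2_000_000,
}

ASSUMED_TRAINING_MONTHS = 3  # assumption for illustration only

for label, gpus in SUPPLY.items():
    low, high = cluster_range(gpus)
    low_tenants = tenants_per_year(low, ASSUMED_TRAINING_MONTHS)
    high_tenants = tenants_per_year(high, ASSUMED_TRAINING_MONTHS)
    print(f"{label}: {low} to {high} clusters, "
          f"{low_tenants} to {high_tenants} tenants per year")
```

With a three-month training window, 500,000 GPUs support something like 80 to 100 training runs a year, and 2 million GPUs support 320 to 400. A shorter or longer window scales those counts accordingly.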

Which probably means that GPU pricing will continue to be high and therefore AI system prices will remain high.

The thing that has us concerned is that server and storage shipments were down 23.2 percent in the second quarter – we don’t know the number of machines that shipped because IDC didn’t provide it. This is after an 11.4 percent shipment decline in Q1 2023. Two consecutive quarters of decline is a recession in server and storage shipments.
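The "two consecutive quarters of decline" rule of thumb used throughout can be written down as a simple check. The sketch below applies it to the year-on-year growth rates quoted in this article; note that the Q2 dedicated cloud figure is given only as "a little more than 5 percent," so the -5.0 used here is a stand-in, and the names are ours.

```python
# Year-on-year growth rates in percent, ordered [Q1 2023, Q2 2023],
# as quoted in the text.
YOY_GROWTH = {
    "total spending": [8.2, 1.3],
    # Q2 is "a little more than 5 percent" down; -5.0 is a stand-in value.
    "dedicated cloud": [-1.5, -5.0],
    "non-cloud": [-0.9, -8.3],
    "shipments": [-11.4, -23.2],
}


def in_recession(growth_rates, quarters=2):
    """True if the most recent `quarters` readings are all negative."""
    recent = growth_rates[-quarters:]
    return len(recent) == quarters and all(rate < 0 for rate in recent)


flags = {segment: in_recession(rates) for segment, rates in YOY_GROWTH.items()}

for segment, flagged in flags.items():
    status = "recession" if flagged else "no recession"
    print(f"{segment}: {status}")
```

By this test, dedicated cloud, non-cloud, and unit shipments are all in recession, while total spending is not, because AI system revenue keeps the aggregate in positive territory.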

Looking ahead to the end of this year, IDC is projecting that cloud infrastructure spending will grow by 10.6 percent to $101.4 billion; an earlier projection three months ago thought the growth would be 7.3 percent for the full year. The shared cloud portion is expected to grow by 13.5 percent to $72 billion, and the dedicated cloud portion will grow by 4.1 percent to $29.4 billion for all of 2023. Non-cloud server and storage spending will fall by 7.9 percent to $58.5 billion. We think all of that growth in the cloud will be driven by AI servers, which in turn is driven by the increasing allocations of GPUs from Nvidia and other AI engines from its competitors.

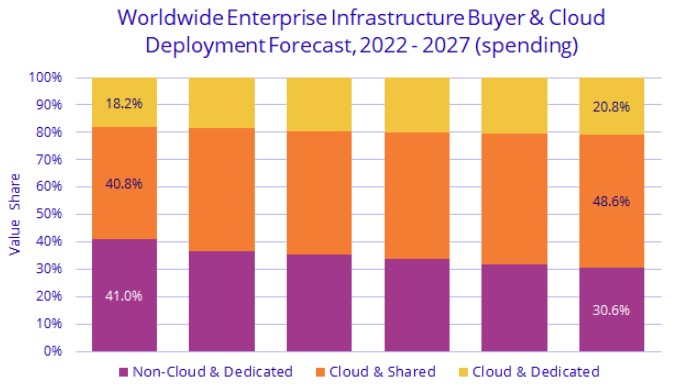

The longer term forecast is for cloud infrastructure spending to grow at a compound annual growth rate of 11.3 percent between 2022 and 2027 inclusive, ending up at $156.7 billion at the end of 2027 and accounting for 69.4 percent of total server and storage spending. Shared cloud will reach $109.7 billion in 2027, with an 11.6 percent CAGR, and dedicated cloud will hit $47 billion with a 10.7 percent CAGR. Non-cloud server and storage spending will rise, believe it or not, to $69.1 billion, representing a 1.7 percent CAGR between 2022 and 2027 according to IDC’s forecast.
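As a sanity check on those forecast numbers, the sketch below backs out the 2022 base implied by each 2027 figure and CAGR, and recomputes the cloud share of total spending. It assumes five compounding years between 2022 and 2027, which is our reading of the forecast window; the function and variable names are ours.

```python
def implied_base(final_value, cagr, periods):
    """Starting value implied by an ending value and a compound growth rate."""
    return final_value / (1 + cagr) ** periods


# segment: (2027 spending in $ billions, CAGR), as quoted in the text.
FORECAST_2027 = {
    "cloud": (156.7, 0.113),
    "shared cloud": (109.7, 0.116),
    "dedicated cloud": (47.0, 0.107),
    "non-cloud": (69.1, 0.017),
}
PERIODS = 5  # 2022 -> 2027, assumed to be five compounding years

bases_2022 = {
    segment: implied_base(value, cagr, PERIODS)
    for segment, (value, cagr) in FORECAST_2027.items()
}

cloud_2027 = FORECAST_2027["cloud"][0]
non_cloud_2027 = FORECAST_2027["non-cloud"][0]
cloud_share = cloud_2027 / (cloud_2027 + non_cloud_2027)

for segment, base in bases_2022.items():
    print(f"{segment}: implied 2022 base of ${base:.1f} billion")
print(f"Cloud share of 2027 spending: {cloud_share:.1%}")
```

The implied 2022 bases line up closely with what the 2023 projections imply (for example, $101.4 billion after 10.6 percent growth points to a 2022 cloud base near $91.7 billion), so the forecast figures are internally consistent under the five-year assumption.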

I see your concern about the “R” word here. It occurs in 2 sub-segments (dedicated-cloud and non-cloud) while “Total” still has positive growth (8.2% and 1.3%, for Q1-Q2 ’23, in the big table). If the digestive plug-up is caused by CoWoS delaying availability of much desired HBM then transit should become more regular, pronto, with “simple” investments into known tech (flux capacity).

Also, with so much (long awaited) new tech finally pushing out, and rapidly so, there could be some decisional issues at play (pending broader benchmarking, or top500 results, to aim right) of whether to remain Zen, go full-hog SR, wait for rock solid Granite, a ride in the Sierra, or a digestive walk on the wild side with some of ARM’s cheeky twelve Olympians (of the Neoverse). DC piping choices have just exploded it seems, from a couple years ago when EPYC meditation sat as the lone ranger of success in such operation! 8^p

AI is eating the world.

It’s like that witch in Hansel and Gretel … Hey, look, a gingerbread, cake, and candy house! p^8