For those who have followed the evolution of supercomputing over the years, the Earth Simulator series of systems has represented the state of the art in workload-oriented design. This week it was announced that the fourth Earth Simulator system will be coming online in March 2021 with a blend of classic NEC pure vector processors mixed with Nvidia GPU accelerators.

The Japan Agency for Marine-Earth Science and Technology (JAMSTEC) is sticking to its NEC roots for the newest machine. It will be based on NEC’s SX-Aurora TSUBASA vector processors paired with Nvidia’s A100 GPUs, with Mellanox/Nvidia’s HDR 200Gb/sec InfiniBand tying it all together. This combination will, at theoretical peak, give the system 19.5 petaflops, securing it a probable slot in the top 10 supercomputers list or a close number 11 or 12 ranking. (19.5 petaflops is the exact peak performance of the SuperMUC machine at Leibniz, trailed by the number 10 “Lassen” machine at Lawrence Livermore National Laboratory with 18.2 petaflops.)

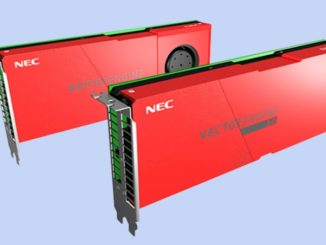

We have analyzed the NEC Aurora architecture in the past, and what’s notable is that the analysis from 2017 still applies, since the 2021 machine uses the exact same device. In other words, this is not a new machine built to showcase a new architecture. The new element is GPU acceleration, which could be used for benchmarking NEC parts against GPUs, could serve a critical role in any future AI training plans, or both.

We have feelers out now to understand how many nodes there are overall on the machine, which we can’t back out of the peak figure ourselves since we don’t know how many of the nodes have GPUs, how many GPUs there are per node, and so on. One thing we do know is that if GPU offload is introduced, it will complicate a base of legacy applications that have been primed for pure vector since the dawn of time. It could also be that there are relatively few GPUs on this new machine and they are being installed for performance comparisons and code work. We’ll update the story when we have more on the node and GPU distributions. It could very well be that the projected petaflops figure is heavily influenced by GPUs, after all.

The Earth Simulator supercomputers will be used to model an ever-increasing array of earth systems (climate at the forefront), but let’s pop back in time for a moment for some context. And there’s a point to this context that goes beyond mere history: the evolution of function is the issue here. In the early days of the NEC vector engines, these machines were incredible in terms of compute, bandwidth, and efficiency. So much so that when Fujitsu and NEC tried to sell similar systems to the U.S. for weather modeling several years ago, they were blocked. But as time has gone on, that story has fallen apart a bit, at least in terms of NEC being the sole winner on compute, memory, and efficiency.

The first machine, which roared to life in 2002 (after a planning phase that began in 1997), was an NEC-built system based on the SX-6 architecture that spanned 640 nodes and absolutely shattered the then-current performance record for any supercomputer. For context, the top machine at the time was the ASCI White super, which lagged by 5X according to 2002 LINPACK results. The first Earth Simulator supercomputer held the top spot for two years until the BlueGene architecture from IBM came along and ushered in a new (but relatively short-lived) architectural heyday.

The second incarnation of the Earth Simulator machine came in 2009 and was architecturally similar, with upgraded SX-9 processors that allowed about 4X the performance per node. That reduced the node count dramatically and earned the system the designation of the most efficient architecture of the time, even compared to BlueGene, which was notable for its efficiency as well.

The third generation Earth Simulator system appeared on the Top 500 list in 2015 with peak performance of 1.3 petaflops. This was based on NEC’s SX-ACE and brought a huge increase in node count, to 5,120. The system was later paired with another Japanese supercomputer for large-scale earth systems modeling (the Gyoukou system) for a total of 19 peak petaflops.

Just for fun, let’s take a look at how the pure vector approach might scale. Perhaps NEC needed to get JAMSTEC teams on board with acceleration and code refactoring because the pure vector approach won’t continue to offer performance, scalability, or anything even close to the great power efficiency story it was once heralded for. From that 2017 deep dive into the architecture (and remember, we just had “Volta” V100s for comparisons):

The top-end Tsubasa system has 32, 48, or 64 Vector Engines, meaning four, six, or eight of the A300-8 enclosures linked together with 100 Gb/sec InfiniBand. This rack of machines can deliver 157 teraflops at double precision, 76.8 TB/sec of aggregate memory bandwidth, and do so in under 30 kilowatts of power.

Of course, InfiniBand scales a lot further than that. To reach a petaflops of compute would require around six and a half racks and about 191 kilowatts of juice, which is not too bad, and a simple two-tier Quantum HDR InfiniBand switch fabric with a couple of switches could easily handle those nodes (with 40 200 Gb/sec ports or 80 100 Gb/sec ports per switch). To get to exascale, however, is problematic. With the current SX-10+ chip, it would take 6,369 racks of machines and 191 megawatts of juice to hit 1,000 petaflops. A factor of four contraction in the power budget would be better, and a factor of 10X reduction in form factor would still be pushing it.

To continue forward with the pure vector approach from NEC (at least in the absence of a new incarnation of the Tsubasa design) would require a nuclear power plant for exascale-class math capability.
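The rack arithmetic in the 2017 excerpt above is easy to reproduce. The sketch below is only a back-of-the-envelope check, not anything from NEC or JAMSTEC: it takes the quoted 157 teraflops per rack and treats the "under 30 kilowatts" figure as exactly 30 kW (an assumption that makes the power numbers an upper bound). The function name `racks_and_power` is hypothetical, chosen for illustration.

```python
# Back-of-the-envelope scaling check using the per-rack figures quoted above.
# Assumption: power is taken as exactly 30 kW per rack, although the source
# says "under 30 kilowatts", so the results are upper bounds.

RACK_TFLOPS = 157.0  # double-precision teraflops per rack (quoted figure)
RACK_KW = 30.0       # kilowatts per rack (upper bound, see assumption above)


def racks_and_power(target_petaflops):
    """Return (racks, power_kw) needed to reach the target peak performance.

    Scaling is assumed to be perfectly linear, which ignores interconnect,
    storage, and cooling overheads.
    """
    target_tflops = target_petaflops * 1000.0
    racks = target_tflops / RACK_TFLOPS
    power_kw = racks * RACK_KW
    return racks, power_kw


if __name__ == "__main__":
    for label, petaflops in (("1 petaflops", 1), ("1 exaflops", 1000)):
        racks, power_kw = racks_and_power(petaflops)
        print(f"{label}: {racks:,.1f} racks, {power_kw:,.0f} kW")
```

Under these assumptions the numbers line up with the excerpt: roughly six and a half racks and about 191 kilowatts for a petaflops, and about 6,369 racks and 191 megawatts for 1,000 petaflops.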

This is not to say this architecture has hit the end of its lifespan, but as things stand, continued upward scaling of performance will come to a standstill without the hard code work required to use those GPUs to their fullest. It’s a great architecture for workloads that need a lot of heavy math capability and memory bandwidth, and not every science program is geared toward exascale.

Hi from Spain.

If they say a computing project does not require exascale, then it is not worth the effort.

regards.

Just “returned” from the virtual NEC User Group meeting where this project was presented. The VE to GPU ratio is quite close to 100, so the vector engines get very very little help. They’re rather there to broaden the scope of this machine.