Users of relational database management systems are accustomed to sub-second response for relatively simple online transaction processing, and have been able to enjoy those zippy responses for decades. The big caveat there is relatively simple transactions – looking up data or processing an order – and against fairly modest databases weighing in at hundreds of gigabytes to tens of terabytes for most enterprises.

To be sure, enterprises have thousands of little databases doing all kinds of work for thousands of applications, but in the modern era, when all data is sacred and stored for potential use to gain insights and it is spread across all kinds of unstructured, semi-structured, and absolutely structured OLTP and data warehouse databases, big companies need something that can scale up to petabytes and beyond and that can look and feel like a single database for users even if, under the covers, it is a hodge-podge collection of data stored in countless formats. In fact, you want a kind of uber-database that skins all of these databases and presents them to programmers as if it were one giant, sensible, organized database.

That, in a nutshell, is what the PrestoDB database originally created by Facebook can do, although that was not its original design intent, and that is why Dipti Borkar and Steven Mih have formed a company called Ahana to extend and commercialize PrestoDB.

One of the ironies of this is that Google Ventures, which now calls itself simply GV, just led a $2.25 million funding round, along with participation from Leslie Ventures (headed up by Mark Leslie, one of the co-founders of file system maker Veritas) and other angel investors who kicked in some funds last year to get Ahana going and allow Borkar and Mih to leave their jobs at Alluxio, the company that is the commercializer of the Tachyon in-memory data caching layer that was created by the AMPLab at the University of California Berkeley. (We did a deep dive on Tachyon and its Alluxio variant back in February 2016.) So where is the irony? The Presto project and the PrestoDB and PrestoSQL variants of the database that are derived from the ideas embodied in Facebook’s major in-memory database (the social network has Scuba as a super-fast but more limited database in addition to Presto) are the biggest threats to Google’s own BigQuery massively scalable database. Facebook’s is open source. Google’s is not. At least for now. And it is Google’s investment arm that is hedging its bets by investing in the competition.

It’s Like Magic

Like everyone else outside of Facebook, we first ran across the company’s Presto and Scuba in-memory databases back in November 2013, when Facebook was bragging about the Presto database at the Strata+Hadoop World event and when we also got Ken Rudin, its head of analytics, to talk a little bit about Scuba as well.

Facebook had actually talked about Scuba a few months earlier, but it did not garner as much attention because it was not fully SQL compliant and many features of commercial RDBMS systems – importantly table joins – were not supported. And Scuba did not have anything close to the scale of Presto, either.

At the time, Facebook had just broken through 1 billion users. Scuba was used for time-sensitive and often time series data, such as changes in ad impressions, clicks, and revenue, and could support 70 TB of compressed data and about 1,000 tables across hundreds of servers. Presto, on the other hand, implemented a 300 PB in-memory database, written in Java and running across tens of thousands of nodes in Facebook’s datacenters, that rode atop Hadoop’s HDFS file system and did not make use of either the MapReduce protocol or its Hive SQL layer, which was created by Facebook in early 2008 and open sourced that summer. (We think Facebook was using a mix of memory and flash to store Presto data back then, and certainly not all of the 300 PB in the HDFS clusters was stored in memory because it would take several hundred thousand servers, even with 5:1 compression as it was touting at the time for storing HDFS data, to store 300 PB completely in memory.) Presto, which was created in 2012, was a native, distributed SQL engine that could access HDFS directly. Because it was a massively parallel query engine that could pull data into memory as needed to process quickly, rather than reading raw data from disk and storing intermediate data to disk as MapReduce and Hive (which was really an SQL to MapReduce converter) did, it was on the order of 10X to 15X faster than Hive. (We conjecture that Scuba is an order of magnitude faster again than Presto.) Complex queries across large datasets might take seconds to minutes on Presto compared to minutes to hours on Hive.
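The "several hundred thousand servers" estimate can be sanity-checked with back-of-envelope arithmetic. The Python sketch below assumes decimal units and a hypothetical 128 GB or 256 GB of usable memory per server; those per-server figures are our illustration, not numbers from Facebook.

```python
import math

# Back-of-envelope check: how many servers would hold 300 PB in memory?
# The per-server memory sizes used below are assumptions for illustration.

PB = 10**15  # decimal petabyte, in bytes
GB = 10**9   # decimal gigabyte, in bytes


def servers_needed(raw_bytes: int, compression_ratio: float,
                   mem_per_server_bytes: int) -> int:
    """Return how many servers are needed to hold the compressed data in RAM."""
    compressed = raw_bytes / compression_ratio
    # Round up: a partially filled server is still a server.
    return math.ceil(compressed / mem_per_server_bytes)


if __name__ == "__main__":
    for mem_gb in (128, 256):  # assumed memory per server
        n = servers_needed(300 * PB, 5.0, mem_gb * GB)
        print(f"{mem_gb} GB per server -> about {n:,} servers")
```

Under those assumed memory sizes, 300 PB compressed 5:1 comes to 60 PB, which works out to roughly 234,000 to 469,000 servers.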

We have always contended that this is not the complete test for performance. Cloudera had an SQL layer called Impala for HDFS, Pivotal had one called Hawq that was similar (and derived from the SQL query engine used in the Greenplum massively parallel database), and there were a few others. And while they could span a lot of data, they were slow as molasses compared to plain vanilla relational databases – with those traditional relational databases operating at a much smaller scale, of course. Here is the important thing: Impala and Hawq were tuned for adding SQL functionality to HDFS through MapReduce. Presto can talk directly to HDFS and has connectors to talk to all kinds of data sources, including file systems like HDFS and Cassandra (also invented at Facebook), object storage like Amazon Web Services S3, just about any relational database or document database or key/value store – and it can execute queries on that data, at its source, against all of these sources, at the same time to create that uber-database. This is the neat thing about Presto, which is becoming a universal SQL layer for all kinds of data, much as Tachyon/Alluxio is becoming a unifying caching layer for all kinds of databases and datastores. (You can run Alluxio underneath Presto to really boost performance, by how much remains to be seen.)
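To make the connector idea concrete, here is a toy sketch in Python. It is not Presto code and uses none of Presto's APIs. The `Connector` interface, the two stand-in sources (a SQLite database and a plain dictionary playing the part of a key/value store), and all table and column names are hypothetical. It only illustrates how one engine can expose differently stored data through a single row-oriented interface and then join across it.

```python
import sqlite3
from typing import Dict, Iterable, Iterator, List, Protocol

Row = Dict[str, object]


class Connector(Protocol):
    """Hypothetical minimal connector: yields a table's rows as dictionaries."""

    def scan(self, table: str) -> Iterable[Row]: ...


class SqliteConnector:
    """Exposes the tables of a relational database (SQLite stands in here)."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn

    def scan(self, table: str) -> Iterator[Row]:
        # The table name comes from trusted demo code, not from user input.
        cur = self.conn.execute(f"SELECT * FROM {table}")
        cols = [d[0] for d in cur.description]
        for rec in cur:
            yield dict(zip(cols, rec))


class KeyValueConnector:
    """Exposes a key/value store (a dict here) as rows with a 'key' column."""

    def __init__(self, tables: Dict[str, Dict[str, Row]]) -> None:
        self.tables = tables

    def scan(self, table: str) -> Iterator[Row]:
        for key, value in self.tables[table].items():
            yield {"key": key, **value}


def federated_join(left: Connector, left_table: str, left_key: str,
                   right: Connector, right_table: str, right_key: str) -> List[Row]:
    """Hash join across two connectors, performed in the engine's memory."""
    index: Dict[object, List[Row]] = {}
    for row in right.scan(right_table):
        index.setdefault(row[right_key], []).append(row)
    joined: List[Row] = []
    for row in left.scan(left_table):
        for match in index.get(row[left_key], []):
            joined.append({**match, **row})
    return joined


def demo() -> List[Row]:
    """Join orders in a relational source with customers in a key/value source."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE orders (order_id INTEGER, customer TEXT, total REAL)")
    db.executemany("INSERT INTO orders VALUES (?, ?, ?)",
                   [(1, "c1", 20.0), (2, "c2", 35.5), (3, "c1", 12.25)])
    kv = {"customers": {"c1": {"region": "EMEA"}, "c2": {"region": "APAC"}}}
    return federated_join(SqliteConnector(db), "orders", "customer",
                          KeyValueConnector(kv), "customers", "key")


if __name__ == "__main__":
    for row in demo():
        print(row)
```

The join logic never needs to know which kind of store a row came from. That is the property that lets a single SQL layer sit over many kinds of sources.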

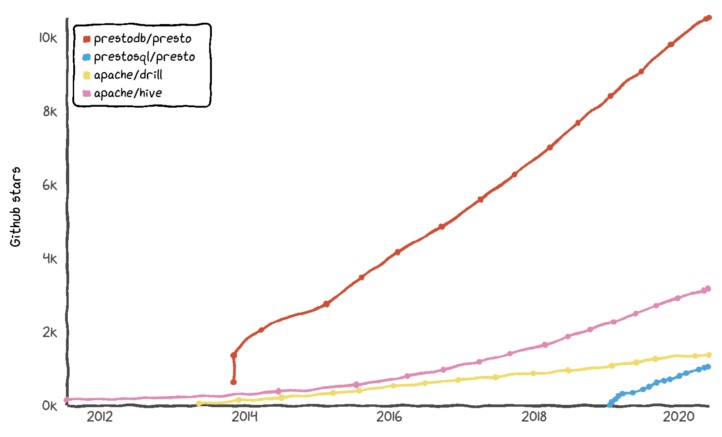

We don’t know how the performance gap between Presto and plain vanilla relational databases has closed in the intervening years, and we don’t know specifically how Presto matches up against Spark, the other in-memory system that also came out of the AMPLab at Berkeley. We will be getting to the bottom of that as soon as we can, with and without software such as Alluxio. But what we do know is that in the past seven years, Presto has been gaining momentum and is breaking into the big time. Perhaps twice, in fact. Here is where the story gets a little complicated.

When Rudin was talking about Presto and Scuba back in November 2013, that was also when the code was open sourced. In January 2019, after the code had been out for more than five years, the three original creators of Presto within Facebook – Martin Traverso, Dain Sundstrom, and David Phillips – created the Presto Software Foundation to steer development of Presto, creating a variant called PrestoSQL, which has a commercial entity supporting it called Starburst Data where these three people share the chief technology officer role. But then, in September 2019, Facebook, Twitter, Uber, and Alibaba, apparently not pleased with the input they had in the Presto Software Foundation set up by Traverso, Sundstrom, and Phillips, set up the Presto Foundation and slid it into the Linux Foundation to use its governance model. And Ahana, set up by Borkar and Mih, aims to be the commercializer of this particular variant of the Presto database, which has Facebook, Twitter, Uber, and Alibaba contributing their code changes to Presto.

In this particular situation, it is hard to say who forked the code first – does the company, Facebook, matter more than the three people who created the code? But the code bases for PrestoDB and PrestoSQL are probably drifting away from each other, and we have seen a lot of this in the database world for the past four decades. In the long run, there should only be one Presto community and one core code base.

Ahana, the company set up by Borkar and Mih, is clearly betting that the Presto Foundation and its PrestoDB variant are going to win in the long run, and they tell The Next Platform that in that long run, they expect the two Presto groups to bury the hatchet, just like Docker and CoreOS did on container formats, for the good of the community. It seems pretty clear to us that the Presto Foundation that has Facebook, Presto’s largest user, behind it will likely attract the most support.

All About Ahana

Borkar and Mih may not have worked at Google or Facebook, but they have their own specific database chops and more general experience in various layers of the IT stack.

Borkar, who is chief product officer at Ahana, got her bachelor’s degree in computer engineering at the University of Mumbai, her master’s degree in computer science at the University of California at San Diego, and her MBA at the University of California at Berkeley. After getting her master’s, she worked at IBM as a software engineer and eventually became the team leader integrating XML into Big Blue’s DB2 database and took over development of DB2 after that. She did a stint as senior product manager at NoSQL database provider MarkLogic, and then took the same role at NoSQL database supplier Couchbase, eventually adding the top sales and marketing jobs to her duties. She did a stint at GPU accelerated and in-memory database maker Kinetica and then took over as vice president of products at Alluxio in November 2018.

Mih and Borkar crossed paths a number of times before deciding to start their own company. He got his bachelor’s in electrical engineering at the University of California at San Diego, with a year of study abroad at Tokyo University. After that, he was a field sales engineer at AMD during the dot-com boom, and then was director of sales at EDA software supplier Cadence Design Systems for four years. Interestingly, he was vice president of worldwide software sales at Transitive, whose QuickTransit emulator was extremely dangerous in that it would allow any binaries to run on any CPU – Apple famously used it to run its PowerPC code on Intel Core processors when it ditched PowerPC chips. Mih was in charge of field operations for Mesosphere, the commercializer of the Mesos cluster controller that initially started at AMPLab and then was adopted by Twitter and Airbnb. Ten years ago, Mih took a job at Couchbase, where he met Borkar and where he ran worldwide sales, and then he got his first gig as chief executive officer at Aviatrix, a maker of software-defined networking middleware that runs on the major public clouds, masking their differences. In March 2019, Mih was tapped to be the CEO at Alluxio, where he worked with Borkar again and where they both became involved in the Presto Foundation set up by Facebook, and after talking about the potential for a different commercial entity behind PrestoDB, the two paired up and founded Ahana, celebrating by the leaf blower in Mih’s garage as the above picture shows.

Ahana is Sanskrit for “the first rays of the sun,” perhaps a metaphor for insight and certainly resonating with the HANA in-memory database created by application software giant SAP, which wants to have all of its customers move off of Microsoft SQL Server, Oracle whatever-name-they-use, or IBM DB2 at some point in the future.

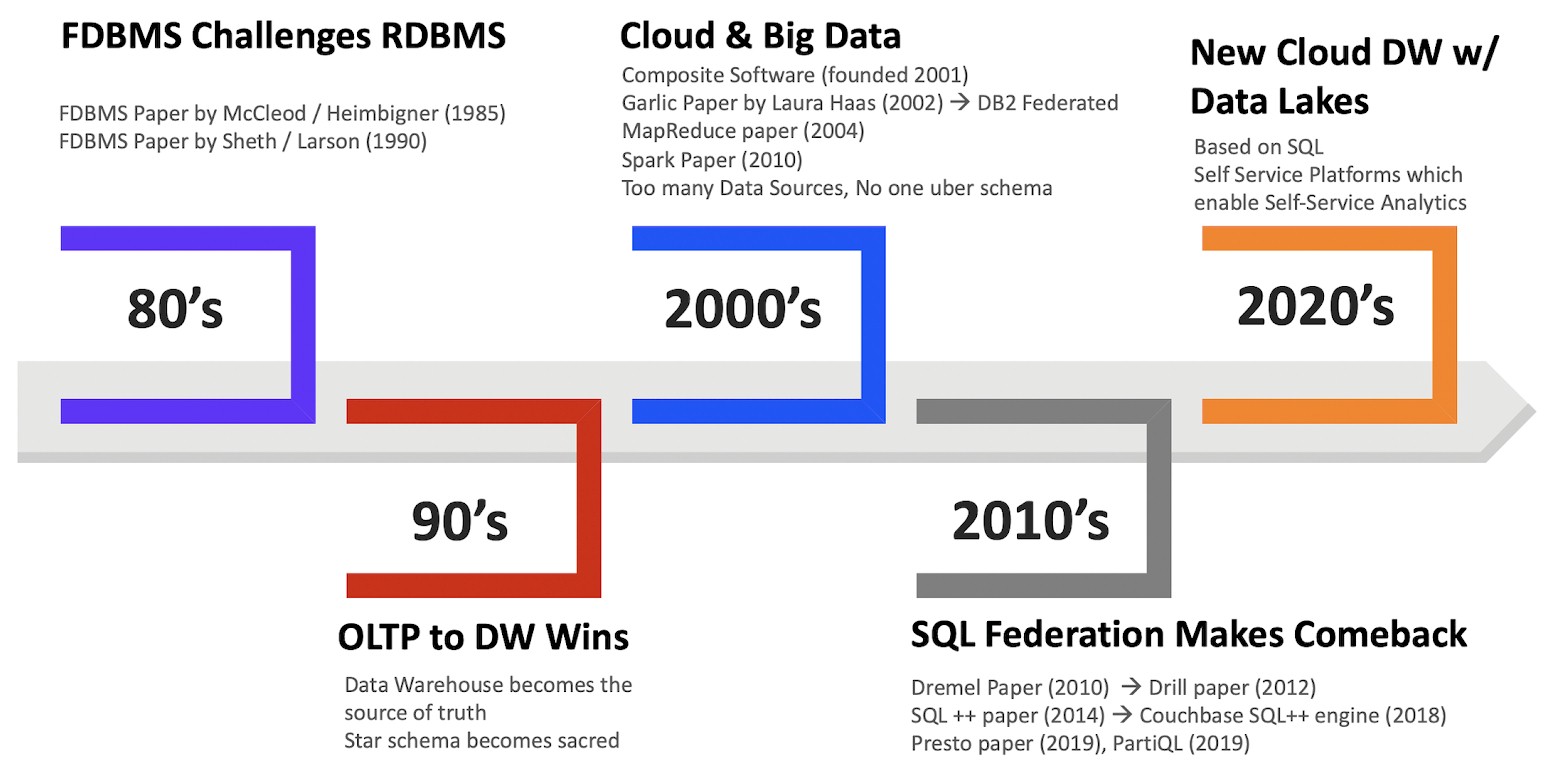

The first relational database in the world was IBM’s System R project in 1974, and among its many innovations was Structured Query Language, which allowed programmers to ask the databases complex questions that could not be easily done with flatfile datastores and hierarchical databases. (Google’s MapReduce is really a new twist on some very old ideas.) The System R concept, outlined by IBM Research database expert Edgar Codd in a groundbreaking paper in 1970, was prototyped at jet engine maker Pratt & Whitney and first commercialized in 1978 in the IBM System/38 minicomputer. Larry Ellison, Bob Miner, and Ed Oates might have founded Software Development Laboratories in 1977 and put their Oracle database in the field in 1979, beating IBM’s DB2 implementation of the relational database for the mainframe to market by three years, but they did not actually beat IBM to market, as the legend, which is a misconception, has it. It is safe to say that the lead that Ellison’s Oracle got over the mainframe, we think largely from his decision to code Oracle in C instead of assembly language as IBM did with DB2, was a pole position Oracle, as the company was eventually renamed, has never given up. Until now, perhaps, with so many database piranhas – not to mention SAP HANA and now Ahana – nipping at it and trying to take bigger bites of its core database business.

It took hardware a long time to catch up with relational databases, but eventually, through many innovations, these RDBMSes not only became usable, but pervasive. Some might have even said relatively easy. But scale problems persisted, and that is when federated DBMS software first came into being to try to deal with scale issues. You could only scale up so far within a single machine, so you had to scale out across a cluster of machines. IBM and Oracle made their money mostly on scale up machines, and there was plenty of money to be made selling big iron in the 1990s. But with the advent of the hyperscalers during the dot-com boom in the late 1990s and early 2000s, even big iron could not handle their database needs. And so, federation started to come back around on the guitar one more time. Once again, it was Big Blue that led the way, with Laura Haas of IBM’s Silicon Valley Lab revealing how IBM’s “Garlic” federation extensions to DB2 worked in 2002, which suggested that it would be interesting to unite both structured and unstructured data in the SQL processing.

“So the problem was not yet about different data types or different types of databases,” Borkar explains to The Next Platform. “The federated database had a query engine that would run the query, and push it down to the databases. But what happened with big data was a new kind of revolution, where storage, at that point HDFS and increasingly S3 cloud stores and similar technologies, were spread all over the place. Companies are looking to get insights out of that data, and there has been 30 years of research to optimize databases with all sorts of techniques in the code optimizer, which are getting added to Presto to a certain extent.”

The big problem that Presto solves is data movement. Because it is a distributed database query engine that can ride atop other databases and datastores, you can query data in place across those disparate data sources and aggregate answers back in the Presto in-memory database. You can run Presto in a homogeneous fashion – Presto atop HDFS or Cassandra, for instance – but you don’t have to do that. Google proved that with Dremel in 2010 and Couchbase did it a few years later with SQL++, and Presto adopted many of these ideas, but perhaps the most powerful one is a very old lesson from very old databases, and one that was in databases from the beginning and predates Google’s MapReduce by decades: Move the compute to the data, not the data to the compute.
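The principle of moving the compute to the data can be shown with a small Python sketch. It is a hypothetical illustration, not how Presto is implemented: two in-memory SQLite databases stand in for remote data sources, and the `clicks` table and its columns are made up. The sketch compares shipping every row to a coordinator against pushing a partial aggregation down to each source and merging only the small partial results.

```python
import sqlite3
from collections import Counter
from typing import Dict, List, Sequence, Tuple


def make_source(rows: Sequence[Tuple[str, int]]) -> sqlite3.Connection:
    """Create a stand-in 'remote' source holding a hypothetical clicks table."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE clicks (country TEXT, n INTEGER)")
    db.executemany("INSERT INTO clicks VALUES (?, ?)", rows)
    return db


def pull_everything(sources: List[sqlite3.Connection]) -> Tuple[Dict[str, int], int]:
    """Naive plan: move every row to the coordinator, then aggregate there."""
    totals: Counter = Counter()
    rows_moved = 0
    for db in sources:
        for country, n in db.execute("SELECT country, n FROM clicks"):
            totals[country] += n
            rows_moved += 1
    return dict(totals), rows_moved


def push_down(sources: List[sqlite3.Connection]) -> Tuple[Dict[str, int], int]:
    """Pushdown plan: each source aggregates locally; only partial sums move."""
    totals: Counter = Counter()
    rows_moved = 0
    query = "SELECT country, SUM(n) FROM clicks GROUP BY country"
    for db in sources:
        for country, partial_sum in db.execute(query):
            totals[country] += partial_sum
            rows_moved += 1
    return dict(totals), rows_moved


if __name__ == "__main__":
    a = make_source([("US", 1)] * 1000 + [("DE", 1)] * 500)
    b = make_source([("US", 2)] * 200 + [("IN", 3)] * 300)
    print("pull everything:", pull_everything([a, b]))
    print("push down:      ", push_down([a, b]))
```

Both plans produce the same totals, but the pushdown plan moves one row per group per source instead of every raw row. The gap widens as the underlying tables grow.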

Borkar and Mih say that the real punishment that organizations dealing with big data are wrestling with is not storing the data, but that 60 percent of the time, data scientists and system administrators are moving and pipelining data from one place to another so some system or another can chew on it.

What Ahana is trying to foster is what Mih calls data hackers and what Gartner calls citizen data scientists, and the idea is that Presto can be a unifying layer that allows analysts to ask questions of a portion of datasets – be they on S3, HDFS, relational databases, whatever – to test a hypothesis first and easily, and then set their query loose on a much larger dataset.

“Companies can interactively work with data where before they had to get engineers to look into the details and do the pipelines, which is costly,” says Mih. “And it costs again because data is duplicated all over the place, in a data warehouse or whatever. We call them data hackers because they are hacking data and getting insights. They just want to get answers, and they are struggling to do this with the old way of doing it with massive extract, transform, load operations. We think the modern way of doing this is a federated architecture, and the best implementation of this is Presto.”

Aside from the obvious competition with Google’s BigQuery – stay tuned, we are putting together a feature on the tenth anniversary of Dremel and BigQuery – there is another potential rival, and that is the Spark in-memory system that came out of AMPLab.

“Presto is an in-memory database,” explains Borkar. “You can spill to disk or flash, but it works best when it is in memory. That is fundamentally different from Spark, which between processing phases has to persist data. That is one of the reasons why Spark is slower than Presto, which creates a query plan and as it is executed the intermediate datasets are stored in memory. You can also spill Presto data to disk or flash if you don’t have enough memory, but that is not optimal.”

While Ahana has been launched, Borkar and Mih are not yet ready to talk about how they will package up PrestoDB and what extensions they plan to make to it. But what we do know is that they will offer a commercially supported implementation of Presto and it will happen before the year is done.
