Indiana University is the proud owner of the first operational Cray “Shasta” supercomputer on the planet. The $9.6 million system, known as Big Red 200 to commemorate the university’s 200th anniversary and its school colors, was designed to support both conventional HPC and AI workloads. The machine will also distinguish itself in another important way, being one of the world’s first supercomputers to employ Nvidia’s next-generation GPUs.

We will get to that in a moment.

Although Big Red 200 is the first Shasta system to be up and running, it is one in a pretty long line of machines that Cray, now a unit of Hewlett Packard Enterprise, hopes to deploy in the coming decade based on this architecture. Notably, Shasta was tapped by the Department of Energy to be the basis of its first three exascale systems. Later this year, Berkeley Lab will be the recipient of a pre-exascale Shasta system, in this case, the NERSC-9 machine, code-named “Perlmutter.” Big Red 200 will have just a fraction of the capacity of those super-sized systems, but the use of Nvidia’s upcoming GPUs will make it a unique resource for anyone with access to the machine.

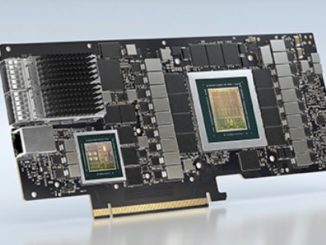

Those GPUs are expected to be plugged into Big Red 200 later this summer, according to Brad Wheeler, the university’s vice president for information technology and chief information officer. The exact nature of those GPUs is unknown, which is understandable, inasmuch as Nvidia has not announced they are even on the way. The most likely explanation is that they will be the next-generation Tesla GPUs based on the upcoming “Ampere” architecture.

Our best guess is that Ampere GPUs will be unveiled in March at Nvidia’s GPU Technology Conference, which suggests they will be ready to ship in time for their summer rendezvous at IU. Note that Berkeley Lab’s Perlmutter system is also in line for these next-generation Nvidia chips in the same general timeframe as the Big Red 200 upgrade.

According to Wheeler, the addition of the new GPUs was something of a fluke. The original plan was to outfit the system with Nvidia V100 GPUs, which would have brought its peak performance to around 5.9 petaflops. But as they were getting ready to receive the system, an opportunity presented itself to wait a bit longer and move up to Nvidia’s newer technology. “At the last minute, we decided to take the machine in two phases,” explained Wheeler.

The first phase of the system – the one currently up and running at IU – consists of 672 dual-socket nodes powered exclusively by CPUs, in this case, “Rome” Epyc 7742 processors from AMD. (Yes, AMD ate Intel’s lunch yet again in another high-profile HPC deal.) The second phase of the new IU supercomputer will commence this summer and will bring additional AMD Rome nodes online, each equipped with one or more of the next-generation Nvidia GPUs. When all is said and done, Big Red 200 is expected to deliver close to 8 petaflops.
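
For a rough sense of how much of that total the CPU-only first phase might account for, here is a back-of-envelope sketch. The node and socket counts come from the figures above; the core count, base clock, and FP64 operations per cycle are our assumptions about the Epyc 7742, not numbers supplied by IU or Cray, so treat the result as an estimate only.

```python
# Back-of-envelope peak FP64 estimate for the CPU-only first phase.
NODES = 672             # from the article
SOCKETS_PER_NODE = 2    # from the article (dual-socket nodes)
CORES_PER_SOCKET = 64   # assumption: Epyc 7742 core count
BASE_CLOCK_GHZ = 2.25   # assumption: Epyc 7742 base clock
FLOPS_PER_CYCLE = 16    # assumption: FP64 ops per core per cycle on "Rome" cores


def cpu_peak_petaflops(nodes, sockets, cores, ghz, flops_per_cycle):
    """Return theoretical peak in petaflops: cores x clock x flops per cycle."""
    total_cores = nodes * sockets * cores
    gigaflops = total_cores * ghz * flops_per_cycle
    return gigaflops / 1e6  # 1 petaflops = 1e6 gigaflops


phase_one_pf = cpu_peak_petaflops(
    NODES, SOCKETS_PER_NODE, CORES_PER_SOCKET, BASE_CLOCK_GHZ, FLOPS_PER_CYCLE
)
print(f"Estimated phase-one CPU peak: {phase_one_pf:.2f} petaflops")
```

Under these assumptions the CPU partition works out to roughly 3.1 petaflops at base clock, which would leave the bulk of the expected total to the GPU-equipped nodes arriving in the second phase.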

By taking the two-phase approach and waiting a few more months, the university gained an additional two petaflops of peak performance, even though, according to Wheeler, it ended up buying fewer GPUs. (The newer silicon is expected to deliver 70 percent to 75 percent more performance than the current generation.) Perhaps more importantly, having the latest and greatest GPUs will help attract additional research dollars to the university, especially for AI-enabled research.
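
The arithmetic behind that trade-off can be sketched as follows. The 5.9 and 8 petaflops figures and the 70 to 75 percent uplift are the ones quoted here; the assumption that the uplift applies uniformly to peak throughput per GPU is ours, and the function name is purely illustrative.

```python
# Compare the original V100 plan with the two-phase plan.
ORIGINAL_PLAN_PF = 5.9   # from the article: peak with V100 GPUs
TWO_PHASE_PLAN_PF = 8.0  # from the article: "close to 8 petaflops"

gain_pf = TWO_PHASE_PLAN_PF - ORIGINAL_PLAN_PF
gain_fraction = gain_pf / ORIGINAL_PLAN_PF


def relative_gpu_count(uplift):
    """Fraction of the original GPU count needed to match its aggregate
    throughput, assuming each new GPU is (1 + uplift) times as fast."""
    return 1.0 / (1.0 + uplift)


print(f"Gain: {gain_pf:.1f} petaflops ({gain_fraction:.0%} over the original plan)")
for uplift in (0.70, 0.75):
    share = relative_gpu_count(uplift)
    print(f"At +{uplift:.0%} per GPU, {share:.0%} of the GPUs match the old total")
```

In other words, if each new GPU really is 70 to 75 percent faster, a little under 60 percent of the original GPU count would match the aggregate GPU throughput of the V100 plan, which is consistent with buying fewer accelerators and still coming out ahead.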

Speaking of which: The university is particularly interested in pointing out the artificial intelligence capabilities of the new machine, claiming that it will be “the fastest university-owned AI supercomputer.” Of course, until the new GPUs are unveiled, we won’t really know the extent of those capabilities, but they are almost sure to be more impressive than those of the current V100, which is certainly no slouch in that regard. Although Big Red 200 is expected to deliver about eight times the peak performance of its predecessor, Big Red 2, Wheeler told us that for AI work, it will be “a far bigger jump.”

That is because Big Red 2, which was installed in 2013, was equipped with the now-ancient “Kepler” Tesla K20 GPU accelerators. That processor topped out at 1.18 FP64 teraflops and 3.52 FP32 teraflops. It had no specialized logic for machine learning, such as the Tensor Cores employed in the current “Volta” GPUs, or even FP16 capability, which is coming to many different compute engines in both its raw form and the bfloat16 flavor that was invented by Google and is increasingly in favor. Big Red 2 was dismantled in December 2019 to make room for its successor, after having served IU researchers for seven years.

The new GPUs are certain to get a workout at IU. Researchers there are already applying AI techniques in areas like medical research and molecular genetics, cybersecurity, fraud prevention, and neuroscience, to name a few. Of course, the new system will also be expected to support more conventional HPC workloads, including the usual suspects such as climate modeling, genomic analysis, and particle physics simulations. Depending on the code employed, the GPUs could come in handy in these domains as well. “There were so many things being done algorithmically to really start to enable GPU use across a range of research disciplines and methods,” notes Wheeler.

To make up for the slight delay in GPU deployment, the university is upgrading its Carbonate cluster with 96 additional Tesla V100 accelerators. According to the system’s webpage, it was previously outfitted with 16 P100 GPUs and 8 V100 GPUs, and it was the main resource for IU researchers who needed modern AI hardware. The additional V100s will provide some extra capacity until phase two of Big Red 200 comes online.
