Believe it or not, Cisco Systems has a bunch of customers for its UCS blade and rack servers that are in the gaming industry, which has its share of near-hyperscale players who have widely geographically distributed clusters spread around the globe so players can get very low latency access over the Internet to games running on that infrastructure. These gaming customers wanted something other than standalone rack servers or blade servers that converge compute and networking, so Cisco built it for them. And now you can buy it, too.

There is a kind of resurgence going on in modular servers these days. These machines, which are somewhere between a standard rack server that stands alone and a blade server that has a shared chassis, I/O midplane, and power supplies, account for somewhere around 12 percent of server revenues according to the latest data from IDC and are growing faster than the market at large. Which is saying a lot considering the explosive growth in both shipments and revenues in the first quarter of this year. The most popular modular design – by far – is a standard 2U chassis that has four independent server sleds in it, which share power, cooling, and storage but run independently of each other with their own networking and I/O. As far as we know, this four-node modular machine was first created by Supermicro back in February 2009 with its SuperBlade Twin2 line, just ahead of the “Nehalem” Xeon 5500 processor launch that set Intel back on the path to dominance in the datacenter for a decade. Supermicro invented the half-width motherboard in April 2008, allowing two nodes to sit side-by-side in a single 1U rack server, called the SuperBlade Twin, and setting the stage for the four-node modular machine, which was more widely bought because it had the same compaction but allowed for more storage in each unit thanks to the additional height of the 2U chassis. That additional storage allowed for RAID 5 data protection, an important thing in a world that had not yet perfected distributed object storage and erasure coding.

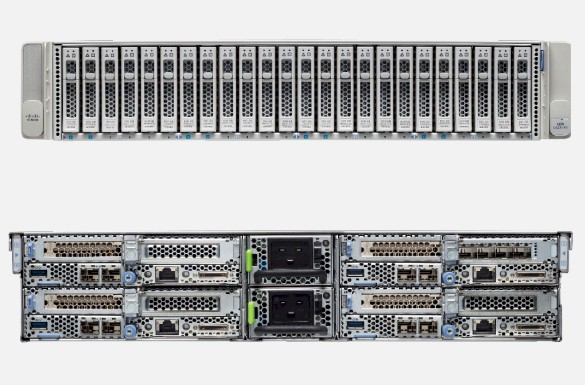

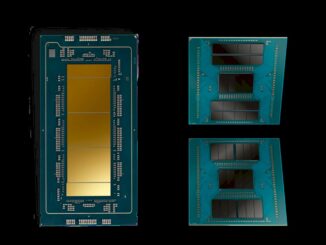

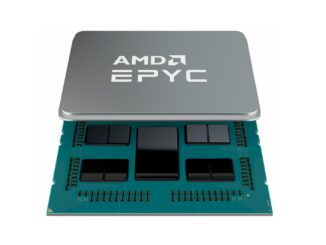

Gaming customers, along with their peers in the traditional HPC simulation and modeling markets as well as video rendering and streaming, are not just looking for server density, but are also interested in cramming as many cores as possible into a given area. They are always doing the math on price per unit of performance per unit of space per watt. That is why the new UCS C4200 modular servers being launched this week were designed around the “Naples” Epyc 7000 series processors from AMD, Todd Brannon, director of product marketing for the UCS line at Cisco, tells The Next Platform. While modular machines that have the same basic shape as the UCS C4200 have been around for a decade, the reason why the time is right for Cisco to do it now – aside from the literal demand from customers for a modular machine that has the same UCS Manager on-premises and Intersight cloud management tools as the UCS blade and rack servers – is that there is now enough compute, memory, and I/O in these machines for them to be useful for a wider variety of workloads.

“As you well know, little inefficiencies at the node level get writ large when you operate at scale,” says Brannon. “So you have to let customers dial in the performance and the features they need for a specific workload in any platform. For the gaming companies, who pushed us to do this product, it is really about predictability. If they have a new title that is coming out, and there is some high demand in some part of the world that they were not expecting, they need to get some co-lo space and rapidly stand up capacity and they need to stamp out infrastructure in a programmatic way. With Intersight, we can distribute systems globally and manage them at a node level, independently, through the cloud, and they don’t even have to have nodes connected to the UCS fabric. They can see their whole server estate from anywhere. As multi-node servers have media that is denser and faster, particularly with NVM-Express, they have become more appropriate for a wider range of workloads. We see the multi-node form factor as something that is now going to come into more mainstream environments.”

Cisco got a proportionately large chunk of the fairly mature blade server market when it entered that arena in 2009, roughly a decade after the first blade machines appeared, and it hopes to repeat that success with modular machines even though it is, technically speaking, pretty late to the party. A few years back, Cisco tried to do composable and modular machines with the UCS M-Series, and while these were interesting, they were a bit ahead of the enterprise market and therefore the company mothballed them. So you can understand why Cisco waited to see serious demand pull and an expanding market before it jumped in with modular machines. Otherwise, all it is doing is getting into a price war with Supermicro, Dell, and Hewlett Packard Enterprise.

Another reason why Cisco is putting out the UCS C4200 is that there is also a play for these modular machines at the edge, where a four-node machine could end up being a baby distributed datacenter, sitting in a remote location like a 5G base station or a retail store, doing a lot of local processing and shipping summary data back up the network to the real datacenter. (We discussed our philosophy on edge computing recently, and it is something that we all have to pay attention to as compute and storage are getting more dispersed on the network.)

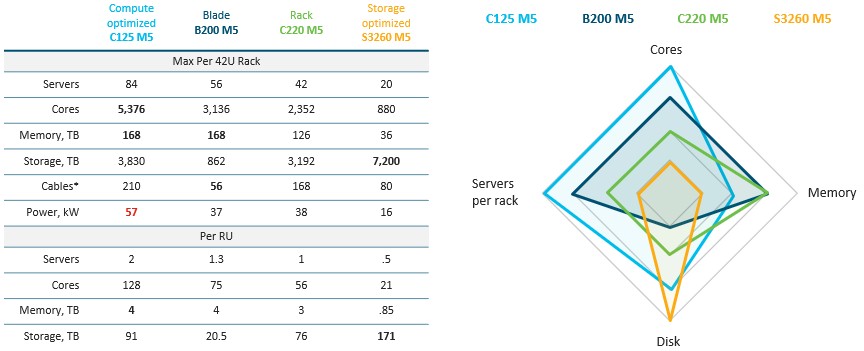

With various kinds of HPC systems – and gaming clusters and render farms are a kind of HPC, even if they are not using MPI to share work – the power density is more of a limiting factor than the compute density. Delivering a lot of power to a rack is a challenge, and getting it back out as heat is an equally large challenge, so cramming too much compute into a rack is not practical.

“Our customers need to take a holistic approach to density,” says James Leach, director of platform strategy for unified computing, who joined Cisco in 2010 after two decades at Compaq and HPE. “As for power density, we are right up against where most of our customers want to be. Over the past several years, power density has flipped over to become the driving factor, rather than physical compute density. The modularization is important, but getting more dense on the power doesn’t help. At some point, companies might as well build datacenters that are four feet high because they can’t fill their racks anyway.” And for those who have occasional needs for increased performance for specific processors or nodes and therefore spikes in thermals, the power capping features of the integrated management controller that is on all Cisco machines – B-Series blades and C-Series rack, storage, and now modular nodes – can be used to allow a little extra here by capping a little power there.

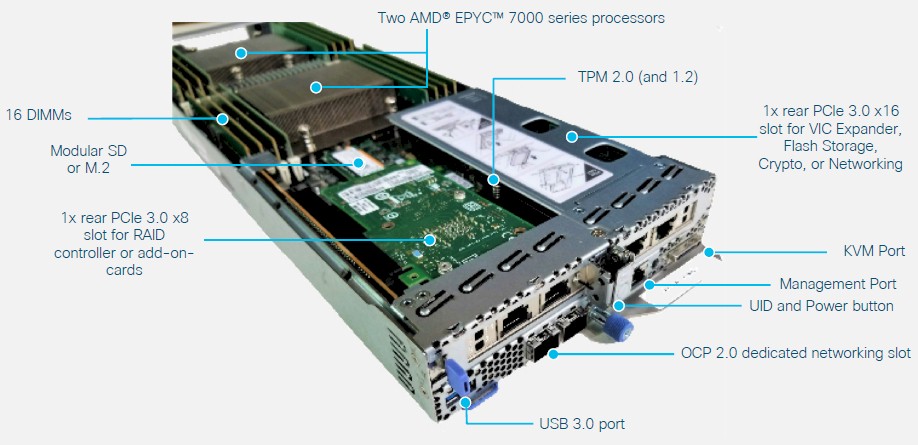

The C125 M5 server sled that slides into the UCS C4200 chassis is designed to host two Epyc 7000 series processors, which can be no hotter than 170 watts each. Notably that includes the 32 core Epyc 7501, the 24 core Epyc 7401 and 7401P, and the 16 core Epyc 7351, 7351P, 7301, and 7281. Cisco has the option of creating a true single-socket sled, but for customers who want a single socket machine now, they can drop in one of the 180 watt Epycs and then have another 160 watts of extra thermal room to play with to add other peripherals.
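The arithmetic behind that single-socket option can be sketched as follows. This is a back-of-the-envelope illustration only, assuming the sled's CPU thermal budget is simply two sockets at the 170 watt ceiling cited above; the constant and function names are ours for illustration, not anything Cisco publishes.

```python
# Back-of-the-envelope thermal headroom for a C125 M5 sled.
# Assumption: the sled's CPU thermal budget equals two sockets at the
# 170 watt per-socket ceiling quoted in the article. All names here are
# illustrative, not Cisco's.

SOCKETS_PER_SLED = 2
MAX_WATTS_PER_SOCKET = 170


def thermal_headroom(cpu_watts, sockets_populated=1):
    """Return the watts left in the sled's CPU budget after populating sockets."""
    budget = SOCKETS_PER_SLED * MAX_WATTS_PER_SOCKET
    return budget - cpu_watts * sockets_populated


if __name__ == "__main__":
    # One 180 watt Epyc in a two-socket sled.
    print(thermal_headroom(180))
```

Under that assumption, a single 180 watt part leaves the 160 watts of room mentioned above, while two 170 watt parts use the budget completely.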

At the moment, each sled has six of the 24 storage drives assigned to it statically, but eventually, the drives will be dynamically configurable. For the moment, two of the six drives can be equipped with NVM-Express flash drives, which chop out the CPU and driver overhead of the storage stack and drop the effective latency and increase the effective throughput of the flash to something a lot closer to rated specs.

The C125 M5 sled has 16 memory slots, eight per socket, and can be equipped with up to 2 TB if you can afford to buy 128 GB memory sticks. (No one can afford this.) The memory can run at speeds up to 2.67 GHz. The node has two PCI-Express 3.0 slots: one x8 slot that can be used for a RAID controller or other add-ons and one x16 slot that can be used for the UCS fabric virtual interface controller (VIC) or other storage, networking, or cryptographic coprocessor cards. The VIC card currently supports 10 Gb/sec or 40 Gb/sec links, and Cisco is working on variants of the VIC that can support 10 Gb/sec or 25 Gb/sec in one model and 100 Gb/sec in another. The company offers network interface cards that reach out in traditional fashion to top of rack switches that run at 25 Gb/sec, 40 Gb/sec, 50 Gb/sec, or 100 Gb/sec speeds as well. The sled also has an M.2 flash stick for local storage, and this is usually employed to host the operating system on servers that have this today. Over time, Cisco can create sleds that enclose GPU or FPGA accelerators, and hints that it might do these as sidecars within the C4200 enclosure for the CPU nodes.
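For readers doing the density math themselves, here is a rough sketch of how the per-sled and per-chassis totals fall out of the figures quoted in this article. It assumes a fully populated chassis of four two-socket sleds, 32-core Epyc 7501 processors, and 128 GB memory sticks; the names are illustrative only.

```python
# Rough per-sled and per-chassis totals from figures quoted in the article.
# Assumptions: four sleds per C4200 chassis, every socket and memory slot
# populated, and drives split evenly across sleds. Names are illustrative.

SLEDS_PER_CHASSIS = 4
SOCKETS_PER_SLED = 2
DIMM_SLOTS_PER_SLED = 16
DRIVES_PER_CHASSIS = 24


def sled_memory_gb(dimm_gb=128):
    """Memory per sled in GB with every slot filled."""
    return DIMM_SLOTS_PER_SLED * dimm_gb


def chassis_cores(cores_per_cpu=32):
    """Total cores in one 2U chassis with every socket filled."""
    return SLEDS_PER_CHASSIS * SOCKETS_PER_SLED * cores_per_cpu


def drives_per_sled():
    """Drives statically assigned to each sled."""
    return DRIVES_PER_CHASSIS // SLEDS_PER_CHASSIS


if __name__ == "__main__":
    print(sled_memory_gb(), "GB per sled")
    print(chassis_cores(), "cores per chassis")
    print(drives_per_sled(), "drives per sled")
```

With those inputs, the sled tops out at 2,048 GB (the 2 TB cited above), the chassis holds 256 cores in 2U, and each sled gets six drives.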

The key thing for UCS customers is that the C125 M5 sleds in the C4200 enclosure allow 128 percent more cores than the densest B-Series blade servers, 50 percent more servers per rack than the plain vanilla C-Series rack servers, and 20 percent more storage than the densest C-Series rack servers, all while keeping the same UCS and Intersight management.

The C125 M5 nodes are aimed at generic virtual machine and container workloads as well as electronic design automation, seismic analysis and reservoir modeling, CAD and crash simulation, weather modeling, genomics, computational fluid dynamics, online gaming, fraud detection, and high frequency trading – workloads where the need for compute is higher than the need for storage or networking. We also think that Cisco will eventually put its HyperFlex hyperconverged storage, based on Springpath, on clusters of these machines, but the company is making no promises as yet. One more interesting thing: While there is no reason Cisco can’t make a Xeon SP variant of the modular compute sled – call it the C120 M5, perhaps – there is no current plan to do so. This speaks volumes about the value proposition that AMD has been espousing for the Epyc chips and that Cisco is seeing come to fruition in parts of its UCS customer base.

For memory intensive workloads, as well as for machine learning workloads with fairly large numbers of GPUs, Cisco recommends its B480 M5 and C480 M5 four-socket Intel Xeon SP blade and rack machines, respectively. Data intensive workloads such as media streaming, content distribution, and object storage go on the S3260 M5 rack server, which has a lot more slots for media devices (either spinning disk or flash). The two-socket B200 M5 blade and C220 M5 and C240 M5 rack servers based on the Xeon SP are aimed at more mainstream workloads such as virtual desktop infrastructure, distributed databases, application development, enterprise applications that ride on databases, and data analytics with a smattering of GPU accelerated machine learning.

The UCS C4200 chassis and C125 M5 node will be orderable starting in July, and will ship in the third quarter. Pricing was not yet available.
