As an economic powerhouse and with a rising military and political presence around the world, you would expect, given the inherent political nature of supercomputing, that China would have multiple and massive supercomputing centers as well as a desire to spread its risk and demonstrate its technical breadth by investing in many different kinds of capability class supercomputers.

And this is precisely what China is doing, including creating its own offload accelerator, based on digital signal processors. This Matrix2000 DSP accelerator, which was unveiled at the ISC16 supercomputing event last year and which is being created by the National University of Defense Technology, is finally ready and is being deployed in the upgraded Tianhe-2A system that the Chinese military built initially with Intel Xeon processors and Xeon Phi coprocessors. The Matrix2000 DSP accelerator came into being because the US government halted shipments of Intel’s Xeon Phi coprocessors to China several years ago, since they are being used for strategic military purposes rather than for general scientific research.

The upgraded Tianhe-2A was unveiled at the International HPC Forum 2017 event this week, which is being hosted in the supercomputer’s hometown of Guangzhou in southern China.

A Three Horse Race, And Then Some

China is being careful to not become wholly dependent on the Shenwei family of massively multicore processors for its future HPC systems and which are currently used in the TaihuLight system that sits atop the Top 500 supercomputer rankings. The homegrown SW26010 processor that is at the heart of the TaihuLight machine has 260 cores running at 1.45 GHz and delivers about the same performance, at 3 teraflops double precision, as the “Knights Landing’ Xeon Phi 7000 series from Intel. TaihuLight, which is installed at the National Supercomputing Center in Wuxi, has 40,960 single-socket nodes in total, and after a lot of tuning since it first came out in June 2016, it is rated at a peak performance of 125.4 petaflops at double precision floating point and delivers a little over 93 petaflops of sustained performance on the Linpack parallel Fortran benchmark test that is used to rank supercomputers on the Top 500 list.

In fact, as we have previously reported, China is investing in three different architectures to push into the pre-exascale performance range, and including a hybrid approach that marries traditional processors to offload accelerators. Supercomputing centers in the United States are making similar investments and similar architectural choices, with Intel’s Xeon Phi line representing the many-core option for compute and IBM’s Power9 and Nvidia’s Tesla GPU accelerators representing the main hybrid alternative. (Some big HPC centers are using a mix of Xeon and Xeon Phi processors, with no offload, as their setup, but that is not a single architecture so much as two architectures side by side.) For China, the hybrid investment is in the Tianhe-2A system, which uses Xeon processors and the Matrix2000 DSP accelerators; at some point, China could switch the CPUs in the Tianhe-2A follow-on to a native processor of its choice, perhaps an offshoot of its “Godson” MIPS variant with an X86 compatible layer or perhaps an ARM processor. But interestingly, the Matrix2000 DSP accelerator has not been chosen, as far as we know, as one of the exascale architectures.

At least not yet.

NUDT has, according to James Lin, vice director for the Center of HPC at Shanghai Jiao Tong University, who divulged the plans last year, is building one of the three pre-exascale machines, in this case a kicker to the Tianhe-1A CPU-GPU hybrid that was deployed in 2010 and that put China on the HPC map. This exascale system will be installed at the National Supercomputer Center in Tianjin, not the one in Guangzhou, according to Lin. This machine is expected to use ARM processors, and we think it will very likely use Matrix2000 DSP accelerators, too, but this has not been confirmed. The second pre-exascale machine will be an upgrade to the TaihuLight system using a future Shenwei processor, but it will be installed at the National Supercomputing Center in Jinan. And the third pre-exascale machine being funded by China is being architected in conjunction with AMD, with licensed server processor technology, and which everyone now thinks is going to be based on Epyc processors and possibly with Radeon Instinct GPU coprocessors.

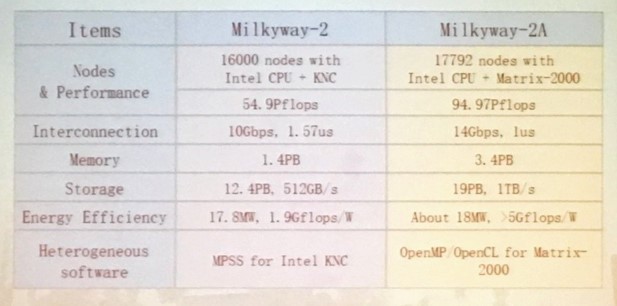

Kai Lu, a professor at NUDT, talked about the Tianhe-2A system. This is a kicker to the Tianhe-2 system, which is currently the second-fastest machine in the world, with 54.9 petaflops of peak and 33.9 petaflops sustained performance on Linpack; it uses a mix of Xeon E5 processors and Xeon Phi 31S1P coprocessors from Intel. With the Tianhe-2A, NUDT is swapping out the Xeon Phi coprocessors, which didn’t have very much oomph, for the Matrix2000 DSP accelerators. (Lin tweeted out a lot of Lu’s presentation slides, as did Satoshi Matsuoka, who attended the event as well and who is the professor at Tokyo Institute of Technology in charge of the Tsubame 3.0 hybrid CPU-GPU supercomputer, among many other machines.)

Here is the slide showing the comparison between Tianhe-2, which was the fastest supercomputer in the world for two years, and Tianhe-2A, which will be vying for the top spot when the next list comes out we suspect:

As you can see, every part of this system has been tweaked and improved, it is not just a matter of slapping new DSP accelerators in and using an OpenMP and OpenCL software stack to program the accelerators. The architecture of the Tianhe-2A machine pairs two Xeon processors with a pair of accelerators, and then puts two of these nodes onto a single motherboard. (Lots of other supercomputers do it this way with other components.) The machine has 199 racks in total, with 139 of them being used for compute, 24 racks being used for the “Galaxy” TH-Express-2+ proprietary interconnect, and 36 racks for storage and I/O subsystems.

The compute nodes on the Tianhe-2A system have, at 192 GB, twice the main memory of the setup used in the “Ivy Bridge” Xeon E5 nodes with the Tianhe-2 system. The node count has been increased by 11 percent with the upgrade, which boosts performance, but so does the fact that the Matrix2000 DSP accelerator packs more than twice the raw punch of the “Knights Corner” Xeon Phi it replaces in the system. The Matrix2000 DSP accelerator has its own memory as well, just like the Xeon Phi did, but Lu did not reveal (as far as we know) how much memory was on the DSP accelerator, but the goal when the Matrix2000 was revealed back in July 2015 was to put 32 GB or 64 GB of High Bandwidth Memory on the accelerator. We very much doubt that has happened, with Nvidia at 16 GB of HBM2 memory with its “Volta” Tesla GPU accelerators. As for storage, the Tianhe-2A upgrade has a 19 PB parallel file system which can move data at 1 TB/sec, a significant upgrade from the 12.4 PB file system that did 512 GB/sec. (We presume this is a Lustre file system.)

As expected, this new Galaxy interconnect now uses 14 Gb/sec signaling instead of the 10 Gb/sec used in the prior TH-Express 2 interconnect, which yields 112 Gb/sec ports instead of the 80 Gb/sec ports used in the prior system. The latency on the port hops has also been reduced, from 1.57 microsecond to 1 microsecond, and the Galaxy switches now have 24 ports instead of 16 ports with the prior switch, and the aggregate switching bandwidth has been boosted to 5.4 TB/sec per network ASIC.

The goal with the Tianhe-2A upgrade was to get a machine with more than 100 petaflops of performance, and to get to that scale of machine with a lot less energy per flops. NUDT did not quite get there on the first part of that goal, with the system coming in at 94.97 petaflops of peak performance, compared to 54.9 petaflops peak with the predecessor machine. But the energy efficiency is way up. Tianhe-2 consumed 17.8 megawatts, and delivered a performance efficiency of 1.9 gigaflops per watt. Tianhe-2A is burning a tiny bit more juice at 18 megawatts, and is yielding 5.3 gigaflops per watt of power efficiency.

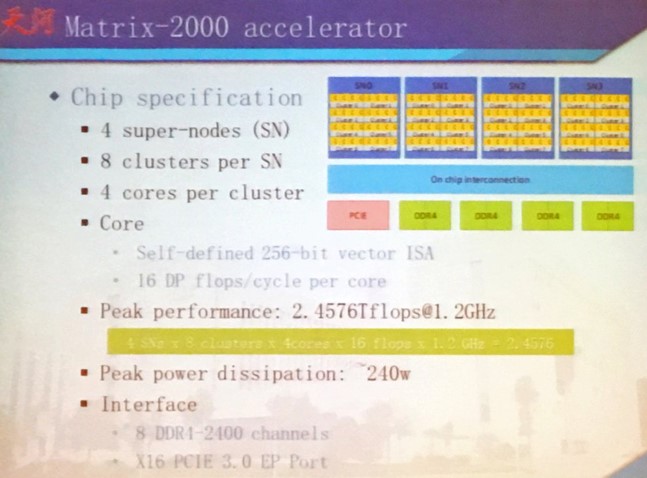

The key to that efficiency is, of course, the Matrix200 DSP accelerator. When it was unveiled as a project two years ago, the plan was to have a 64-bit compute device that ran at around 1 GHz and that delivered around 2.4 teraflops at double precision and 4.8 teraflops at single precision, all within a power envelope at around 200 watts. (As far as we know, the Matrix2000 DSP does not support half precision math, but a future variant could.)

So how did NUDT do?

Here is what we have learned. The DSP accelerator has a total of 128 cores, each of which can issue 16 double precision instructions per clock; the cores have a 256-bit vector instruction set and take in data in 512-bit chunks. The cores are organized into clusters of four, and eight of these are organized into super nodes, or SNs, on the Matrix2000 chip and four of these supernodes are linked to each other using a high-speed interconnect, called a Fast Interconnect Transport or FIT, that supports direct memory access between the SNs but which does not offer full coherency across those SNs. The bi-directional bandwidth on these FITs is 25.6 GB/sec and the roundtrip latency is on the order of 20 nanoseconds, which is pretty fast. That on-chip interconnect is also used to link out to four DDR4 memory channels and one PCI-Express 3.0 x16 port. So, forget about that HBM memory, it uses DDR4, specifically 2.4 GHz DDR4 memory just like a modern server does. Running at 1.2 GHz, the Matrix2000 DSP accelerator delivers 2.46 teraflops of double precision oomph with a peak power dissipation of 240 watts. So, performance is on target, but it runs a little hotter. We blame the DDR4 memory, and the difficulty and expense of manufacturing HBM memory. But the truth is, memory is driving up the heat at all levels of the system, not just the accelerator, and not just on Tianhe-2A but on all systems.

The rumor, according to Matsuoka, is that the cores used in the Matrix2000 DSP are based on an ARM core, but with 256-bit vector extensions and, in a way, akin to the way a Xeon Phi is a vector-boosted Atom chip. Which begs the question why this was called a DSP in the first place. Possibly, to throw us all off the scent a little. This is the Chinese military, after all. So that future pre-exascale machine that China is building could be ARM CPUs paired to ARM parallel coprocessors. Wouldn’t that be funny?

If they can reverse the dismal Byte/FLOP ratio of Taihu Light and its equally dismal computational efficiency with a modified Tianhe, then they seem to be backpedaling a bit architecturally and considering Taihu Light a dead end.

They threw a bunch of money and electricity at their flagships, but so far they’re only good for Top500 bragging rights. They didn’t really invest in the architectural framework required to get to useful exascale.

If Tianhe 2A does better with HPCG and Graph500, then maybe they’re making progress, but Taihu Light was a big step backwards from Tianhe 2 in both of those areas given its HPL FLOPS.