D.E. Shaw Research, the company founded by quantitative finance pioneer, professor, and entrepreneur, David E. Shaw, has always been shrouded in mystery. The company has a financial services arm, but also makes (among other systems) a special purpose supercomputer designed for the specific needs of molecular dynamics research. Based on a custom ASIC, interconnect, and proprietary software, the machine itself invites extreme curiosity—as does the business model that supports it.

As far as anyone is aware, there is only one Anton machine outside of the company’s New York-based research lab—at the Pittsburgh Supercomputer Center (PSC). Dr. Philip Blood, a senior computer scientist at PSC, worked with the first Anton machine when it was brought to the center and is now overseeing, as principal investigator, the new grant process and deployment of a forthcoming Anton 2 machine. PSC is being given a new system without charge from D.E. Shaw Research, aided with funds from the National Institutes of Health to pony up for the power, cooling, and personnel costs.

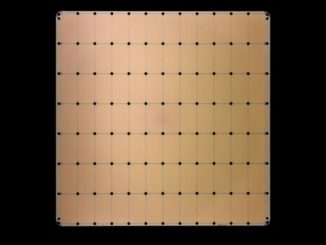

What is interesting is that the first machine, an Anton 1 installed in 2008, sported 512 nodes, but its replacement will have only 128 nodes yet is expected to provide a 4X boost in overall simulation speed while using up to 5X more atoms in simulations. This is an incredible improvement, Blood says, one that is difficult to understand in mere numbers. Practically, it means that the existing 150,000 to 200,000 atom simulations can get a boost to 700,000 or more, thus allowing for far more complex biological systems to be simulated with increasingly fine-grained time scales—moving from simulations in microseconds to milliseconds. For a system designed initially to study protein folding at scale, as Anton was, this opens new avenues for researchers targeting lipids, proteins, and other cellular structures in close detail.

Although one of the most knowledgeable parties on the topic of Anton, at least from an end-user research perspective, even Blood doesn’t know much about the architecture and underlying software and hardware optimizations that have led to a system capable of outperforming traditional commodity hardware by orders of magnitude. In fact, much of what is known can be found in a 2014 Gordon Bell prize-winning paper in which D.E. Shaw researchers described the architecture at the chip, communication, and algorithmic optimization level. At the highest level, “The structure of Anton 2 is tailored for fine-grained, event-driven operation, which improves performance by increasing the computation with communications, thus allowing a wider range of algorithms to run efficiently, enabling many new software-based optimizations.”

The newer Anton 2 system, which has 512 nodes (although PSC’s forthcoming machine is 128) provides an order of magnitude improvement in performance over Anton 1—and, as D.E. Shaw research says, two orders of magnitude improvement over general purpose hardware. Blood, who has gauged the impact of ongoing research says that even though there have been enormous strides in accelerators, particularly GPUs, on common molecular dynamics codes, and these may have closed the gap some, but this machine is the right tool for the workload. Scalability on a commodity system is tough for codes like GROMACS, for instance, and Anton 1 and 2 have solved that challenge along with the requisite performance and even power efficiency numbers.

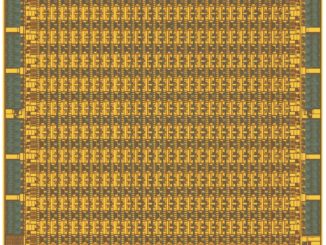

“Like its predecessor, Anton 2 performs the entire molecular dynamics computation within custom ASICS that are tightly interconnected by a specialized high-performance network. Each ASIC devotes a quarter of its die area to specialized hardware pipelines for calculation interactions between pairs of atoms, and also contains 66 general purpose programmable processor cores that deliver data to these pipelines and perform the remaining computations required by the simulation.”

The Bell prize paper, which can be read for greatest detail granted, explores these issues. But from what we are able to discern, the most salient feature, at least on the processor front, is what they describe as a “set of new mechanisms devoted to efficient fine-grained operation. The resulting architecture more aggressively exploits the parallelism of molecular dynamics simulations, which fundamentally consist of a large number of fine-grained computations involving individual atoms or small groups of atoms.” The idea here is that by having the direct hardware support for all of this allows for far greater efficiency of communication and synchronization while getting around many of the scalability challenges presented by standard commodity architectures—including GPUs in this case, which cannot have that level of specific tuning for the code, of course.

It would be far too easy to go off on a detailed tangent about the architectural ins and outs of the Anton machine—it is fascinating. But as it stands, all almost anyone knows—including the people who manage the only publicly available system at PSC—is in that paper and no extensive interview will yield more details than what is there. And besides, the story today is a bit larger than this. Because by fall, the most powerful system—a “computational microscope” as Blood describes it—will be in the hands of international researchers who can will be able to model complex biological processes in far greater detail and ever-increasing time scales , thus leading to new potential understanding, cures, and better models.

The performance advantages of such a system are clear, but in many ways, what is gained in potential is lost in flexibility. Users compile to an interface that can talk to Anton’s internal stack, but the code it is running is abstracted from the researcher. When using open source molecular dynamics code, there are a great many ways to explore ideas differently, but given the fact that the code is locked in the box, so to speak, there is no room there. This is a trifle, Blood says, because the ability to simulate and model at such scale is incredibly value and can inform the 3D structure analysis and understanding for other applications. However, as we think about the potential role for a new wave of custom hardware in other areas (as we’re seeing) this flexibility question will arise again. The rise of FPGAs, custom silicon for deep learning, and other trends seem to intersect, even if supercomputing only has isolated cases in domain-specific areas.

It is difficult to say how long such systems were available inside of D.E. Shaw, but Blood suspects that it wasn’t long before PSC acquired their Anton 1. As he tells The Next Platform, “I’ve been in the same room with D.E. Shaw but never met him, although I did meet his right-hand man, his deputy, Ron Dror, in 2003. We were at a conference in Lyon and he was talking about wanting to build a special purpose molecular dynamics computer. At that time, a number of such system had been designed and some built, but they were not successful. It could not have been that much longer from the time the first one was built to when we acquired ours at PSC.”

The selection of PSC as the site was due in large part to Dr. Joel Stiles, a CMU professor, PSC researcher, and collaborator with D.E. Shaw research who had worked with the company on its initial iterations of the system and advocated to have one placed at PSC. Unfortunately, he passed away just after the project was awarded, but Blood says that was the beginning of their journey to the first Anton—and that investment on both sides—continues because of that early work.

I am not sure that 4x increase in performance is that really shocking that;s probably simple down to the improvement of semiconductor manufacturing in 2008 they probably used a 45nm or more likely a 90nm node even (for affordability). The new one will be probably be at least on 28nm maybe even 16nm.

That’s a 4X improvement with a fourth as many nodes, so a 16X improvement on a per-node basis, way more than the process difference between 90nm & 40nm.

Specialized hardware certainly is making a comeback. I wonder if the Anton architecture could be mapped onto an FPGA? Or is it already…?

Really I wish rhe author had spent

time on that too. I had no idea the Anton even existed, and would’ve enjoyed reading how the math was mapped to the hardware.

For mainstream enterprise and web computing, the key is cloudability: can the accelerators be shared among multiple client workloads of differing types, and can the processing hardware configurations be made to adapt to the unpredictable and varying demands of the different client apps?